Data Preprocessing on the Titanic Dataset

What is Data Pre-Processing?

We know from my last blog that data preprocessing is a data mining technique that transforms raw data into an understandable format. Real-world data is often incomplete, inconsistent, lacking in certain behaviors or trends, and likely to contain many errors. Data preprocessing is a proven way of resolving such issues, and it prepares raw data for further processing.

In this blog we will implement data preprocessing on a real data set. I decided to use the Titanic data set, which I downloaded from Kaggle. Here is the link to get this dataset: https://www.kaggle.com/c/titanic-gettingStarted/data

Note: Kaggle gives 2 datasets, the train and the test dataset, so we will use both of them in this process.

What is the expected outcome?

The Titanic shipwreck was a massive disaster, so we will preprocess this data set to get it ready for studying who survived and what their details were.

I will show you how to apply data preprocessing techniques to the Titanic dataset, with a tinge of my own ideas mixed in.

So let's get started...

Importing all the important libraries

After loading the data sets onto our system, we first import the libraries needed for the work. In my case I imported NumPy, Pandas and Matplotlib.

    # importing libraries
    import numpy as np
    import matplotlib.pyplot as plt
    import pandas as pd

Importing dataset using Pandas

To work on the data, you can load the CSV either in Excel or in pandas. I will load the CSV data in pandas. Then we will also use a few functions to view that data in the Jupyter notebook.

    # importing the dataset using pandas
    df = pd.read_csv(r'C:\Users\KIIT\Desktop\Internity Internship\Day 4 task\train.csv')
    df.shape
    df.head()

    # taking a look at the data format
    df.info()
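
If you want to try the same loading step without a local file path, here is a minimal sketch that reads a few rows from an in-memory string instead. The rows and the names `sample_csv` and `df_sample` are made up for illustration; only the column names mirror a subset of the Kaggle file.

```python
import io

import pandas as pd

# Hypothetical sample rows; the values are invented for illustration only.
sample_csv = """PassengerId,Survived,Pclass,Sex,Age,Fare,Embarked
1,0,3,male,22,7.25,S
2,1,1,female,38,71.28,C
3,1,3,female,,7.92,S
4,0,2,male,35,13.0,
"""

# read_csv accepts any file-like object, so a StringIO buffer works like a path.
df_sample = pd.read_csv(io.StringIO(sample_csv))

print(df_sample.shape)   # (number of rows, number of columns)
print(df_sample.head())  # first rows of the frame
df_sample.info()         # dtypes and non-null counts per column
```

Empty fields (the missing age in row 3 and the missing port in row 4) are read as NaN, which is exactly what `info()` reports as a lower non-null count.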

Let's take a look at the output that we get from the above code snippets:

If you look carefully at the pandas summary, there are 891 rows in total, but Age has only 714 non-null values (177 are missing), Embarked has 2 missing values, and Cabin is missing a lot as well. Columns with the object data type are non-numeric, so we have to find a way to encode them as numerical values.

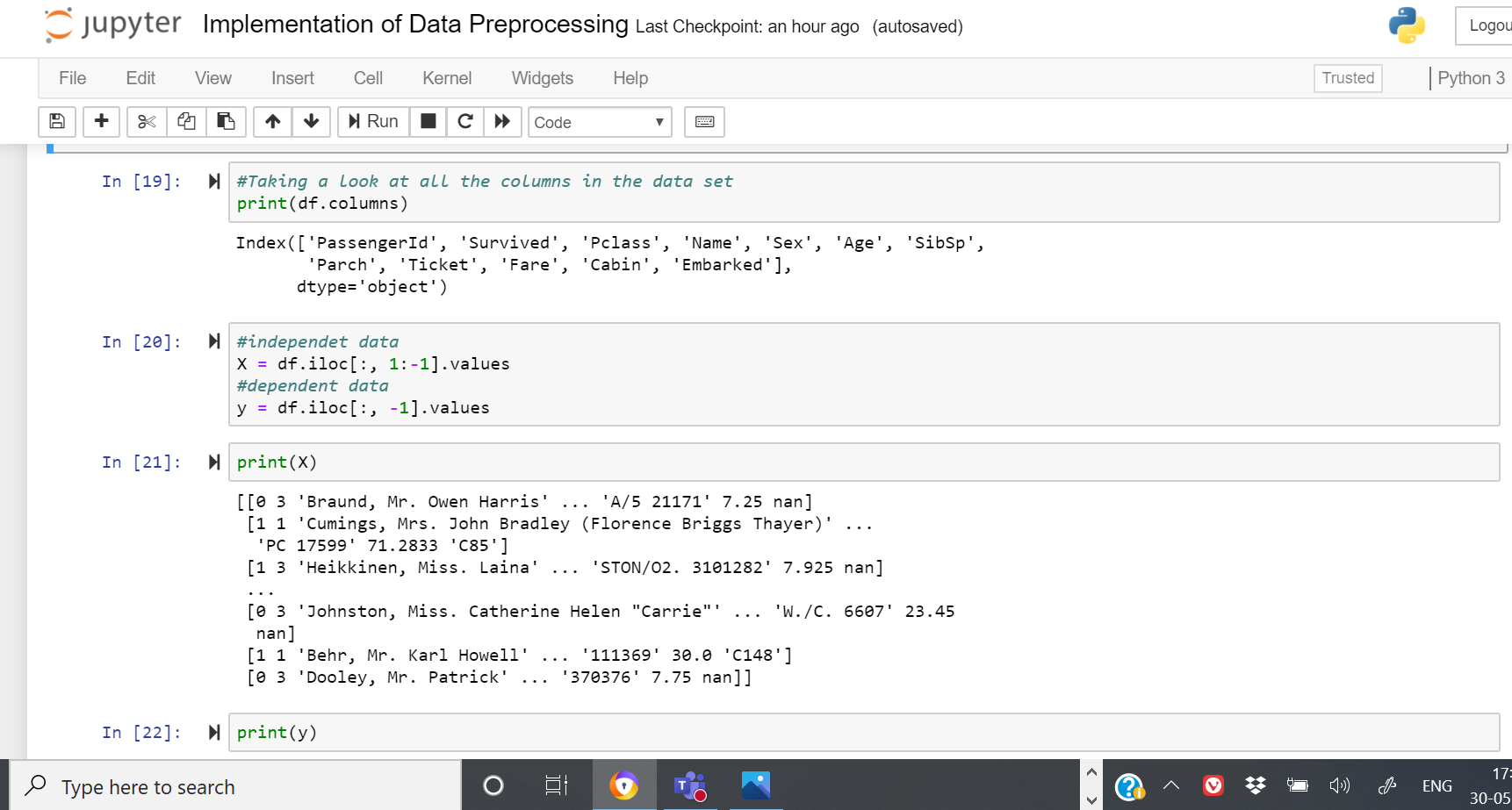
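
The gaps that `info()` hints at can also be counted directly. The snippet below is a small sketch on a hypothetical toy frame (`toy` and its values are assumptions, not the real data) that counts missing values per column with `isna().sum()`.

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame standing in for the Titanic data.
toy = pd.DataFrame({
    "Age": [22.0, np.nan, 26.0, np.nan],
    "Cabin": [None, "C85", None, None],
    "Embarked": ["S", "C", None, "S"],
    "Fare": [7.25, 71.28, 7.92, 8.05],
})

# isna() marks every missing cell; summing the booleans counts them per column.
missing_counts = toy.isna().sum()
# Share of missing cells per column, between 0 and 1.
missing_share = toy.isna().mean()

print(missing_counts)
print(missing_share)
```

Running the same two lines on `df` gives the per-column counts for the real file.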

Viewing the columns in the particular dataset

We print all the columns in this dataset so that we have a clear reference for the kind of data we are working on.

    # taking a look at all the columns in the data set
    print(df.columns)

Defining values for independent and dependent data

Here we declare X and y for our independent and dependent data. Note that these slices are positional: in Kaggle's train.csv the last column is Embarked, while Survived is the second column, so X and y are assigned again from the cleaned data frame later on.

    # independent data
    X = df.iloc[:, 1:-1].values
    # dependent data
    y = df.iloc[:, -1].values

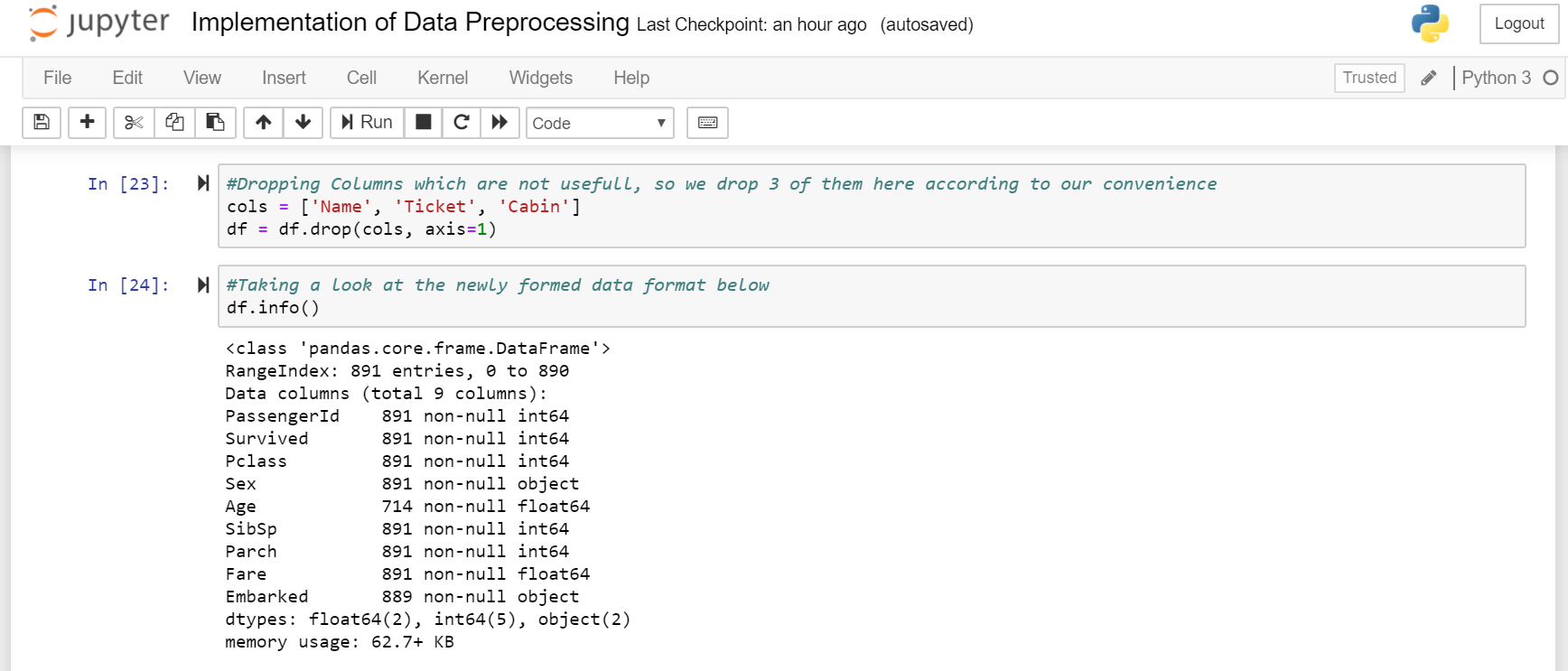
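
Since positional slices depend on column order, selecting by column name can be less error-prone. Here is a minimal sketch on a hypothetical toy frame; dropping `PassengerId` together with the target is my own assumption for the example, not a step from the walkthrough.

```python
import pandas as pd

# Hypothetical toy frame with a few of the Titanic column names.
toy = pd.DataFrame({
    "PassengerId": [1, 2, 3],
    "Survived": [0, 1, 1],
    "Pclass": [3, 1, 3],
    "Embarked": ["S", "C", "S"],
})

# Dependent data: picked by name, so it is the target wherever the column sits.
y_toy = toy["Survived"].to_numpy()

# Independent data: everything except the identifier and the target.
X_toy = toy.drop(columns=["PassengerId", "Survived"]).to_numpy()

print(X_toy.shape, y_toy.shape)
```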

Dropping Columns which are not useful

Let's drop some of the columns which may not contribute much to our machine learning model, such as Name, Ticket and Cabin.

So we will drop 3 columns and then take a look at the newly generated data.

    # dropping columns that are not useful; we drop 3 of them here
    cols = ['Name', 'Ticket', 'Cabin']
    df = df.drop(cols, axis=1)

    # taking a look at the newly formed data format
    df.info()

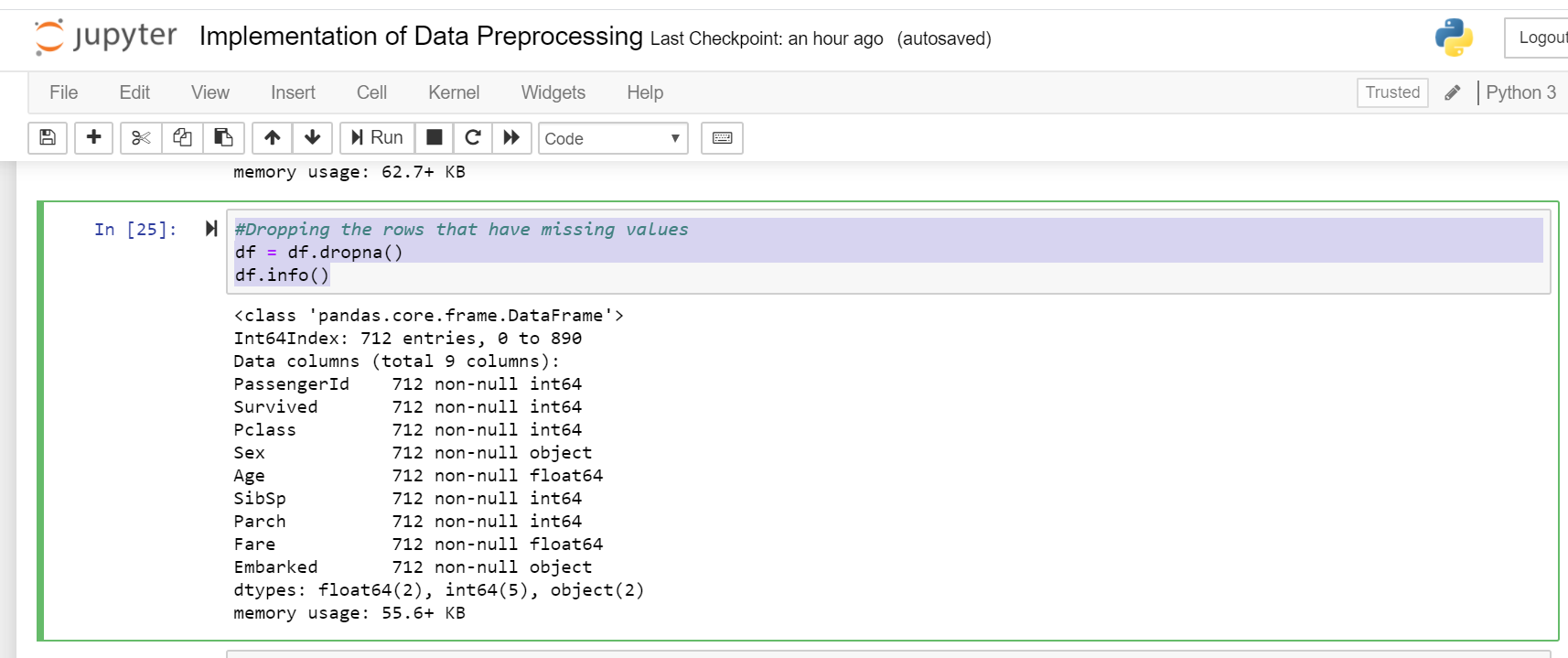

Dropping rows having missing values

Next, if we want, we can drop all rows in the data that have missing values (NaN). You can do it as the code shows:

    # dropping the rows that have missing values
    df = df.dropna()
    df.info()

Problem with dropping rows having missing values

After dropping rows with missing values, we find that the dataset is reduced from 891 rows to 712, which means we are wasting data. Machine learning models need data for training to perform well, so we should preserve the data and make use of as much of it as we can. We will see how to fill in the missing values later.

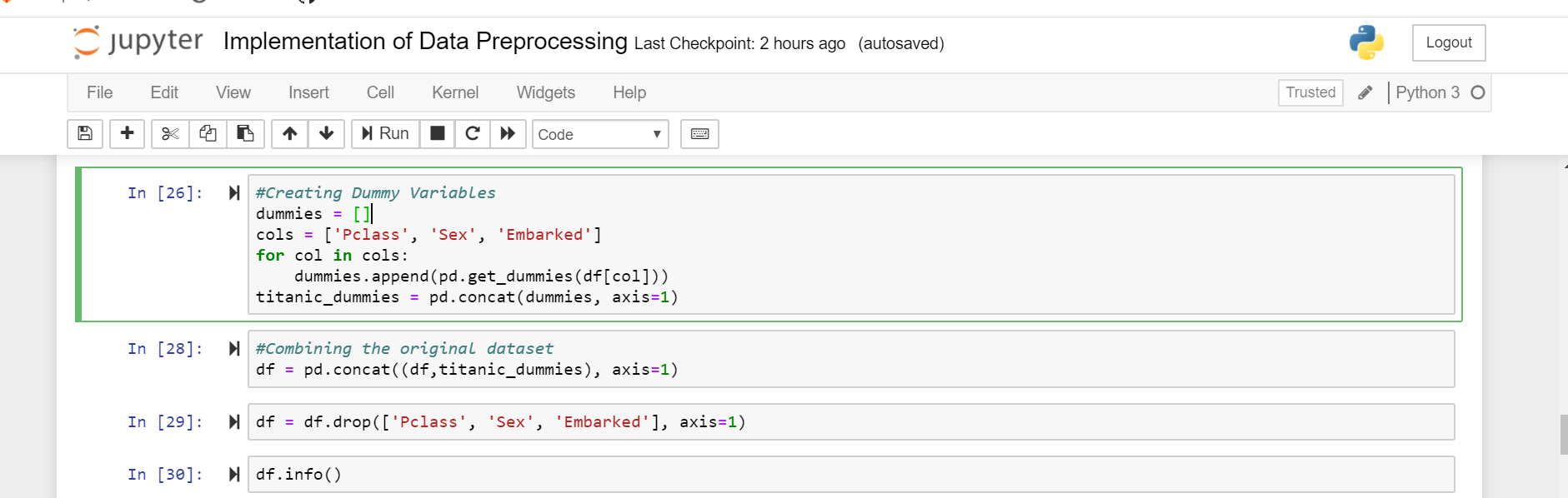
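
To see how much data each option costs before committing to it, you can compare row counts. The sketch below uses a hypothetical toy frame; `dropna(subset=...)` is a standard pandas option that drops a row only when the listed columns are missing.

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame: two missing ages and one missing port.
toy = pd.DataFrame({
    "Age": [22.0, np.nan, 26.0, 35.0, np.nan],
    "Fare": [7.25, 71.28, 7.92, 53.1, 8.05],
    "Embarked": ["S", "C", None, "S", "S"],
})

rows_before = len(toy)
# Drops a row if ANY column is missing.
rows_after_dropna = len(toy.dropna())
# Drops a row only if Embarked is missing; rows with a missing Age survive.
rows_after_subset = len(toy.dropna(subset=["Embarked"]))

print(rows_before, rows_after_dropna, rows_after_subset)
```

Here a plain `dropna()` keeps 2 of 5 rows, while restricting it to `Embarked` keeps 4, leaving the ages to be filled in rather than thrown away.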

Creating Dummy Variables

Now we convert Pclass, Sex and Embarked into dummy (one-hot) columns in pandas and drop the original columns after conversion.

    # creating dummy variables
    dummies = []
    cols = ['Pclass', 'Sex', 'Embarked']
    for col in cols:
        dummies.append(pd.get_dummies(df[col]))
    titanic_dummies = pd.concat(dummies, axis=1)

Looking at the result, we now have 8 new columns: 1, 2 and 3 represent the passenger class, female and male the sex, and C, Q and S the port of embarkation.

Finally, we concatenate them to the original data frame column-wise.

    # combining with the original dataset
    df = pd.concat((df, titanic_dummies), axis=1)

Now that we have converted the Pclass, Sex and Embarked values into columns, we drop the redundant original columns from the data frame and take a look at the new data set.

    df = df.drop(['Pclass', 'Sex', 'Embarked'], axis=1)

    df.info()

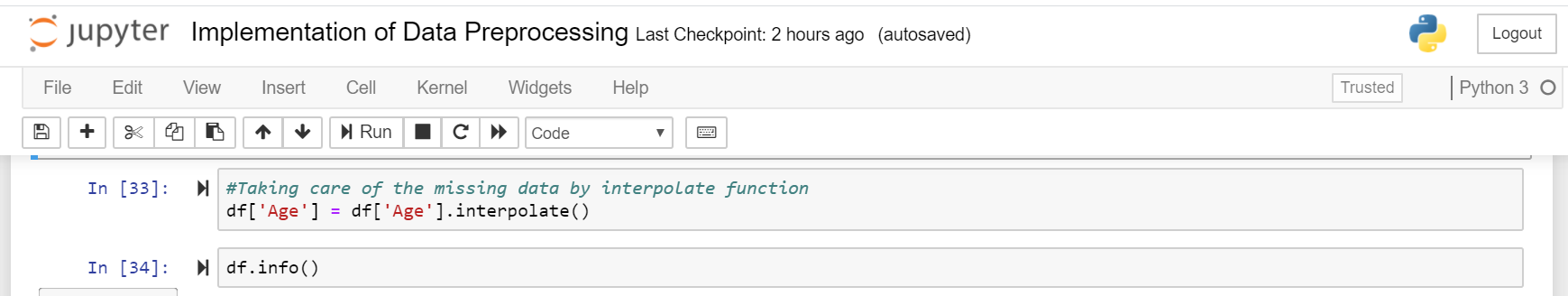
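
The loop, concat and drop above can also be written as a single `get_dummies` call on the whole frame. The sketch below runs on a hypothetical toy frame; `columns=` and `dtype=` are real `pd.get_dummies` parameters, and the prefixed names (`Pclass_1`, `Sex_male`, ...) are what pandas generates by default in this mode, so they differ from the bare `1`, `2`, `3` names used in this post.

```python
import pandas as pd

# Hypothetical toy frame with the three categorical columns plus one numeric one.
toy = pd.DataFrame({
    "Pclass": [1, 3, 2, 3],
    "Sex": ["male", "female", "female", "male"],
    "Embarked": ["S", "C", "Q", "S"],
    "Fare": [71.28, 7.25, 13.0, 8.05],
})

# Encodes the listed columns, removes the originals, and keeps the rest untouched.
# dtype=int yields 0/1 columns instead of booleans.
encoded = pd.get_dummies(toy, columns=["Pclass", "Sex", "Embarked"], dtype=int)

print(encoded.columns.tolist())
```

The prefixes make it easier to tell later which original column each dummy came from.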

Taking Care of Missing Data

All is good except Age, which has lots of missing values. Let's fill those missing ages, either with the median or with interpolate(). Pandas has an interpolate() function that replaces all the missing NaNs with interpolated values. This assumes we kept the rows with missing values instead of dropping them as in the earlier step.

    # taking care of the missing data with the interpolate function
    df['Age'] = df['Age'].interpolate()

    df.info()

Now let's observe the data columns. Notice that Age no longer has gaps: the missing entries now hold interpolated values.

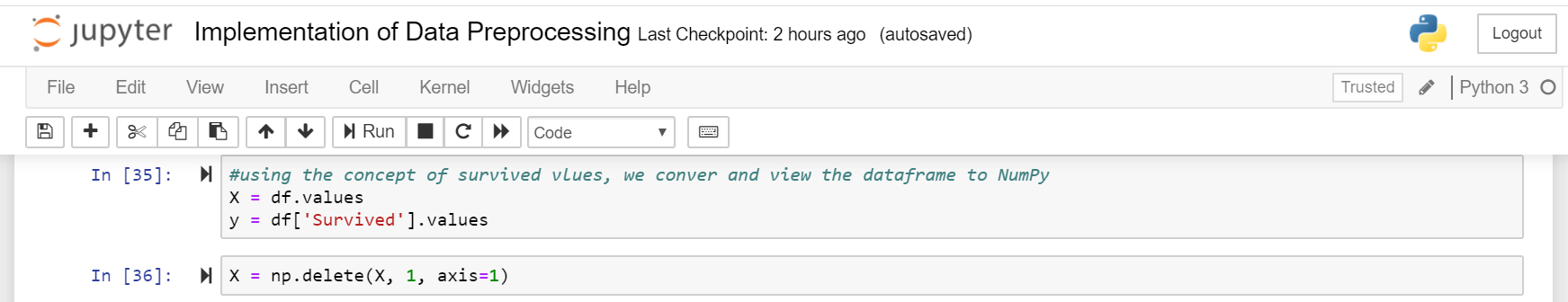
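
To make the difference between the two options concrete, here is a small sketch on a hypothetical series of ages (the values are invented). It fills the same gaps once with `interpolate()` and once with the median.

```python
import numpy as np
import pandas as pd

# Hypothetical ages with two gaps.
ages = pd.Series([20.0, np.nan, 40.0, np.nan, 30.0], name="Age")

# Linear interpolation: each gap becomes the midpoint of its neighbouring rows.
by_interpolation = ages.interpolate()

# Median imputation: every gap gets the median of the known ages.
by_median = ages.fillna(ages.median())

print(by_interpolation.tolist())
print(by_median.tolist())
```

Interpolation depends on the order of the rows, which carries no particular meaning for a passenger list, whereas the median does not; which one suits your model better is a judgment call.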

Converting the data frame to NumPy

Now that we have converted all the data to numeric, it's time to prepare the data for machine learning models. This is where scikit-learn and NumPy come into play:

X = input set, built from the 14-column data frame with 'Survived' removed
y = output (lowercase y), in this case 'Survived'

Now we convert our data frame from pandas to NumPy and assign the input and output.

    # convert the data frame to NumPy; 'Survived' holds the output values
    X = df.values
    y = df['Survived'].values

    # delete column 1 ('Survived') from X so the target is not among the inputs
    X = np.delete(X, 1, axis=1)

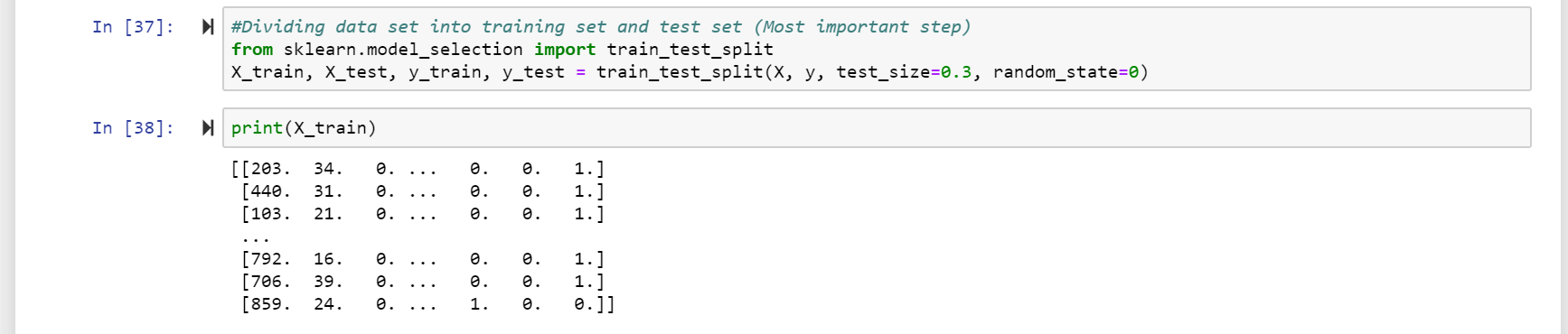

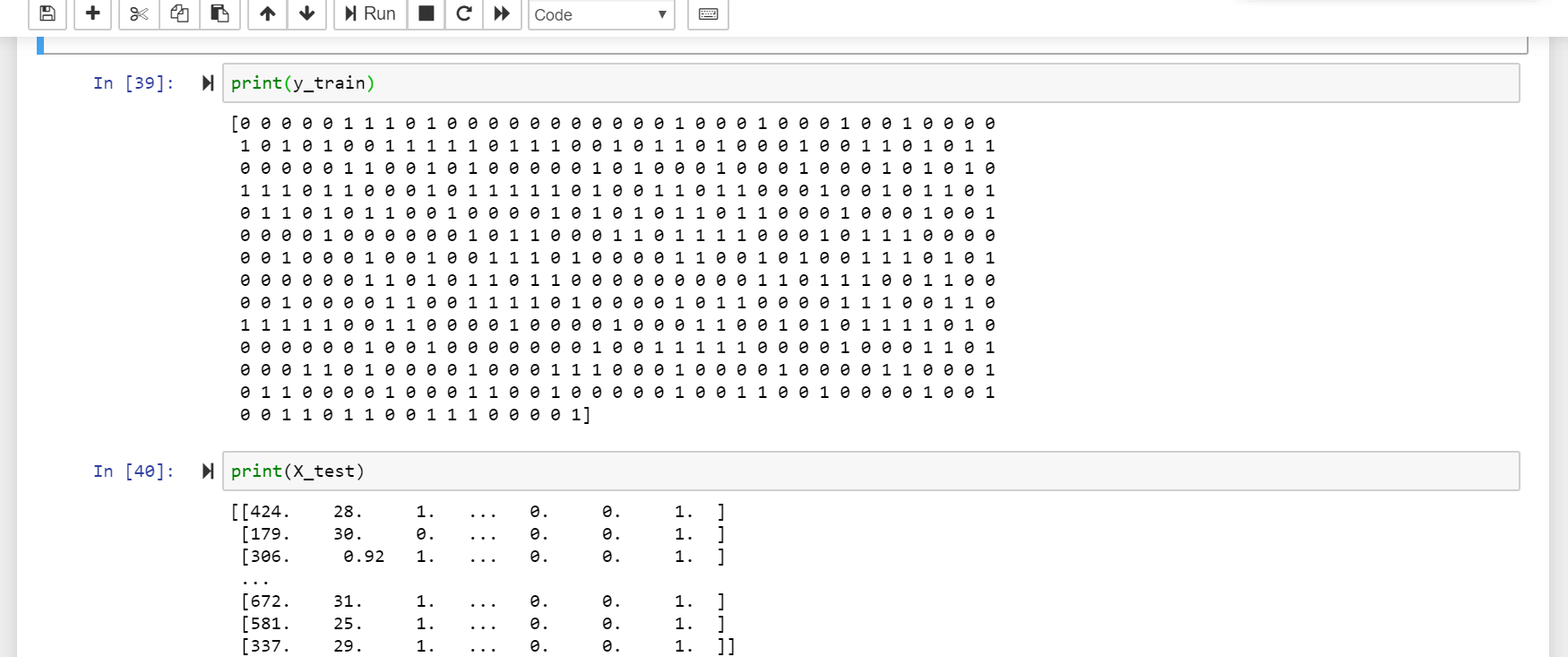

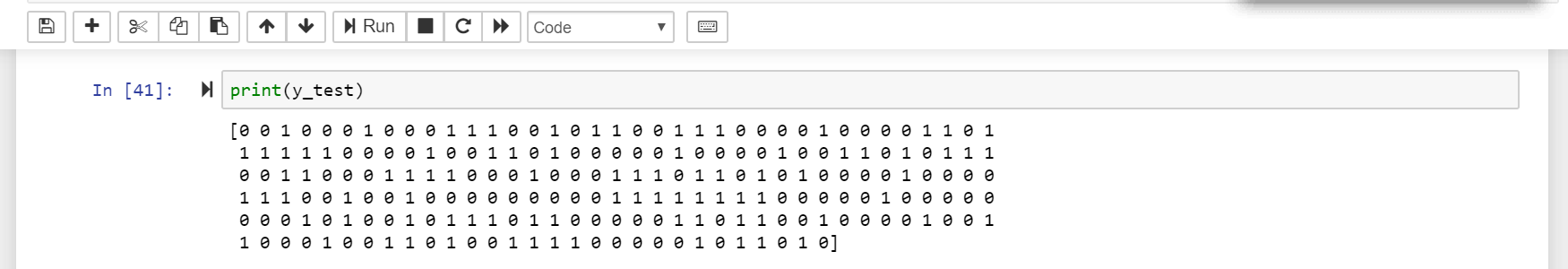

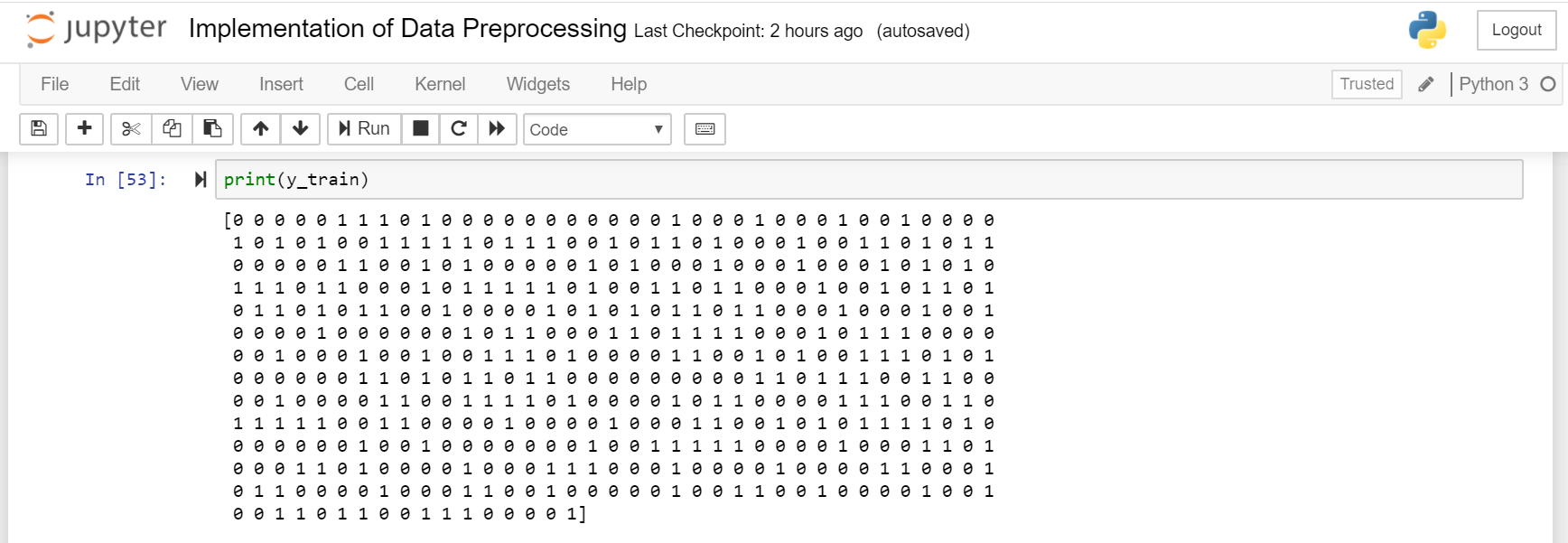

Dividing data set into training set and test set

Now that X and y are ready, let's split the dataset into a 70% training set and a 30% test set using scikit-learn's model_selection, as in the code below; four print calls then show the resulting arrays.

    # dividing the data set into training set and test set (most important step)
    from sklearn.model_selection import train_test_split
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

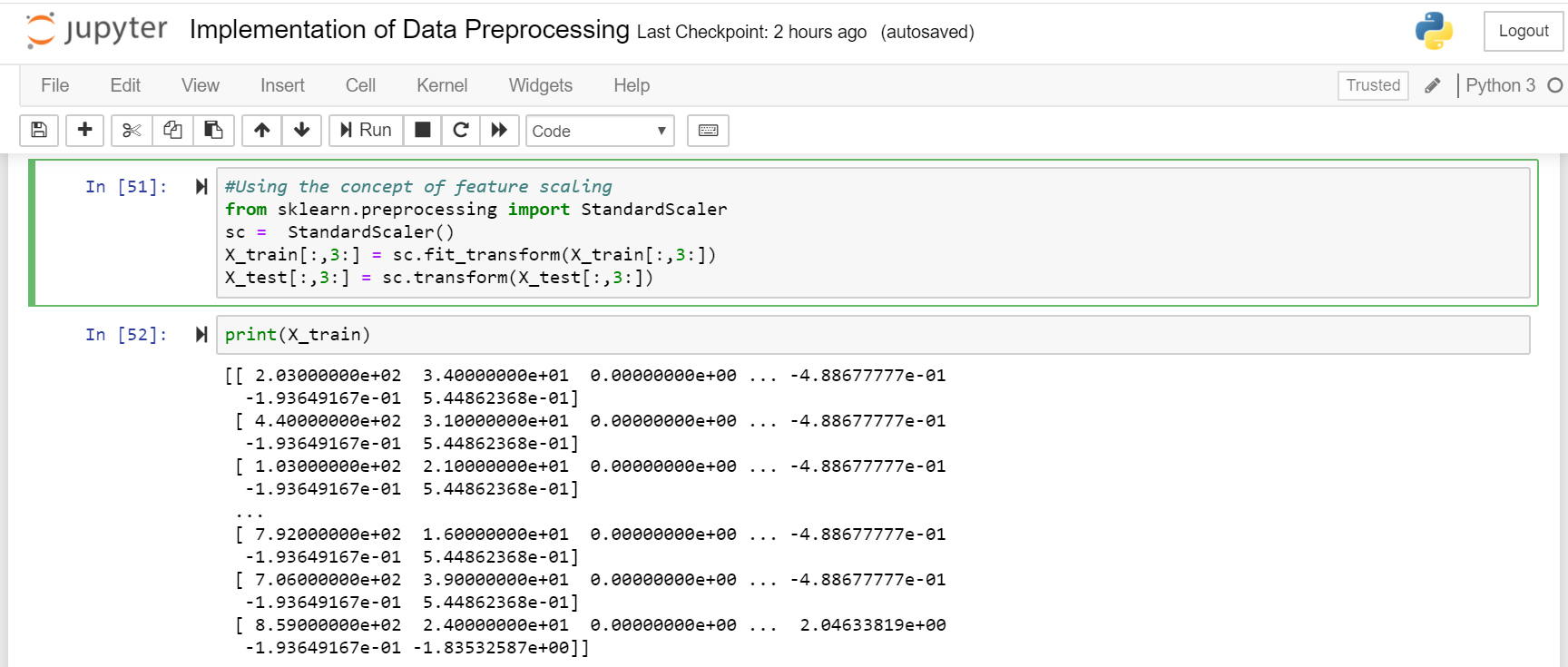
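
If you want to check what the split does without the Titanic arrays, the following sketch runs `train_test_split` on hypothetical toy arrays (`X_demo` and `y_demo` are made up) and prints the four resulting shapes.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical toy data: 10 samples with 2 features each, and a binary target.
X_demo = np.arange(20).reshape(10, 2)
y_demo = np.array([0, 1] * 5)

# test_size=0.3 reserves 30% of the rows for testing;
# random_state fixes the shuffle so the split is reproducible.
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.3, random_state=0
)

print(X_tr.shape, X_te.shape, y_tr.shape, y_te.shape)
```

With 10 samples this gives 7 training rows and 3 test rows, and every original row ends up in exactly one of the two sets.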

Feature Scaling

Feature scaling is an important step of data preprocessing. It brings all features onto the same scale; after standardization, most values typically lie between -3 and +3.

In our data set some fields have small values and some have large values. If we apply our machine learning model without feature scaling, its predictions can come at a high cost, because features with small values are dominated by features with large values. So before applying a model we have to perform feature scaling.

We can perform feature scaling in two ways.

I. Standardization: x = (x - mean(X)) / standard deviation(X)

II. Normalization: x = (x - min(X)) / (max(X) - min(X))
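
Both formulas can be checked by hand with NumPy. The sketch below applies them to a hypothetical array of fares (the values are invented); note that `ndarray.std()` uses the population standard deviation by default.

```python
import numpy as np

# Hypothetical feature values.
fares = np.array([10.0, 20.0, 30.0, 40.0, 50.0])

# I. Standardization: subtract the mean, divide by the standard deviation.
standardized = (fares - fares.mean()) / fares.std()

# II. Normalization (min-max): rescale so the smallest value is 0 and the largest is 1.
normalized = (fares - fares.min()) / (fares.max() - fares.min())

print(standardized)
print(normalized)
```

The standardized values have mean 0 and standard deviation 1, while the normalized values run from 0 to 1.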

    # using the concept of feature scaling
    from sklearn.preprocessing import StandardScaler
    sc = StandardScaler()
    X_train[:, 3:] = sc.fit_transform(X_train[:, 3:])
    X_test[:, 3:] = sc.transform(X_test[:, 3:])
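
The code above calls `fit_transform` on the training set but only `transform` on the test set. The sketch below, on hypothetical toy arrays, illustrates what that means: the scaler learns its mean and standard deviation from the training rows and reuses them for the test rows.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: two numeric features (think Age and Fare).
train = np.array([[22.0, 7.25], [38.0, 71.28], [26.0, 7.92], [35.0, 53.10]])
test = np.array([[30.0, 8.05]])

scaler = StandardScaler()
train_scaled = scaler.fit_transform(train)  # learns mean and std from the training rows
test_scaled = scaler.transform(test)        # reuses the training statistics

# The same result computed by hand from the training statistics.
manual_test = (test - train.mean(axis=0)) / train.std(axis=0)

print(train_scaled.mean(axis=0), train_scaled.std(axis=0))
print(test_scaled, manual_test)
```

Fitting on the training set alone keeps information from the test rows out of the preprocessing step.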

That's all for today, guys!

This is the final outcome of the whole process. For more such blogs, stay tuned!

Translated from: https://medium.com/all-about-machine-learning/understanding-data-preprocessing-taking-the-titanic-dataset-ebb78de162e0
