Introduction to Prompting

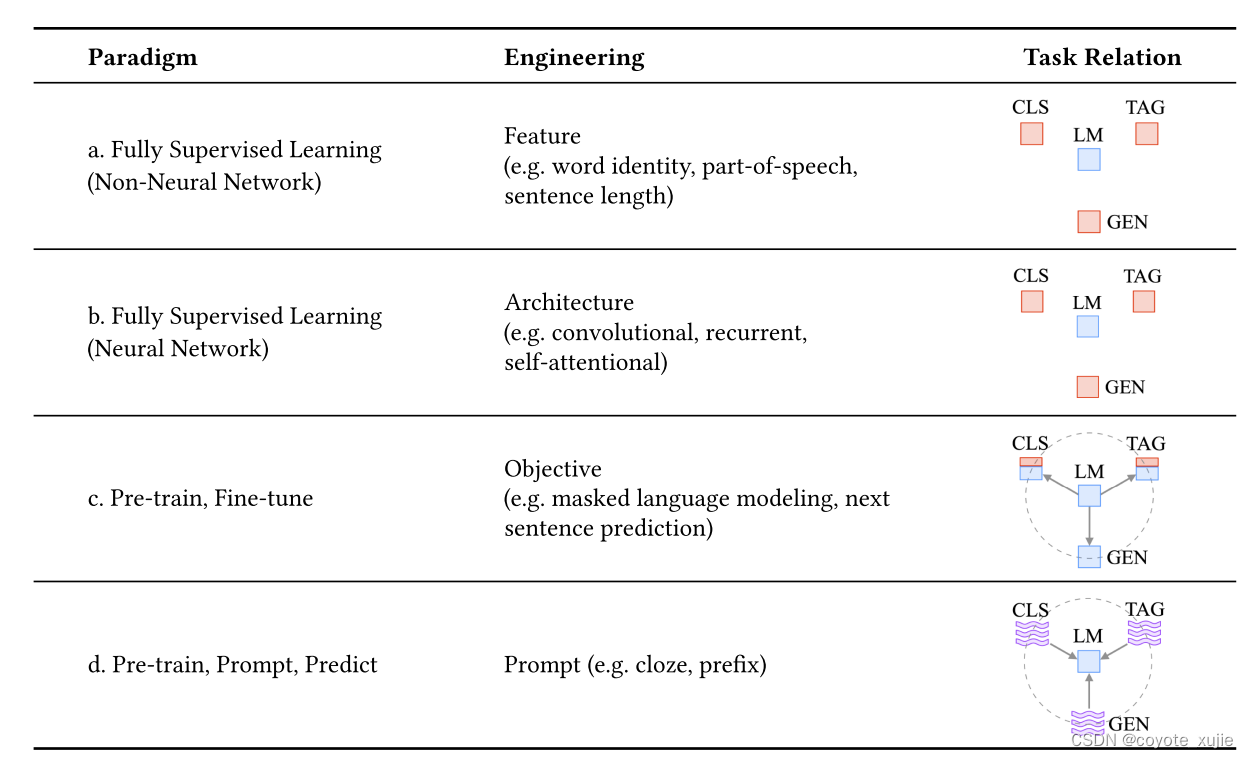

Fine-Tuning to Prompt Learning

Pre-train, Fine-tune

- BERT

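- bidirectional transformer; extracts word-level and sentence-level features; focused on text understanding

- Pre-train: Masked Language Model + Next Sentence Prediction

- Fine-tune: select the representation the task needs (the last-layer hidden-state output) and feed it into a linear layer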
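The masked-language-model objective can be sketched as a corruption step applied to the input. This is a minimal illustration, not BERT's actual preprocessing code: it follows the 15% target rate and the 80/10/10 replacement scheme described in the BERT paper, but samples each position independently. `mask_tokens`, `vocab`, and the whitespace tokens are hypothetical stand-ins, and Next Sentence Prediction is not shown.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Corrupt a token sequence for masked-LM training (simplified sketch).

    Each position is chosen as a prediction target with probability
    mask_prob. A chosen token is replaced by [MASK] 80% of the time,
    by a random vocabulary token 10% of the time, and kept 10% of the time.
    Returns (inputs, labels); labels[i] is None where no prediction is made.
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not a target: no loss at this position
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)
        # else: leave the original token in place
    return inputs, labels

inputs, labels = mask_tokens("the cat sat on the mat".split(), vocab=["dog", "ran"], seed=0)
print(inputs, labels)
```

Keeping some targets unchanged or randomly replaced means the model cannot rely on seeing `[MASK]` at every position it is scored on, which matters because `[MASK]` never appears at fine-tuning time.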

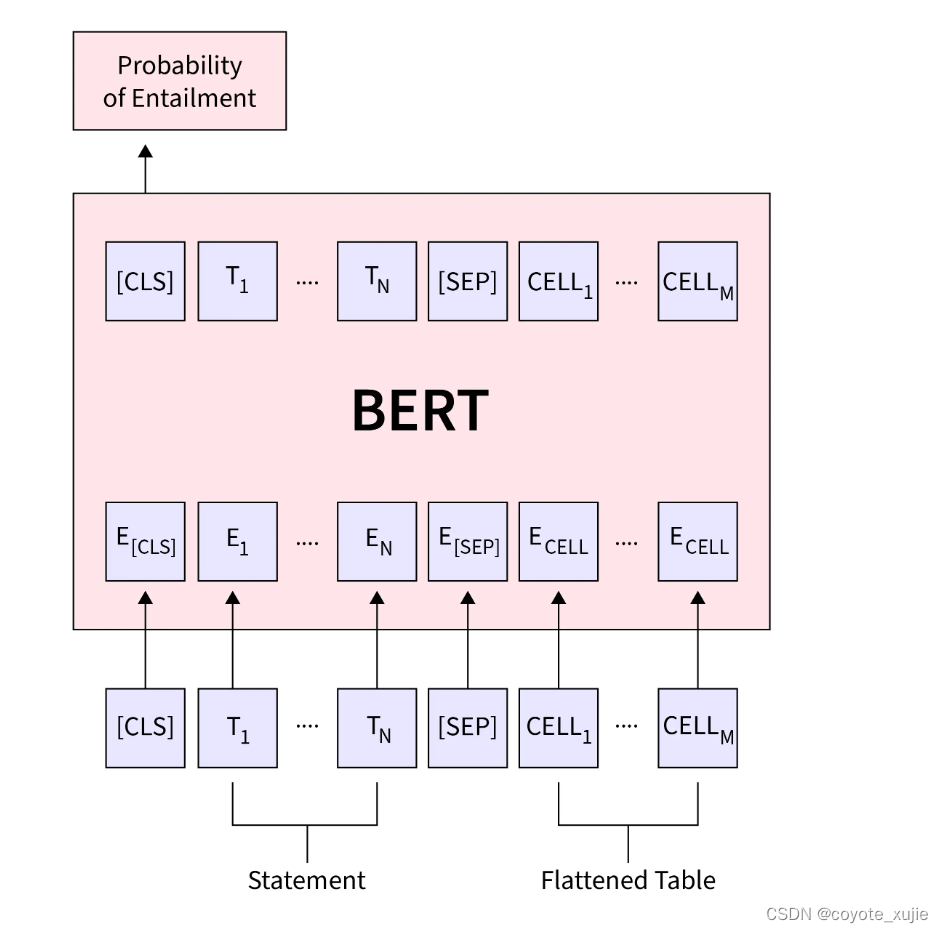

Example: Natural Language Inference
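For a sentence-pair task such as NLI, the fine-tuning recipe amounts to packing both sentences into one input and putting a linear classifier on the final-layer [CLS] vector. In this sketch the encoder is omitted: `cls_vector` stands in for BERT's last-layer [CLS] hidden state, whitespace splitting stands in for the real tokenizer, and the label set, weights, and helper names are hypothetical.

```python
import math

LABELS = ["entailment", "neutral", "contradiction"]  # hypothetical 3-way NLI label set

def build_pair_input(premise, hypothesis):
    """BERT-style sentence-pair packing: [CLS] premise [SEP] hypothesis [SEP]."""
    return ["[CLS]"] + premise.split() + ["[SEP]"] + hypothesis.split() + ["[SEP]"]

def linear_head(h, W, b):
    # logits[c] = sum_j W[c][j] * h[j] + b[c]
    return [sum(w * x for w, x in zip(row, h)) + bias for row, bias in zip(W, b)]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(cls_vector, W, b):
    """cls_vector stands in for the encoder's last-layer [CLS] hidden state."""
    probs = softmax(linear_head(cls_vector, W, b))
    return LABELS[probs.index(max(probs))], probs

print(build_pair_input("A man is sleeping .", "A person is resting ."))
# Toy 2-dimensional "hidden state" and hand-set weights, for illustration only
label, probs = classify([2.0, 0.5], W=[[1, 0], [0, 1], [-1, -1]], b=[0, 0, 0])
print(label)  # entailment
```

The only new parameters are `W` and `b`; this task-specific head is what the pre-train/fine-tune paradigm adds on top of the pre-trained model.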

Pre-train, Fine-tune: models

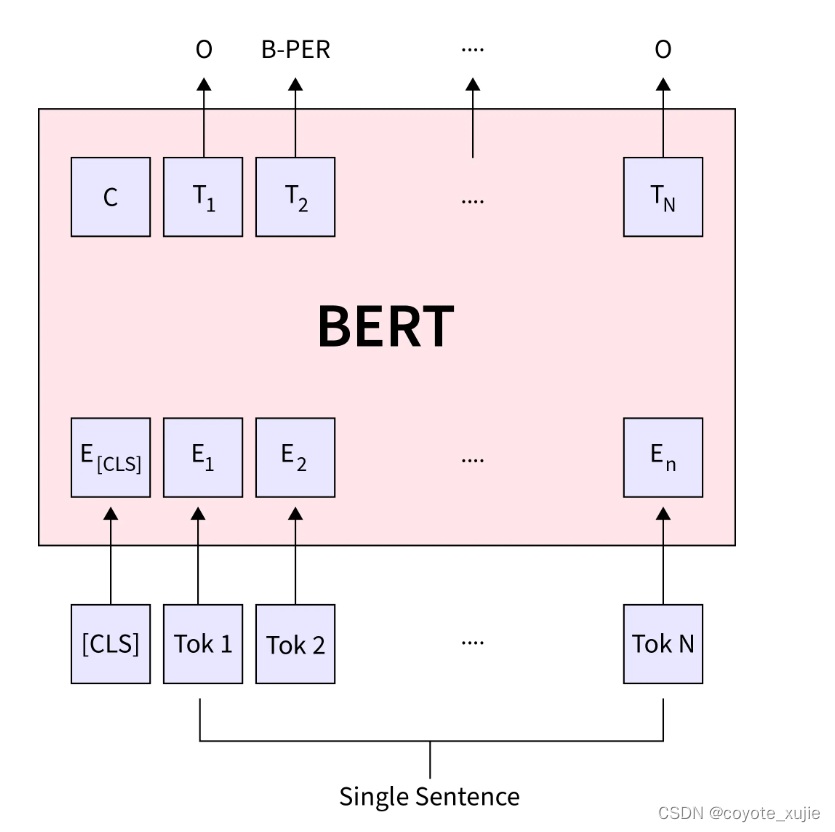

Example: Named Entity Recognition
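For NER the same recipe is applied per token: each token's last-layer hidden state goes through one shared linear layer that scores every tag. The sketch assumes a hypothetical BIO tag set and takes the hidden states as given; a real BERT tagger would also need to align WordPiece sub-tokens with words, which is omitted here.

```python
TAGS = ["O", "B-PER", "I-PER", "B-LOC", "I-LOC"]  # hypothetical BIO tag set

def tag_tokens(hidden_states, W, b):
    """Assign one tag per token with a shared linear layer (sketch).

    hidden_states: one final-layer vector per token (encoder omitted).
    W: len(TAGS) rows, each of length hidden_size; b: one bias per tag.
    """
    tags = []
    for h in hidden_states:
        logits = [sum(w * x for w, x in zip(row, h)) + bias for row, bias in zip(W, b)]
        # argmax over logits (applying softmax first would not change the winner)
        best = max(range(len(logits)), key=lambda c: logits[c])
        tags.append(TAGS[best])
    return tags

W = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]  # toy weights
print(tag_tokens([[1.0, 0.0], [0.0, 2.0]], W, [0.0] * 5))  # ['O', 'B-PER']
```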

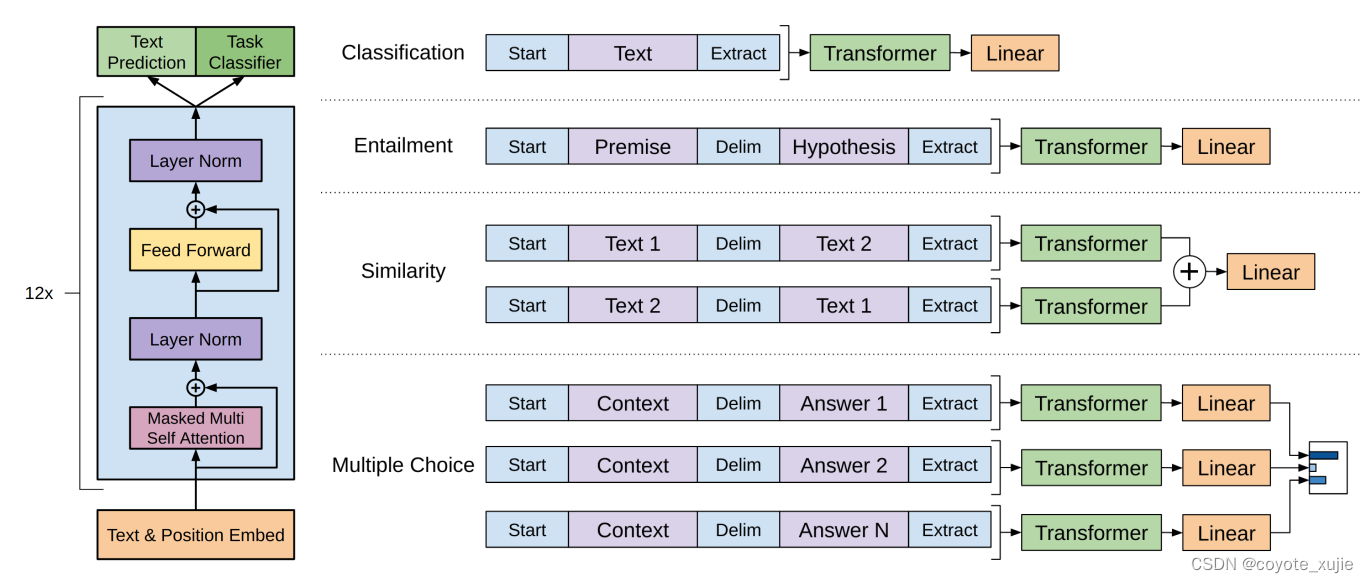

- GPT

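- auto-regressive model: predicts the next token from the preceding text; focused on text generation

- Pre-train: maximize the language-modeling likelihood $L_1(\mathcal{U})=\sum_i \log P(u_i \mid u_{i-k}, \ldots, u_{i-1}; \Theta)$, where $\mathcal{U}=\{u_1, \ldots, u_n\}$ is the unlabeled token corpus, $k$ is the context window size, and $\Theta$ denotes the model parameters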

- Fine-tune: task-specific input transformations + fully-connected layer
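The pre-training objective L_1 can be evaluated directly as a sum of log-probabilities, each conditioned on at most k preceding tokens. In this sketch the Transformer is replaced by an arbitrary callable `prob(token, context)`, a hypothetical stand-in for P(· | ·; Θ); training would adjust Θ to maximize this value (equivalently, minimize the negative log-likelihood).

```python
import math

def lm_log_likelihood(tokens, prob, k):
    """Compute L_1(U) = sum_i log P(u_i | u_{i-k}, ..., u_{i-1}; Theta).

    tokens: the corpus U as a list of tokens.
    prob:   callable prob(token, context) -> probability, standing in for the model.
    k:      context window size; early positions simply see a shorter context.
    """
    total = 0.0
    for i, tok in enumerate(tokens):
        context = tuple(tokens[max(0, i - k):i])  # at most k preceding tokens
        total += math.log(prob(tok, context))
    return total

# Toy "model": uniform over a 4-word vocabulary, ignoring the context
def uniform(tok, context):
    return 0.25

print(lm_log_likelihood(["a", "b", "c", "d"], uniform, k=2))  # 4 * log(0.25), about -5.545
```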

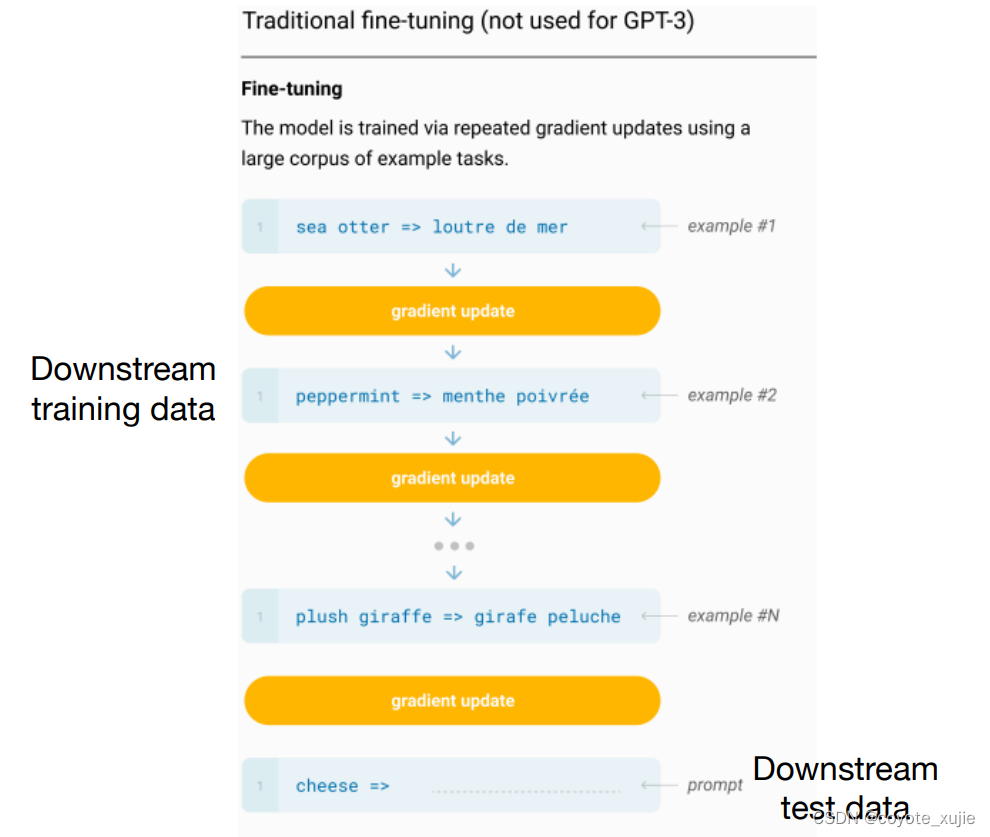

Pre-train, Fine-tune: challenges

- gap between pre-train and fine-tune

Poor few-shot learning ability; prone to overfitting

- cost of fine-tune

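Pre-trained models keep growing in parameter count. Fine-tuning a separate copy of the model for each specific task and then deploying each copy to production wastes a great deal of deployment resources.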
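A back-of-the-envelope calculation makes this cost concrete. The numbers are illustrative assumptions: about 110M parameters (roughly the size of BERT-base), 4 bytes per parameter (fp32), and 10 tasks. The "shared" case assumes one frozen model serves every task through different prompts, which is one way prompting can be deployed, not a property of every prompt-based method.

```python
def weights_gb(num_params, bytes_per_param=4):
    """Storage for one copy of the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

def finetune_per_task_gb(num_params, num_tasks, bytes_per_param=4):
    # Fine-tuning: every task keeps its own full copy of the model
    return num_tasks * weights_gb(num_params, bytes_per_param)

def shared_backbone_gb(num_params, num_tasks, extra_params_per_task=0, bytes_per_param=4):
    # Prompting with a frozen model: one copy shared by all tasks, plus any
    # small per-task parameters (0 for purely hand-written prompts)
    return weights_gb(num_params + num_tasks * extra_params_per_task, bytes_per_param)

N_PARAMS = 110_000_000  # assumed model size
N_TASKS = 10            # assumed number of downstream tasks
print(f"fine-tune per task: {finetune_per_task_gb(N_PARAMS, N_TASKS):.2f} GB")  # 4.40 GB
print(f"shared model:       {shared_backbone_gb(N_PARAMS, N_TASKS):.2f} GB")    # 0.44 GB
```

Per-task storage grows linearly with the number of tasks under fine-tuning, while the shared model's footprint stays essentially constant.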

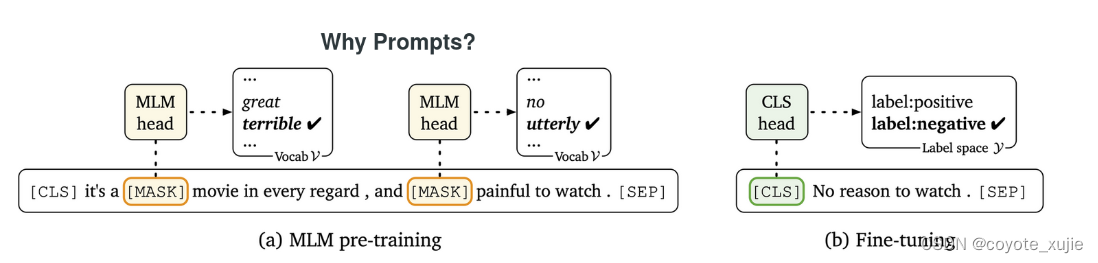

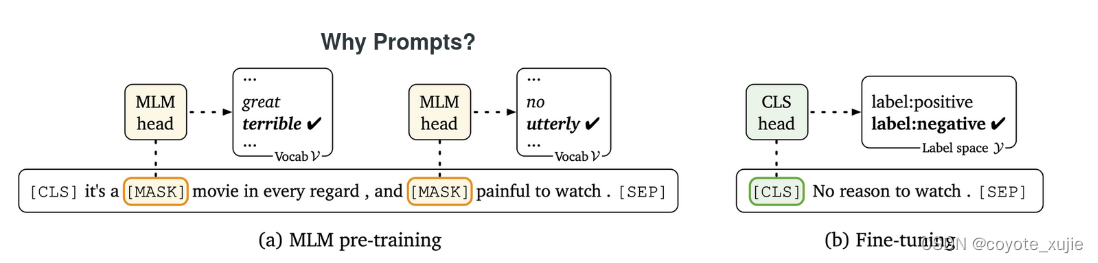

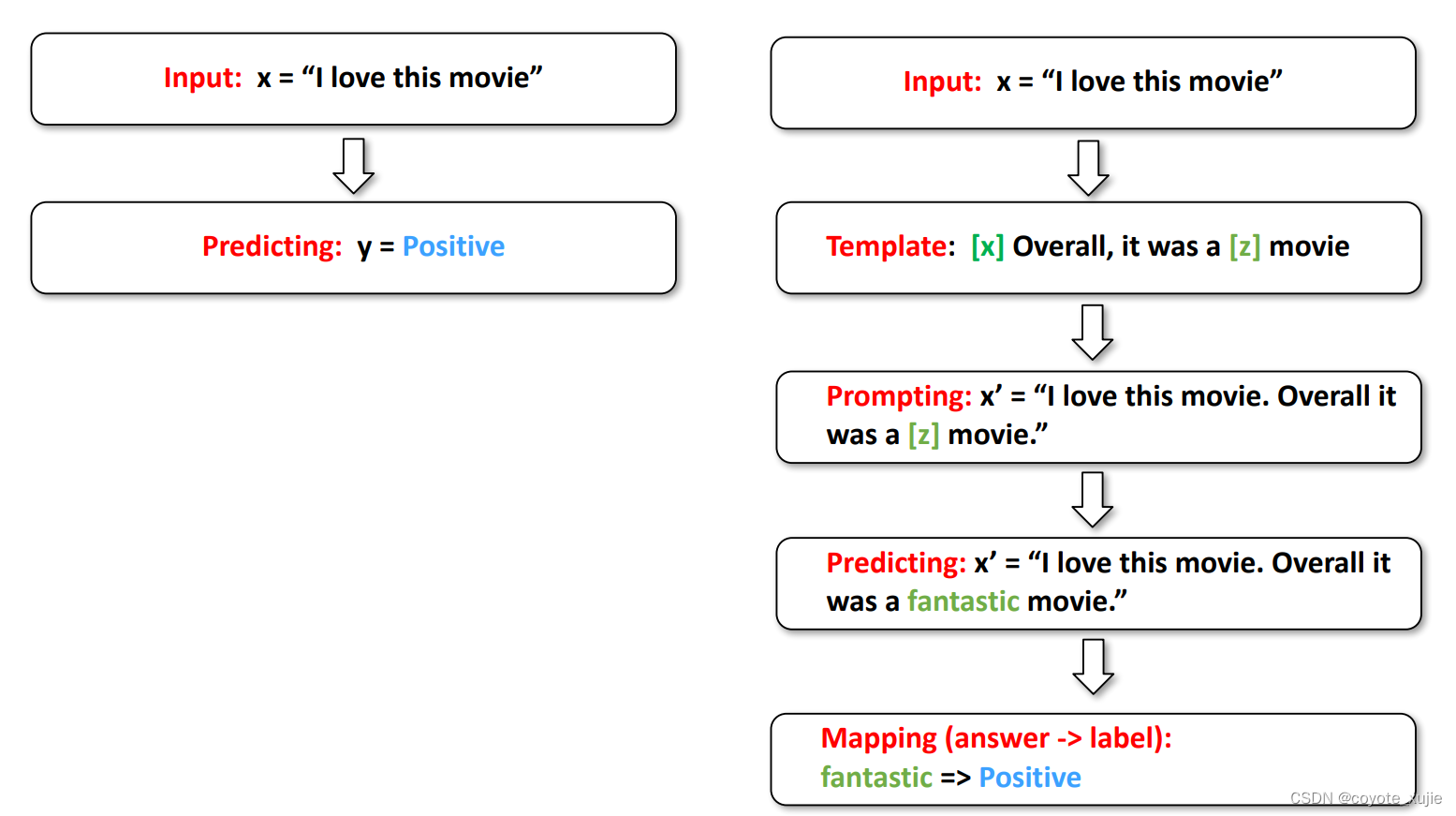

Pre-train, Prompt, Predict: what is prompting

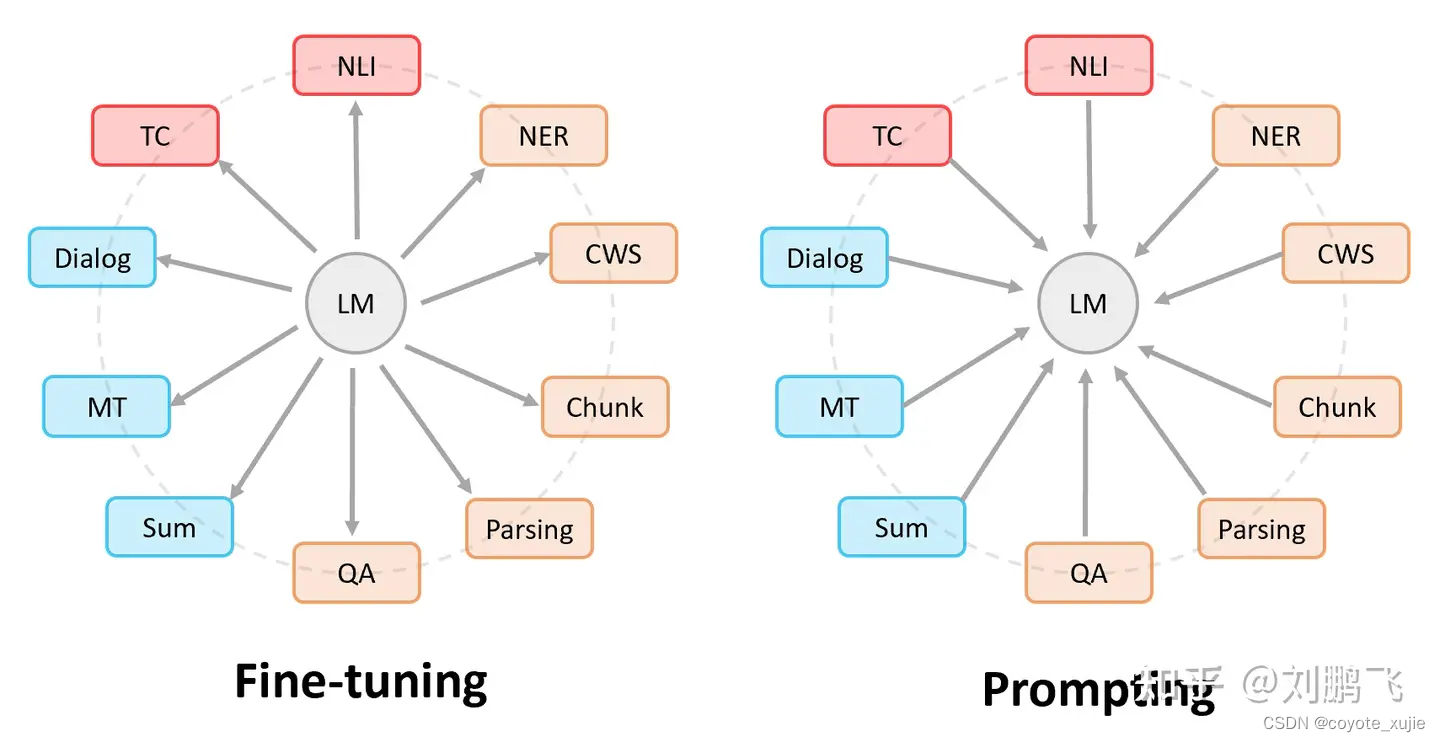

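- fine-tuning: changes the model structure (e.g., adds a task-specific output layer) so that the model fits the downstream task

- prompt learning: keeps the model structure unchanged and reformulates the task description so that the downstream task fits the model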

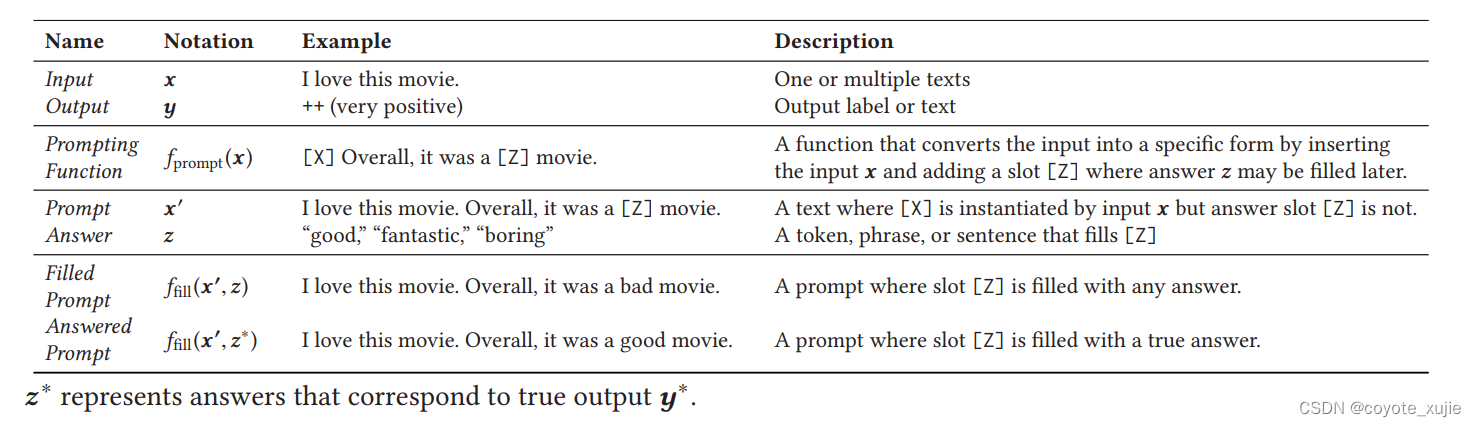

Pre-train, Prompt, Predict: workflow of prompting

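- Template: design a prompt template for the task that contains an input slot [X] and an answer slot [Z], then fill the input into the input slot according to the template

- Mapping (Verbalizer): map the model's predicted answer back to a label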
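The two steps can be sketched for a sentiment task. The template string, answer words, and scores are hypothetical; `word_scores` stands in for a masked language model's scores for candidate words at the answer slot, so no real model is called.

```python
TEMPLATE = "[X] Overall, it was a [Z] movie."               # hypothetical cloze template
VERBALIZER = {"great": "positive", "terrible": "negative"}  # answer word -> label

def fill_template(template, x, answer_token="[MASK]"):
    """Put the input into the input slot [X]; the answer slot [Z] becomes the
    token the language model is asked to predict."""
    return template.replace("[X]", x).replace("[Z]", answer_token)

def verbalize(word_scores, verbalizer):
    """Map the model's prediction back to a label: among the verbalizer's
    answer words, pick the highest-scoring one and return its label."""
    best_word = max(verbalizer, key=lambda w: word_scores.get(w, float("-inf")))
    return verbalizer[best_word]

prompt = fill_template(TEMPLATE, "I love this film.")
print(prompt)  # I love this film. Overall, it was a [MASK] movie.

# Stand-in for the LM's scores at the [MASK] position
scores = {"great": 0.62, "good": 0.80, "terrible": 0.03}
print(verbalize(scores, VERBALIZER))  # positive ("good" is ignored: not an answer word)
```

Nothing here adds parameters: the pre-trained model's own word-prediction head does the work, and only the input text and the word-to-label mapping change.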

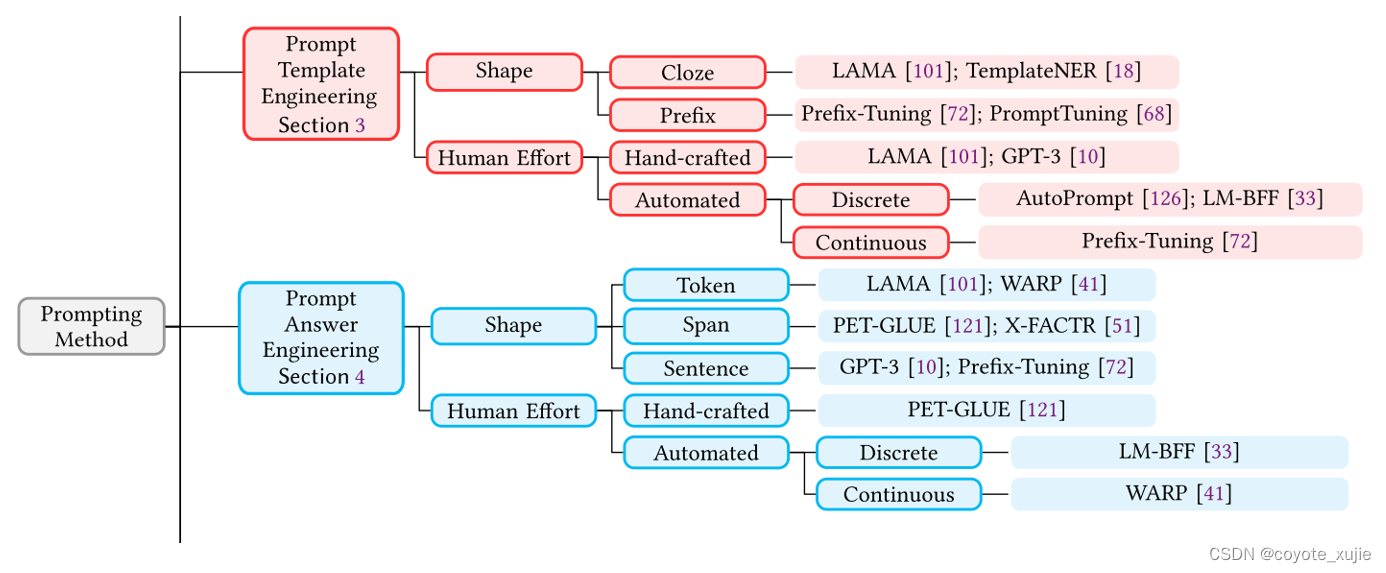

Pre-train, Prompt, Predict: prompt design

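The two most important parts of prompting are the design of the template and the design of the verbalizer.

Each can be classified either by shape, chosen according to the task type and the pre-trained model, or by how it is produced (human effort).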
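One common way to describe template shape is by where the answer slot sits: a cloze prompt places [Z] inside the text (a natural fit for masked LMs such as BERT), while a prefix prompt ends with [Z] so that a left-to-right LM such as GPT can simply continue the text. The check below is a minimal sketch of that distinction; the function name and example templates are hypothetical, and the human-effort axis (manual versus automatically generated templates) is not covered.

```python
def template_shape(template):
    """Classify a template by the position of its answer slot [Z].

    'prefix': [Z] is at the very end, so the model continues the text
              (suits auto-regressive LMs such as GPT).
    'cloze':  [Z] appears inside the text, to be filled in like a blank
              (suits masked LMs such as BERT).
    """
    t = template.rstrip()
    if "[Z]" not in t:
        raise ValueError("template has no answer slot [Z]")
    return "prefix" if t.endswith("[Z]") else "cloze"

print(template_shape("[X] Overall, it was a [Z] movie."))  # cloze
print(template_shape("English: [X] French: [Z]"))          # prefix
```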
