Cleaning house data

As a bootcamp project, I was asked to analyze data about the sale prices of houses in King County, Washington, in 2014 and 2015. The dataset is well known to students of data science because it lends itself to linear regression modeling. You can take a look at the data over at Kaggle.com.

In this post, I’ll describe my process of cleaning this dataset to prepare for modeling it using multiple linear regression, which allows me to consider the impact of multiple factors on a home’s sale price at the same time.

Defining my dataset

One of the interesting things about this dataset is that King County is home to some pretty massive wealth disparity, both internally and in comparison with state and national averages. Some of the world’s richest people live there, and yet 10% of the county’s residents live in poverty. Seattle is in King County, and the median household income of $89,675, 49% higher than the national average, can probably be attributed in part to the strong presence of the tech industry in the area. Home prices in the dataset range from a modest $78,000 to over $7 million.

```python
# Import needed packages
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns

# Read in the data and view summary statistics
data = pd.read_csv('kc_house_data.csv')
data.describe()
```

These disparities mean that it is hard to find a linear model that can describe the data while keeping error reasonably low. Common sense tells us that a twelve-bedroom house isn’t simply four times bigger than a three-bedroom house; it’s a mansion, a luxury villa, a thing of a different nature than a typical family home.

While exploring this dataset, I decided that not only was I unlikely to find a model that could describe modest homes and mansions alike, I also didn’t want to. Billionaires, if you’re reading this: I’m sure there is someone else who can help you figure out how to maximize the sale price of your Medina mansion. I would rather use my skills to help middle-income families understand how they can use homeownership to build wealth.

This thinking brought me to my first big decision about cleaning the data: I decided to cut it down to help me focus on midrange family homes. I removed the top 10% most expensive homes and then limited the remaining data to just those homes with 2 to 5 bedrooms.

# Filter the dataset

midrange_homes = data[(data['price'] < np.quantile(data['price'], 0.9))

& (data['bedrooms'].isin(range(2, 6)))]

# View summary statistics

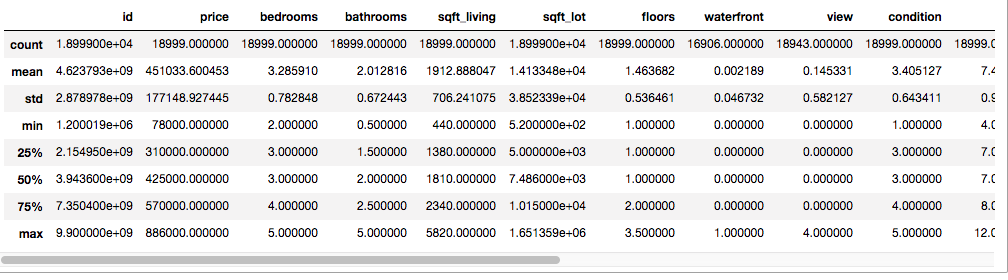

midrange_homes.describe()

All told, this left me with 18,999 homes with a median sale price of $425,000. A little fiddling with a mortgage calculator persuaded me that this could be an affordable price for a family earning the median household income of $89,675, assuming they had excellent credit, could make a 14% down payment, and got a reasonable interest rate.
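
The affordability check boils down to the standard fixed-rate amortization formula, payment = P * r * (1 + r)^n / ((1 + r)^n - 1), where P is the loan amount, r the monthly interest rate, and n the number of monthly payments. The sketch below runs it on the figures above; the 30-year term and 4% annual rate are assumptions for illustration only, not numbers from the original analysis, and taxes, insurance, and fees are left out.

```python
def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed-rate mortgage payment: P * r * (1 + r)**n / ((1 + r)**n - 1)."""
    r = annual_rate / 12          # monthly interest rate
    n = years * 12                # total number of monthly payments
    if r == 0:
        return principal / n      # no interest: just split the principal evenly
    growth = (1 + r) ** n
    return principal * r * growth / (growth - 1)


price = 425_000                   # median sale price after filtering
down_payment = 0.14 * price       # 14% down payment
loan = price - down_payment

# Assumed (hypothetical) terms: 30-year fixed at 4% APR
payment = monthly_payment(loan, annual_rate=0.04, years=30)

monthly_income = 89_675 / 12      # median household income, per month
share_of_income = payment / monthly_income

print(f"Loan: ${loan:,.0f}")
print(f"Monthly payment: ${payment:,.0f}")
print(f"Share of gross monthly income: {share_of_income:.0%}")
```

Under those assumed terms the principal-and-interest payment comes to roughly $1,745 a month, a bit under a quarter of the median household's gross monthly income.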

Limiting the price and number of bedrooms had a nice collateral effect of making most of the extreme values in the dataset disappear. At this point, I was ready to move on to my first real cleaning step: resolving missing values.

Missing no more

Many datasets come with missing values, where someone forgot or chose not to collect or record a value for a particular variable in a particular observation. Missing values are a problem because they can interfere with several forms of analysis. They may be a different data type than the other values (e.g., text instead of a number). If lots of values are missing for a particular variable or observation, that column or row may be effectively useless, which is a waste of all the good values that were there. It is important to resolve missing values before doing any quantitative analysis so that we can better understand what our calculations actually represent.

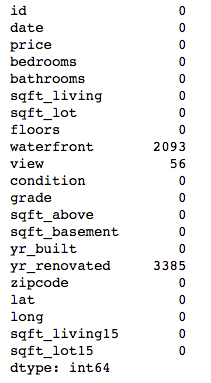

# Check for missing values by column

midrange_homes.isna().sum()

There are a couple of typical ways to resolve missing values. First, you can just remove the columns or rows with missing values from the dataset altogether. This definitely protects you from the perils of missing data, but at the expense of any perfectly good data that happened to be in one of those columns or rows.
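
In pandas, both versions of this approach go through `DataFrame.dropna`: by default it drops rows, `axis=1` drops columns instead, and `thresh` keeps only the rows or columns with at least that many non-missing values. A minimal sketch on a small made-up frame (the values are hypothetical, not taken from the King County data):

```python
import numpy as np
import pandas as pd

# Toy frame with a few gaps (hypothetical values)
df = pd.DataFrame({
    "price": [310_000, 455_000, 520_000, 389_000],
    "waterfront": [0.0, np.nan, 0.0, 1.0],
    "yr_renovated": [np.nan, np.nan, 1995.0, np.nan],
})

# Drop every row that has at least one missing value
rows_kept = df.dropna()

# Drop every column that has at least one missing value
cols_kept = df.dropna(axis=1)

# Middle ground: keep only columns with at least 3 non-missing values
mostly_complete = df.dropna(axis=1, thresh=3)

print(rows_kept.shape, cols_kept.shape, mostly_complete.shape)
```

Dropping rows here throws away three of the four sales, including every perfectly good `price` in them, which is exactly the cost described above.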

An alternative is to change the missing values to another value that you think is reasonable in context. This could be the mean, median, or mode of the existing values. It could also be some other value that conveys “missingness” while still being accessible to analysis, like 0, ‘not recorded,’ etc.
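
Each of these fills is a one-liner with `Series.fillna`. The sketch below uses a hypothetical column; the sentinel value of -1 is an arbitrary choice that only works because it falls outside the range of real values.

```python
import numpy as np
import pandas as pd

# Hypothetical column with gaps
s = pd.Series([0.0, 0.0, np.nan, 2.0, 0.0, np.nan, 4.0])

filled_mean = s.fillna(s.mean())      # mean of the observed values
filled_median = s.fillna(s.median())  # median of the observed values
filled_mode = s.fillna(s.mode()[0])   # most frequent observed value
filled_flag = s.fillna(-1)            # sentinel marking "not recorded"

print(pd.DataFrame({"original": s, "mean": filled_mean,
                    "median": filled_median, "mode": filled_mode,
                    "sentinel": filled_flag}))
```

Here the mean (1.2) differs from the median and the mode (both 0), which is typical of a skewed column where most values are 0.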

My dataset had missing values in three columns: waterfront, view, and yr_renovated.

Waterfront records whether a house is on a waterfront lot, with 1 apparently meaning “yes” and 0 “no.”

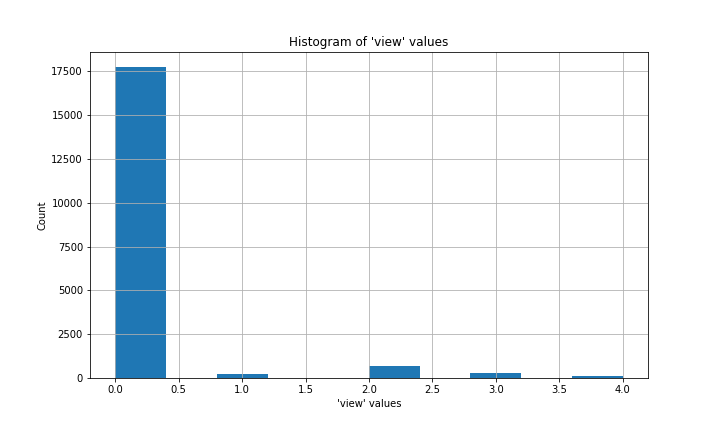

The meaning of the view column is not entirely clear. If you do some searching through other people’s uses of this dataset, you’ll see it interpreted in various ways. The best interpretation I found was that it reflects how much a house was viewed before it sold, and the numeric values (0 to 4) probably represent tiers of a scale rather than literal numbers of views.

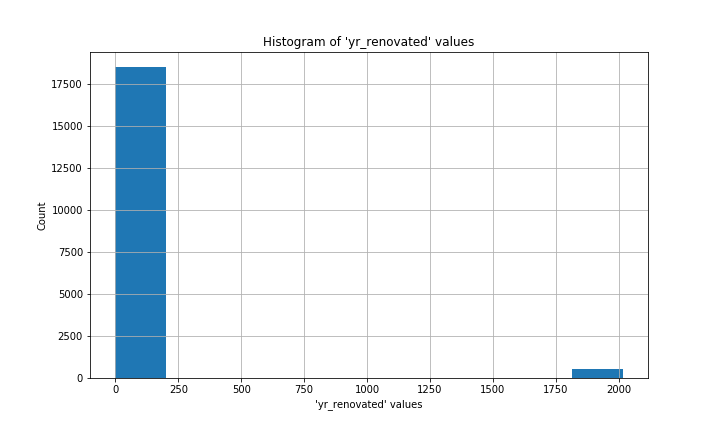

Yr_renovated is also a bit tricky. The vast majority of values are 0, with other values concentrated in the last few decades before 2014/2015.

In each case, 0 was the most common value (the mode) by a long shot. I’ll never know whether the folks who recorded this data meant to put 0 for each missing value, or if those values were truly unknown. Under the circumstances, filling the missing values with 0 wouldn’t make a substantial difference to the overall distribution of values, so that is what I chose to do.
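
One quick way to back up that judgment is to compare a column's value shares before and after filling. A sketch with a hypothetical `view`-style column in which 0 dominates:

```python
import numpy as np
import pandas as pd

# Hypothetical column: mostly 0, a few higher tiers, two gaps
view = pd.Series([0, 0, 0, 0, 0, 0, 2, 3, np.nan, np.nan])

before = view.value_counts(normalize=True)           # shares among recorded values
after = view.fillna(0).value_counts(normalize=True)  # shares once gaps become 0

print(pd.DataFrame({"before": before, "after": after}).fillna(0))
```

Filling the two gaps with 0 nudges the share of zeros from 75% to 80% in this toy column; the fewer values are missing relative to the column's length, the smaller that shift becomes.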

# Fill missing values with 0, the mode of each column

midrange_homes['waterfront'] = midrange_homes['waterfront'].fillna(0.0)

midrange_homes['waterfront'] = midrange_homes['waterfront'].astype('int64')

midrange_homes['view'] = midrange_homes['view'].fillna(0.0).astype('int64')

midrange_homes['yr_renovated'] = midrange_homes['yr_renovated'].fillna(0)

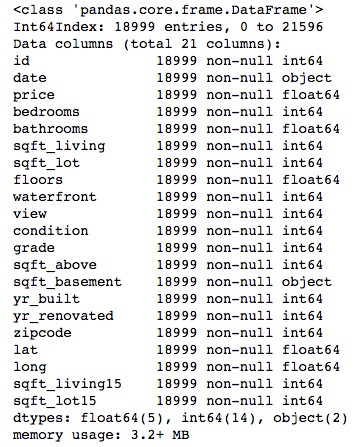

midrange_homes['yr_renovated'] = midrange_homes['yr_renovated'].astype('int64')Looking at the info on this dataset, we can see that two columns — date and sqft_basement — are “objects” (i.e., text) while the rest are numbers (integers or decimal values, a.k.a. floats). But dates and basement square footage are values that I will want to treat like numbers, so I need to changes these columns to new datatypes.

```python
# Review column datatypes
midrange_homes.info()
```

Date is a little tricky because it contains three pieces of information (day, month, and year) in one string. Converting it to a “datetime” object won’t do me much good; I know from experimentation that my regression model doesn’t like datetimes as inputs. Because all the observations in this dataset come from a short period in 2014–2015, there’s not much I can learn about changes over time in home prices. The month of each sale is the only part that really interests me, because it could help me detect a seasonal pattern in sale prices.
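
If you'd rather not split strings by hand, pandas can parse the dates and return just the month as an integer, which a regression model accepts without complaint. A sketch, assuming the dates are in the month/day/year form implied by the `split('/')` code; the sample strings are invented:

```python
import pandas as pd

# Hypothetical sale dates in month/day/year form
dates = pd.Series(["10/13/2014", "12/9/2014", "2/25/2015", "5/6/2015"])

# Parse with an explicit format, then keep only the month number (1-12)
parsed = pd.to_datetime(dates, format="%m/%d/%Y")
month = parsed.dt.month

print(month.tolist())
```

Passing an explicit `format` keeps pandas from guessing the layout, which matters for ambiguous dates such as 2/3/2015.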

I use the string .split() method in a list comprehension to grab just the month from each date and put it into a new column. The original date column can now be eliminated in the next step.

```python
# Create 'month' column from the first field of each month/day/year date string
midrange_homes['month'] = [x.split('/')[0] for x in midrange_homes['date']]
midrange_homes['month'] = pd.to_numeric(midrange_homes['month'], errors='coerce')
```

Stop, drop, and .info()

Now I’m ready to drop some columns that I don’t want to consider when I build my multiple linear regression model.

I don’t want id around because it is supposed to be a unique identifier for each sale record — a serial number, basically — and I don’t expect it to have any predictive power.

Date isn’t needed anymore because I now have the month of each sale stored in the month column.

Remember sqft_basement, a numeric column trapped in a text datatype? I didn’t bother coercing it to integers or floats above because I noticed that sqft_living appears to be the sum of sqft_basement and sqft_above. My focus is on things that a family can do to maximize the sale price of their home, and I doubt many people are going to shift the balance of above- versus below-ground space in their homes without also increasing overall square footage. I’ll drop the two more specific columns in favor of using sqft_living to convey similar information.
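
Before dropping the two columns, the identity can be checked rather than eyeballed. A sketch on toy rows; the `'?'` placeholder is an assumption about what might keep `sqft_basement` stored as text, and `pd.to_numeric(..., errors='coerce')` turns any such entry into NaN so it is simply skipped:

```python
import pandas as pd

# Hypothetical rows; sqft_basement arrives as text
homes = pd.DataFrame({
    "sqft_living": [1180, 2570, 770, 1960],
    "sqft_above": [1180, 2170, 770, 1050],
    "sqft_basement": ["0.0", "400.0", "?", "910.0"],
})

# Coerce the text column to numbers; unparseable entries become NaN
basement = pd.to_numeric(homes["sqft_basement"], errors="coerce")

# Compare only the rows where the basement value could be parsed
known = basement.notna()
matches = (homes.loc[known, "sqft_above"] + basement[known]
           == homes.loc[known, "sqft_living"])

print(f"{matches.mean():.0%} of parseable rows satisfy living = above + basement")
```

On the real data, a share below 100% would mean the three columns don't always agree, and dropping the two narrower ones would deserve a second look.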

# Drop unneeded columns

midrange_homes.drop(['id', 'date', 'sqft_above', 'sqft_basement'],

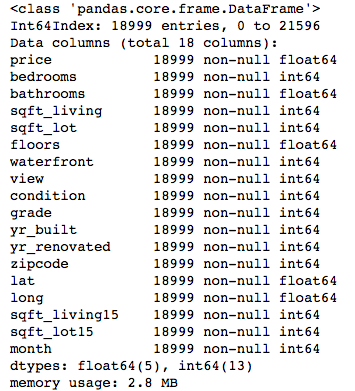

axis=1, inplace=True)# Review the remaining columns

midrange_homes.info()

What remains is a DataFrame with 18,999 rows and 18 columns, all numeric datatypes, perfect for regression modeling.

It’s categorical, dummy

So I fit models to a few minor variations of my dataset, but the results weren’t what I wanted. I was getting adjusted R-squared values around 0.67, which meant that my model was explaining only about 67% of the variability in home price in this dataset. Given the limits of the data itself (no info on school districts, walkability of the neighborhood, flood risk, etc.), my model is never going to explain 100% of the variability in price. Still, 67% is lower than I would like.
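
Adjusted R-squared is ordinary R-squared with a penalty for the number of predictors, so it only rises when a new feature improves the fit by more than chance would. A minimal NumPy sketch of both quantities, with `n` observations and `p` predictors (not counting the intercept); the numbers in the example are made up:

```python
import numpy as np


def r_squared(y_true, y_pred):
    """Share of the variance in y_true that the predictions account for."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares
    return 1.0 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Penalize R^2 for using p predictors on n observations."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


# Example with made-up prices (in $1,000s) and predictions
y = [300, 420, 510, 380, 450]
y_hat = [320, 400, 500, 390, 470]
r2 = r_squared(y, y_hat)
print(round(r2, 3), round(adjusted_r_squared(r2, n=len(y), p=2), 3))
```

With n around 19,000, the factor (n - 1) / (n - p - 1) stays close to 1 even with dozens of predictors, so on this dataset the adjusted and unadjusted values should be nearly identical.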

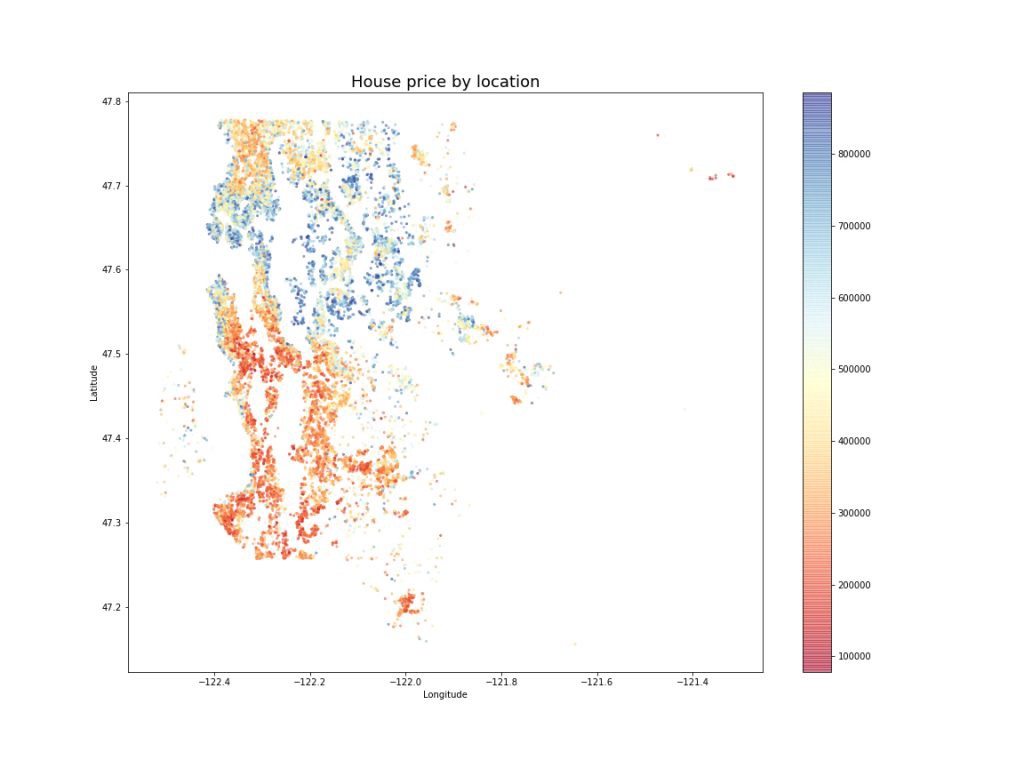

As I mentioned above, King County is home to some extremes of wealth, and even though I excluded the most expensive houses from my dataset, I didn’t exclude their more modest neighbors. The locations of the houses, expressed through zipcode, could be having an effect on price. On my instructor’s advice, I tried one-hot encoding on the zipcode column.

```python
# Generate dummy variables as 0/1 integer columns
# (recent pandas versions default to boolean columns)
zip_dummies = pd.get_dummies(midrange_homes['zipcode'], prefix='zip', dtype=int)

# Drop the original 'zipcode' column
mh_no_zips = midrange_homes.drop('zipcode', axis=1)

# Concatenate the dummies to the copy of 'midrange_homes'
mh_zips_encoded = pd.concat([mh_no_zips, zip_dummies], axis=1)

# Drop one of the dummy variables so that zipcode serves as the reference
mh_zips_encoded.drop('zip_98168', axis=1, inplace=True)
```

One-hot encoding, also known as creating dummy variables, takes a variable and splits each unique value off into its own column filled with zeroes and ones. In this case, I ended up with a column for each zipcode in the dataset, filled with a 1 if a house was in that zipcode and 0 if not. I then dropped one zipcode column arbitrarily to represent a default location. The coefficients my model generated for the other zipcodes would represent what a homeowner stood to gain or lose by having their home in those zipcodes as opposed to the dropped one.
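
A toy version shows what the encoding produces. The sales below are hypothetical, and 98168 plays the same reference role as in the code above:

```python
import pandas as pd

# Hypothetical sales; zipcode is a label, not a quantity
homes = pd.DataFrame({
    "price": [350_000, 610_000, 275_000, 590_000],
    "zipcode": [98168, 98103, 98168, 98115],
})

# One 0/1 column per distinct zipcode
dummies = pd.get_dummies(homes["zipcode"], prefix="zip", dtype=int)

# Drop one column to serve as the reference (baseline) location
encoded = pd.concat([homes.drop(columns="zipcode"),
                     dummies.drop(columns="zip_98168")], axis=1)

print(encoded)
```

A row of all zeros means the house is in the reference zipcode, so every remaining coefficient is measured relative to it. Keeping all of the dummies alongside an intercept would make them perfectly collinear (the so-called dummy variable trap), which is why one has to go; `drop_first=True` in `get_dummies` is a shortcut that drops the first category rather than a chosen one.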

Treating zipcodes as a categorical variable instead of a continuous, quantitative one paid off immediately: the adjusted R-squared value jumped to 0.82. My model could now explain 82% of the variability in price across this dataset. With that happy outcome, I was ready to move on to validating the model, or testing its ability to predict the prices of houses it hadn’t encountered yet.

That’s a summary of how I explored and cleaned the data for my project on house prices in King County, Washington. You can read my code or look at the slides of a non-technical presentation of my results over on my GitHub repository.

Cross-posted from jrkreiger.net.

Source: https://medium.com/swlh/cleaning-house-data-4fcc09017b6c
