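The task in this exercise is to recognize handwritten digits (from 0 to 9) using logistic regression and neural networks. Automated handwritten digit recognition is widely used today, for example in reading postal codes.

Multi-class classification with logistic regression

Exercise 2 used logistic regression for binary classification. Here we extend it to multi-class classification with the one-vs-all approach.

Loading the dataset

This time the data is in MATLAB format, so we load it with scipy.io.loadmat. SciPy is a widely used package for mathematics, science, and engineering that covers interpolation, integration, optimization, image processing, numerical solution of ordinary differential equations, signal processing, and more. It operates on NumPy arrays, so NumPy and SciPy work together.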
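To get a feel for what loadmat returns before touching the real file, here is a minimal sketch that writes a tiny made-up .mat payload to an in-memory buffer and reads it back. The array contents and the names `buffer` and `variable_names` are illustrative assumptions, not part of the exercise data.

```python
import io

import numpy as np
from scipy.io import loadmat, savemat

# Build a tiny stand-in for a .mat file: 3 samples with 4 features each.
# The values are made up purely for illustration.
buffer = io.BytesIO()
savemat(buffer, {'X': np.arange(12.0).reshape(3, 4),
                 'y': np.array([[10], [1], [2]])})
buffer.seek(0)

data = loadmat(buffer)

# loadmat returns a dict; keys that start with '__' hold file metadata.
variable_names = sorted(k for k in data if not k.startswith('__'))
print(variable_names)
print(data['X'].shape, data['y'].shape)
```

Note that the label vector comes back as a 2-D column of shape (3, 1) rather than a flat array, which matters later when comparing predictions with labels.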

import numpy as np

import scipy.io

from scipy.io import loadmat

import matplotlib.pyplot as plt

import scipy.optimize as opt

data = scipy.io.loadmat('ex3data1.mat')

X = data['X']

y = data['y']

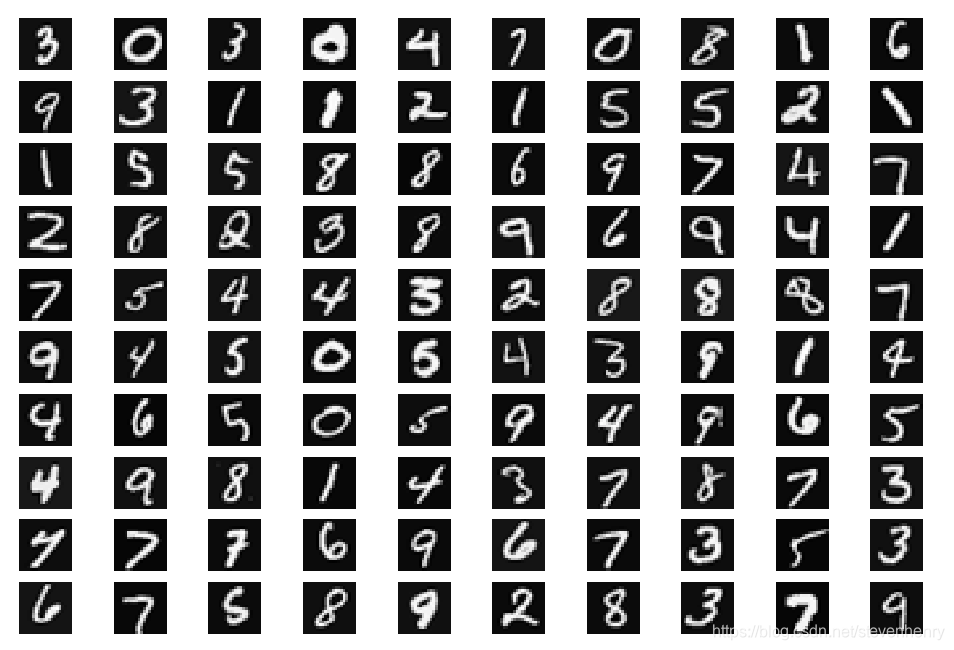

The dataset contains 5000 samples, each a 20×20 grayscale image.

Visualizing the data

Display 100 randomly selected images.

def display_data(sample_images):
    # Draw the first 100 rows of sample_images on a 10 x 10 grid
    fig, ax_array = plt.subplots(nrows=10, ncols=10, figsize=(6, 4))
    for row in range(10):
        for column in range(10):
            ax_array[row, column].matshow(sample_images[10 * row + column].reshape((20, 20)).T, cmap='gray')
            ax_array[row, column].axis('off')
    plt.show()
    return

rand_samples = np.random.permutation(X.shape[0])  # shuffle the sample indices

sample_images = X[rand_samples[0:100], :]

display_data(sample_images)

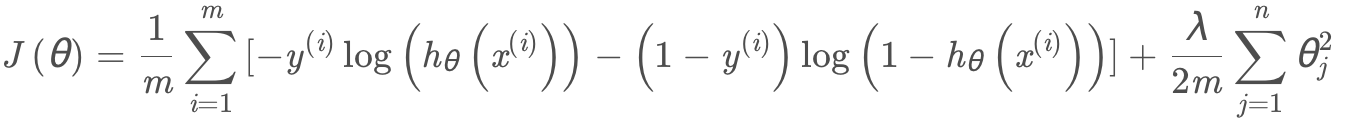

Vectorizing Logistic Regression

Recall the cost function for logistic regression:

where:

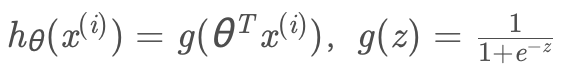
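For reference, the standard regularized logistic regression cost and its gradient can be written as follows. This is a sketch based on the textbook form that the functions regularized_cost and regularized_gradient in this post compute, not a transcription of the figure; here $\lambda$ corresponds to the argument `l`, $m$ is the number of samples, and $\theta_0$ is the unregularized intercept.

```latex
J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \left[ -y^{(i)} \log h_\theta(x^{(i)}) - (1 - y^{(i)}) \log\left(1 - h_\theta(x^{(i)})\right) \right] + \frac{\lambda}{2m} \sum_{j=1}^{n} \theta_j^2

h_\theta(x) = g(\theta^T x), \qquad g(z) = \frac{1}{1 + e^{-z}}

\nabla_\theta J = \frac{1}{m} X^T \left( g(X\theta) - y \right) + \frac{\lambda}{m} \begin{bmatrix} 0 \\ \theta_1 \\ \vdots \\ \theta_n \end{bmatrix}
```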

Vectorized implementation:

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def regularized_cost(theta, X, y, l):
    # The intercept theta[0] is not regularized
    thetaReg = theta[1:]
    first = (-y * np.log(sigmoid(X.dot(theta)))) + (y - 1) * np.log(1 - sigmoid(X.dot(theta)))
    reg = (thetaReg.dot(thetaReg)) * l / (2 * len(X))
    return np.mean(first) + reg

Gradient

def regularized_gradient(theta, X, y, l):
    thetaReg = theta[1:]
    first = (1 / len(X)) * X.T @ (sigmoid(X.dot(theta)) - y)
    # Prepend 0 so that the intercept term receives no regularization
    reg = np.concatenate([np.array([0]), (l / len(X)) * thetaReg])
    return first + reg
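A common way to gain confidence in a hand-written gradient is to compare it against central finite differences. The sketch below restates the cost and gradient in a compact, self-contained form and checks them on random toy data. The helper `numerical_gradient` and all the `_toy` values are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def regularized_cost(theta, X, y, l):
    h = sigmoid(X.dot(theta))
    data_term = np.mean(-y * np.log(h) - (1 - y) * np.log(1 - h))
    reg_term = l / (2 * len(X)) * theta[1:].dot(theta[1:])
    return data_term + reg_term

def regularized_gradient(theta, X, y, l):
    grad = X.T.dot(sigmoid(X.dot(theta)) - y) / len(X)
    grad[1:] += (l / len(X)) * theta[1:]  # the intercept is not regularized
    return grad

def numerical_gradient(f, theta, eps=1e-6):
    # Hypothetical helper: central difference along each coordinate
    approx = np.zeros_like(theta)
    for j in range(theta.size):
        step = np.zeros_like(theta)
        step[j] = eps
        approx[j] = (f(theta + step) - f(theta - step)) / (2 * eps)
    return approx

rng = np.random.default_rng(0)
X_toy = np.c_[np.ones(20), rng.normal(size=(20, 3))]  # intercept column + 3 features
y_toy = (rng.random(20) > 0.5).astype(float)
theta_toy = rng.normal(size=4) * 0.1
l_toy = 1.0

analytic = regularized_gradient(theta_toy, X_toy, y_toy, l_toy)
numeric = numerical_gradient(lambda t: regularized_cost(t, X_toy, y_toy, l_toy), theta_toy)
max_diff = np.max(np.abs(analytic - numeric))
print(max_diff)
```

If the two gradients disagree by more than a very small amount, the analytic gradient (or the cost) most likely contains a mistake.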

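One-vs-all Classification

This task has 10 classes. Logistic regression is a binary classifier, so to apply it to a multi-class problem we train one classifier per class, each of which separates "this class" from "all other classes".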
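To make the "this class versus the rest" relabeling concrete, here is a small sketch that turns a vector of multi-class labels into one binary target vector per class. The label values and the name `binary_targets` are made up for illustration.

```python
import numpy as np

labels = np.array([1, 2, 10, 2, 3])  # made-up multi-class labels
classes = np.unique(labels)

# For each class k, samples of class k become 1 and everything else becomes 0.
binary_targets = {int(k): (labels == k).astype(int) for k in classes}

for k, target in binary_targets.items():
    print(k, target)
```

Each of these binary vectors is what a single logistic regression classifier would be trained on.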

from scipy.optimize import minimize

def one_vs_all(X, y, l, K):
    # One row of parameters per class; X must already contain the intercept column
    all_theta = np.zeros((K, X.shape[1]))  # (10, 401)
    for i in range(1, K + 1):
        theta = np.zeros(X.shape[1])
        # Binary targets: 1 for samples of class i, 0 for all others
        y_i = np.array([1 if label == i else 0 for label in y])
        ret = minimize(fun=regularized_cost, x0=theta, args=(X, y_i, l), method='TNC',
                       jac=regularized_gradient, options={'disp': True})
        all_theta[i - 1, :] = ret.x
    return all_theta

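A tip for debugging vectorized code: experienced machine learning engineers routinely check matrix dimensions to confirm that an operation does what they intend.

Prediction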
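As a minimal sketch of this habit, the snippet below uses assertions to pin down the shapes involved in the one-vs-all computation. The sample count `m = 5` and the zero-filled arrays are placeholders chosen for illustration; only the shapes matter.

```python
import numpy as np

m, n, K = 5, 400, 10                      # samples, pixels per image, classes
X_raw = np.zeros((m, n))                  # placeholder data
X_bias = np.c_[np.ones((m, 1)), X_raw]    # add the intercept column
all_theta = np.zeros((K, n + 1))          # one parameter row per class

assert X_bias.shape == (m, n + 1)

scores = X_bias.dot(all_theta.T)          # (m, n + 1) x (n + 1, K)
assert scores.shape == (m, K)

print(X_bias.shape, all_theta.shape, scores.shape)
```

A failed assertion here points directly at the operation whose operands have the wrong orientation, which is usually faster than reading a broadcasting error further down.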

def predict_one_vs_all(all_theta, X):
    m = np.size(X, 0)
    # Add a column of ones (the intercept term) to the X data matrix
    X = np.c_[np.ones((m, 1)), X]
    hypothesis = sigmoid(X.dot(all_theta.T))
    # Column index of the largest probability in each row; +1 because labels run from 1 to 10
    pred = np.argmax(hypothesis, 1) + 1
    return pred

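Here hypothesis has shape (5000, 10): one row per sample, with the 10 columns holding the predicted probability of each label. The label with the highest probability is taken as the final prediction, so pred is an array of 5000 predicted labels.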
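The row-wise argmax step can be illustrated on a tiny made-up probability matrix with 3 samples and 3 classes (the values in `probs` are invented for this sketch):

```python
import numpy as np

# Each row holds the per-class probabilities for one sample.
probs = np.array([[0.1, 0.7, 0.2],
                  [0.8, 0.1, 0.1],
                  [0.2, 0.3, 0.9]])

# argmax along axis 1 gives the 0-based column of the largest value in each row;
# adding 1 converts it to the 1-based labels used in this exercise.
pred = np.argmax(probs, axis=1) + 1
print(pred)  # [2 1 3]
```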

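# Train on X with an intercept column added. The original post does not show this call;
# l = 0.1 is an assumed regularization strength.
all_theta = one_vs_all(np.c_[np.ones((X.shape[0], 1)), X], y, 0.1, 10)

pred = predict_one_vs_all(all_theta, X)

# y is loaded with shape (5000, 1); flatten it so the comparison is element-wise
print('Training Set Accuracy: %.2f%%' % (np.mean(pred == y.ravel()) * 100))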
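One shape detail is worth checking when computing accuracy. loadmat returns a MATLAB column vector as a 2-D array of shape (m, 1), while argmax produces a flat array of shape (m,). Comparing the two broadcasts to an (m, m) matrix and silently yields a wrong accuracy. The sketch below shows the effect on made-up values.

```python
import numpy as np

y_col = np.array([[1], [2], [3], [1]])  # shape (4, 1), like a label column from loadmat
pred = np.array([1, 2, 3, 2])           # shape (4,), like the output of argmax

wrong = pred == y_col           # broadcasts to shape (4, 4)
right = pred == y_col.ravel()   # element-wise, shape (4,)

print(wrong.shape, np.mean(wrong))
print(right.shape, np.mean(right))
```

Only the second comparison measures the fraction of correctly predicted samples (3 out of 4 here).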

Neural Networks

Here we only need to verify the provided weights (the theta parameters) and check their classification accuracy.

## ================ Part 2: Loading Parameters ================

print('Loading Saved Neural Network Parameters ...')

# Load the weights into variables Theta1 and Theta2

weight = scipy.io.loadmat('ex3weights.mat')

Theta1, Theta2 = weight['Theta1'], weight['Theta2']

Prediction

def load_weight(path):
    data = loadmat(path)
    return data['Theta1'], data['Theta2']

theta1, theta2 = load_weight('ex3weights.mat')

theta1.shape, theta2.shape

X = np.insert(X, 0, values=np.ones(X.shape[0]), axis=1)  # intercept

# Forward propagation

a1 = X

z2 = a1.dot(theta1.T)

z2.shape

a2 = sigmoid(z2)

a2 = np.insert(a2, 0, 1, axis=1)  # add the bias unit after the activation so it stays exactly 1

a2.shape

z3 = a2.dot(theta2.T)

z3.shape

a3 = sigmoid(z3)

a3.shape

y_pred = np.argmax(a3, axis=1) + 1

accuracy = np.mean(y_pred == y.ravel())  # flatten y from (5000, 1) so the comparison is element-wise

print('accuracy = {0}%'.format(accuracy * 100))  # accuracy = 97.52%
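The forward pass above can also be wrapped in a single function. The sketch below is one possible arrangement, exercised on random weights rather than the real ones. The function name `forward_predict`, the `_toy` arrays, and the layer sizes (400 inputs, 25 hidden units, 10 outputs) are assumptions for illustration, so the predicted labels themselves are meaningless.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def forward_predict(X, theta1, theta2):
    """Forward propagation through one hidden layer; X has no intercept column."""
    m = X.shape[0]
    a1 = np.c_[np.ones((m, 1)), X]      # input layer plus bias unit
    a2 = sigmoid(a1.dot(theta1.T))      # hidden layer activations
    a2 = np.c_[np.ones((m, 1)), a2]     # bias unit is added after the activation
    a3 = sigmoid(a2.dot(theta2.T))      # output layer, one column per class
    return np.argmax(a3, axis=1) + 1    # 1-based labels

rng = np.random.default_rng(0)
X_toy = rng.random((6, 400))                 # 6 made-up "images"
theta1_toy = rng.normal(size=(25, 401))      # assumed hidden layer of 25 units
theta2_toy = rng.normal(size=(10, 26))       # 10 output classes

labels = forward_predict(X_toy, theta1_toy, theta2_toy)
print(labels.shape)
```

Keeping the bias handling inside the function avoids modifying X in place, so the same raw X can be passed to other routines that add their own intercept column.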
