Logistic regression

Data: two features, x1 and x2, and a label y that is either 0 or 1.

Plotting

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt


def read_file(file):
    # Load the CSV (no header row) and return it as a NumPy array.
    data = pd.read_csv(file, names=['exam1', 'exam2', 'admitted'])
    data = np.array(data)
    return data


def plot_data(X, y):
    plt.figure(figsize=(6, 4), dpi=150)
    X1 = X[y == 1, :]  # admitted examples
    X2 = X[y == 0, :]  # not-admitted examples
    plt.plot(X1[:, 0], X1[:, 1], 'yo')
    plt.plot(X2[:, 0], X2[:, 1], 'k+')
    plt.xlabel('Exam1 score')
    plt.ylabel('Exam2 score')
    plt.legend(['Admitted', 'Not admitted'], loc='upper right')
    plt.show()


print('Plotting data with o indicating (y = 1) examples and + indicating (y = 0) examples.')

plot_data(X, y)

The plot shows a clear boundary between the admitted and not-admitted examples, so next we fit a logistic regression:

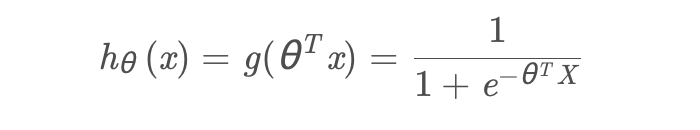

Hypothesis function for logistic regression: hθ(x) = g(θᵀx)

g(x) is the logistic (sigmoid) function:

def sigmoid(x):
    return 1 / (np.exp(-x) + 1)

Here is the plot of the logistic function:

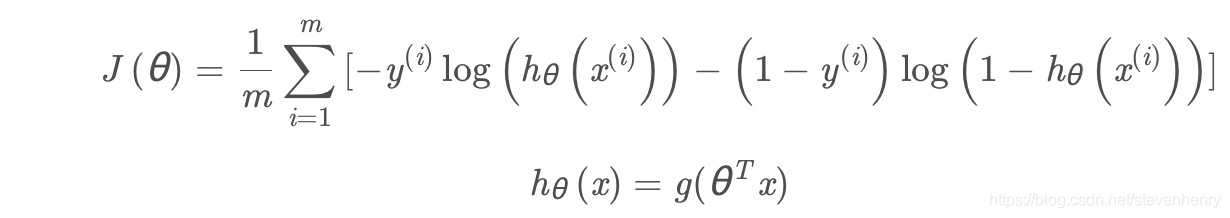
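A few sample values make the shape of the curve concrete. The sketch below reuses the `sigmoid` definition from above; the input values are chosen only for illustration.

```python
import numpy as np


def sigmoid(x):
    # Same definition as in the text: g(x) = 1 / (1 + e^(-x))
    return 1 / (np.exp(-x) + 1)


xs = np.array([-10.0, 0.0, 10.0])
values = sigmoid(xs)

# g(0) is exactly 0.5; large negative inputs approach 0, large positive inputs approach 1
print(values)

# The curve is symmetric around (0, 0.5): g(-x) = 1 - g(x)
print(np.allclose(sigmoid(-xs), 1 - values))
```

This is why 0.5 is the natural threshold for turning the output into a class label.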

Cost function

The cost function for logistic regression:

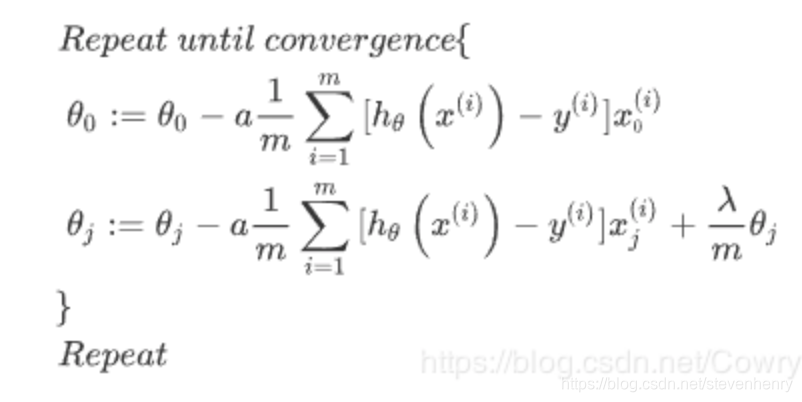
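The code later in this walkthrough calls `cost_function(theta, X, y)`, but its body is not listed. The following is a minimal sketch, assuming the cost is the usual average cross-entropy and that `X` already contains the intercept column. The toy data is made up purely for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1 / (np.exp(-x) + 1)


def cost_function(theta, X, y):
    # Assumed form: J(theta) = -1/m * sum(y*log(h) + (1-y)*log(1-h))
    m, n = X.shape
    theta = theta.reshape((n, 1))
    y = y.reshape((m, 1))
    h = sigmoid(X.dot(theta))
    cost = -(y * np.log(h) + (1 - y) * np.log(1 - h)).sum() / m
    return float(cost)


# Made-up toy data: intercept column plus two features
X_demo = np.array([[1.0, 0.5, 1.5],
                   [1.0, 2.0, 0.3],
                   [1.0, 1.0, 1.0],
                   [1.0, 3.0, 2.5]])
y_demo = np.array([0.0, 1.0, 0.0, 1.0])

# With theta = 0 every prediction is 0.5, so the cost is ln(2) whatever the data
cost_at_zero = cost_function(np.zeros(3), X_demo, y_demo)
print(cost_at_zero)
```

The value ln(2) ≈ 0.693 matches the expected cost at the zero initial theta quoted further down.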

Gradient descent

- Batch gradient descent

- Vectorized formula:

$$\frac{1}{m} X^{T}\left(\mathrm{sigmoid}(X\theta^{T}) - y\right)$$

def gradient(initial_theta, X, y):
    m, n = X.shape
    # Accept a flat theta (as passed by the optimizer) and treat it as a column vector
    initial_theta = initial_theta.reshape((n, 1))
    grad = X.T.dot(sigmoid(X.dot(initial_theta)) - y) / m
    return grad.flatten()
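The walkthrough goes on to hand this gradient to a SciPy optimizer rather than looping by hand. For comparison, a plain batch gradient descent loop could look like the sketch below. The function name `batch_gradient_descent`, the learning rate `alpha`, the iteration count, and the toy data are all assumptions made for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1 / (np.exp(-x) + 1)


def gradient(theta, X, y):
    m, n = X.shape
    theta = theta.reshape((n, 1))
    grad = X.T.dot(sigmoid(X.dot(theta)) - y) / m
    return grad.flatten()


def batch_gradient_descent(X, y, alpha=0.1, iterations=1000):
    # Hypothetical helper: each step uses the gradient over the whole training set
    theta = np.zeros(X.shape[1])
    for _ in range(iterations):
        theta = theta - alpha * gradient(theta, X, y)
    return theta


# Made-up toy data: intercept column plus one feature; larger values are labelled 1
X_demo = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 3.0], [1.0, 4.0]])
y_demo = np.array([[0.0], [0.0], [1.0], [1.0]])

theta_demo = batch_gradient_descent(X_demo, y_demo)
print(theta_demo)
```

On unscaled data such as raw exam scores, a fixed step size usually needs careful tuning or feature scaling, which is one reason to prefer a library optimizer.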

Compute cost and gradient

m, n = X.shape

X = np.c_[np.ones(m), X]

initial_theta = np.zeros((n + 1, 1))

y = y.reshape((m, 1))

cost, grad = cost_function(initial_theta, X, y), gradient(initial_theta, X, y)

print('Cost at initial theta (zeros): %f' % cost)

print('Expected cost (approx): 0.693')

print('Gradient at initial theta (zeros): ')

print('%f %f %f' % (grad[0], grad[1], grad[2]))

print('Expected gradients (approx): -0.1000 -12.0092 -11.2628')

# Compute cost and gradient again with a non-zero test theta

theta1 = np.array([[-24], [0.2], [0.2]], dtype='float64')

cost, grad = cost_function(theta1, X, y), gradient(theta1, X, y)

print('Cost at test theta: %f' % cost)

print('Expected cost (approx): 0.218')

print('Gradient at test theta: ')

print('%f %f %f' % (grad[0], grad[1], grad[2]))

print('Expected gradients (approx): 0.043 2.566 2.647')

Learning the parameters θ

We train with the optimize module from SciPy to obtain the final theta.

Optimizing with scipy.optimize.minimize (in place of fminunc)

initial_theta = np.zeros(n + 1)

import scipy.optimize as opt

# jac=gradient supplies the analytic gradient to the optimizer
result = opt.minimize(fun=cost_function, x0=initial_theta, args=(X, y), method='SLSQP', jac=gradient)

print('Cost at theta found by opt.minimize: %f' % result['fun'])

print('Expected cost (approx): 0.203')

print('theta:')

print('%f %f %f' % (result['x'][0], result['x'][1], result['x'][2]))

print('Expected theta (approx):')

print(' -25.161 0.206 0.201')

Prediction and accuracy

With θ learned, we can make predictions: when hθ(x) is greater than or equal to 0.5, predict y = 1;

when hθ(x) is less than 0.5, predict y = 0.

def predict(theta, X):
    m = np.size(theta, 0)
    rst = sigmoid(X.dot(theta.reshape(m, 1)))
    # Predict y = 1 when the hypothesis is at least 0.5
    rst = rst >= 0.5
    return rst

# Predict and accuracies
prob = sigmoid(np.array([1, 45, 85], dtype='float64').dot(result['x']))

print('For a student with scores 45 and 85, we predict an admission '
      'probability of %.3f' % prob)

print('Expected value: 0.775 +/- 0.002\n')

p = predict(result['x'], X)

print('Train Accuracy: %.1f%%' % (np.mean(p == y) * 100))

print('Expected accuracy (approx): 89.0%\n')

We can also check the result with scikit-learn:

from sklearn.metrics import classification_report

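# classification_report expects (y_true, y_pred); p holds the predictions computed above
print(classification_report(y.ravel().astype(int), p.ravel().astype(int)))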

Decision boundary

X × θ = 0

θ0 + x1θ1 + x2θ2 = 0
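Solving the boundary equation for x2 gives the straight line that the plotting code draws. The step is written out here for clarity and assumes θ2 is non-zero:

```latex
\theta_0 + \theta_1 x_1 + \theta_2 x_2 = 0
\quad\Longrightarrow\quad
x_2 = -\frac{\theta_0 + \theta_1 x_1}{\theta_2},
\qquad \theta_2 \neq 0
```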

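final_theta = result['x']  # parameters found by the optimizer above

x1 = np.arange(70, step=0.1)

x2 = -(final_theta[0] + x1*final_theta[1]) / final_theta[2]

fig, ax = plt.subplots(figsize=(8,5))

# X now carries the intercept column and y has shape (m, 1), so flatten y and skip column 0
positive = X[y.ravel() == 1, 1:]

negative = X[y.ravel() == 0, 1:]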

ax.scatter(positive[:, 0], positive[:, 1], c='b', label='Admitted')

ax.scatter(negative[:, 0], negative[:, 1], s=50, c='r', marker='x', label='Not Admitted')

ax.plot(x1, x2)

ax.set_xlim(30, 100)

ax.set_ylim(30, 100)

ax.set_xlabel('x1')

ax.set_ylabel('x2')

ax.set_title('Decision Boundary')

ax.legend()

plt.show()

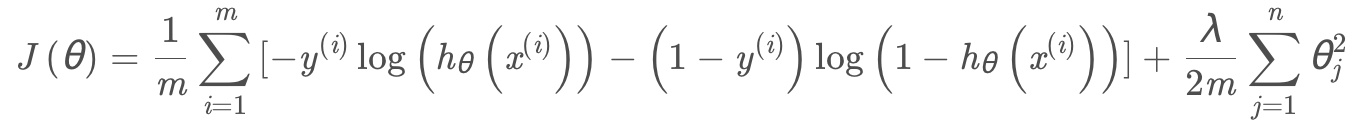

Regularized logistic regression

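Regularization reduces overfitting, that is, high variance. Intuitively, when the hyperparameter lambda is very large, the parameters θ are pushed toward small values, so the fitted curve becomes simpler and the variance drops.

Visualizing the data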

data2 = pd.read_csv('ex2data2.txt', names=['column1', 'column2', 'Accepted'])

data2.head()

def plot_data():
    positive = data2[data2['Accepted'].isin([1])]
    negative = data2[data2['Accepted'].isin([0])]
    fig, ax = plt.subplots(figsize=(8,5))
    ax.scatter(positive['column1'], positive['column2'], s=50, c='b', marker='o', label='Accepted')
    ax.scatter(negative['column1'], negative['column2'], s=50, c='r', marker='x', label='Rejected')
    ax.legend()
    ax.set_xlabel('Test 1 Score')
    ax.set_ylabel('Test 2 Score')

plot_data()

Feature mapping

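To fit the data better, we combine the two features x1 and x2 into additional features by mapping them onto all polynomial terms of x1 and x2 up to the sixth power. The model stays linear in these mapped features.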

for i in 0..power:
    for p in 0..i:
        output x1^(i-p) * x2^p

def feature_mapping(x1, x2, power):
    data = {}
    for i in np.arange(power + 1):
        for p in np.arange(i + 1):
            # Column "f{a}{b}" holds x1^a * x2^b, with a + b = i
            data["f{}{}".format(i - p, p)] = np.power(x1, i - p) * np.power(x2, p)
    return pd.DataFrame(data)
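To see how many features the mapping produces, the sketch below repeats only the naming loop of `feature_mapping`, without the data. The helper name `mapped_feature_names` is hypothetical.

```python
def mapped_feature_names(power):
    # Same double loop as feature_mapping, collecting only the column names
    names = []
    for i in range(power + 1):
        for p in range(i + 1):
            names.append("f{}{}".format(i - p, p))
    return names


names = mapped_feature_names(6)
print(len(names))   # 28
print(names[:6])    # ['f00', 'f10', 'f01', 'f20', 'f11', 'f02']
```

The first column, `f00`, is x1^0 * x2^0 = 1, so the mapped matrix already includes the intercept term and theta needs 28 entries.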

Regularized Cost function
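The formula for this section is not shown, so the following is a minimal sketch assuming the usual L2 penalty: the unregularized cost plus lambda / (2m) times the sum of squared parameters, leaving out the intercept θ0. The names `regularized_cost` and `lam` and the toy data are assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1 / (np.exp(-x) + 1)


def regularized_cost(theta, X, y, lam=1.0):
    # Assumed form: cross-entropy + lam / (2m) * sum(theta_j^2 for j >= 1)
    m = X.shape[0]
    theta = theta.ravel()
    y = y.ravel()
    h = sigmoid(X.dot(theta))
    data_term = -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))
    penalty = lam / (2 * m) * np.sum(theta[1:] ** 2)  # theta[0] is not penalized
    return float(data_term + penalty)


# Made-up toy data: intercept column plus one feature
X_demo = np.array([[1.0, 0.5], [1.0, -1.0], [1.0, 2.0], [1.0, -0.5]])
y_demo = np.array([1.0, 0.0, 1.0, 0.0])
theta_demo = np.array([0.3, 2.0])

plain = regularized_cost(theta_demo, X_demo, y_demo, lam=0.0)
penalized = regularized_cost(theta_demo, X_demo, y_demo, lam=1.0)

# The gap is the penalty alone: 1 / (2 * 4) * 2.0**2 = 0.5
print(penalized - plain)
```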

Regularized gradient descent
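The gradient for this section is not shown either. A minimal sketch, assuming the usual L2 penalty, adds (lambda / m) * θj to every component except the intercept. The names `regularized_gradient` and `lam` and the toy data are assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1 / (np.exp(-x) + 1)


def regularized_gradient(theta, X, y, lam=1.0):
    # Assumed form: unregularized gradient + (lam / m) * theta, with no penalty on theta[0]
    m = X.shape[0]
    theta = theta.ravel()
    y = y.ravel()
    grad = X.T.dot(sigmoid(X.dot(theta)) - y) / m
    reg = (lam / m) * theta  # new array, so theta itself is not modified
    reg[0] = 0.0
    return grad + reg


# Made-up toy data: intercept column plus one feature
X_demo = np.array([[1.0, 0.5], [1.0, -1.0], [1.0, 2.0], [1.0, -0.5]])
y_demo = np.array([1.0, 0.0, 1.0, 0.0])
theta_demo = np.array([0.3, 2.0])

diff = (regularized_gradient(theta_demo, X_demo, y_demo, lam=1.0)
        - regularized_gradient(theta_demo, X_demo, y_demo, lam=0.0))

# Only the non-intercept component changes: (1 / 4) * 2.0 = 0.5
print(diff)
```

A function with this signature could be passed as `jac` to `opt.minimize` with `args=(X, y, lam)`, in the same way the unregularized `gradient` is used earlier.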

Decision boundary

X × θ = 0

x = np.linspace(-1, 1.5, 250)

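xx, yy = np.meshgrid(x, x)

# DataFrame.as_matrix() was removed in pandas 1.0; to_numpy() replaces it
z = feature_mapping(xx.ravel(), yy.ravel(), 6).to_numpy()

z = z.dot(final_theta)

z = z.reshape(xx.shape)

plot_data()

# Draw only the z = 0 level, which is the decision boundary
plt.contour(xx, yy, z, levels=[0])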

plt.ylim(-.8, 1.2)
