PostgreSQL is a powerful, open source object-relational database system with over 30 years of active development that has earned it a strong reputation for reliability, feature robustness, and performance.

Why use Postgres?

Postgres has a lot of capability. Built using an object-relational model, it supports complex structures and a breadth of built-in and user-defined data types. It provides extensive data capacity and is trusted for its data integrity.

It comes with many features that help developers build applications, help administrators protect data integrity and build fault-tolerant environments, and help you manage your data no matter how big or small the dataset.

We will be using the famous Titanic dataset from Kaggle to predict whether the people aboard were likely to survive the sinking of the world’s greatest ship.

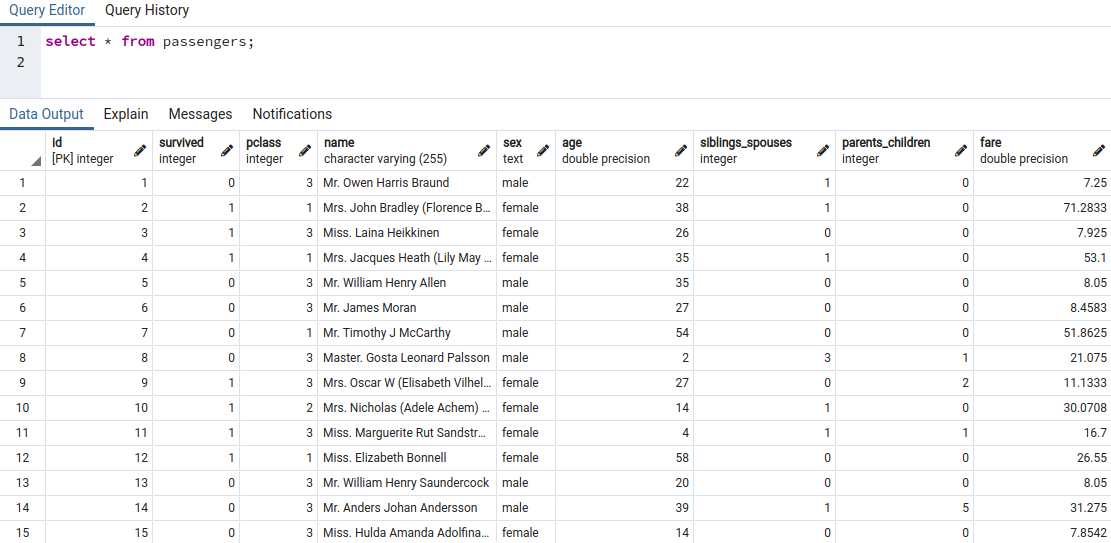

In the first step, make sure you have valid Postgres credentials and a database with the data already imported. Check the Kaggle website to download the CSV files: https://www.kaggle.com/c/titanic/data. The data should look something like this:
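
The post assumes the CSV is already in Postgres. One possible way to get it there is sketched below: read the CSV with pandas and write it with DataFrame.to_sql. The helper name load_csv_into_table, the table name titanic and the lower-casing of column names are assumptions, not the author's setup. For Postgres, to_sql needs a SQLAlchemy engine; to keep the demo runnable without a server, an in-memory stdlib sqlite3 connection stands in, and the CSV rows are made up.

```python
import io
import sqlite3

import pandas as pd


def load_csv_into_table(csv_source, con, table_name="titanic"):
    """Read a CSV (path or file-like object) and write it to `table_name`.

    `con` can be a SQLAlchemy engine pointing at Postgres or, as in the
    demo below, a stdlib sqlite3 connection. Returns the number of rows read.
    """
    df = pd.read_csv(csv_source)
    # Lower-case column names so Postgres can query them without double quotes
    df.columns = [c.lower() for c in df.columns]
    # Overwrite any existing table; don't store the pandas index as a column
    df.to_sql(table_name, con, if_exists="replace", index=False)
    return len(df)


# Demo: a tiny made-up CSV and an in-memory SQLite database as a stand-in
toy_csv = io.StringIO(
    "PassengerId,Survived,Pclass,Sex,Age\n"
    "1,0,3,male,22\n"
    "2,1,1,female,38\n"
)
con = sqlite3.connect(":memory:")
rows_written = load_csv_into_table(toy_csv, con)
print(rows_written)  # 2
```

With a real engine the call would be load_csv_into_table("train.csv", engine), where train.csv is one of the files downloaded from Kaggle.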

We’ll first import the required libraries; make sure each of them is installed with pip. I’m using a local Jupyter environment. Apart from the obvious ones, psycopg2 and sqlalchemy are crucial for creating a connection to Postgres, so pip install them as well. :)

Next, we’ll use create_engine from sqlalchemy, which is very simple to use.

Replace `<enter yours>` with your own credentials. The default port is 5432 and the default username is ‘postgres’. If the code prints ‘Connected to database’, you have successfully connected to your Postgres database.
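
The original connection code isn't shown, so here is a minimal sketch of that step, assuming the psycopg2 driver and a server on localhost. The helper names make_postgres_url and connect, and the example credentials, are hypothetical; create_engine and text are SQLAlchemy's own. Passwords containing characters such as @ or : must be percent-encoded inside the URL, which is why quote_plus is used.

```python
from urllib.parse import quote_plus


def make_postgres_url(user, password, host="localhost", port=5432, dbname="postgres"):
    """Build a SQLAlchemy URL for the psycopg2 driver.

    User name and password are percent-encoded so characters such as '@'
    or ':' don't break the URL. 5432 is Postgres's default port.
    """
    return (
        f"postgresql+psycopg2://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{dbname}"
    )


def connect(url):
    """Create an engine and run a trivial query to prove the connection works."""
    # Imported here so make_postgres_url() can be used without SQLAlchemy installed
    from sqlalchemy import create_engine, text

    engine = create_engine(url)
    with engine.connect() as conn:
        conn.execute(text("SELECT 1"))
    print("Connected to database")
    return engine


# Hypothetical credentials: replace them with your own
url = make_postgres_url("postgres", "my-password", dbname="titanic")
# engine = connect(url)  # needs a running server plus sqlalchemy and psycopg2
```

create_engine alone does not open a connection, so the SELECT 1 round trip is what actually confirms the credentials work.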

Next, let’s convert the query result set to a pandas dataframe.
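
A sketch of that conversion with pandas.read_sql, which takes a SQL string plus either a SQLAlchemy engine (as with Postgres) or a stdlib sqlite3 connection. The helper name query_to_dataframe, the table name and the columns are assumptions; the demo uses an in-memory SQLite table with a few made-up rows so it runs without a server.

```python
import sqlite3

import pandas as pd


def query_to_dataframe(con, query="SELECT * FROM titanic"):
    """Run a SELECT and return the result set as a pandas DataFrame."""
    return pd.read_sql(query, con)


# Demo: an in-memory SQLite table with made-up rows stands in for Postgres
con = sqlite3.connect(":memory:")
con.execute(
    "CREATE TABLE titanic (id INTEGER, survived INTEGER, pclass INTEGER, sex TEXT, age REAL)"
)
con.executemany(
    "INSERT INTO titanic VALUES (?, ?, ?, ?, ?)",
    [(0, 0, 3, "male", 22.0), (1, 1, 1, "female", 38.0), (2, 1, 3, "female", 26.0)],
)
df = query_to_dataframe(con)
print(df.shape)  # (3, 5)
```

Against Postgres the same call would be query_to_dataframe(engine), and df.shape gives the row and column counts.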

As you can see, the dataframe has 887 rows and 9 columns, the first of which is id.

In the next section, let’s try to figure out whether any of the variables is directly associated with the survival rate. We’ll check whether sex, passenger class, and having family aboard have anything to do with a passenger’s chance of surviving.
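
A sketch of this kind of check: because survived is 0/1, its mean within each group is that group's survival rate. The helper name survival_rate_by, the column names (sex, pclass, sibsp, survived) and the toy rows are assumptions, so the rates printed here are not the article's figures.

```python
import pandas as pd


def survival_rate_by(df, column, target="survived"):
    """Fraction of passengers who survived in each group of `column`.

    Because `target` is 0/1, its mean within a group is the survival rate.
    """
    return df.groupby(column)[target].mean()


# Made-up rows, only to show the mechanics
toy = pd.DataFrame({
    "sex": ["female", "female", "male", "male", "male"],
    "pclass": [1, 3, 1, 3, 3],
    "sibsp": [1, 0, 0, 1, 0],
    "survived": [1, 1, 1, 0, 0],
})
for col in ["sex", "pclass", "sibsp"]:
    print(survival_rate_by(toy, col).round(2), end="\n\n")
```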

As you can see, 74% of the women aboard survived, but only 19% of the men did. Passenger class also has an enormous effect. Having siblings or spouses aboard does not appear to be correlated with survival. Let’s take a look at a visual correlation between age and survival.

A significant number of toddlers died in the accident. Most of the passengers were middle-aged.
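
The plot itself isn't reproduced here. As a numeric counterpart, this sketch buckets ages with pandas.cut and reports the passenger count and survival rate per band. The helper name survival_by_age_band, the band edges and the toy rows are arbitrary assumptions.

```python
import pandas as pd


def survival_by_age_band(df, bins=(0, 5, 18, 40, 60, 100)):
    """Passenger count and survival rate per age band.

    pd.cut makes right-closed intervals such as (0, 5]; rows with a missing
    age get no band and are left out. observed=True drops empty bands.
    """
    bands = pd.cut(df["age"], bins=list(bins))
    return df.groupby(bands, observed=True)["survived"].agg(["count", "mean"])


# Made-up rows, only to show the mechanics
toy = pd.DataFrame({
    "age": [2, 4, 30, 35, 50, None],
    "survived": [0, 1, 1, 0, 1, 0],
})
print(survival_by_age_band(toy))
```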

Since computers like numbers more than words, I have converted sex into a binary (0/1) feature.
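
A sketch of that conversion with Series.map. The post doesn't say which value was mapped to 1, so male → 0, female → 1 is an assumption, as is the helper name encode_sex. Working on a copy leaves the original dataframe and every other column untouched.

```python
import pandas as pd

# Assumed encoding: the post doesn't say which value the author used for each sex
SEX_TO_INT = {"male": 0, "female": 1}


def encode_sex(df):
    """Return a copy of df with 'sex' turned into 0/1; other columns are untouched."""
    out = df.copy()
    out["sex"] = out["sex"].map(SEX_TO_INT)
    return out


toy = pd.DataFrame({"sex": ["male", "female", "female"], "age": [22.0, 38.0, 26.0]})
encoded = encode_sex(toy)
print(encoded["sex"].tolist())  # [0, 1, 1]
```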

Apart from that column, the data remains the same.

Now let’s dive into preprocessing for classification.

I used sklearn’s train_test_split to create training and test datasets.

We have to drop the ‘survived’ column from the features and keep it separately as the target; otherwise the model would be given the very answer it is supposed to predict.
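
A sketch of separating the label from the features and then splitting both with train_test_split. The helper name split_features_target, the 80/20 split, random_state=42 and the toy rows are assumptions, not the author's settings.

```python
import pandas as pd
from sklearn.model_selection import train_test_split


def split_features_target(df, target="survived", test_size=0.2, random_state=42):
    """Separate the label from the features, then split both into train and test sets.

    Returns X_train, X_test, y_train, y_test (train_test_split's order).
    """
    X = df.drop(columns=[target])  # features: every column except the label
    y = df[target]                 # label the model has to predict
    return train_test_split(X, y, test_size=test_size, random_state=random_state)


# Made-up, already-encoded rows
toy = pd.DataFrame({
    "pclass": [1, 3, 2, 3, 1, 3, 2, 3, 1, 3],
    "sex": [1, 0, 1, 0, 0, 0, 1, 0, 1, 0],
    "survived": [1, 0, 1, 0, 1, 0, 1, 0, 1, 0],
})
X_train, X_test, y_train, y_test = split_features_target(toy)
print(X_train.shape, X_test.shape)  # (8, 2) (2, 2)
```

Fixing random_state makes the split reproducible, so reruns of the notebook score the model on the same held-out rows.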

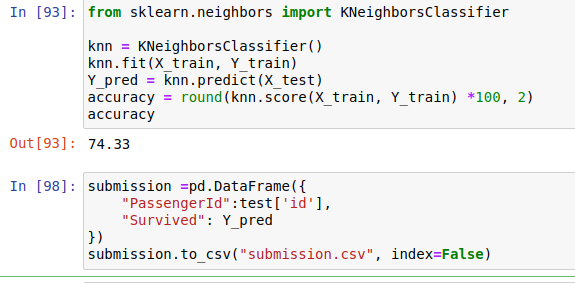

Finally, we fit the model on the training data and got an accuracy of 74.33%, which is not great, but not bad either. Let’s save the predicted values to a CSV file called ‘submission.csv’. It will have only two columns: passengerId and a boolean indicating survival.
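
A sketch of the fit, score and save step. The post doesn't name the classifier behind the 74.33 figure, so LogisticRegression is a stand-in; the helper name fit_and_submit and the toy data are hypothetical, and the demo's accuracy says nothing about the article's result. A StringIO buffer replaces the real submission.csv file so the demo writes nothing to disk.

```python
import io

import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score


def fit_and_submit(X_train, y_train, X_test, y_test, passenger_ids, path_or_buf="submission.csv"):
    """Fit a classifier, score it on the test set, and save the predictions.

    Returns the accuracy as a percentage and the submission DataFrame.
    """
    model = LogisticRegression(max_iter=1000)  # stand-in; the post's model is unknown
    model.fit(X_train, y_train)
    predictions = model.predict(X_test)
    accuracy = accuracy_score(y_test, predictions) * 100
    submission = pd.DataFrame({
        "passengerId": passenger_ids,
        "survived": predictions.astype(bool),  # boolean survival flag
    })
    submission.to_csv(path_or_buf, index=False)
    return accuracy, submission


# Made-up data; a StringIO buffer stands in for the real submission.csv file
X_train = pd.DataFrame({"sex": [0, 0, 1, 1]})
y_train = pd.Series([0, 0, 1, 1])
X_test = pd.DataFrame({"sex": [1, 0]})
y_test = pd.Series([1, 0])
buf = io.StringIO()
accuracy, submission = fit_and_submit(X_train, y_train, X_test, y_test, [101, 102], buf)
print(f"{accuracy:.2f}")
print(buf.getvalue())
```

Calling it without the last argument writes submission.csv to the notebook's working directory.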

Summary:

- use Postgres as a transactional database management system for data pipelines.

- have fun manipulating data with pandas and visualisation libraries such as matplotlib and seaborn.

- make predictions using the machine learning algorithms provided by scikit-learn and tensorflow.

Thanks ;)

Original article: https://medium.com/@cvetko.tim/titanic-dataset-with-postgres-and-jupyter-notebook-69073c4a67e6
