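I. Cluster installation prerequisites

In this experiment Spark is installed on the machines from [Getting Started with Hadoop (2): Cluster Installation], where the JDK, Hadoop, SSH, and the network configuration are already set up.

Virtual machine and operating system configuration that Spark depends on

Environment: ubuntu14 + spark-2.4.4-bin-hadoop2.6 + jdk1.8 + ssh

Virtual machine: (vmware10)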
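Before going further, it can help to confirm that the prerequisite tools are actually available on each machine. The following is a minimal sketch, assuming that `java`, `hadoop`, `ssh`, and `scp` are expected on the `PATH`; the helper name `missing_tools` is made up for this illustration.

```shell
# Print the names of any commands from the argument list that are not on PATH.
# (missing_tools is a hypothetical helper, not part of Spark or Hadoop.)
missing_tools() {
  missing=""
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      missing="$missing $tool"
    fi
  done
  # Trim the leading space before printing.
  echo "${missing# }"
}

# Tools this walkthrough relies on; prints an empty line when all are present.
missing_tools java hadoop ssh scp
```

If a tool is installed but not on the `PATH` (for example, Hadoop started through a full path), it will be reported as missing even though the setup may still work.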

II. Standalone installation environment setup

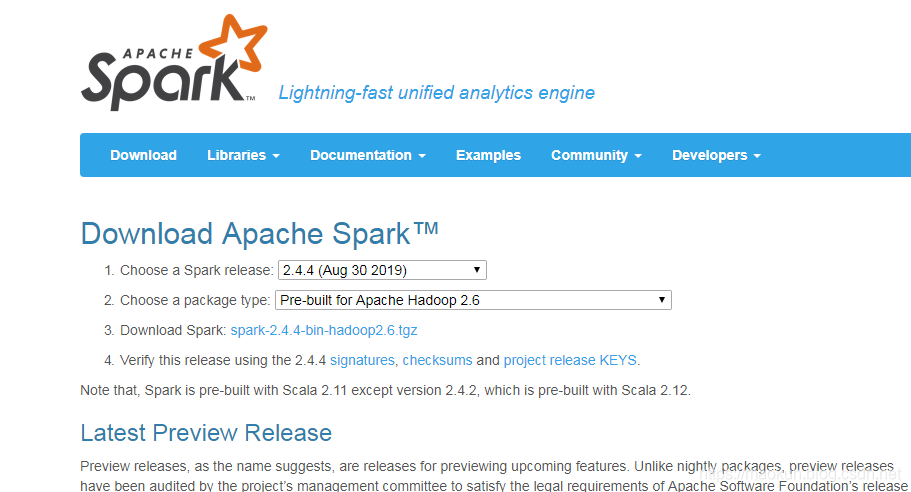

(1) Download Spark

Get it from the Spark website. Because I use hadoop2.6, I downloaded spark-2.4.4-bin-hadoop2.6.

(2) Upload to the Linux system and extract

tar xvf spark-2.4.4-bin-hadoop2.6.tar.gz

# Put it in the shared software directory

mv spark-2.4.4-bin-hadoop2.6 software/

# Create a symlink as a short alias

ln -s software/spark-2.4.4-bin-hadoop2.6 spark-2.4.4

(3) Configure spark-env.sh

Note: watch out for stray spaces in the file name.

cd ~/spark-2.4.4/conf/

cp spark-env.sh.template spark-env.sh

vim spark-env.sh

Edit spark-env.sh and add the following:

SPARK_MASTER_PORT="7077"

SPARK_MASTER_IP="hadoop01"

# Number of worker instances per machine

SPARK_WORKER_INSTANCES="1"

# Spark master URL

MASTER="spark://${SPARK_MASTER_IP}:${SPARK_MASTER_PORT}"

export JAVA_HOME=/home/mk/jdk1.8

(4) Configure slaves

cd ~/spark-2.4.4/conf/

cp slaves.template slaves

vim slaves

Edit slaves and add the following:

hadoop01

hadoop02

hadoop03
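Since the start scripts log in to every host listed in slaves, it is worth checking that each one accepts a passwordless SSH login before starting the cluster. A rough sketch of such a check follows; `list_workers` and `check_workers` are hypothetical helper names, and the `BatchMode` and `ConnectTimeout` settings are my choice rather than anything the original setup prescribes.

```shell
# Print the host names listed in a slaves file, skipping comments and blank lines.
list_workers() {
  grep -v '^[[:space:]]*#' "$1" | grep -v '^[[:space:]]*$'
}

# Try a non-interactive SSH login to each listed host and report the result.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_workers() {
  for host in $(list_workers "$1"); do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
      echo "$host: ok"
    else
      echo "$host: unreachable"
    fi
  done
}
```

With the paths used in this post, it would be called as `check_workers ~/spark-2.4.4/conf/slaves`; any host reported as unreachable should be fixed before moving on.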

(5) Copy to hadoop02 and hadoop03

scp -r /home/mk/software/spark-2.4.4-bin-hadoop2.6 mk@hadoop02:/home/mk/software/

scp -r /home/mk/software/spark-2.4.4-bin-hadoop2.6 mk@hadoop03:/home/mk/software/

# Alias on the hadoop02 machine

ln -s software/spark-2.4.4-bin-hadoop2.6 spark-2.4.4

# Alias on the hadoop03 machine

ln -s software/spark-2.4.4-bin-hadoop2.6 spark-2.4.4
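The copy and alias steps are the same for every worker, so they can be generated in a loop. The sketch below only prints the commands (a dry run) so they can be reviewed first; `deploy_commands` is a made-up helper, and running `ln -s` through `ssh` assumes the remote login lands in /home/mk, as the manual steps do.

```shell
# Paths taken from the steps above.
SPARK_DIR=/home/mk/software/spark-2.4.4-bin-hadoop2.6
LINK_NAME=spark-2.4.4

# Print the two commands that copy Spark to one host and create the alias there.
# The ln -s command is run via ssh, so it executes in the remote home directory.
deploy_commands() {
  echo "scp -r $SPARK_DIR mk@$1:/home/mk/software/"
  echo "ssh mk@$1 ln -s software/spark-2.4.4-bin-hadoop2.6 $LINK_NAME"
}

# Dry run: list the commands for both workers without executing them.
for host in hadoop02 hadoop03; do
  deploy_commands "$host"
done
```

Once the printed commands look right, saving the block as a script and piping its output to `sh` would execute them in order.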

III. Start Spark

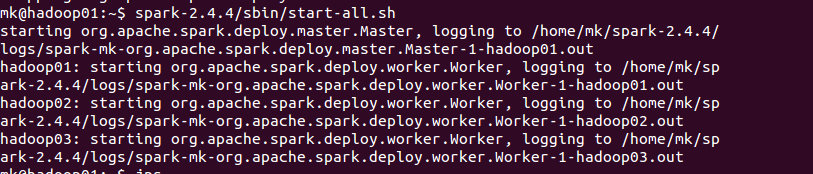

(1) Start

~/spark-2.4.4/sbin/start-all.sh

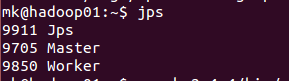

Check on hadoop01

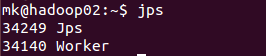

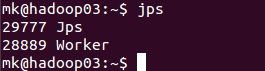

Check on hadoop02

Check on hadoop03

Startup succeeded.

Open the Spark UI in a browser at http://hadoop01:8080/
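The per-host checks can also be scripted by looking at the JVM process list on each machine. This is a sketch under a few assumptions: that hadoop01 runs both a Master and a Worker (it appears in slaves) while the other hosts run only a Worker, and that `jps` lives under the JDK path from spark-env.sh. The helper names `expected_daemons` and `missing_daemons` are invented for the example.

```shell
# Map each host to the Spark daemons expected on it in this setup.
expected_daemons() {
  case "$1" in
    hadoop01) echo "Master Worker" ;;
    *)        echo "Worker" ;;
  esac
}

# Given a host name and the text output of jps from that host,
# print one line for every expected daemon that does not appear in it.
missing_daemons() {
  for daemon in $(expected_daemons "$1"); do
    echo "$2" | grep -qw "$daemon" || echo "$1: $daemon not running"
  done
}
```

A possible invocation for one host is `missing_daemons hadoop02 "$(ssh hadoop02 /home/mk/jdk1.8/bin/jps)"`; no output means every expected daemon was found.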

(2) Run the Spark Pi example

spark-2.4.4/bin/run-example --master spark://hadoop01:7077 SparkPi

Pi is roughly 3.145115725578628
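The result line is easy to miss among Spark's log messages. A small filter like the one below pulls out just the number; `extract_pi` is a hypothetical helper, and the sketch assumes the default logging setup, where log messages go to stderr and the result line goes to stdout.

```shell
# Read run-example output on stdin and print only the estimated value of Pi.
# Lines that do not start with "Pi is roughly " are dropped.
extract_pi() {
  sed -n 's/^Pi is roughly //p'
}
```

For example: `~/spark-2.4.4/bin/run-example --master spark://hadoop01:7077 SparkPi 2>/dev/null | extract_pi`. The value differs slightly from run to run because the example estimates Pi by random sampling.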

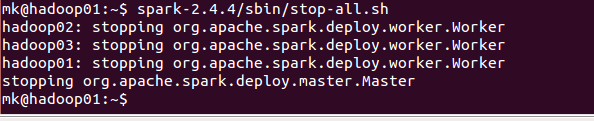

(3) Stop Spark

~/spark-2.4.4/sbin/stop-all.sh
