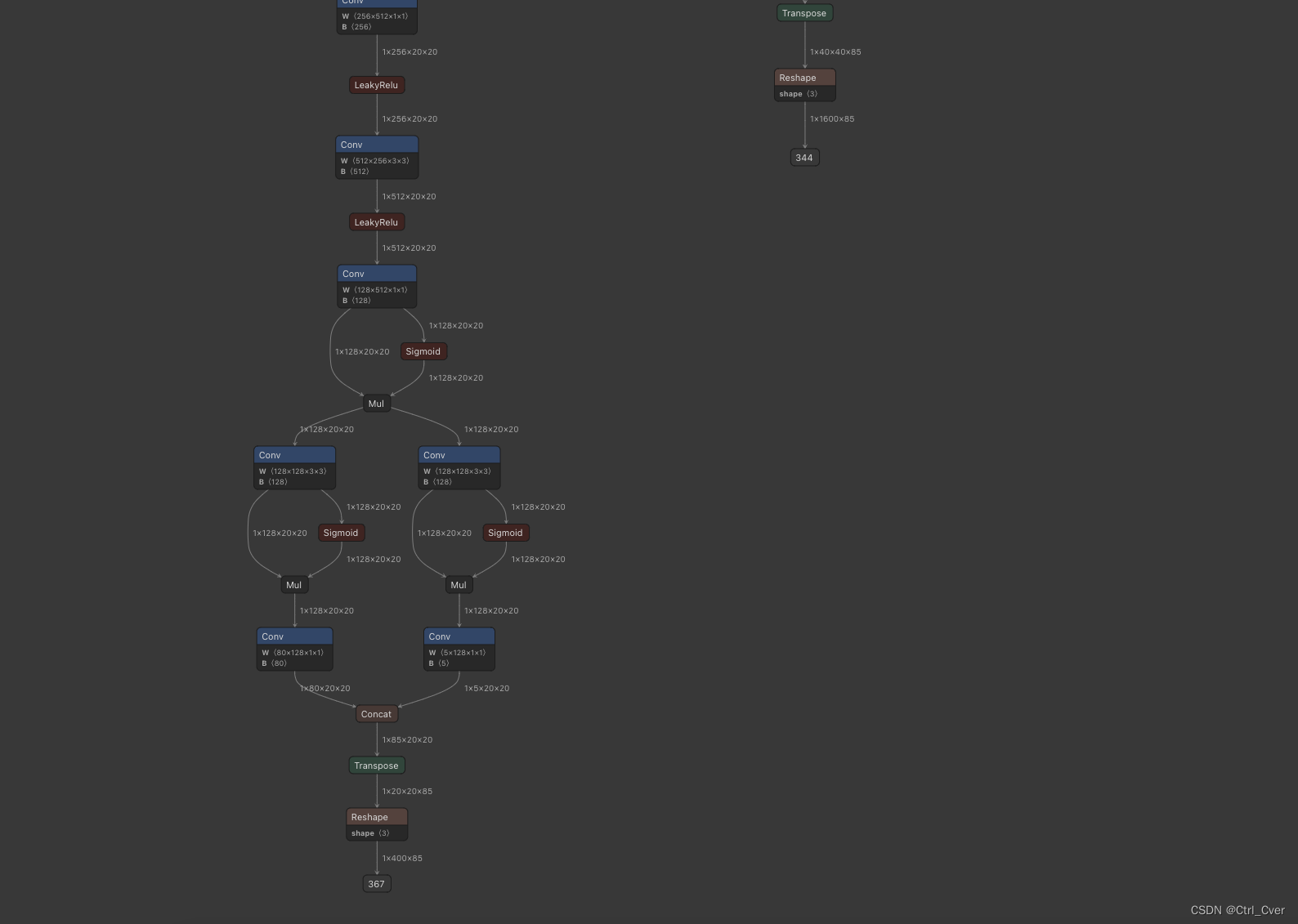

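When exporting to ONNX, you can comment out the post-processing part. The figure shows how I handle it: `myexport=1` is set only while exporting to ONNX. After export, the ONNX graph looks as shown in the figure. This way, the later model conversion no longer fails with `do not support dimension size > 4`. Once you have the ONNX model, write the post-processing by hand. As a bonus, here is the ONNX inference code:

    import onnxruntime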

    import numpy as np
    import cv2
    import os
    import tqdm

    onnx_model_path = 'my.onnx'
    sess = onnxruntime.InferenceSession(onnx_model_path)

    # Strides and feature-map sizes of the three heads for a 640x640 input
    strides = [8, 16, 32]
    featuremaps = [80, 40, 20]

    coco_classes = [
        'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck',
        'boat', 'traffic light', 'fire hydrant', 'stop sign', 'parking meter', 'bench',
        'bird', 'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear', 'zebra',
        'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',
        'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove',
        'skateboard', 'surfboard', 'tennis racket', 'bottle', 'wine glass', 'cup',
        'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich', 'orange',
        'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',
        'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse',
        'remote', 'keyboard', 'cell phone', 'microwave', 'oven', 'toaster', 'sink',
        'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',
        'hair drier', 'toothbrush',
    ]


    def sigmoid(x):
        return 1 / (1 + np.exp(-x))


    def get_color(idx):
        idx += 3
        return (37 * idx % 255, 17 * idx % 255, 29 * idx % 255)


    def onnx_infer(img_path):
        img = cv2.imread(img_path)
        if img is None:  # skip files that OpenCV cannot read
            return
        img = cv2.resize(img, (640, 640))
        # HWC -> NCHW, float32
        input_data = np.transpose(img, (2, 0, 1))[None, ...]
        input_data = np.array(input_data, dtype=np.float32)
        output_data = sess.run(['output_0', '344', '367'], {'input_0': input_data})

        posted_output = []
        for i, output in enumerate(output_data):
            # output has shape (batch, featuremaps[i] ** 2, 85)
            output[..., 4:] = sigmoid(output[..., 4:])
            # Cell coordinates in row-major order: x varies fastest
            y = np.arange(featuremaps[i]).repeat(featuremaps[i]).reshape(-1, 1)
            x = np.reshape([np.arange(featuremaps[i]) for _ in range(featuremaps[i])], (-1, 1))
            grid = np.concatenate([x, y], axis=1)
            output[0, :, 0:2] = (output[0, :, 0:2] + grid) * strides[i]
            output[0, :, 2:4] = np.exp(output[0, :, 2:4]) * strides[i]
            posted_output.append(output[0])
        posted_output = np.concatenate(posted_output)

        # (cx, cy, w, h) -> (x0, y0, x1, y1)
        box_corner = np.zeros_like(posted_output)
        box_corner[:, 0] = posted_output[:, 0] - posted_output[:, 2] / 2
        box_corner[:, 1] = posted_output[:, 1] - posted_output[:, 3] / 2
        box_corner[:, 2] = posted_output[:, 0] + posted_output[:, 2] / 2
        box_corner[:, 3] = posted_output[:, 1] + posted_output[:, 3] / 2
        posted_output[:, :4] = box_corner[:, :4]

        class_conf = np.max(posted_output[:, 5:85], axis=1)
        class_pred = np.argmax(posted_output[:, 5:85], axis=1)
        conf_mask = posted_output[:, 4] * class_conf >= 0.25
        detections = posted_output[conf_mask]
        cls_scores = class_conf[conf_mask]
        cls_cat = class_pred[conf_mask]

        # cv2.dnn.NMSBoxes expects boxes as (x, y, w, h), so convert the corners back
        nms_boxes = detections[:, :4].copy()
        nms_boxes[:, 2:4] -= nms_boxes[:, 0:2]
        indices = cv2.dnn.NMSBoxes(nms_boxes.tolist(), (detections[:, 4] * cls_scores).tolist(),
                                   score_threshold=0.1, nms_threshold=0.55)
        # Older OpenCV versions return shape (N, 1); an empty result may be a tuple
        indices = np.array(indices, dtype=np.int64).reshape(-1)

        ans_bbox = detections[indices][:, :4]
        ans_conf = cls_scores[indices] * detections[indices][:, 4]
        ans_cat = cls_cat[indices]
        for bbox, conf, cat in zip(ans_bbox, ans_conf, ans_cat):
            x0, y0, x1, y1 = bbox
            cv2.rectangle(img, (int(x0), int(y0)), (int(x1), int(y1)), get_color(int(cat)), 2, 2)
            label = f'{coco_classes[int(cat)]}: {conf:.2f}'
            cv2.putText(img, label, (int(x0), int(y0) - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5,
                        get_color(int(cat)), 2)
        os.makedirs('onnx_infer', exist_ok=True)  # cv2.imwrite does not create directories
        cv2.imwrite(os.path.join('onnx_infer', os.path.basename(img_path)), img)


    if __name__ == '__main__':
        data_root = 'imgs/'
        imgs = os.listdir(data_root)
        for img in tqdm.tqdm(imgs):
            onnx_infer(os.path.join(data_root, img))
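To see what the hand-written decode step is doing, it can help to pull it out and run it on a toy input. The following is only a minimal sketch under assumptions: `decode_level` and `to_corners` are hypothetical helper names that do not appear in the script, and the input is assumed to be a single image's raw head output of shape `(fmap_size * fmap_size, 5 + num_classes)` laid out as `(cx, cy, w, h, obj, classes...)`, as in the script.

```python
import numpy as np


def decode_level(raw, fmap_size, stride):
    """Decode one raw head output (hypothetical helper, not from the original script)."""
    out = raw.astype(np.float32).copy()
    # Cell coordinates in row-major order, x varying fastest (same order as the script's grid).
    ys, xs = np.meshgrid(np.arange(fmap_size), np.arange(fmap_size), indexing="ij")
    grid = np.stack([xs.ravel(), ys.ravel()], axis=1)
    out[:, 0:2] = (out[:, 0:2] + grid) * stride     # box centre in input pixels
    out[:, 2:4] = np.exp(out[:, 2:4]) * stride      # box width and height in input pixels
    out[:, 4:] = 1.0 / (1.0 + np.exp(-out[:, 4:]))  # objectness and class scores
    return out


def to_corners(boxes):
    """Convert (cx, cy, w, h) rows to (x0, y0, x1, y1) rows."""
    corners = np.empty_like(boxes)
    corners[:, 0:2] = boxes[:, 0:2] - boxes[:, 2:4] / 2
    corners[:, 2:4] = boxes[:, 0:2] + boxes[:, 2:4] / 2
    return corners


# Toy input: a 2x2 feature map with stride 8 and all-zero raw predictions.
raw = np.zeros((4, 5 + 80), dtype=np.float32)
decoded = decode_level(raw, fmap_size=2, stride=8)
corners = to_corners(decoded[:, :4])
print(decoded[:, :4])
print(corners)
```

With all-zero predictions, each box centre sits at its cell index times the stride, each width and height equals the stride (since `exp(0) = 1`), and every score is 0.5, which makes the role of the grid offset and the stride easy to verify by eye.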
