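Contents

I. Overview

II. Installing and deploying the Metrics-Server component

1. Download the Metrics-Server manifest

2. Edit the Metrics-Server manifest

3. Verify that Metrics-Server is installed

4. Test that the top command works

III. Horizontal pod autoscaling with an HPA resource (automatic scale-out and scale-in)

1. Write the Deployment manifest

2. Write the HPA manifest

3. Inspect the HPA resource

IV. Load testing

1. Exec into the pod, then install and run the stress tool

2. Check the load reported by the HPA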

I. Overview

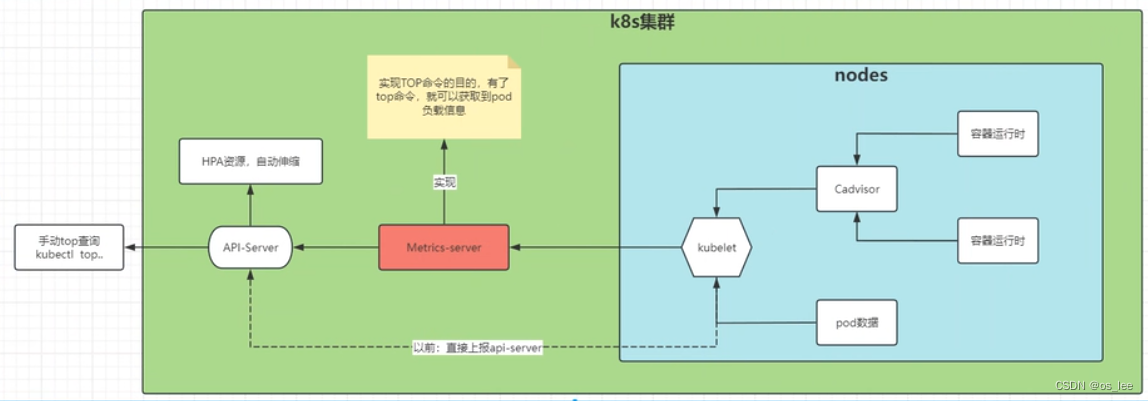

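Purpose of the Metrics-Server component: collect load data (CPU and memory usage) for pods, nodes and other objects in the cluster.

Purpose of the HPA resource: use the pod load data collected by metrics-server to scale the number of pods automatically.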
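The Kubernetes HPA documentation describes the core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made while the ratio stays within a tolerance (0.1 by default). The sketch below implements only that rule for a single CPU-utilization metric and clamps the result to minReplicas/maxReplicas; it ignores pod readiness, missing metrics, stabilization windows and scaling policies. The function name `desired_replicas` is hypothetical.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     min_replicas: int,
                     max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule for a single CPU-utilization metric."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        # Scale proportionally to how far usage is from the target.
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the [minReplicas, maxReplicas] range from the HPA spec.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(2, 150, 95, 2, 10))  # 4
    print(desired_replicas(3, 100, 95, 2, 10))  # 3 (within tolerance)
```

The tolerance keeps the HPA from flapping when usage hovers just around the target.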

References:

Resource metrics pipeline | Kubernetes

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/metrics-server

GitHub - kubernetes-sigs/metrics-server: Scalable and efficient source of container resource metrics for Kubernetes built-in autoscaling pipelines.

II. Installing and deploying the Metrics-Server component

In short, installing metrics-server is what makes the `kubectl top` command work in the cluster.

1. Download the Metrics-Server manifest

```
[root@k8s1 k8s]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/high-availability-1.21+.yaml
```

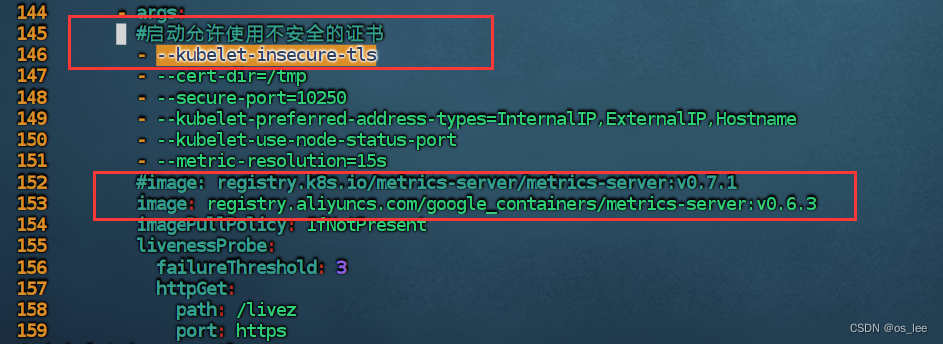

2. Edit the Metrics-Server manifest

The main edits to the upstream file are: `--kubelet-insecure-tls` is added to the container arguments (metrics-server then skips verifying the kubelets' serving certificates, which is intended for test environments), and the image `registry.k8s.io/metrics-server/metrics-server:v0.7.1` is replaced with the Aliyun mirror `registry.aliyuncs.com/google_containers/metrics-server:v0.6.3`.

```
[root@k8s1 k8s]# vim high-availability-1.21+.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  replicas: 2
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                k8s-app: metrics-server
            namespaces:
            - kube-system
            topologyKey: kubernetes.io/hostname
      containers:
      - args:
        # Allow insecure (unverified) kubelet certificates
        - --kubelet-insecure-tls
        - --cert-dir=/tmp
        - --secure-port=10250
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        #image: registry.k8s.io/metrics-server/metrics-server:v0.7.1
        image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 10250
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - ALL
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
          seccompProfile:
            type: RuntimeDefault
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  minAvailable: 1
  selector:
    matchLabels:
      k8s-app: metrics-server
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```

```
[root@k8s1 k8s]# kubectl apply -f high-availability-1.21+.yaml
```

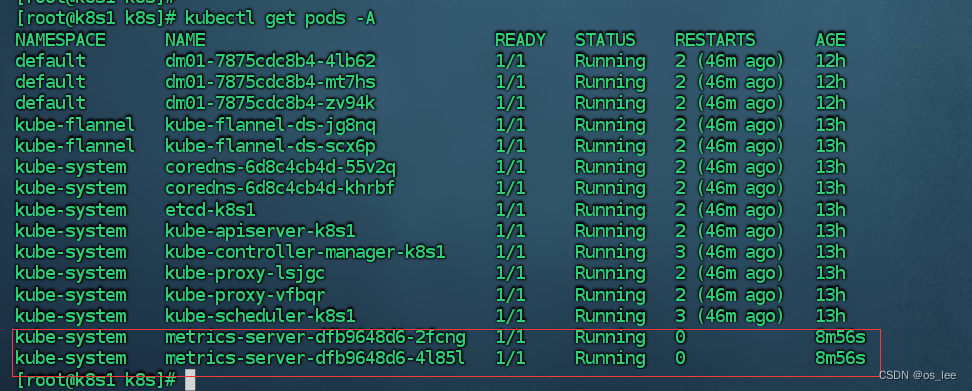

3. Verify that Metrics-Server is installed
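One way to verify the installation is to check that the `v1beta1.metrics.k8s.io` APIService registered by the manifest reports the condition `Available=True` (the same information `kubectl get apiservice v1beta1.metrics.k8s.io` shows in its AVAILABLE column); `kubectl -n kube-system get pods -l k8s-app=metrics-server` should also list both replicas as Running. The sketch below does the APIService check from Python, assuming `kubectl` is on PATH and configured for this cluster; the helper names are hypothetical.

```python
import json
import subprocess


def apiservice_available(apiservice: dict) -> bool:
    """Return True if an APIService object reports condition Available=True."""
    conditions = apiservice.get("status", {}).get("conditions", [])
    return any(c.get("type") == "Available" and c.get("status") == "True"
               for c in conditions)


def fetch_apiservice(name: str = "v1beta1.metrics.k8s.io") -> dict:
    """Read the APIService object as JSON through kubectl."""
    out = subprocess.run(
        ["kubectl", "get", "apiservice", name, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


if __name__ == "__main__":
    try:
        obj = fetch_apiservice()
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        # kubectl is missing, or the APIService has not been created yet
        print("could not read APIService:", err)
    else:
        print("metrics API available:", apiservice_available(obj))
```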

4. Test that the top command works

```
[root@k8s1 k8s]# kubectl top node
NAME   CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s1   734m         18%    867Mi           11%
k8s2   118m         2%     493Mi           6%
[root@k8s1 k8s]# kubectl top pods -A
NAMESPACE      NAME                             CPU(cores)   MEMORY(bytes)
default        dm01-7875cdc8b4-4lb62            0m           4Mi
default        dm01-7875cdc8b4-mt7hs            0m           2Mi
default        dm01-7875cdc8b4-zv94k            0m           2Mi
kube-flannel   kube-flannel-ds-jg8nq            7m           20Mi
kube-flannel   kube-flannel-ds-scx6p            7m           22Mi
kube-system    coredns-6d8c4cb4d-55v2q          2m           18Mi
kube-system    coredns-6d8c4cb4d-khrbf          2m           18Mi
kube-system    etcd-k8s1                        14m          66Mi
kube-system    kube-apiserver-k8s1              55m          175Mi
kube-system    kube-controller-manager-k8s1     18m          50Mi
kube-system    kube-proxy-lsjgc                 1m           19Mi
kube-system    kube-proxy-vfbqr                 1m           17Mi
kube-system    kube-scheduler-k8s1              4m           21Mi
kube-system    metrics-server-dfb9648d6-2fcng   4m           19Mi
kube-system    metrics-server-dfb9648d6-4l85l   4m           22Mi
```

III. Horizontal pod autoscaling with an HPA resource (automatic scale-out and scale-in)

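- When resource usage rises above the target, the HPA scales out automatically, but never beyond maxReplicas.

- Scale-out has no waiting period: as soon as the observed usage exceeds the threshold, new pods are created at the next HPA sync (every 15 seconds by default).

- When usage falls back below the threshold, the HPA waits before removing pods; by default this delay is about 5 minutes.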
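The 5-minute delay on scale-in comes from the scale-down stabilization window (`spec.behavior.scaleDown.stabilizationWindowSeconds` in `autoscaling/v2`, 300 seconds by default): the controller applies the highest replica recommendation it computed during that window, so pods are only removed once every recent recommendation agrees. Scale-up has no stabilization window by default. The sketch below models only that window rule; the function name and the timeline in the example are hypothetical, and timestamps are assumed to be in seconds.

```python
def stabilized_scale_down(recommendations, now, window_seconds=300):
    """Replica count allowed under a scale-down stabilization window.

    recommendations: list of (timestamp_seconds, desired_replicas) pairs,
    including the recommendation computed at time `now`.
    Returns the highest recommendation from the last `window_seconds`
    seconds, so the workload only shrinks once every recent
    recommendation agrees on the lower count.
    """
    recent = [replicas for t, replicas in recommendations
              if now - t <= window_seconds]
    if not recent:
        raise ValueError("include the current recommendation")
    return max(recent)


if __name__ == "__main__":
    # Hypothetical timeline: the last recommendation of 3 is made at t=0,
    # every later recommendation is 2.
    history = [(0, 3), (120, 2), (240, 2)]
    print(stabilized_scale_down(history, now=240))  # 3: the 3 is still in the window
    history.append((310, 2))
    print(stabilized_scale_down(history, now=310))  # 2: the 3 is older than 300 s
```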

1. Write the Deployment manifest

```
[root@k8s1 k8s]# cat deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dm-hpa
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s: oslee
  template:
    metadata:
      labels:
        k8s: oslee
    spec:
      containers:
      - name: c1
        image: centos:7
        command:
        - tail
        - -f
        - /etc/hosts
        resources:
          requests:
            cpu: "50m"
          limits:
            cpu: "150m"
[root@k8s1 k8s]# kubectl apply -f deploy.yaml
deployment.apps/dm-hpa created
```
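The CPU request in this Deployment matters: the HPA measures CPU utilization as a percentage of the pods' CPU *request* (50m here), not of the 150m limit, and roughly computes it as total usage divided by total requests across the pods. The sketch below shows that arithmetic, assuming one container per pod and usage given in millicores; the function name is hypothetical.

```python
def cpu_utilization_percent(usage_millicores, request_millicores):
    """Average CPU utilization of a set of pods, relative to their requests.

    Total usage divided by total requests, times 100, as an integer percent.
    """
    if len(usage_millicores) != len(request_millicores):
        raise ValueError("need one request per pod")
    total_usage = sum(usage_millicores)
    total_request = sum(request_millicores)
    return (total_usage * 100) // total_request


if __name__ == "__main__":
    # One pod pinned at its 150m limit, relative to a 50m request.
    print(cpu_utilization_percent([150], [50]))                 # 300
    # The same busy pod plus two idle pods.
    print(cpu_utilization_percent([150, 0, 0], [50, 50, 50]))  # 100
```

So a single pod throttled at its limit can report up to 300%, while adding idle replicas pulls the average down.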

2. Write the HPA manifest

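```
[root@k8s1 k8s]# cat hpa.yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: hpa-tools
spec:
  # Maximum number of pods (upper bound for scale-out)
  maxReplicas: 10
  # Minimum number of pods (lower bound for scale-in)
  minReplicas: 2
  # The workload this autoscaler scales
  scaleTargetRef:
    # API version of the target resource
    apiVersion: "apps/v1"
    # Kind of the target resource
    kind: Deployment
    # Name of the target resource (its metadata.name)
    name: dm-hpa
  # Target average CPU utilization, as a percentage of the pods' CPU request;
  # scale out above it and scale in below it (here 95%)
  targetCPUUtilizationPercentage: 95
[root@k8s1 k8s]# kubectl apply -f hpa.yaml
horizontalpodautoscaler.autoscaling/hpa-tools created
```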
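`autoscaling/v1` supports only a CPU target. The same autoscaler can also be expressed in `autoscaling/v2` (stable since Kubernetes 1.23), where the CPU target moves into a `metrics` list. The sketch below builds that manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts as well as YAML; the helper name is hypothetical and the field layout follows the `autoscaling/v2` HorizontalPodAutoscaler API.

```python
import json


def hpa_v2_manifest(name, deployment, min_replicas, max_replicas, cpu_percent):
    """Hypothetical helper: an autoscaling/v2 HPA equivalent to hpa.yaml."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            # CPU utilization target, relative to the pods' CPU request
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization",
                               "averageUtilization": cpu_percent},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(hpa_v2_manifest("hpa-tools", "dm-hpa", 2, 10, 95), indent=2))
```

Redirecting the output to a file (for example a hypothetical `hpa-v2.json`) and running `kubectl apply -f` on it would describe the same scaling behavior as `hpa.yaml`.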

3. Inspect the HPA resource

```
[root@k8s1 k8s]# kubectl get hpa -o wide
NAME        REFERENCE           TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
hpa-tools   Deployment/dm-hpa   0%/95%    2         10        2          102s
```

IV. Load testing

1. Exec into the pod, then install and run the stress tool

```
# Open a shell in the pod's container
[root@k8s1 k8s]# kubectl exec -it pod/dm-hpa-844c748565-jpzxt -- sh
sh-4.2# yum -y install wget
# Switch to the Aliyun CentOS base and EPEL repositories
sh-4.2# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
sh-4.2# wget -O /etc/yum.repos.d/epel.repo https://mirrors.aliyun.com/repo/epel-7.repo
# Install the stress load-testing tool
sh-4.2# yum -y install stress
# Start loading the pod (runs for 20 minutes)
sh-4.2# stress --cpu 8 --io 4 --vm 2 --vm-bytes 128M --timeout 20m
```

2. Check the load reported by the HPA

```
[root@k8s1 ~]# kubectl get hpa -o wide
NAME        REFERENCE           TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
hpa-tools   Deployment/dm-hpa   100%/95%   2         10        3          11m
[root@k8s1 ~]# kubectl get pod
NAME                      READY   STATUS    RESTARTS   AGE
dm-hpa-844c748565-jbn7s   1/1     Running   0          7m17s
dm-hpa-844c748565-jpzxt   1/1     Running   0          11m
dm-hpa-844c748565-tn8fr   1/1     Running   0          24m
```

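As the output shows:

- The Deployment we created has only one replica.

- We then created the HPA with a minimum of 2 pods and a maximum of 10.

- Listing the pods again shows that there are now 2.

- We exec into a container and start the stress test, pushing CPU utilization above 95%.

- Listing the pods once more shows 3: the HPA created one automatically.

- After the stress test stops, the pods drop back to 2 about 5 minutes later.

- This completes the HPA pod autoscaling test.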
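Why the count settled at 3 rather than climbing further can be checked against the HPA rule desiredReplicas = ⌈currentReplicas × currentMetricValue / desiredMetricValue⌉, assuming the default controller tolerance of 0.1 (`--horizontal-pod-autoscaler-tolerance`). With 3 replicas the HPA reported 100% against the 95% target:

$$
\frac{100\%}{95\%} \approx 1.053,\qquad |1.053 - 1| \approx 0.053 \le 0.1
\;\Rightarrow\; \text{desiredReplicas} = \text{currentReplicas} = 3
$$

Without the tolerance the controller would have computed ⌈3 × 100/95⌉ = ⌈3.158⌉ = 4.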
