import os

from dotenv import load_dotenv

from langchain_community.llms import Tongyi

load_dotenv('key.env')  # load environment variables from the specified .env file

# Tongyi reads DASHSCOPE_API_KEY from the environment; indexing os.environ fails fast with a KeyError if it is missing
DASHSCOPE_API_KEY = os.environ["DASHSCOPE_API_KEY"]

model = Tongyi(temperature=1)

from langchain_core.prompts import PromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnableBranch

# Build the classification chain: decide which (predefined) category the user's question belongs to

chain = (
    PromptTemplate.from_template(
        """Given the user question below, classify it as either being about `LangChain` or `Other`.

Do not respond with more than one word.

<question>
{question}
</question>

Classification:"""
    )
    | model
    | StrOutputParser()
)

# Build the LangChain-specific QA chain and the default (general) answer chain

langchain_chain = (
    PromptTemplate.from_template(
        """You are an expert in LangChain. Respond to the following question in one sentence:

Question: {question}
Answer:"""
    )
    | model
)

general_chain = (
    PromptTemplate.from_template(
        """Respond to the following question in one sentence:

Question: {question}
Answer:"""
    )
    | model
)

# Use RunnableBranch to build the conditional branch and attach it to the main chain

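branch = RunnableBranch(
    # (condition, runnable) pair: route to langchain_chain when the classifier output mentions LangChain
    (lambda x: "langchain" in x["topic"].lower(), langchain_chain),
    # default branch, used when no condition matches
    general_chain,
)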
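The routing rule used by `RunnableBranch` can be sketched in plain Python: the (condition, runnable) pairs are checked in order, the first condition that returns a truthy value decides which runnable receives the input, and the last positional argument is the fallback. This is a minimal illustration, not LangChain's implementation; `make_branch`, `langchain_answer`, and `general_answer` are hypothetical names, and the two handlers are stubs standing in for `langchain_chain` and `general_chain` so no model is called.

```python
from typing import Any, Callable, Sequence, Tuple

Condition = Callable[[Any], bool]
Handler = Callable[[Any], Any]


def make_branch(cases: Sequence[Tuple[Condition, Handler]], default: Handler) -> Handler:
    """Hypothetical helper that mimics RunnableBranch's first-match routing."""

    def run(inputs: Any) -> Any:
        # Conditions are checked in order; the first truthy one wins.
        for condition, handler in cases:
            if condition(inputs):
                return handler(inputs)
        # No condition matched: fall back to the default handler.
        return default(inputs)

    return run


# Stub handlers standing in for langchain_chain and general_chain (no LLM calls).
def langchain_answer(x: dict) -> str:
    return f"[LangChain expert] {x['question']}"


def general_answer(x: dict) -> str:
    return f"[general] {x['question']}"


branch = make_branch(
    [(lambda x: "langchain" in x["topic"].lower(), langchain_answer)],
    general_answer,
)

print(branch({"topic": "LangChain", "question": "What is LangChain?"}))
print(branch({"topic": "Other", "question": "1 + 2 = ?"}))
```

Because the condition lowercases the topic and uses a substring test, it also tolerates model output such as `"LangChain\n"` or `"langchain."`, which matters when the classifier runs at `temperature=1`.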

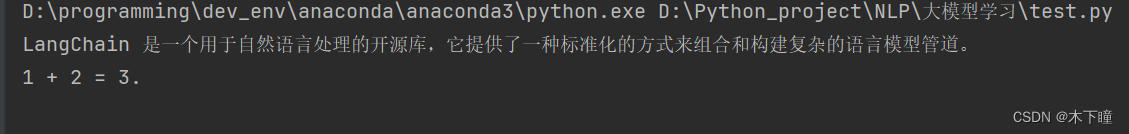

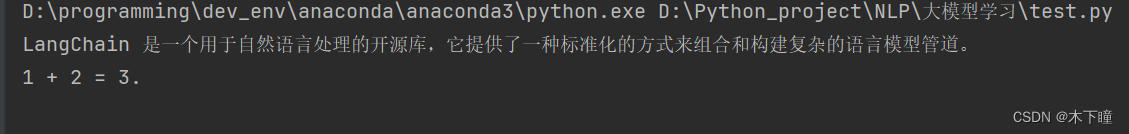

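# The dict runs the classifier and passes the question through; its output dict feeds the branch
full_chain = {"topic": chain, "question": lambda x: x["question"]} | branch

print(full_chain.invoke({"question": "What is LangChain?"}))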

print(full_chain.invoke({"question": "1 + 2 = ?"}))
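In LCEL, a dict literal on the left of `|` is coerced into a `RunnableParallel`: every value receives the same original input, and the results are collected under the same keys. That is how `full_chain` turns `{"question": ...}` into `{"topic": ..., "question": ...}` before the branch sees it. The sketch below reproduces that data flow in plain Python under stated assumptions: `run_parallel`, `classify`, and `route` are hypothetical names, `classify` is a keyword stub replacing the LLM classifier, and the steps run sequentially, whereas LangChain may run parallel steps concurrently.

```python
from typing import Any, Callable, Dict


def run_parallel(steps: Dict[str, Callable[[Any], Any]], inputs: Any) -> Dict[str, Any]:
    # Each step receives the same original input; results keep the step's key.
    return {key: step(inputs) for key, step in steps.items()}


def classify(x: Dict[str, str]) -> str:
    # Hypothetical keyword stub standing in for the LLM classification chain.
    return "LangChain" if "langchain" in x["question"].lower() else "Other"


def route(x: Dict[str, str]) -> str:
    # Same condition as the RunnableBranch, with stub answers instead of LLM chains.
    if "langchain" in x["topic"].lower():
        return "langchain_chain: " + x["question"]
    return "general_chain: " + x["question"]


def full_chain(inputs: Dict[str, str]) -> str:
    # Step 1: build {"topic": ..., "question": ...} from the original input.
    mapped = run_parallel({"topic": classify, "question": lambda x: x["question"]}, inputs)
    # Step 2: hand the combined dict to the router.
    return route(mapped)


print(full_chain({"question": "What is LangChain?"}))  # routed to langchain_chain
print(full_chain({"question": "1 + 2 = ?"}))           # routed to general_chain
```

The pass-through `lambda x: x["question"]` is needed because the branch only receives the parallel step's output; without it, the original question would be lost after classification.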
