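Table of Contents

- 1. Cluster Deployment
  - 1.1 Environment Overview
    - 1.1.1 Basics
    - 1.1.2 Environment Planning
    - 1.1.3 Summary
  - 1.2 Preparation
    - 1.2.1 Base Environment
    - 1.2.2 Software Installation
    - 1.2.3 Summary
  - 1.3 Ceph Deployment
    - 1.3.1 Cluster Creation
    - 1.3.2 Deploying Mon
    - 1.3.3 Summary
  - 1.4 Ceph Deployment, Part 2
    - 1.4.1 Mon Authentication
    - 1.4.2 Mgr Environment
    - 1.4.3 Summary
  - 1.5 OSD Environment
    - 1.5.1 Base Environment
    - 1.5.2 OSD in Practice
    - 1.5.3 Summary
  - 1.6 OSD Operations
    - 1.6.1 Basic Practice
    - 1.6.2 Advanced Practice
    - 1.6.3 Summary
  - 1.7 OSD Nodes
    - 1.7.1 Removing OSDs
    - 1.7.2 Adding OSDs
    - 1.7.3 Summary
  - 1.8 Storage in Practice
    - 1.8.1 Base Environment
    - 1.8.2 OSD in Practice
    - 1.8.3 Summary
  - 1.9 Storage Analysis
    - 1.9.1 Storage Analysis
    - 1.9.2 Storage Removal
    - 1.9.3 Summary
  - 1.10 Environment Refinement
    - 1.10.1 Expanding mon
    - 1.10.2 Expanding mgr
    - 1.10.3 Summary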

1. Cluster Deployment

1.1 Environment Overview

Learning objectives: this section covers three parts: basics, environment planning, and a summary.

1.1.1 Basics

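Caveats

Building a Ceph system tends to surface all kinds of unexpected problems. Even when every step appears to go smoothly, issues can still arise, and some of them cannot be found online or in the official documentation; even people who have worked with Ceph for years may be stumped by them.

In particular, even if your first attempt succeeds, a second attempt may run into problems. So what should you do when something goes wrong? Work through it step by step: investigate, analyze and resolve the problem, then summarize what you learned and organize it into notes.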

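Introduction

Deploying a Ceph environment is quite tedious, so the project officially provides a number of faster deployment methods.

References (this document uses the Nautilus release for the walkthrough):

- https://docs.ceph.com/en/reef/install/
- https://docs.ceph.com/en/quincy/install/
- https://docs.ceph.com/en/pacific/install/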

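Recommended methods:

Cephadm: installs and manages a Ceph cluster using containers and systemd, and integrates tightly with the CLI and the dashboard GUI. It supports only Octopus and newer releases, requires container support and Python 3, and is fully integrated with the new orchestration API.

Rook: runs Ceph clusters inside Kubernetes and also supports managing storage resources and configuration through the Kubernetes API. It supports only Nautilus and newer Ceph releases.

Other methods:

ceph-ansible: deploys a Ceph cluster with Ansible; support for the new orchestrator features, management features, and the dashboard is poor

ceph-deploy: a tool for deploying a cluster quickly; it does not support CentOS 8

DeepSea: installs Ceph using Salt

ceph-mon: installs Ceph using Juju

Puppet-ceph: installs Ceph using Puppet

Binaries/source: manual installation

Windows GUI: deploy from a Windows host by pointing and clicking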

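Choosing a release

Release list: https://docs.ceph.com/en/latest/releases/

Latest release on the official site (at the time of writing): v18.2.2 Reef

Version numbering: x.0.z (development), x.1.z (release candidate), x.2.z (stable, bug-fix releases)

Release used in this document: v14.2.22 Nautilus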
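The x.0.z / x.1.z / x.2.z convention above can be checked mechanically. The sketch below assumes version strings of the form `[v]major.minor.patch`; `ceph_release_type` is a hypothetical helper for illustration, not part of any Ceph tool.

```shell
#!/usr/bin/env bash
# Classify a Ceph version string by its middle field, following the
# x.0.z (development) / x.1.z (release candidate) / x.2.z (stable) convention.
# ceph_release_type is a hypothetical helper.
ceph_release_type() {
  local ver="${1#v}"   # drop an optional leading "v"
  local minor
  IFS=. read -r _ minor _ <<< "$ver"
  case "$minor" in
    0) echo "development" ;;
    1) echo "release-candidate" ;;
    2) echo "stable" ;;
    *) echo "unknown" ;;
  esac
}

ceph_release_type v14.2.22   # the Nautilus release used here; prints "stable"
```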

1.1.2 Environment Planning

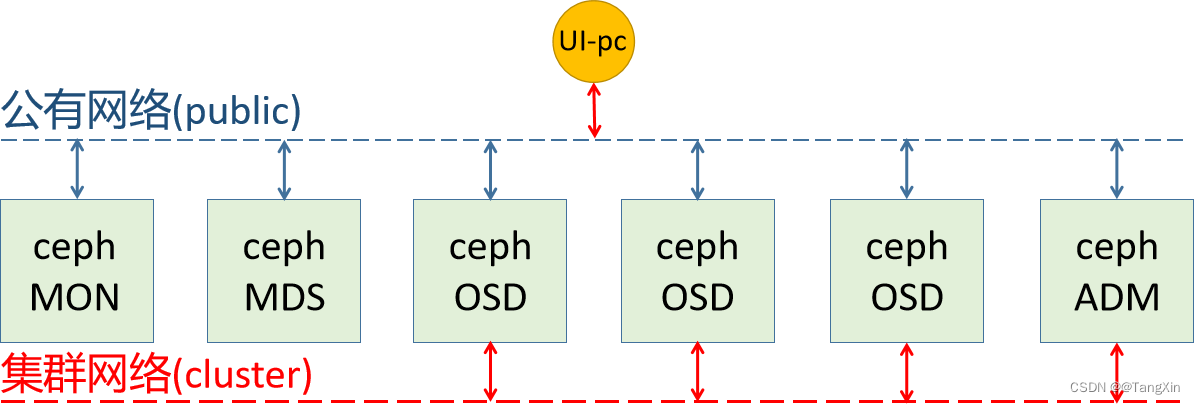

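Network planning

- Public network, also called the front-end or northbound network ("a public (front-side) network"): connects clients to the cluster and carries client data traffic; 192.168.120.0/24
- Cluster network, also called the back-end or east-west network ("a cluster (back-side) network"): connects the Ceph storage nodes and carries internal cluster traffic such as OSD replication, recovery, and heartbeats; 192.168.8.0/24

Both networks can be configured in the [global] section of the Ceph configuration file:

- public network = {public-network/netmask}
- cluster network = {cluster-network/netmask}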
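As a sketch of what these two settings look like for this lab's plan, the hypothetical helper below prints a `[global]` fragment using the subnets listed above. In a real deployment the lines would go into the cluster's ceph.conf (typically /etc/ceph/ceph.conf); Ceph treats spaces and underscores in option names interchangeably, so `public_network` is equivalent to `public network`.

```shell
#!/usr/bin/env bash
# Print the [global] network section for a Ceph configuration file.
# Arguments: public subnet, cluster subnet (CIDR notation).
# ceph_network_conf is a hypothetical helper.
ceph_network_conf() {
  local public_net="$1" cluster_net="$2"
  cat <<EOF
[global]
public network = ${public_net}
cluster network = ${cluster_net}
EOF
}

# Subnets from the network plan: public 192.168.120.0/24, cluster 192.168.8.0/24
ceph_network_conf 192.168.120.0/24 192.168.8.0/24
```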

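Tip

Traffic to and from clients flows vertically, so the public network is called the northbound, or north-south, network.

Traffic inside the cluster flows horizontally, so the cluster network is called the east-west network. "North-south" means data can leave the cluster: clients are nodes outside the cluster, and all other nodes are inside it.

"East-west" means traffic flows horizontally between nodes inside the cluster and does not involve the outside world.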

Host planning

Disk planning:

- Disk 1: the VM's system disk
- Disks 2 and 3: Ceph OSDs

Hostname planning

| Hostname | Public network (front-end) | Cluster network (back-end) | Disks | Other roles |
|---|---|---|---|---|
| admin | 192.168.120.20 | 192.168.8.20 | sdb, sdc | |
| stor21 | 192.168.120.21 | 192.168.8.21 | sdb, sdc | mon01 |
| stor22 | 192.168.120.22 | 192.168.8.22 | sdb, sdc | mon02 |
| stor23 | 192.168.120.23 | 192.168.8.23 | sdb, sdc | mon03 |
| stor24 | 192.168.120.24 | 192.168.8.24 | sdb, sdc | |
| stor25 | 192.168.120.25 | 192.168.8.25 | sdb, sdc | |
| stor26 | 192.168.120.26 | 192.168.8.26 | sdb, sdc | |

Note: a production Ceph cluster involves many roles, so when hosts are scarce, a single node has to run several of them. stor21, stor22, and stor23 also act as Mon nodes, and may take on the Mgr role as needed. Fully qualified hostnames take the form xxx.superopsmsb.com.

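Other preparation

Admin user: since almost everything that follows runs on the admin host, we recommend not managing the cluster directly as root and using a regular user instead. Because later software installation steps need root privileges, this user should have sudo rights.

Time synchronization: time synchronization is essential for any cluster. Ceph monitors in particular raise a clock-skew health warning when their clocks drift apart.

Hostname planning: as the number of hosts in production grows, setting hostnames by hand no longer scales for cluster management, so enterprises tend to manage hostname information through an internal DNS. In particular, once we get to radosgw, wildcard DNS resolution is required, which calls for a more capable, client-facing hostname management scheme.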

VM host preparation

System image: CentOS-7-x86_64-Everything-1708-7.4.iso

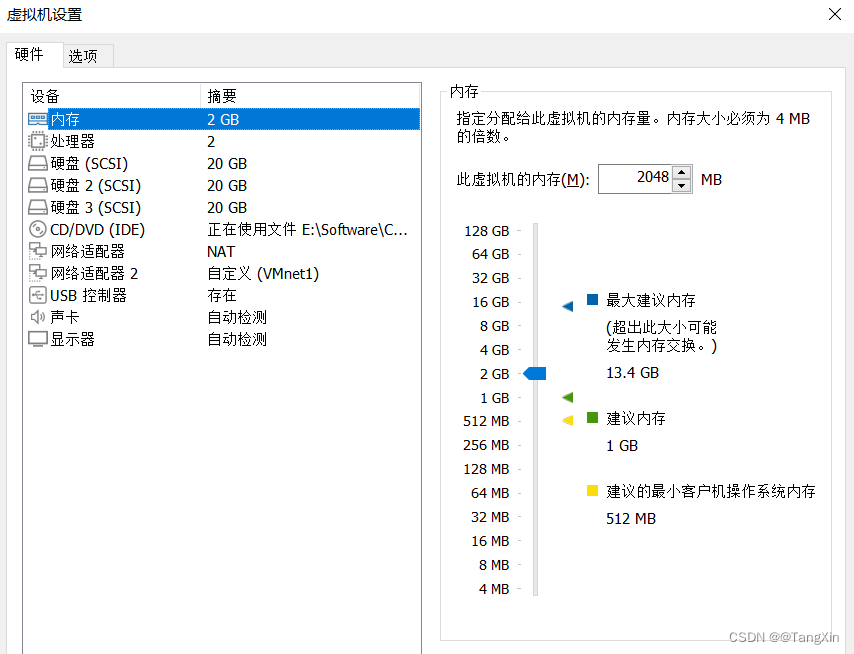

内存:2G

处理器:2核

磁盘:20G*3(包括系统盘)

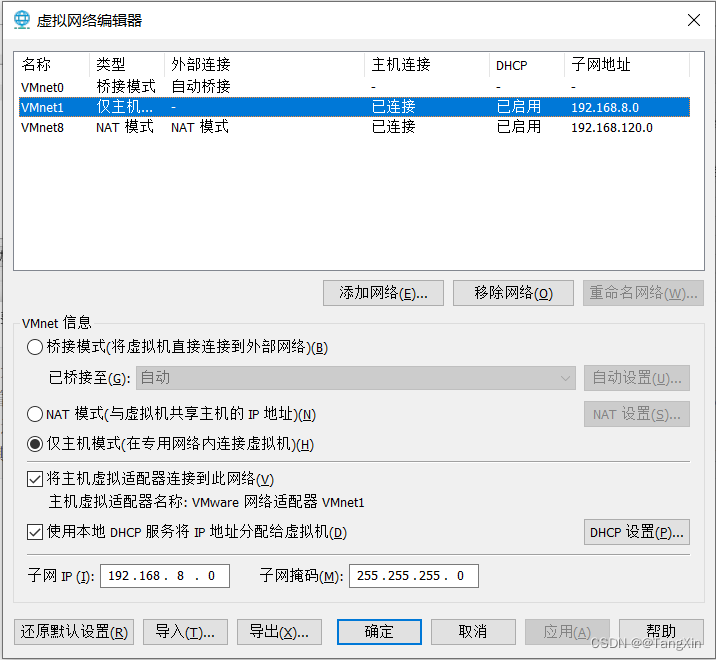

网络:一个NAT模式,一个自定义(VMnet1)模式

虚拟网络设置:VMnet1 设定为 192.168.8.0 网段,VMnet8 设定为 192.168.120.0 网段

虚拟机设置:额外添加两块盘,每个根据自己的情况设定容量,本次设定为20G额外另加一块网络适配器,使用仅主机模式 -- VMnet1,mac地址必须要重新生成,避免冲突

虚拟机创建

1.1.3 小结

1.2 准备工作

学习目标:这一节,我们从基本环境、软件安装、小结三个方面来学习。

1.2.1 Base Environment

Hostname management

Edit the /etc/hosts file on the admin node only:

192.168.120.20 admin.superopsmsb.com admin

192.168.120.21 stor21.superopsmsb.com stor21 mon01.superopsmsb.com mon01

192.168.120.22 stor22.superopsmsb.com stor22 mon02.superopsmsb.com mon02

192.168.120.23 stor23.superopsmsb.com stor23 mon03.superopsmsb.com mon03

192.168.120.24 stor24.superopsmsb.com stor24

192.168.120.25 stor25.superopsmsb.com stor25

192.168.120.26 stor26.superopsmsb.com stor26
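The entries above follow a regular pattern, so they can also be generated. This is a minimal sketch assuming the IP scheme and domain from the host plan; `gen_hosts` is a hypothetical helper, and its output is worth reviewing before it goes into /etc/hosts.

```shell
#!/usr/bin/env bash
# Print /etc/hosts entries for the lab plan: admin at .20, stor21-stor26 at .21-.26,
# with stor21-stor23 also carrying the mon01-mon03 aliases.
# gen_hosts is a hypothetical helper.
gen_hosts() {
  local net="192.168.120" domain="superopsmsb.com" i name mon
  echo "${net}.20 admin.${domain} admin"
  for i in {21..26}; do
    name="stor${i}"
    if (( i <= 23 )); then
      mon="mon0$(( i - 20 ))"
      echo "${net}.${i} ${name}.${domain} ${name} ${mon}.${domain} ${mon}"
    else
      echo "${net}.${i} ${name}.${domain} ${name}"
    fi
  done
}

gen_hosts
```

For example, `gen_hosts | sudo tee -a /etc/hosts` would append the entries; running it twice duplicates them, so check the file first.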

Note: a k8s environment may be deployed later, so the hosts file may change.

Firewall management

Run on all cluster nodes to stop and disable the firewall:

systemctl stop firewalld

systemctl status firewalld

systemctl disable firewalld

systemctl is-enabled firewalld
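Disabling firewalld is convenient in a lab. As a hedged alternative for environments that keep it running, only Ceph's default ports could be opened: monitors listen on 3300 (msgr2) and 6789 (legacy msgr1), and OSD and Mgr daemons bind to ports in the 6800-7300 range. The helper below is hypothetical and only prints the `firewall-cmd` commands (a dry run), so they can be reviewed before being run as root on each node.

```shell
#!/usr/bin/env bash
# Print (do not run) firewall-cmd commands that open Ceph's default ports
# for a given role. ceph_firewall_cmds is a hypothetical dry-run helper.
ceph_firewall_cmds() {
  case "$1" in
    mon)     echo "firewall-cmd --permanent --add-port=3300/tcp --add-port=6789/tcp" ;;
    osd|mgr) echo "firewall-cmd --permanent --add-port=6800-7300/tcp" ;;
    *)       echo "unknown role: $1" >&2; return 1 ;;
  esac
  echo "firewall-cmd --reload"   # apply the permanent rules to the running firewall
}

# stor21-stor23 run both mon and OSDs in this plan
ceph_firewall_cmds mon
ceph_firewall_cmds osd
```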

Time synchronization

# Run on all cluster nodes

# Method 1: lab environment

yum install ntpdate -y

ntpdate time.windows.com

-------------------------------------------------------------------

# Method 2: production environment with Internet access

yum install chrony -y

# Back up the configuration

cp /etc/chrony.conf /etc/chrony.conf.orig

sed -i '/^pool/s/^/#/' /etc/chrony.conf # comment out the pool lines

grep '#pool' /etc/chrony.conf

sed -i '/#pool/a\server cn.pool.ntp.org iburst' /etc/chrony.conf

sed -i '/#pool/a\server ntp.ntsc.ac.cn iburst' /etc/chrony.conf

sed -i '/#pool/a\server ntp1.aliyun.com iburst' /etc/chrony.conf

grep -A 3 '#pool' /etc/chrony.conf
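Because `sed -i` edits the file in place, it can help to rehearse the Method 2 edits on a scratch copy first. The sketch below assumes GNU sed (as on CentOS) and a sample file with a single `pool` line. Each `a\` command appends directly below the `#pool` line, so the three servers end up in the reverse of the order in which they were added. Depending on the release, the default chrony.conf may list upstream servers as `server` lines rather than `pool` lines (CentOS 7's default file typically does), in which case `/^pool/` matches nothing; check the file before editing.

```shell
#!/usr/bin/env bash
# Rehearse the chrony.conf edits on a temporary copy instead of /etc/chrony.conf.
# The sample contents below are an assumption for illustration.
conf=$(mktemp)
printf '%s\n' 'pool 2.centos.pool.ntp.org iburst' 'driftfile /var/lib/chrony/drift' > "$conf"

sed -i '/^pool/s/^/#/' "$conf"                          # comment out the pool line
sed -i '/#pool/a\server cn.pool.ntp.org iburst' "$conf"  # each append lands right below #pool
sed -i '/#pool/a\server ntp.ntsc.ac.cn iburst' "$conf"
sed -i '/#pool/a\server ntp1.aliyun.com iburst' "$conf"

result=$(cat "$conf")
rm -f "$conf"
printf '%s\n' "$result"
```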

-------------------------------------------------------------------

# Method 3: production environment without Internet access

# Time server node (/etc/chrony.conf)

allow 192.168.120.0/24

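# 127.127.x.x is ntpd's local-clock convention; chrony has no such driver, so it is the "local stratum 10" line below that lets this node serve time without an upstream source

server 127.127.0.1 iburst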

driftfile /var/lib/chrony/drift

keyfile /etc/chrony.keys

leapsectz right/UTC

local stratum 10

makestep 1.0 3

rtcsync

logdir /var/log/chrony

# Time client nodes (/etc/chrony.conf)

allow 192.168.120.0/24

server 192.168.120.41 iburst

driftfile /var/lib/chrony/drift

keyfile /etc/chrony.keys

leapsectz right/UTC

local stratum 10

makestep 1.0 3

rtcsync

logdir /var/log/chrony

# Restart the service

systemctl restart chronyd.service
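After restarting chronyd, `chronyc sources` lists the configured sources; a line beginning with `^*` marks the server chrony is currently synchronized to (`^` means a server, `*` the selected source). The helper below is a hypothetical sketch that reads that output on stdin and succeeds only if such a line exists; the sample line is illustrative, not captured from this lab.

```shell
#!/usr/bin/env bash
# Succeed if `chronyc sources` output on stdin contains a currently
# selected source, i.e. a line starting with "^*". Hypothetical helper.
chrony_synced() {
  grep -q '^\^\*'
}

# Typical use on a node, with chronyd running:
#   chronyc sources | chrony_synced && echo "synced" || echo "not synced yet"

# Illustrative sample line (not real output from this cluster):
printf '%s\n' '^* 192.168.120.41  10  6  377  35  +12us[ +20us] +/- 150us' | chrony_synced && echo "synced"
```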

Cross-host communication

Script file name: 01_remote_host_auth.sh

#!/bin/bash
# Purpose: batch-configure passwordless SSH authentication to remote hosts
# Version: v0.2

# Preparation
user_dir='/root'
host_file='/etc/hosts'
login_user='root'
login_pass='123456'
target_type=(deploy auth sync hostname exit)

# Menu
menu(){
  echo -e "\e[31mBatch passwordless SSH setup for remote hosts\e[0m"
  echo "====================================================="
  echo -e "\e[32m 1: Deploy environment  2: Passwordless auth  3: Sync hosts \e[0m"
  echo -e "\e[32m 4: Set hostnames  5: Exit \e[0m"
  echo "====================================================="
}

# Install expect
expect_install(){
  if [ -f /usr/bin/expect ]
  then
    echo -e "\e[33mexpect is already installed\e[0m"
  else
    # Use { ...; } rather than ( ... ) so that exit leaves the script, not just a subshell
    yum install expect -y >> /dev/null 2>&1 && echo -e "\e[33mexpect installed\e[0m" || { echo -e "\e[33mexpect installation failed\e[0m"; exit 1; }
  fi
}

# Generate the SSH key pair
create_authkey(){
  # Clear out old key files, or create ~/.ssh if it is missing
  [ -d ${user_dir}/.ssh ] && rm -rf ${user_dir}/.ssh/* || mkdir -p ${user_dir}/.ssh
  # Create a passphrase-less RSA key pair
  /usr/bin/ssh-keygen -t rsa -P "" -f ${user_dir}/.ssh/id_rsa
  echo -e "\e[33mKey pair created\e[0m"
}

# expect: answer the host-key and password prompts automatically
expect_autoauth_func(){
  # The full command line to run, e.g. ssh-copy-id ... user@host
  command="$@"
  expect -c "
    spawn ${command}
    expect {
      \"yes/no\" {send \"yes\r\"; exp_continue}
      \"*password*\" {send \"${login_pass}\r\"; exp_continue}
    }"
}

# Copy the public key to each remote host
sshkey_auth_func(){
  local host_list="$*"
  for ip in ${host_list}
  do
    # e.g. /usr/bin/ssh-copy-id -i ${user_dir}/.ssh/id_rsa.pub root@10.0.0.12
    cmd="/usr/bin/ssh-copy-id -i ${user_dir}/.ssh/id_rsa.pub"
    remote_host="${login_user}@${ip}"
    expect_autoauth_func ${cmd} ${remote_host}
  done
}

# Copy /etc/hosts to each remote host
scp_hosts_func(){
  local host_list="$*"
  for ip in ${host_list}
  do
    remote_host="${login_user}@${ip}"
    scp ${host_file} ${remote_host}:${host_file}
  done
}

# Set each remote host's hostname according to /etc/hosts
set_hostname_func(){
  local host_list="$*"
  for ip in ${host_list}
  do
    # Match the IP as the first field and take the last name on that line (e.g. mon01 for .21)
    host_name=$(grep "^${ip}[[:space:]]" ${host_file}|awk '{print $NF}')
    remote_host="${login_user}@${ip}"
    ssh ${remote_host} "hostnamectl set-hostname ${host_name}"
  done
}

# Help message
Usage(){
  echo "Please enter a valid operation id"
}

# Entry point
while true
do
  menu
  read -p "Please enter a valid operation id: " target_id
  # Accept only a number between 1 and the number of menu entries
  if [[ "${target_id}" =~ ^[0-9]+$ ]] && [ ${target_id} -ge 1 ] && [ ${#target_type[@]} -ge ${target_id} ]
  then
    if [ ${target_type[${target_id}-1]} == "deploy" ]
    then
      echo "Deploying the environment..."
      expect_install
      create_authkey
    elif [ ${target_type[${target_id}-1]} == "auth" ]
    then
      read -p "Enter the host range for passwordless auth (e.g. {20..26}): " num_list
      ip_list=$(eval echo 192.168.120.$num_list)
      echo "Setting up passwordless auth..."
      sshkey_auth_func ${ip_list}
    elif [ ${target_type[${target_id}-1]} == "sync" ]
    then
      read -p "Enter the host range to sync the hosts file to (e.g. {20..26}): " num_list
      ip_list=$(eval echo 192.168.120.$num_list)
      echo "Syncing the hosts file..."
      scp_hosts_func ${ip_list}
    elif [ ${target_type[${target_id}-1]} == "hostname" ]
    then
      read -p "Enter the host range to set hostnames on (e.g. {20..26}): " num_list
      ip_list=$(eval echo 192.168.120.$num_list)
      echo "Setting hostnames..."
      set_hostname_func ${ip_list}
    elif [ ${target_type[${target_id}-1]} == "exit" ]
    then
      echo "Exiting the management menu..."
      exit
    fi
  else
    Usage
  fi
done
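The script expands the typed range with `eval echo 192.168.120.$num_list`, which runs whatever is typed as shell code. A hedged alternative is to accept only the `{start..end}` form (or a single number) and expand it arithmetically. `expand_ip_range` is a hypothetical helper, and the prefix argument assumes the 192.168.120.0/24 public network.

```shell
#!/usr/bin/env bash
# Expand "{20..26}" (or a single number like "21") into IP addresses
# under the given prefix, without eval. Anything else is rejected.
# expand_ip_range is a hypothetical helper.
expand_ip_range() {
  local prefix="$1" spec="$2" i
  local re='^[{]([0-9]+)[.][.]([0-9]+)[}]$'
  if [[ "$spec" =~ $re ]]; then
    # 10# forces base 10, so values like "08" are not read as octal
    local start=$((10#${BASH_REMATCH[1]})) end=$((10#${BASH_REMATCH[2]}))
    for (( i = start; i <= end; i++ )); do
      echo "${prefix}.${i}"
    done
  elif [[ "$spec" =~ ^[0-9]+$ ]]; then
    echo "${prefix}.$((10#$spec))"
  else
    echo "invalid range: $spec" >&2
    return 1
  fi
}

expand_ip_range 192.168.120 '{20..22}'
```

Inside the menu loop, the `eval` line could then become `ip_list=$(expand_ip_range 192.168.120 "$num_list") || { Usage; continue; }`.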

Run the script (on the admin node only)

[root@admin ~]# /bin/bash /data/scripts/01_remote_host_auth.sh

Batch passwordless SSH setup for remote hosts

=====================================================

1: Deploy environment  2: Passwordless auth  3: Sync hosts

4: Set hostnames  5: Exit

=====================================================

Please enter a valid operation id: 1

Deploying the environment...

expect installed

Generating public/private rsa key pair.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:9RQMPbTLWZfGDZtqcX4Vfzx+IbVXn/Bo+9kZUPl4ojU root@admin.superopsmsb.com

The key's randomart image is:

+---[RSA 2048]----+

| .=o..+o|

| +oB*X|

| . +*=@@|

| . +.OE.O|

| S B+.*o|

| .. ..*|

| +.|

| |

| |

+----[SHA256]-----+

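Key pair created

Batch passwordless SSH setup for remote hosts

=====================================================

1: Deploy environment  2: Passwordless auth  3: Sync hosts

4: Set hostnames  5: Exit

=====================================================

Please enter a valid operation id: 2

Enter the host range for passwordless auth (e.g. {20..26}): {20..26}

Setting up passwordless auth...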

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.120.20

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.120.20's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.120.20'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.120.21

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.120.21's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.120.21'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.120.22

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.120.22's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.120.22'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.120.23

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.23 (192.168.120.23)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.120.23's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.120.23'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.120.24

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.24 (192.168.120.24)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.120.24's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.120.24'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.120.25

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.25 (192.168.120.25)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.120.25's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.120.25'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.120.26

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.26 (192.168.120.26)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.120.26's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.120.26'"

and check to make sure that only the key(s) you wanted were added.

Batch passwordless SSH setup for remote hosts

=====================================================

1: Deploy environment  2: Passwordless auth  3: Sync hosts

4: Set hostnames  5: Exit

=====================================================

Please enter a valid operation id: 3

Enter the host range to sync the hosts file to (e.g. {20..26}): {20..26}

Syncing the hosts file...

hosts 100% 556 1.4MB/s 00:00

hosts 100% 556 593.5KB/s 00:00

hosts 100% 556 572.1KB/s 00:00

hosts 100% 556 634.8KB/s 00:00

hosts 100% 556 513.5KB/s 00:00

hosts 100% 556 397.4KB/s 00:00

hosts 100% 556 434.3KB/s 00:00

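Batch passwordless SSH setup for remote hosts

=====================================================

1: Deploy environment  2: Passwordless auth  3: Sync hosts

4: Set hostnames  5: Exit

=====================================================

Please enter a valid operation id: 4

Enter the host range to set hostnames on (e.g. {20..26}): {20..26}

Setting hostnames...

Batch passwordless SSH setup for remote hosts

=====================================================

1: Deploy environment  2: Passwordless auth  3: Sync hosts

4: Set hostnames  5: Exit

=====================================================

Please enter a valid operation id: 5

Exiting the management menu...

Check the result (on the admin node only)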

[root@admin ~]# for i in {20..26}; do hostname=$(ssh root@192.168.120.$i "hostname"); echo "192.168.120.$i - $hostname"; done

192.168.120.20 - admin

192.168.120.21 - mon01

192.168.120.22 - mon02

192.168.120.23 - mon03

192.168.120.24 - stor24

192.168.120.25 - stor25

192.168.120.26 - stor26

1.2.2 Software Installation

User management

Create a regular user

useradd -m cephadm -s /bin/bash

echo cephadm:123456 | chpasswd

Grant the user root privileges through sudo

echo "cephadm ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephadm

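chmod 0440 /etc/sudoers.d/cephadm

To stop su from printing the last-login message when switching users, comment out the postlogin session line in /etc/pam.d/su, then confirm: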

[root@admin ~]# grep "se.*postlogin" /etc/pam.d/su

# session include postlogin
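The check above shows the end state but not the edit itself. One way to make it, sketched here under the assumption that the line reads `session include postlogin` with arbitrary whitespace between the words, is a GNU sed substitution; `comment_postlogin` is a hypothetical helper, and it is worth trying on a scratch copy before editing /etc/pam.d/su.

```shell
#!/usr/bin/env bash
# Comment out the "session include postlogin" line in the given PAM file
# (GNU sed syntax). Running it again leaves an already-commented line alone.
# comment_postlogin is a hypothetical helper.
comment_postlogin() {
  sed -i 's/^\(session[[:space:]]\+include[[:space:]]\+postlogin\)/# \1/' "$1"
}

# Rehearse on a scratch copy with a sample line
tmp=$(mktemp)
printf 'session\t\tinclude\t\tpostlogin\n' > "$tmp"
comment_postlogin "$tmp"
cat "$tmp"
rm -f "$tmp"

# Then, as root: comment_postlogin /etc/pam.d/su
```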

Scripted method: 02_create_ceph_user.sh

#!/bin/bash

# Purpose: create a dedicated Ceph admin user

# Version: v0.2

# Preparation

login_user='cephadm'

login_pass='123456'

# Create the regular user

useradd -m ${login_user} -s /bin/bash

echo ${login_user}:${login_pass} | chpasswd

echo "${login_user} ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/${login_user}

chmod 0440 /etc/sudoers.d/${login_user}

Run it on all nodes in one batch (from the admin node only)

for i in {20..26}
do
  ssh root@192.168.120.$i "mkdir /data/scripts -p"
  scp /data/scripts/02_create_ceph_user.sh root@192.168.120.$i:/data/scripts/02_create_ceph_user.sh
  ssh root@192.168.120.$i "/bin/bash /data/scripts/02_create_ceph_user.sh"
done

Output

[root@admin scripts]# for i in {20..26}

> do

> ssh root@192.168.120.$i "mkdir /data/scripts -p"

> scp /data/scripts/02_create_ceph_user.sh root@192.168.120.$i:/data/scripts/02_create_ceph_user.sh

> ssh root@192.168.120.$i "/bin/bash /data/scripts/02_create_ceph_user.sh"

> done

02_create_ceph_user.sh 100% 403 624.6KB/s 00:00

cephadm ALL = (root) NOPASSWD:ALL

02_create_ceph_user.sh 100% 403 168.0KB/s 00:00

cephadm ALL = (root) NOPASSWD:ALL

02_create_ceph_user.sh 100% 403 296.9KB/s 00:00

cephadm ALL = (root) NOPASSWD:ALL

02_create_ceph_user.sh 100% 403 253.8KB/s 00:00

cephadm ALL = (root) NOPASSWD:ALL

02_create_ceph_user.sh 100% 403 360.6KB/s 00:00

cephadm ALL = (root) NOPASSWD:ALL

02_create_ceph_user.sh 100% 403 424.1KB/s 00:00

cephadm ALL = (root) NOPASSWD:ALL

02_create_ceph_user.sh 100% 403 304.9KB/s 00:00

cephadm ALL = (root) NOPASSWD:ALL

Verify the result

[root@admin ~]# for i in {20..26}; do usermsg=$(ssh root@192.168.120.$i "id cephadm"); echo "192.168.120.$i - $usermsg"; done

192.168.120.20 - uid=1000(cephadm) gid=1000(cephadm) groups=1000(cephadm)

192.168.120.21 - uid=1000(cephadm) gid=1000(cephadm) groups=1000(cephadm)

192.168.120.22 - uid=1000(cephadm) gid=1000(cephadm) groups=1000(cephadm)

192.168.120.23 - uid=1000(cephadm) gid=1000(cephadm) groups=1000(cephadm)

192.168.120.24 - uid=1000(cephadm) gid=1000(cephadm) groups=1000(cephadm)

192.168.120.25 - uid=1000(cephadm) gid=1000(cephadm) groups=1000(cephadm)

192.168.120.26 - uid=1000(cephadm) gid=1000(cephadm) groups=1000(cephadm)

Passwordless SSH authentication across hosts (as cephadm)

Script contents: /data/scripts/03_remote_cephadm_auth.sh

#!/bin/bash
# Purpose: batch-configure passwordless SSH authentication to remote hosts
# Version: v0.3

# Preparation
user_dir='/home/cephadm'
login_user='cephadm'
login_pass='123456'
host_file='/etc/hosts'
target_type=(deploy auth exit)

# Menu
menu(){
  echo -e "\e[31mBatch passwordless SSH setup for remote hosts\e[0m"
  echo "====================================================="
  echo -e "\e[32m 1: Deploy environment  2: Passwordless auth  3: Exit \e[0m"
  echo "====================================================="
}

# Install expect (needs sudo because this runs as cephadm)
expect_install(){
  if [ -f /usr/bin/expect ]
  then
    echo -e "\e[33mexpect is already installed\e[0m"
  else
    # Use { ...; } rather than ( ... ) so that exit leaves the script, not just a subshell
    sudo yum install expect -y >> /dev/null 2>&1 && echo -e "\e[33mexpect installed\e[0m" || { echo -e "\e[33mexpect installation failed\e[0m"; exit 1; }
  fi
}

# Generate the SSH key pair
create_authkey(){
  # Clear out old key files; ssh-keygen creates ~/.ssh itself if it is missing
  [ -d ${user_dir}/.ssh ] && rm -rf ${user_dir}/.ssh/*
  /usr/bin/ssh-keygen -t rsa -P "" -f ${user_dir}/.ssh/id_rsa
  echo -e "\e[33mKey pair created\e[0m"
}

# expect: answer the host-key and password prompts automatically
expect_autoauth_func(){
  # The full command line to run, e.g. ssh-copy-id ... user@host
  command="$@"
  expect -c "
    spawn ${command}
    expect {
      \"yes/no\" {send \"yes\r\"; exp_continue}
      \"*password*\" {send \"${login_pass}\r\"; exp_continue}
    }"
}

# Copy the public key to each host under its IP, short name, and FQDN
sshkey_auth_func(){
  local host_list="$*"
  for ip in ${host_list}
  do
    cmd="/usr/bin/ssh-copy-id -i ${user_dir}/.ssh/id_rsa.pub"
    remote_host="${login_user}@${ip}"
    # Short name = last field on this IP's hosts line (e.g. mon01 for .21)
    host_name=$(grep "^${ip}[[:space:]]" ${host_file}|awk '{print $NF}')
    remote_host1="${login_user}@${host_name}"
    remote_host2="${login_user}@${host_name}.superopsmsb.com"
    # Connecting under all three names also records each one in known_hosts
    expect_autoauth_func ${cmd} ${remote_host}
    expect_autoauth_func ${cmd} ${remote_host1}
    expect_autoauth_func ${cmd} ${remote_host2}
  done
}

# Help message
Usage(){
  echo "Please enter a valid operation id"
}

# Entry point
while true
do
  menu
  read -p "Please enter a valid operation id: " target_id
  # Accept only a number between 1 and the number of menu entries
  if [[ "${target_id}" =~ ^[0-9]+$ ]] && [ ${target_id} -ge 1 ] && [ ${#target_type[@]} -ge ${target_id} ]
  then
    if [ ${target_type[${target_id}-1]} == "deploy" ]
    then
      echo "Deploying the environment..."
      expect_install
      create_authkey
    elif [ ${target_type[${target_id}-1]} == "auth" ]
    then
      read -p "Enter the host range for passwordless auth (e.g. {20..26}): " num_list
      ip_list=$(eval echo 192.168.120.$num_list)
      echo "Setting up passwordless auth..."
      sshkey_auth_func ${ip_list}
    elif [ ${target_type[${target_id}-1]} == "exit" ]
    then
      echo "Exiting the management menu..."
      exit
    fi
  else
    Usage
  fi
done
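Script 03 copies the key to each host three times (IP, short name, FQDN), apparently so that all three names end up in known_hosts. The name lookup can be made exact by comparing the first field of each hosts line rather than grepping for the IP as a substring. The sketch below assumes the hosts layout and the superopsmsb.com domain used in this document; `ssh_targets_for_ip` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the three SSH targets used by script 03 for one IP: the IP itself,
# the short name (last field on its hosts line), and that name's FQDN.
# Matching is on the first field, so 192.168.120.2 will not match .20-.26.
# ssh_targets_for_ip is a hypothetical helper.
ssh_targets_for_ip() {
  local ip="$1" hosts_file="${2:-/etc/hosts}" domain="superopsmsb.com" short
  short=$(awk -v ip="$ip" '$1 == ip { print $NF; exit }' "$hosts_file")
  [ -n "$short" ] || return 1
  printf '%s\n' "$ip" "$short" "${short}.${domain}"
}

# Example (reads /etc/hosts by default):
#   ssh_targets_for_ip 192.168.120.21
```

With the hosts file above, `ssh_targets_for_ip 192.168.120.21` would print 192.168.120.21, mon01 and mon01.superopsmsb.com. If pre-populating known_hosts is the only goal, OpenSSH's `ssh-keyscan` could be pointed at those names instead; like the expect script, that accepts host keys without interactive confirmation.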

Change the script's ownership

chown cephadm:cephadm /data/scripts/03_remote_cephadm_auth.sh

Switch to the cephadm user

su - cephadm

Run the script

[cephadm@admin ~]$ /bin/bash /data/scripts/03_remote_cephadm_auth.sh

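Batch passwordless SSH setup for remote hosts

=====================================================

1: Deploy environment  2: Passwordless auth  3: Exit

=====================================================

Please enter a valid operation id: 1

Deploying the environment...

expect is already installed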

Generating public/private rsa key pair.

Created directory '/home/cephadm/.ssh'.

Your identification has been saved in /home/cephadm/.ssh/id_rsa.

Your public key has been saved in /home/cephadm/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:s7sPnVHcaRVTBows3g8vLcPcmcPCyHTZB9HvW66x+/o cephadm@admin

The key's randomart image is:

+---[RSA 2048]----+

| . oo**|

| ..o.o+o|

| . ooo+..|

| o.=.. o|

| So.* B = |

| +ooO X o|

| o o =.oo|

| o +.|

| oo. =*E|

+----[SHA256]-----+

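Key pair created

Batch passwordless SSH setup for remote hosts

=====================================================

1: Deploy environment  2: Passwordless auth  3: Exit

=====================================================

Please enter a valid operation id: 2

Enter the host range for passwordless auth (e.g. {20..26}): {20..26}

Setting up passwordless auth...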

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@192.168.120.20

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.20 (192.168.120.20)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadm@192.168.120.20's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadm@192.168.120.20'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@admin

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'admin (192.168.120.20)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@admin.superopsmsb.com

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'admin.superopsmsb.com (192.168.120.20)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@192.168.120.21

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.21 (192.168.120.21)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadm@192.168.120.21's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadm@192.168.120.21'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@mon01

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'mon01 (192.168.120.21)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@mon01.superopsmsb.com

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'mon01.superopsmsb.com (192.168.120.21)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@192.168.120.22

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.22 (192.168.120.22)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadm@192.168.120.22's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadm@192.168.120.22'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@mon02

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'mon02 (192.168.120.22)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@mon02.superopsmsb.com

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'mon02.superopsmsb.com (192.168.120.22)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@192.168.120.23

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.23 (192.168.120.23)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadm@192.168.120.23's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadm@192.168.120.23'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@mon03

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'mon03 (192.168.120.23)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@mon03.superopsmsb.com

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'mon03.superopsmsb.com (192.168.120.23)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@192.168.120.24

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.24 (192.168.120.24)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadm@192.168.120.24's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadm@192.168.120.24'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@stor24

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor24 (192.168.120.24)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@stor24.superopsmsb.com

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor24.superopsmsb.com (192.168.120.24)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@192.168.120.25

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.25 (192.168.120.25)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadm@192.168.120.25's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadm@192.168.120.25'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@stor25

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor25 (192.168.120.25)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@stor25.superopsmsb.com

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor25.superopsmsb.com (192.168.120.25)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@192.168.120.26

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host '192.168.120.26 (192.168.120.26)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

cephadm@192.168.120.26's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'cephadm@192.168.120.26'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@stor26

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor26 (192.168.120.26)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

spawn /usr/bin/ssh-copy-id -i /home/cephadm/.ssh/id_rsa.pub cephadm@stor26.superopsmsb.com

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor26.superopsmsb.com (192.168.120.26)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

Batch passwordless SSH setup for remote hosts: management menu

=====================================================

1: Deploy environment 2: Passwordless authentication 3: Exit

=====================================================

Please enter a valid operation id: 3

Exiting the management menu...

Test the result

[cephadm@admin ~]$ for i in {20..26}; do hostname=$(ssh cephadm@192.168.120.$i "hostname"); echo "192.168.120.$i - $hostname"; done

192.168.120.20 - admin

192.168.120.21 - mon01

192.168.120.22 - mon02

192.168.120.23 - mon03

192.168.120.24 - stor24

192.168.120.25 - stor25

192.168.120.26 - stor26
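The loop above still stops and asks for a password if a key is missing on some node. A minimal sketch of a stricter check, assuming the same cephadm user and addresses, uses ssh's BatchMode option so a missing key is reported as a failure instead of a prompt. The function name check_passwordless and the SSH override variable are illustrative, not part of the author's script:

```shell
#!/usr/bin/env bash
# Sketch: verify key-based SSH login to each node without ever prompting.
# BatchMode=yes makes ssh fail instead of asking for a password, so a node
# whose key is missing is reported rather than stalling the loop.
# SSH is overridable (defaults to ssh) only so the function can be tested.
check_passwordless() {
    local ip out failed=0
    for ip in "$@"; do
        if out=$("${SSH:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "cephadm@$ip" hostname 2>/dev/null); then
            echo "$ip - $out"
        else
            echo "$ip - passwordless login FAILED" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

Usage: check_passwordless 192.168.120.{20..26}. A non-zero exit status means at least one node still needs ssh-copy-id.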

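Because 192.168.120.21-23 play two roles (mon01-mon03 and stor21-stor23), passwordless authentication must also be set up for the stor hostnames of those nodes: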

[cephadm@admin ~]$ num_list={1..3}

[cephadm@admin ~]$ for i in $(eval echo stor2$num_list stor2$num_list.superopsmsb.com); do ssh-copy-id cephadm@$i; done

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor21 (192.168.120.21)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor22 (192.168.120.22)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor23 (192.168.120.23)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor21.superopsmsb.com (192.168.120.21)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor22.superopsmsb.com (192.168.120.22)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/cephadm/.ssh/id_rsa.pub"

The authenticity of host 'stor23.superopsmsb.com (192.168.120.23)' can't be established.

ECDSA key fingerprint is SHA256:KpRuS62f0QpNftthTCb49/KRM4z1//3xjP4JXtuSSFI.

ECDSA key fingerprint is MD5:5a:da:83:fb:58:c5:a1:45:1a:e4:9b:f9:d0:f1:30:25.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)
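The num_list/eval construction above works because eval re-parses the literal string {1..3} stored in the variable. A simpler sketch, assuming the same stor21-stor23 aliases and the superopsmsb.com domain, uses brace expansion directly and needs no eval; stor_aliases is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: generate the stor21-stor23 aliases (short names first, then FQDNs)
# with plain brace expansion instead of storing "{1..3}" in a variable and
# re-parsing it with eval. DOMAIN is an assumption taken from this article.
DOMAIN="${DOMAIN:-superopsmsb.com}"

stor_aliases() {
    printf '%s\n' stor2{1..3} stor2{1..3}."$DOMAIN"
}

# Usage (interactive, ssh-copy-id may still ask to confirm host keys):
#   for h in $(stor_aliases); do ssh-copy-id "cephadm@$h"; done
```

The order of the generated names matches the order used in the transcript above.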

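Customizing the package repository

For a ceph-deploy based deployment, the official Ceph repository is http://download.ceph.com/, which hosts all Ceph releases (Octopus, Pacific, Quincy and so on). Packages are organized per OS under rpm-<release> or debian-<release> directories; on RPM systems the repository package lives in the noarch subdirectory. For example, the Pacific repository package is at: rpm-pacific/el8/noarch/ceph-release-1-1.el8.noarch.rpm

Note:

- el7: packages for Red Hat 7.x and CentOS 7.x

- el8: packages for Red Hat 8.x and CentOS 8.x

- Pacific and later releases support only CentOS 8.x

Pacific and Quincy are therefore unavailable on CentOS 7. Octopus does ship CentOS 7 builds, but the package set is incomplete and it requires newer GCC and GLIBC libraries; upgrading those base libraries on CentOS 7 can break other software, and there is no matching ceph-deploy. So on CentOS 7 only Nautilus and earlier releases can be deployed.

On Ubuntu, the Ceph releases have somewhat different base requirements, but in testing this caused no real problems, so Ubuntu can run the whole Ceph release series.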
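The repository layout described above (rpm-<release>/el<N>/noarch/ceph-release-1-1.el<N>.noarch.rpm) and the CentOS 7 limitation can be captured in a small helper. This is a sketch under assumptions: the function name ceph_release_rpm_url and the MIRROR variable are illustrative, the default mirror is the Aliyun one used below, and the package file name follows the two examples in this article:

```shell
#!/usr/bin/env bash
# Sketch: build the ceph-release RPM URL for a release and EL version,
# following the rpm-<release>/el<N>/noarch/ layout described above.
# MIRROR is an assumption; the default is the Aliyun mirror used in this article.
MIRROR="${MIRROR:-https://mirrors.aliyun.com/ceph}"

ceph_release_rpm_url() {
    local release="$1" el="$2"
    # Per the notes above, only Nautilus and older are practical on EL7.
    if [ "$el" = "7" ]; then
        case "$release" in
            octopus|pacific|quincy|reef)
                echo "error: $release is not deployable on el7 in this setup" >&2
                return 1
                ;;
        esac
    fi
    echo "${MIRROR}/rpm-${release}/el${el}/noarch/ceph-release-1-1.el${el}.noarch.rpm"
}
```

Usage: yum install -y "$(ceph_release_rpm_url nautilus 7)" reproduces the install command shown next.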

Install the repository package

yum install -y https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/ceph-release-1-1.el7.noarch.rpm

Refresh the repository cache

yum makecache fast

Deploy the repository on all Ceph nodes (run this on the admin node only):

for i in {20..26}

do

ssh root@192.168.120.$i yum install -y https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/ceph-release-1-1.el7.noarch.rpm

ssh root@192.168.120.$i yum makecache fast

done

[root@admin ~]# for i in {20..26}

> do

> ssh root@192.168.120.$i yum install -y https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/ceph-release-1-1.el7.noarch.rpm

> ssh root@192.168.120.$i yum makecache fast

> done

Loaded plugins: fastestmirror, langpacks

Examining /var/tmp/yum-root-zoFbmV/ceph-release-1-1.el7.noarch.rpm: ceph-release-1-1.el7.noarch

Marking /var/tmp/yum-root-zoFbmV/ceph-release-1-1.el7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package ceph-release.noarch 0:1-1.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

ceph-release noarch 1-1.el7 /ceph-release-1-1.el7.noarch 544

Transaction Summary

================================================================================

Install 1 Package

Total size: 544

Installed size: 544

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : ceph-release-1-1.el7.noarch 1/1

Verifying : ceph-release-1-1.el7.noarch 1/1

Installed:

ceph-release.noarch 0:1-1.el7

Complete!

......
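The loop above keeps going even when yum fails on a node, and the error is easy to miss in the long output. A hedged sketch of a wrapper that reports which node failed, assuming the same root SSH access to 192.168.120.20-26; run_on_all and DRY_RUN are illustrative names:

```shell
#!/usr/bin/env bash
# Sketch: run the same command on every node as root and report failures,
# instead of silently continuing like the plain for-loop above.
# Assumptions: root SSH access to 192.168.120.20-26 (as in the loop above);
# DRY_RUN=1 only prints what would be executed.
run_on_all() {
    local failed=0 i
    for i in {20..26}; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh root@192.168.120.$i $*"
        elif ! ssh "root@192.168.120.$i" "$@"; then
            echo "FAILED on 192.168.120.$i: $*" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

For example, run_on_all yum makecache fast refreshes the cache on every node and exits non-zero if any node failed; prefixing DRY_RUN=1 shows the commands without running them.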

Install dependencies: the Ceph deployment tool is installed on the admin node only

yum update -y

yum install ceph-deploy python-setuptools python2-subprocess32 -y

Test the result

su - cephadm -c "ceph-deploy --help"

Command breakdown

View the command help

[root@admin ~]# su - cephadm

Last login: Tue Apr 2 15:16:12 CST 2024 on pts/0

[cephadm@admin ~]$ ceph-deploy --help

usage: ceph-deploy [-h] [-v | -q] [--version] [--username USERNAME] [--overwrite-conf] [--ceph-conf CEPH_CONF] COMMAND ...

Easy Ceph deployment

-^-/ \|O o| ceph-deploy v2.0.1).-.('/|||\`| '|` |'|`

Full documentation can be found at: http://ceph.com/ceph-deploy/docs

optional arguments:

-h, --help show this help message and exit

-v, --verbose be more verbose

-q, --quiet be less verbose

--version the current installed version of ceph-deploy

--username USERNAME the username to connect to the remote host

--overwrite-conf overwrite an existing conf file on remote host (if present)

--ceph-conf CEPH_CONF use (or reuse) a given ceph.conf file

commands:

COMMAND description

new Start deploying a new cluster, and write a CLUSTER.conf and keyring for it. # create a cluster

install Install Ceph packages on remote hosts.

rgw Ceph RGW daemon management

mgr Ceph MGR daemon management

mds Ceph MDS daemon management

mon Ceph MON Daemon management

gatherkeys Gather authentication keys for provisioning new nodes.

disk Manage disks on a remote host.

osd Prepare a data disk on remote host.

repo Repo definition management

admin Push configuration and client.admin key to a remote host. # sync the admin key

config Copy ceph.conf to/from remote host(s) # sync the ceph.conf file

uninstall Remove Ceph packages from remote hosts.

purgedata Purge (delete, destroy, discard, shred) any Ceph data from /var/lib/ceph

purge Remove Ceph packages from remote hosts and purge all data.

forgetkeys Remove authentication keys from the local directory.

pkg Manage packages on remote hosts.

calamari Install and configure Calamari nodes. Assumes that a repository with Calamari packages is already configured. Refer to the docs for examples (http://ceph.com/ceph-deploy/docs/conf.html)

See 'ceph-deploy <command> --help' for help on a specific command

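1.2.3 Summary

1.3 Ceph Deployment

Learning objectives: this section covers three parts: cluster creation, Mon deployment, and a summary.

1.3.1 Cluster Creation

Preparation

First, on the admin node, create a directory for the cluster configuration files as the cephadm user: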

su - cephadm

mkdir ceph-cluster && cd ceph-cluster

Cluster initialization explained

Operation breakdown

ceph-deploy new --help

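The command format for initializing the first MON node is: "ceph-deploy new {initial-monitor-node(s)}"

- mon01 is the name of the first MON node; it must match the hostname the node actually uses (uname -n)

- Either the short name or the FQDN can be given, and the short name is what is ultimately used, but passing only one form can lead to the error: ceph-deploy new: error: hostname: xxx is not resolvable

- The full form hostname:fqdn is recommended, e.g. mon01:mon01.superopsmsb.com

Note:

- To deploy several nodes at initialization time, separate the hostname:fqdn entries with spaces

- If something goes wrong during deployment, clean up with the commands below (purge also removes the Ceph packages from the node):

- ceph-deploy forgetkeys

- ceph-deploy purge mon01

- ceph-deploy purgedata mon01

- rm ceph.*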
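Because the node names must match uname -n and the hostname:fqdn form is recommended, the argument list for ceph-deploy new can be generated from the short names so the two forms never drift apart. A minimal sketch, assuming the superopsmsb.com domain used here; mon_specs is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: build the "hostname:fqdn" arguments recommended above for
# `ceph-deploy new` from the short hostnames.
# DOMAIN is an assumption taken from this article's hostnames.
DOMAIN="${DOMAIN:-superopsmsb.com}"

mon_specs() {
    local h specs=()
    for h in "$@"; do
        specs+=("$h:$h.$DOMAIN")
    done
    echo "${specs[*]}"
}

# Usage (unquoted on purpose so each spec becomes a separate argument):
#   ceph-deploy new --public-network 192.168.120.0/24 \
#       --cluster-network 192.168.8.0/24 $(mon_specs mon01 mon02 mon03) --no-ssh-copykey
```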

Cluster initialization

Deploy the 3 mon nodes. Run this on the admin node only, after switching to the cephadm user:

ceph-deploy new --public-network 192.168.120.0/24 --cluster-network 192.168.8.0/24 mon01:mon01.superopsmsb.com mon02:mon02.superopsmsb.com mon03:mon03.superopsmsb.com --no-ssh-copykey

[cephadm@admin ceph-cluster]$ ceph-deploy new --public-network 192.168.120.0/24 --cluster-network 192.168.8.0/24 mon01:mon01.superopsmsb.com mon02:mon02.superopsmsb.com mon03:mon03.superopsmsb.com --no-ssh-copykey

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy new --public-network 192.168.120.0/24 --cluster-network 192.168.8.0/24 mon01:mon01.superopsmsb.com mon02:mon02.superopsmsb.com mon03:mon03.superopsmsb.com --no-ssh-copykey

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func : <function new at 0x11bd140>

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x121cd88>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : False

[ceph_deploy.cli][INFO ] mon : ['mon01:mon01.superopsmsb.com', 'mon02:mon02.superopsmsb.com', 'mon03:mon03.superopsmsb.com']

[ceph_deploy.cli][INFO ] public_network : 192.168.120.0/24

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : 192.168.8.0/24

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[mon01.superopsmsb.com][DEBUG ] connection detected need for sudo

[mon01.superopsmsb.com][DEBUG ] connected to host: mon01.superopsmsb.com

[mon01.superopsmsb.com][DEBUG ] detect platform information from remote host

[mon01.superopsmsb.com][DEBUG ] detect machine type

[mon01.superopsmsb.com][DEBUG ] find the location of an executable

[mon01.superopsmsb.com][INFO ] Running command: sudo /usr/sbin/ip link show

[mon01.superopsmsb.com][INFO ] Running command: sudo /usr/sbin/ip addr show

[mon01.superopsmsb.com][DEBUG ] IP addresses found: [u'192.168.8.21', u'192.168.120.21']

[ceph_deploy.new][DEBUG ] Resolving host mon01.superopsmsb.com

[ceph_deploy.new][DEBUG ] Monitor mon01 at 192.168.120.21

[mon02.superopsmsb.com][DEBUG ] connection detected need for sudo

[mon02.superopsmsb.com][DEBUG ] connected to host: mon02.superopsmsb.com

[mon02.superopsmsb.com][DEBUG ] detect platform information from remote host

[mon02.superopsmsb.com][DEBUG ] detect machine type

[mon02.superopsmsb.com][DEBUG ] find the location of an executable

[mon02.superopsmsb.com][INFO ] Running command: sudo /usr/sbin/ip link show

[mon02.superopsmsb.com][INFO ] Running command: sudo /usr/sbin/ip addr show

[mon02.superopsmsb.com][DEBUG ] IP addresses found: [u'192.168.120.22', u'192.168.8.22']

[ceph_deploy.new][DEBUG ] Resolving host mon02.superopsmsb.com

[ceph_deploy.new][DEBUG ] Monitor mon02 at 192.168.120.22

[mon03.superopsmsb.com][DEBUG ] connection detected need for sudo

[mon03.superopsmsb.com][DEBUG ] connected to host: mon03.superopsmsb.com

[mon03.superopsmsb.com][DEBUG ] detect platform information from remote host

[mon03.superopsmsb.com][DEBUG ] detect machine type

[mon03.superopsmsb.com][DEBUG ] find the location of an executable

[mon03.superopsmsb.com][INFO ] Running command: sudo /usr/sbin/ip link show

[mon03.superopsmsb.com][INFO ] Running command: sudo /usr/sbin/ip addr show

[mon03.superopsmsb.com][DEBUG ] IP addresses found: [u'192.168.120.23', u'192.168.8.23']

[ceph_deploy.new][DEBUG ] Resolving host mon03.superopsmsb.com

[ceph_deploy.new][DEBUG ] Monitor mon03 at 192.168.120.23

[ceph_deploy.new][DEBUG ] Monitor initial members are ['mon01', 'mon02', 'mon03']

[ceph_deploy.new][DEBUG ] Monitor addrs are [u'192.168.120.21', u'192.168.120.22', u'192.168.120.23']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

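Note: if you see the following error:

[ceph_deploy][ERROR ] AttributeError: 'module' object has no attribute 'needs_ssh'

add the --no-ssh-copykey option to the command. This is mainly caused by not having run ssh cephadm@<hostname> when passwordless authentication was set up.

View the result

View the files created by initialization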

[cephadm@admin ceph-cluster]$ ll

total 16

-rw-rw-r--. 1 cephadm cephadm 308 Apr 2 15:36 ceph.conf

-rw-rw-r--. 1 cephadm cephadm 5313 Apr 2 15:36 ceph-deploy-ceph.log

-rw-------. 1 cephadm cephadm 73 Apr 2 15:36 ceph.mon.keyring

View the cluster configuration file

[cephadm@admin ceph-cluster]$ cat ceph.conf

[global]

fsid = 76cc0714-0bd7-43f7-b7c3-ec8cae2819e7 # Important: this value is different on every run; do not change it

public_network = 192.168.120.0/24

cluster_network = 192.168.8.0/24

mon_initial_members = mon01, mon02, mon03

mon_host = 192.168.120.21,192.168.120.22,192.168.120.23

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

View the keyring used for cluster communication authentication

[cephadm@admin ceph-cluster]$ cat ceph.mon.keyring

[mon.]

key = AQCZtQtmAAAAABAAGCEERe8csxRdaVWzgtq0mQ==

caps mon = allow *

View the cluster initialization log

[cephadm@admin ceph-cluster]$ cat ceph-deploy-ceph.log

[2024-04-02 15:36:55,425][ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[2024-04-02 15:36:55,425][ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy new --public-network 192.168.120.0/24 --cluster-network 192.168.8.0/24 mon01:mon01.superopsmsb.com mon02:mon02.superopsmsb.com mon03:mon03.superopsmsb.com --no-ssh-copykey

[2024-04-02 15:36:55,425][ceph_deploy.cli][INFO ] ceph-deploy options:

......
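Before going further, the generated ceph.conf can be sanity-checked. This sketch assumes the underscore key spellings ceph-deploy wrote above (fsid, mon_initial_members, mon_host) and only checks that an fsid exists and that the number of initial members matches the number of monitor addresses; check_ceph_conf is a hypothetical name:

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a ceph.conf produced by `ceph-deploy new`.
# Only the key spellings shown in the generated file above are handled;
# other forms such as "mon host" (with a space) are not recognized.
check_ceph_conf() {
    local conf="${1:-ceph.conf}"
    awk -F' *= *' '
        $1 == "fsid"                { fsid = $2 }
        $1 == "mon_initial_members" { n_members = split($2, a, / *, */) }
        $1 == "mon_host"            { n_hosts   = split($2, b, / *, */) }
        END {
            if (fsid == "")           { print "missing fsid"; exit 1 }
            if (n_members != n_hosts) { print "members/hosts mismatch: " n_members " vs " n_hosts; exit 1 }
            print "ok: " n_members " monitors, fsid " fsid
        }' "$conf"
}
```

Run it as check_ceph_conf ~/ceph-cluster/ceph.conf; for the file above it would report three monitors.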

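1.3.2 Deploying Mon

Deploy the mon software

Operation breakdown: ceph-deploy can connect remotely to each Ceph node to install packages and perform other operations.

Command format: ceph-deploy install {ceph-node} [{ceph-node} ...]

Example: ceph-deploy install --release nautilus --nogpgcheck admin mon01 mon02 mon03 (used in this tutorial)

Note:

- The nodes listed here are mainly the ones that take a Ceph role

- In general, installing directly this way is not recommended: it is slow and can interfere with other software on the hosts

- Without --release nautilus, the installed version usually does not match the version configured in the yum repository

Note: the command above performs a full installation on every node. An alternative (recommended) is to install the Ceph packages manually on all nodes. If the installation below fails, the yum repository is probably not configured correctly; in that case run ceph-deploy install {ceph-node} [{ceph-node} ...], which configures the yum repository automatically and installs the packages.

yum install -y ceph ceph-osd ceph-mds ceph-mon ceph-radosgw

Pinning the version is recommended:

yum install -y ceph-14.2.22 ceph-osd-14.2.22 ceph-mds-14.2.22 ceph-mon-14.2.22 ceph-radosgw-14.2.22

Finally, run the following on the admin host (--no-adjust-repos installs without modifying the existing repository configuration):

ceph-deploy install --release nautilus --no-adjust-repos --nogpgcheck admin mon01 mon02 mon03

Execution output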

[cephadm@admin ceph-cluster]$ ceph-deploy install --release nautilus --no-adjust-repos --nogpgcheck admin mon01 mon02 mon03

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy install --release nautilus --no-adjust-repos --nogpgcheck admin mon01 mon02 mon03

[ceph_deploy.cli][INFO ] ceph-deploy options:

......

[mon03][INFO ] Running command: sudo ceph --version

[mon03][DEBUG ] ceph version 14.2.22 (ca74598065096e6fcbd8433c8779a2be0c889351) nautilus (stable)
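The manual, version-pinned installation recommended above can be scripted so every node gets exactly the same packages. A sketch under assumptions: root SSH access to the nodes, and the 14.2.22 version and package list from the yum command above; pinned_pkgs, install_pinned and DRY_RUN are illustrative names:

```shell
#!/usr/bin/env bash
# Sketch: install the same version-pinned packages on every Ceph node so no
# node ends up on a different point release.
# CEPH_VERSION, the package list and root SSH access are assumptions taken
# from this article; DRY_RUN=1 only prints the commands.
CEPH_VERSION="${CEPH_VERSION:-14.2.22}"

pinned_pkgs() {
    local p pkgs=()
    for p in ceph ceph-osd ceph-mds ceph-mon ceph-radosgw; do
        pkgs+=("$p-$CEPH_VERSION")
    done
    echo "${pkgs[*]}"
}

install_pinned() {
    local node failed=0
    for node in "$@"; do
        # $(pinned_pkgs) is unquoted on purpose: each package is a separate argument.
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh root@$node yum install -y $(pinned_pkgs)"
        elif ! ssh "root@$node" yum install -y $(pinned_pkgs); then
            echo "FAILED on $node" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

After install_pinned admin mon01 mon02 mon03 succeeds, the ceph-deploy install --no-adjust-repos step should find the packages already present.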

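Cluster communication authentication

Configure the initial MON nodes, sync the configuration to the nodes and gather the keys:

ceph-deploy mon create-initial

Note: to avoid communication failures caused by authentication problems, especially on an existing environment, the --overwrite-conf option is recommended:

ceph-deploy --overwrite-conf config push mon01 mon02 mon03

Execution output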

[cephadm@admin ceph-cluster]$ ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mon create-initial

[ceph_deploy.cli][INFO ] ceph-deploy options:

......

[mon01][DEBUG ] ********************************************************************************

[mon01][DEBUG ] status for monitor: mon.mon01

[mon01][DEBUG ] {

[mon01][DEBUG ] "election_epoch": 0,

[mon01][DEBUG ] "extra_probe_peers": [

[mon01][DEBUG ] {

[mon01][DEBUG ] "addrvec": [

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "192.168.120.22:3300",

[mon01][DEBUG ] "nonce": 0,

[mon01][DEBUG ] "type": "v2"

[mon01][DEBUG ] },

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "192.168.120.22:6789",

[mon01][DEBUG ] "nonce": 0,

[mon01][DEBUG ] "type": "v1"

[mon01][DEBUG ] }

[mon01][DEBUG ] ]

[mon01][DEBUG ] },

[mon01][DEBUG ] {

[mon01][DEBUG ] "addrvec": [

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "192.168.120.23:3300",

[mon01][DEBUG ] "nonce": 0,

[mon01][DEBUG ] "type": "v2"

[mon01][DEBUG ] },

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "192.168.120.23:6789",

[mon01][DEBUG ] "nonce": 0,

[mon01][DEBUG ] "type": "v1"

[mon01][DEBUG ] }

[mon01][DEBUG ] ]

[mon01][DEBUG ] }

[mon01][DEBUG ] ],

[mon01][DEBUG ] "feature_map": {

[mon01][DEBUG ] "mon": [

[mon01][DEBUG ] {

[mon01][DEBUG ] "features": "0x3ffddff8ffecffff",

[mon01][DEBUG ] "num": 1,

[mon01][DEBUG ] "release": "luminous"

[mon01][DEBUG ] }

[mon01][DEBUG ] ]

[mon01][DEBUG ] },

[mon01][DEBUG ] "features": {

[mon01][DEBUG ] "quorum_con": "0",

[mon01][DEBUG ] "quorum_mon": [],

[mon01][DEBUG ] "required_con": "0",

[mon01][DEBUG ] "required_mon": []

[mon01][DEBUG ] },

[mon01][DEBUG ] "monmap": {

[mon01][DEBUG ] "created": "2024-04-02 16:30:57.060040",

[mon01][DEBUG ] "epoch": 0,

[mon01][DEBUG ] "features": {

[mon01][DEBUG ] "optional": [],

[mon01][DEBUG ] "persistent": []

[mon01][DEBUG ] },

[mon01][DEBUG ] "fsid": "76cc0714-0bd7-43f7-b7c3-ec8cae2819e7",

[mon01][DEBUG ] "min_mon_release": 0,

[mon01][DEBUG ] "min_mon_release_name": "unknown",

[mon01][DEBUG ] "modified": "2024-04-02 16:30:57.060040",

[mon01][DEBUG ] "mons": [

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "192.168.120.21:6789/0",

[mon01][DEBUG ] "name": "mon01",

[mon01][DEBUG ] "public_addr": "192.168.120.21:6789/0",

[mon01][DEBUG ] "public_addrs": {

[mon01][DEBUG ] "addrvec": [

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "192.168.120.21:3300",

[mon01][DEBUG ] "nonce": 0,

[mon01][DEBUG ] "type": "v2"

[mon01][DEBUG ] },

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "192.168.120.21:6789",

[mon01][DEBUG ] "nonce": 0,

[mon01][DEBUG ] "type": "v1"

[mon01][DEBUG ] }

[mon01][DEBUG ] ]

[mon01][DEBUG ] },

[mon01][DEBUG ] "rank": 0

[mon01][DEBUG ] },

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "0.0.0.0:0/1",

[mon01][DEBUG ] "name": "mon02",

[mon01][DEBUG ] "public_addr": "0.0.0.0:0/1",

[mon01][DEBUG ] "public_addrs": {

[mon01][DEBUG ] "addrvec": [

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "0.0.0.0:0",

[mon01][DEBUG ] "nonce": 1,

[mon01][DEBUG ] "type": "v1"

[mon01][DEBUG ] }

[mon01][DEBUG ] ]

[mon01][DEBUG ] },

[mon01][DEBUG ] "rank": 1

[mon01][DEBUG ] },

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "0.0.0.0:0/2",

[mon01][DEBUG ] "name": "mon03",

[mon01][DEBUG ] "public_addr": "0.0.0.0:0/2",

[mon01][DEBUG ] "public_addrs": {

[mon01][DEBUG ] "addrvec": [

[mon01][DEBUG ] {

[mon01][DEBUG ] "addr": "0.0.0.0:0",

[mon01][DEBUG ] "nonce": 2,

[mon01][DEBUG ] "type": "v1"

[mon01][DEBUG ] }

[mon01][DEBUG ] ]

[mon01][DEBUG ] },

[mon01][DEBUG ] "rank": 2

[mon01][DEBUG ] }

[mon01][DEBUG ] ]

[mon01][DEBUG ] },

[mon01][DEBUG ] "name": "mon01",

[mon01][DEBUG ] "outside_quorum": [

[mon01][DEBUG ] "mon01"

[mon01][DEBUG ] ],

[mon01][DEBUG ] "quorum": [],

[mon01][DEBUG ] "rank": 0,

[mon01][DEBUG ] "state": "probing",

[mon01][DEBUG ] "sync_provider": []

[mon01][DEBUG ] }

[mon01][DEBUG ] ********************************************************************************

[mon01][INFO ] monitor: mon.mon01 is running

[mon01][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.mon01.asok mon_status

......

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmpFs9N2l

[cephadm@admin ceph-cluster]$ ceph-deploy --overwrite-conf config push mon01 mon02 mon03

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy --overwrite-conf config push mon01 mon02 mon03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : push

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x1c93440>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['mon01', 'mon02', 'mon03']

[ceph_deploy.cli][INFO ] func : <function config at 0x1c6fed8>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.config][DEBUG ] Pushing config to mon01

[mon01][DEBUG ] connection detected need for sudo

[mon01][DEBUG ] connected to host: mon01

[mon01][DEBUG ] detect platform information from remote host

[mon01][DEBUG ] detect machine type

[mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to mon02

[mon02][DEBUG ] connection detected need for sudo

[mon02][DEBUG ] connected to host: mon02

[mon02][DEBUG ] detect platform information from remote host

[mon02][DEBUG ] detect machine type

[mon02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to mon03

[mon03][DEBUG ] connection detected need for sudo

[mon03][DEBUG ] connected to host: mon03

[mon03][DEBUG ] detect platform information from remote host

[mon03][DEBUG ] detect machine type

[mon03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

Check the mon daemons on the mon nodes

for i in {20..23}; do ssh cephadm@192.168.120.$i "ps aux | grep -v grep | grep ceph-mon"; done

[cephadm@admin ceph-cluster]$ for i in {20..23}; do ssh cephadm@192.168.120.$i "ps aux | grep -v grep | grep ceph-mon"; done

ceph 5608 0.2 1.7 501720 34636 ? Ssl 16:30 0:00 /usr/bin/ceph-mon -f --cluster ceph --id mon01 --setuser ceph --setgroup ceph

ceph 5435 0.1 1.6 500700 32772 ? Ssl 16:31 0:00 /usr/bin/ceph-mon -f --cluster ceph --id mon02 --setuser ceph --setgroup ceph

ceph 5459 0.1 1.4 501724 30152 ? Ssl 16:31 0:00 /usr/bin/ceph-mon -f --cluster ceph --id mon03 --setuser ceph --setgroup ceph

Result: each of the three mon nodes (192.168.120.21-23) runs a ceph-mon process; the admin node (192.168.120.20) runs none.
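Instead of reading the ps lines by eye, the output of the loop above can be checked for every expected monitor id. A minimal sketch, assuming the --id <name> argument format shown in the ps output; check_mon_ids is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: confirm that every expected monitor id appears in ps output.
# The ps lines are read from stdin; expected ids are given as arguments.
check_mon_ids() {
    local found id missing=0
    # Extract the value following "--id" from each ceph-mon command line.
    found=$(grep -o -- '--id [^ ]*' | awk '{print $2}')
    for id in "$@"; do
        if ! grep -qxF -- "$id" <<<"$found"; then
            echo "missing: $id" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage:
#   for i in {21..23}; do ssh cephadm@192.168.120.$i "ps aux | grep -v grep | grep ceph-mon"; done \
#       | check_mon_ids mon01 mon02 mon03
```

On the nodes themselves, systemctl status ceph-mon@mon01 is another way to check, assuming ceph-deploy registered the daemon under the ceph-mon@<id> systemd unit.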

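During initialization, the cluster generates the matching authentication files for the mon nodes, and ceph-deploy stores them in the working directory on the admin node: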

[cephadm@admin ceph-cluster]$ ll /home/cephadm/ceph-cluster

total 604

-rw-------. 1 cephadm cephadm 113 Apr 2 16:31 ceph.bootstrap-mds.keyring

-rw-------. 1 cephadm cephadm 113 Apr 2 16:31 ceph.bootstrap-mgr.keyring

-rw-------. 1 cephadm cephadm 113 Apr 2 16:31 ceph.bootstrap-osd.keyring

-rw-------. 1 cephadm cephadm 113 Apr 2 16:31 ceph.bootstrap-rgw.keyring

-rw-------. 1 cephadm cephadm 151 Apr 2 16:31 ceph.client.admin.keyring

-rw-rw-r--. 1 cephadm cephadm 308 Apr 2 15:36 ceph.conf

-rw-rw-r--. 1 cephadm cephadm 534170 Apr 2 16:35 ceph-deploy-ceph.log

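-rw-------. 1 cephadm cephadm 73 Apr 2 15:36 ceph.mon.keyring

Result: a set of authentication files for the Ceph cluster has been generated:

- ceph.bootstrap-mds.keyring: key used to bootstrap mds

- ceph.bootstrap-mgr.keyring: key used to bootstrap mgr

- ceph.bootstrap-osd.keyring: key used to bootstrap osd

- ceph.bootstrap-rgw.keyring: key used to bootstrap rgw

- ceph.client.admin.keyring: the authentication key for communication between Ceph clients and the management side; this is the most important one

Note: ceph.client.admin.keyring grants full privileges over the Ceph cluster, so it must be handled with great care.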
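Since these keyrings, ceph.client.admin.keyring above all, grant cluster access, it is worth confirming they stay at the 600 mode (-rw-------) shown in the listing. A sketch assuming GNU stat, as on CentOS; check_keyring_modes is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: flag any *.keyring file in a directory whose mode is not 600.
# Uses GNU stat (-c %a); BSD/macOS stat takes different options.
check_keyring_modes() {
    local dir="${1:-.}" f mode bad=0
    for f in "$dir"/*.keyring; do
        [ -e "$f" ] || continue   # no keyrings: the glob stays unexpanded
        mode=$(stat -c %a "$f")
        if [ "$mode" != "600" ]; then
            echo "insecure mode $mode: $f" >&2
            bad=1
        fi
    done
    return "$bad"
}
```

Usage: check_keyring_modes ~/ceph-cluster. A non-zero exit status lists the files to fix with chmod 600.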

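1.3.3 Summary

1.4 Ceph Deployment 2

Learning objectives: this section covers three parts: Mon authentication, the Mgr environment, and a summary.

1.4.1 Mon Authentication

To make later authenticated operations on the monitor nodes easier, copy the configuration file and the admin key from the admin host to each Ceph mon node. Before the key files are copied, the mon nodes look like this:

for i in {21..23}; do ssh cephadm@192.168.120.$i "echo -----$i-----; ls /etc/ceph"; done

[cephadm@admin ceph-cluster]$ for i in {21..23}; do ssh cephadm@192.168.120.$i "echo -----$i-----; sudo ls /etc/ceph"; done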

-----21-----

ceph.conf

rbdmap

tmpcz8PTU

-----22-----

ceph.conf

rbdmap

tmpSsFhkz

-----23-----

ceph.conf

rbdmap

tmpJ9VgjO

In principle, ceph.conf must be identical on all mon nodes; if it differs, sync it with:

ceph-deploy --overwrite-conf config push mon01 mon02 mon03

Then copy the cluster authentication files:

ceph-deploy admin mon01 mon02 mon03

Sync the authentication files

[cephadm@admin ceph-cluster]$ ceph-deploy admin mon01 mon02 mon03

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy admin mon01 mon02 mon03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x2284248>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['mon01', 'mon02', 'mon03']

[ceph_deploy.cli][INFO ] func : <function admin at 0x21ea500>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to mon01

[mon01][DEBUG ] connection detected need for sudo

[mon01][DEBUG ] connected to host: mon01

[mon01][DEBUG ] detect platform information from remote host

[mon01][DEBUG ] detect machine type

[mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to mon02

[mon02][DEBUG ] connection detected need for sudo

[mon02][DEBUG ] connected to host: mon02

[mon02][DEBUG ] detect platform information from remote host

[mon02][DEBUG ] detect machine type

[mon02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to mon03

[mon03][DEBUG ] connection detected need for sudo

[mon03][DEBUG ] connected to host: mon03

[mon03][DEBUG ] detect platform information from remote host

[mon03][DEBUG ] detect machine type

[mon03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

Check the result:

[cephadm@admin ceph-cluster]$ for i in {21..23}; do ssh cephadm@192.168.120.$i "echo -----$i-----; ls /etc/ceph"; done

-----21-----

ceph.client.admin.keyring

ceph.conf

rbdmap

tmpcz8PTU

-----22-----

ceph.client.admin.keyring

ceph.conf

rbdmap

tmpSsFhkz

-----23-----

ceph.client.admin.keyring

ceph.conf

rbdmap

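tmpJ9VgjO

Result: every mon node now has an additional key file, ceph.client.admin.keyring, which is mainly used to authenticate communication between the Ceph client and the management side. Note: if you do not plan to run commands interactively on these nodes, this file does not need to be copied.

Keyring file permissions

Although the keyring has been distributed to the monitor hosts, we work with the cluster as the unprivileged user cephadm. By default, cephadm cannot read the copied keyring, which is owned by root with mode 600:

[cephadm@admin ceph-cluster]$ for i in {21..23}; do ssh cephadm@192.168.120.$i "echo -----$i-----;ls -l /etc/ceph/ceph.cl*"; done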
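Because ceph-deploy admin also writes /etc/ceph/ceph.conf on each node, it is worth confirming that the copies really are identical. A minimal sketch, assuming md5sum is available on every mon node; the helper name same_checksums is hypothetical. It reads md5sum-style "checksum path" lines from standard input and succeeds only when all checksums match.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads md5sum-style "checksum  path" lines on stdin and
# succeeds only when every checksum is the same.
same_checksums() {
  local distinct
  # Count the distinct values in the first (checksum) column.
  distinct=$(awk '{print $1}' | sort -u | wc -l)
  if [[ $distinct -eq 1 ]]; then
    echo "ceph.conf identical"
  else
    echo "ceph.conf differs: $((distinct)) distinct version(s)" >&2
    return 1
  fi
}
```

Usage from the admin host: for i in {21..23}; do ssh cephadm@192.168.120.$i md5sum /etc/ceph/ceph.conf; done | same_checksums. If it reports a difference, push the configuration again with ceph-deploy --overwrite-conf config push mon01 mon02 mon03.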

-----21-----

-rw-------. 1 root root 151 Apr 2 17:07 /etc/ceph/ceph.client.admin.keyring

-----22-----

-rw-------. 1 root root 151 Apr 2 17:07 /etc/ceph/ceph.client.admin.keyring

-----23-----

-rw-------. 1 root root 151 Apr 2 17:07 /etc/ceph/ceph.client.admin.keyring

As a result, running ceph as cephadm on mon01 fails:

[root@stor21 ceph]# su - cephadm

[cephadm@mon01 ~]$ ceph -s

2024-04-02 17:21:10.541 7f1e2f5a6700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

2024-04-02 17:21:10.541 7f1e2f5a6700 -1 AuthRegistry(0x7f1e28066a68) no keyring found at /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx

2024-04-02 17:21:10.549 7f1e2f5a6700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

2024-04-02 17:21:10.549 7f1e2f5a6700 -1 AuthRegistry(0x7f1e280c82e8) no keyring found at /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx

2024-04-02 17:21:10.549 7f1e2f5a6700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

2024-04-02 17:21:10.549 7f1e2f5a6700 -1 AuthRegistry(0x7f1e2f5a4e78) no keyring found at /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx

[errno 2] error connecting to the cluster

On every node of the Ceph cluster where the ceph command needs to run, grant the cephadm user read access to /etc/ceph/ceph.client.admin.keyring as root (here through sudo and an ACL entry):

[cephadm@admin ceph-cluster]$ for i in {21..23}; do ssh cephadm@192.168.120.$i "sudo setfacl -m u:cephadm:r /etc/ceph/ceph.client.admin.keyring"; done

Check the file permissions:

[cephadm@admin ceph-cluster]$ for i in {21..23}; do ssh cephadm@192.168.120.$i "echo -----$i-----;ls -l /etc/ceph/ceph.cl*"; done

-----21-----

-rw-r-----+ 1 root root 151 Apr 2 17:07 /etc/ceph/ceph.client.admin.keyring

-----22-----

-rw-r-----+ 1 root root 151 Apr 2 17:07 /etc/ceph/ceph.client.admin.keyring

-----23-----

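-rw-r-----+ 1 root root 151 Apr 2 17:07 /etc/ceph/ceph.client.admin.keyring

The trailing + means an ACL is attached to the file, and the group bits (r) now display the ACL mask rather than the owning group's permissions. Check the file's ACL entries: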

[cephadm@admin ceph-cluster]$ for i in {21..23}; do ssh cephadm@192.168.120.$i "getfacl /etc/ceph/ceph.client.admin.keyring"; done

getfacl: Removing leading '/' from absolute path names

# file: etc/ceph/ceph.client.admin.keyring

# owner: root

# group: root

user::rw-

user:cephadm:r--

group::---

mask::r--

other::---

getfacl: Removing leading '/' from absolute path names

# file: etc/ceph/ceph.client.admin.keyring

# owner: root

# group: root

user::rw-

user:cephadm:r--

group::---

mask::r--

other::---

getfacl: Removing leading '/' from absolute path names

# file: etc/ceph/ceph.client.admin.keyring

# owner: root

# group: root

user::rw-

user:cephadm:r--

group::---

mask::r--

other::---

The monitor nodes can now query cluster data on their own. For example, run the following on mon01:

[root@stor21 ceph]# su - cephadm

Last login: Tue Apr 2 17:21:08 CST 2024 on pts/0

[cephadm@mon01 ~]$ ceph -s
  cluster:
    id:     76cc0714-0bd7-43f7-b7c3-ec8cae2819e7
    health: HEALTH_WARN
            mons are allowing insecure global_id reclaim

  services:
    mon: 3 daemons, quorum mon01,mon02,mon03 (age 56m)
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:

Result: the cluster is not yet healthy. Under services there are three mon daemons, and all three are in the quorum; no other services are running yet.

The reason for the unhealthy status can be confirmed with the ceph health command:

[root@stor21 ~]# ceph health

HEALTH_WARN mons are allowing insecure global_id reclaim; clock skew detected on mon.mon02, mon.mon03

[root@stor21 ~]# ceph health detail

HEALTH_WARN mons are allowing insecure global_id reclaim; clock skew detected on mon.mon02, mon.mon03

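AUTH_INSECURE_GLOBAL_ID_RECLAIM_ALLOWED mons are allowing insecure global_id reclaim
    mon.mon01 has auth_allow_insecure_global_id_reclaim set to true
    mon.mon02 has auth_allow_insecure_global_id_reclaim set to true
    mon.mon03 has auth_allow_insecure_global_id_reclaim set to true

Result: every mon reports auth_allow_insecure_global_id_reclaim set to true. Once all clients run a patched release (as in this lab, where everything is Nautilus 14.2.22), disable the option for all monitors; setting it on the mon section of the centralized configuration applies it to every mon: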

ceph config set mon auth_allow_insecure_global_id_reclaim false

[root@stor21 ~]# ceph -s
  cluster:
    id:     76cc0714-0bd7-43f7-b7c3-ec8cae2819e7
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum mon01,mon02,mon03 (age 92m)
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:

Result: the cluster health problem has been resolved.
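After a change like this, the health status may take a few seconds to update, so a scripted deployment can wait for HEALTH_OK before moving on. A minimal polling sketch, assuming the ceph CLI and admin keyring are usable by the current user. The function wait_for_health_ok, its defaults (30 checks, 2 seconds apart), and the CEPH_CMD override (used only to exercise the loop without a cluster) are hypothetical choices, not Ceph features.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll `ceph health` until it reports HEALTH_OK.
# Args: number of checks (default 30), seconds between checks (default 2).
# CEPH_CMD is a hypothetical override so the loop can be tested without a cluster.
wait_for_health_ok() {
  local tries="${1:-30}" interval="${2:-2}" status="" n
  for ((n = 1; n <= tries; n++)); do
    # The first word of `ceph health` is HEALTH_OK, HEALTH_WARN or HEALTH_ERR.
    status=$(${CEPH_CMD:-ceph} health 2>/dev/null | awk '{print $1; exit}')
    if [[ $status == HEALTH_OK ]]; then
      echo "HEALTH_OK after $n check(s)"
      return 0
    fi
    if ((n < tries)); then
      sleep "$interval"
    fi
  done
  echo "still ${status:-unknown} after $tries check(s)" >&2
  return 1
}
```

The loop only looks at the overall status word; when it times out, ceph health detail remains the place to see which check is failing.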

1.4.2 Mgr Environment

Requirements

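ceph-mgr works in an event-driven mode: it waits for an event, handles it and returns a result when one arrives, then goes back to waiting. Ceph MGR has been one of the main features promoted since Ceph 12.2 (Luminous). It is the component responsible for Ceph cluster management, and its main job is to expose cluster metrics for external use. Following the official architecture, mgr should in principle run on two nodes (one active and one standby). In our lab environment a single mgr is actually enough. To save resources, mon01 and mon02 will double as MGR nodes; to practice expanding nodes later, we install only one mgr for now and add the second one afterwards.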

Cluster status before any MGR node is deployed:

[cephadm@admin ceph-cluster]$ ssh mon01 ceph -s
  cluster:
    id:     76cc0714-0bd7-43f7-b7c3-ec8cae2819e7
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum mon01,mon02,mon03 (age 9m)
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:

Configuring the mgr service

Configure the Manager node and start the ceph-mgr daemon:

[cephadm@admin ceph-cluster]$ ceph-deploy mgr create mon01

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mgr create mon01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('mon01', 'mon01')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x10fccf8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mgr at 0x1096410>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts mon01:mon01

[mon01][DEBUG ] connection detected need for sudo

[mon01][DEBUG ] connected to host: mon01

[mon01][DEBUG ] detect platform information from remote host

[mon01][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.4.1708 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to mon01

[mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[mon01][WARNIN] mgr keyring does not exist yet, creating one

[mon01][DEBUG ] create a keyring file

[mon01][DEBUG ] create path recursively if it doesn't exist

[mon01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.mon01 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-mon01/keyring

[mon01][INFO ] Running command: sudo systemctl enable ceph-mgr@mon01

[mon01][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@mon01.service to /usr/lib/systemd/system/ceph-mgr@.service.

[mon01][INFO ] Running command: sudo systemctl start ceph-mgr@mon01

[mon01][INFO ] Running command: sudo systemctl enable ceph.target

On the designated mgr node, check the daemon process:

[cephadm@admin ceph-cluster]$ ssh mon01 ps aux | grep -v grep | grep ceph-mgr

ceph 8649 6.2 6.1 1035684 125616 ? Ssl 19:49 0:04 /usr/bin/ceph-mgr -f --cluster ceph --id mon01 --setuser ceph --setgroup ceph

Result: an mgr daemon has been deployed on mon01.

Check the running state of the cluster services:
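The ps aux | grep pipeline above can be wrapped in a small helper that prints the --id of every running daemon of a given type. A sketch, assuming daemons are started as /usr/bin/ceph-TYPE ... --id NAME, as in the output above; the function name ceph_daemon_ids is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ps aux` output on stdin and print the --id of each
# process whose command is /usr/bin/ceph-<type> (e.g. mon, mgr, osd).
ceph_daemon_ids() {
  local type="$1"
  awk -v bin="/usr/bin/ceph-$type" '{
    for (i = 1; i <= NF; i++) {
      if ($i == bin) {
        # Look for "--id <name>" among the arguments after the binary path.
        for (j = i + 1; j < NF; j++) {
          if ($j == "--id") { print $(j + 1); break }
        }
        break
      }
    }
  }'
}
```

Usage from the admin host: ssh mon01 ps aux | ceph_daemon_ids mgr. The same helper with the argument mon can confirm the ceph-mon process on each node. Matching the full binary path means the grep process itself is never reported.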

[cephadm@admin ceph-cluster]$ ssh mon01 ceph -s
  cluster:
    id:     76cc0714-0bd7-43f7-b7c3-ec8cae2819e7
    health: HEALTH_WARN
            OSD count 0 < osd_pool_default_size 3

  services:
    mon: 3 daemons, quorum mon01,mon02,mon03 (age 13m)
    mgr: mon01(active, since 2m)
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:

Result: services now include an mgr daemon on mon01, in the active state. The new HEALTH_WARN is expected at this stage: no OSDs have been added yet, so the OSD count (0) is below the default pool size osd_pool_default_size (3).
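To pick out the active manager in a script, one option is to parse the mgr: line of ceph -s. A sketch that relies on the plain-text layout shown above (NAME(active, ...)), which is human-oriented output rather than a stable interface; the function name active_mgr is hypothetical. It prints nothing when the line reads "no daemons active".

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph -s` text on stdin and print the name of the
# active mgr from a line such as "mgr: mon01(active, since 2m)".
active_mgr() {
  awk '$1 == "mgr:" && match($0, /[^ ,]+[(]active/) {
    name = substr($0, RSTART, RLENGTH)
    sub(/[(]active$/, "", name)
    print name
    exit
  }'
}
```

Usage: ssh mon01 ceph -s | active_mgr. Once a standby is added later, the line also lists standbys, but only the name marked active is printed.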

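Checking status from the admin host

Checking status remotely over ssh is inconvenient. To query the cluster directly from the admin host, run the following on admin: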

sudo yum install -y ceph-common

ceph-deploy admin admin