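Note: this post is for learning purposes only and must not be used for anything illegal. If it infringes on your rights, please contact the author to have it removed.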

Author: zhu6201976

1. Catching all network-related exceptions of a Request

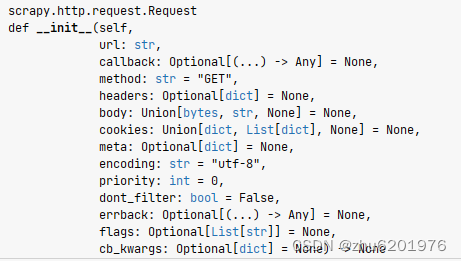

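In a spider class, when we construct a Request or FormRequest object, we can pass an errback callback. This callback catches all network-related exceptions raised for that Request, as the figure above shows.

Documentation: Requests and Responses, Scrapy 2.11.1 documentation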

- errback (collections.abc.Callable): a function that will be called if any exception was raised while processing the request. This includes pages that failed with 404 HTTP errors and such. It receives a Failure as first parameter. For more information, see Using errbacks to catch exceptions in request processing below.

Changed in version 2.0: The callback parameter is no longer required when the errback parameter is specified.

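Explanation: the errback parameter takes a function that is called when an exception is raised while processing the request, for example a 404 Not Found response. The first argument passed to the errback is a Failure object, which carries the failed Request and the reason for the failure.

Source example: Requests and Responses, Scrapy 2.11.1 documentation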

    import scrapy

    from scrapy.spidermiddlewares.httperror import HttpError
    from twisted.internet.error import DNSLookupError
    from twisted.internet.error import TimeoutError, TCPTimedOutError


    class ErrbackSpider(scrapy.Spider):
        name = "errback_example"
        start_urls = [
            "http://www.httpbin.org/",  # HTTP 200 expected
            "http://www.httpbin.org/status/404",  # Not found error
            "http://www.httpbin.org/status/500",  # server issue
            "http://www.httpbin.org:12345/",  # non-responding host, timeout expected
            "https://example.invalid/",  # DNS error expected
        ]

        def start_requests(self):
            for u in self.start_urls:
                yield scrapy.Request(
                    u,
                    callback=self.parse_httpbin,
                    errback=self.errback_httpbin,
                    dont_filter=True,
                )

        def parse_httpbin(self, response):
            self.logger.info("Got successful response from {}".format(response.url))
            # do something useful here...

        def errback_httpbin(self, failure):
            # log all failures
            self.logger.error(repr(failure))

            # in case you want to do something special for some errors,
            # you may need the failure's type:

            if failure.check(HttpError):
                # these exceptions come from HttpError spider middleware
                # you can get the non-200 response
                response = failure.value.response
                self.logger.error("HttpError on %s", response.url)

            elif failure.check(DNSLookupError):
                # this is the original request
                request = failure.request
                self.logger.error("DNSLookupError on %s", request.url)

            elif failure.check(TimeoutError, TCPTimedOutError):
                request = failure.request
                self.logger.error("TimeoutError on %s", request.url)

As the example shows, errback catches network-related exceptions such as HttpError, DNSLookupError, and TCPTimedOutError. We can therefore pass this parameter whenever we construct a Request to catch all network-related exceptions in the spider.

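2. Catching all parsing exceptions

Passing only errback when constructing a Request catches the network-related exceptions of that request; it does not catch exceptions raised while parsing the response. The parsing code therefore needs its own try/except. In the `parse` method below, `1 / 0` raises a ZeroDivisionError. The undefined name `aaa` on the next line would raise a NameError, but it is never reached because the first statement already raises.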
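To check which exception each of the two demo statements (`1 / 0` and a bare undefined name `aaa`) raises, they can be run in isolation outside Scrapy. This is a minimal sketch; `exception_name`, `divide_by_zero`, and `use_undefined_name` are illustrative helper names, not part of the original spider.

```python
def exception_name(func):
    """Call func and return the class name of the exception it raises, if any."""
    try:
        func()
    except Exception as e:
        return type(e).__name__
    return None


def divide_by_zero():
    return 1 / 0  # integer division by zero


def use_undefined_name():
    return aaa  # `aaa` is intentionally never defined


names = [exception_name(divide_by_zero), exception_name(use_undefined_name)]
print(names)  # ['ZeroDivisionError', 'NameError']
```

In the spider callback, both statements sit in a single try block, so only the first exception is ever logged.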

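    import sys

    def parse(self, response, **kwargs):
        # Name of the current method, used as a prefix in the log message
        method_name = sys._getframe().f_code.co_name
        # 2. Parsing exceptions must be caught separately
        try:
            1 / 0  # raises ZeroDivisionError
            aaa    # undefined name; never reached
        except Exception as e:
            self.logger.error(f'{method_name} Exception {e}')

3. Complete code and run result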

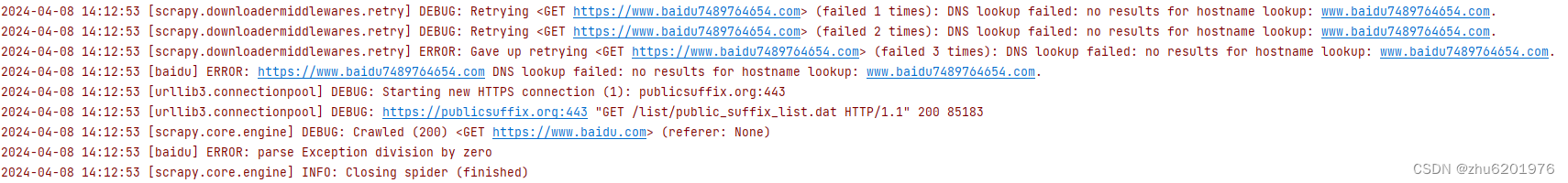

    import sys

    import scrapy


    class BaiduSpider(scrapy.Spider):
        name = "baidu"
        # allowed_domains = ["baidu.com"]
        # start_urls = []

        def start_requests(self):
            # 1. Passing errback when building the request catches request errors
            yield scrapy.Request('https://www.baidu7489764654.com', callback=self.parse, errback=self.parse_errback)
            yield scrapy.Request('https://www.baidu.com', callback=self.parse, errback=self.parse_errback)

        def parse(self, response, **kwargs):
            method_name = sys._getframe().f_code.co_name
            # 2. Parsing exceptions must be caught separately
            try:
                1 / 0  # raises ZeroDivisionError
                aaa    # undefined name; never reached
            except Exception as e:
                self.logger.error(f'{method_name} Exception {e}')

        def parse_errback(self, failure):
            # Handle request errors
            self.logger.error(f'{failure.request.url} {failure.value}')
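If several callbacks need the same protection, the try/except from `parse` could be factored into a decorator. The following is a minimal sketch under some assumptions: `catch_parse_errors`, `FakeResponse`, and `DemoSpider` are hypothetical names that do not come from Scrapy or from the original post, and the stand-in classes replace the real spider and response so the snippet runs without Scrapy installed. It logs with `logger.exception`, which records the traceback as well as the message. Coroutine callbacks are not covered.

```python
import functools
import inspect
import logging


def catch_parse_errors(callback):
    """Wrap a spider callback so its exceptions are logged instead of propagating.

    Hypothetical helper for illustration; not a Scrapy API.
    """
    if inspect.isgeneratorfunction(callback):
        @functools.wraps(callback)
        def generator_wrapper(self, response, **kwargs):
            try:
                # A generator body only runs while it is iterated, so the
                # iteration itself has to happen inside the try block.
                yield from callback(self, response, **kwargs)
            except Exception:
                self.logger.exception("%s failed on %s", callback.__name__, response.url)
        return generator_wrapper

    @functools.wraps(callback)
    def wrapper(self, response, **kwargs):
        try:
            return callback(self, response, **kwargs)
        except Exception:
            self.logger.exception("%s failed on %s", callback.__name__, response.url)
            return None
    return wrapper


class FakeResponse:
    """Stand-in for a Scrapy response; only the url attribute is needed here."""
    def __init__(self, url):
        self.url = url


class DemoSpider:
    """Stand-in for a scrapy.Spider subclass, so the sketch runs on its own."""
    logger = logging.getLogger("demo")

    @catch_parse_errors
    def parse(self, response, **kwargs):
        yield {"url": response.url}
        1 / 0  # raises ZeroDivisionError after the first item was yielded


items = list(DemoSpider().parse(FakeResponse("https://www.baidu.com")))
```

Items yielded before the failure are kept, and the error is logged with its traceback. In a real spider the decorator would be applied to the callback methods of the `scrapy.Spider` subclass, while network errors would still go through errback.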

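4. Summary

With these two steps, in principle every exception in the spider class is caught. Both request-related network exceptions and code exceptions raised during parsing are caught and handled, which makes the spider more robust.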
