文章目录

- 学习过程

- 赛题理解

- 学习目标

- 赛题数据

- 数据标签

- 评测指标

- 解题思路

- BERT

- 代码

学习过程

20年当时自身功底是比较零基础(会写些基础的Python[三个科学计算包]数据分析),一开始看这块其实挺懵的,不会就去问百度或其他人,当时遇见困难挺害怕的,但22后面开始力扣题【目前已刷好几轮,博客没写力扣文章之前,力扣排名靠前已刷有5遍左右,排名靠后刷3次左右,代码功底也在一步一步提升】不断地刷、遇见代码不懂的代码,也开始去打印print去理解,到后面问其他人的问题越来越少,个人自主学习、自主解决能力也得到了进一步增强。

赛题理解

- 赛题名称:零基础入门NLP之新闻文本分类

- 赛题目标:通过这道赛题可以引导大家走入自然语言处理的世界,带大家接触NLP的预处理、模型构建和模型训练等知识点。

- 赛题任务:赛题以自然语言处理为背景,要求选手对新闻文本进行分类,这是一个典型的字符识别问题。

学习目标

- 理解赛题背景与赛题数据

- 完成赛题报名和数据下载,理解赛题的解题思路

赛题数据

赛题以匿名处理后的新闻数据为赛题数据,数据集报名后可见并可下载。赛题数据为新闻文本,并按照字符级别进行匿名处理。整合划分出14个候选分类类别:财经、彩票、房产、股票、家居、教育、科技、社会、时尚、时政、体育、星座、游戏、娱乐的文本数据。

赛题数据由以下几个部分构成:训练集20w条样本,测试集A包括5w条样本,测试集B包括5w条样本。为了预防选手人工标注测试集的情况,我们将比赛数据的文本按照字符级别进行了匿名处理。

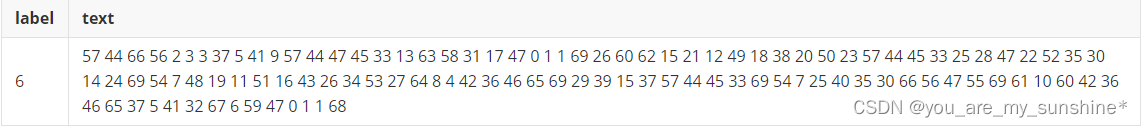

数据标签

处理后的赛题训练数据如下:

在数据集中标签的对应的关系如下:{‘科技’: 0, ‘股票’: 1, ‘体育’: 2, ‘娱乐’: 3, ‘时政’: 4, ‘社会’: 5, ‘教育’: 6, ‘财经’: 7, ‘家居’: 8, ‘游戏’: 9, ‘房产’: 10, ‘时尚’: 11, ‘彩票’: 12, ‘星座’: 13}

评测指标

评价标准为类别f1_score的均值,选手提交结果与实际测试集的类别进行对比,结果越大越好。

解题思路

赛题思路分析:赛题本质是一个文本分类问题,需要根据每句的字符进行分类。但赛题给出的数据是匿名化的,不能直接使用中文分词等操作,这个是赛题的难点。

因此本次赛题的难点是需要对匿名字符进行建模,进而完成文本分类的过程。由于文本数据是一种典型的非结构化数据,因此可能涉及到特征提取和分类模型两个部分。为了减低参赛难度,我们提供了一些解题思路供大家参考:

思路1:TF-IDF + 机器学习分类器

直接使用TF-IDF对文本提取特征,并使用分类器进行分类。在分类器的选择上,可以使用SVM、LR、或者XGBoost。

思路2:FastText

FastText是入门款的词向量,利用Facebook提供的FastText工具,可以快速构建出分类器。

思路3:WordVec + 深度学习分类器

WordVec是进阶款的词向量,并通过构建深度学习分类完成分类。深度学习分类的网络结构可以选择TextCNN、TextRNN或者BiLSTM。

思路4:Bert词向量

Bert是高配款的词向量,具有强大的建模学习能力。

这里使用思路1(TF-IDF + 机器学习分类器) 及 思路4(Bert词向量)

BERT

微调将最后一层的第一个token即[CLS]的隐藏向量作为句子的表示,然后输入到softmax层进行分类。

预训练BERT以及相关代码下载地址:链接: https://pan.baidu.com/s/1zd6wN7elGgp1NyuzYKpvGQ 提取码: tmp5

在原基础上,更改了不少参数,目前调试后该参数效果在原基础上是最佳的

代码

print("程序开始")

import logging

import randomimport numpy as np

import torchlogging.basicConfig(level=logging.INFO, format='%(asctime)-15s %(levelname)s: %(message)s')# set seed

seed = 666

random.seed(seed)

np.random.seed(seed)

torch.cuda.manual_seed(seed)

torch.manual_seed(seed)# set cuda

gpu = 0

use_cuda = gpu >= 0 and torch.cuda.is_available()

if use_cuda:torch.cuda.set_device(gpu)device = torch.device("cuda", gpu)

else:device = torch.device("cpu")

logging.info("Use cuda: %s, gpu id: %d.", use_cuda, gpu)print("开始split data to 20 fold")

# split data to 20 fold

fold_num = 20

data_file = 'train_set.csv'

import pandas as pddef all_data2fold(fold_num, num=20000):fold_data = []f = pd.read_csv(data_file, sep='\t', encoding='UTF-8')texts = f['text'].tolist()[:num]labels = f['label'].tolist()[:num]total = len(labels)index = list(range(total))np.random.shuffle(index)all_texts = []all_labels = []for i in index:all_texts.append(texts[i])all_labels.append(labels[i])label2id = {}for i in range(total):label = str(all_labels[i])if label not in label2id:label2id[label] = [i]else:label2id[label].append(i)all_index = [[] for _ in range(fold_num)]for label, data in label2id.items():# print(label, len(data))batch_size = int(len(data) / fold_num)other = len(data) - batch_size * fold_numfor i in range(fold_num):cur_batch_size = batch_size + 1 if i < other else batch_size# print(cur_batch_size)batch_data = [data[i * batch_size + b] for b in range(cur_batch_size)]all_index[i].extend(batch_data)batch_size = int(total / fold_num)other_texts = []other_labels = []other_num = 0start = 0for fold in range(fold_num):num = len(all_index[fold])texts = [all_texts[i] for i in all_index[fold]]labels = [all_labels[i] for i in all_index[fold]]if num > batch_size:fold_texts = texts[:batch_size]other_texts.extend(texts[batch_size:])fold_labels = labels[:batch_size]other_labels.extend(labels[batch_size:])other_num += num - batch_sizeelif num < batch_size:end = start + batch_size - numfold_texts = texts + other_texts[start: end]fold_labels = labels + other_labels[start: end]start = endelse:fold_texts = textsfold_labels = labelsassert batch_size == len(fold_labels)# shuffleindex = list(range(batch_size))np.random.shuffle(index)shuffle_fold_texts = []shuffle_fold_labels = []for i in index:shuffle_fold_texts.append(fold_texts[i])shuffle_fold_labels.append(fold_labels[i])data = {'label': shuffle_fold_labels, 'text': shuffle_fold_texts}fold_data.append(data)logging.info("Fold lens %s", str([len(data['label']) for data in fold_data]))return fold_datafold_data = all_data2fold(20)

print("结束split data to 20 fold")print("开始build train, dev, test data")

# build train, dev, test data

fold_id = 19# dev

dev_data = fold_data[fold_id]# train

train_texts = []

train_labels = []

for i in range(0, fold_id):data = fold_data[i]train_texts.extend(data['text'])train_labels.extend(data['label'])train_data = {'label': train_labels, 'text': train_texts}# test

test_data_file = 'test_a.csv'

f = pd.read_csv(test_data_file, sep='\t', encoding='UTF-8')

texts = f['text'].tolist()

test_data = {'label': [0] * len(texts), 'text': texts}

print("结束build train, dev, test data")print("开始build vocab")

# build vocab

from collections import Counter

from transformers import BasicTokenizerbasic_tokenizer = BasicTokenizer()class Vocab():def __init__(self, train_data):self.min_count = 0self.pad = 0self.unk = 1self._id2word = ['[PAD]', '[UNK]']self._id2extword = ['[PAD]', '[UNK]']self._id2label = []self.target_names = []self.build_vocab(train_data)reverse = lambda x: dict(zip(x, range(len(x))))self._word2id = reverse(self._id2word)self._label2id = reverse(self._id2label)logging.info("Build vocab: words %d, labels %d." % (self.word_size, self.label_size))def build_vocab(self, data):self.word_counter = Counter()for text in data['text']:words = text.split()for word in words:self.word_counter[word] += 1for word, count in self.word_counter.most_common():if count >= self.min_count:self._id2word.append(word)label2name = {0: '科技', 1: '股票', 2: '体育', 3: '娱乐', 4: '时政', 5: '社会', 6: '教育', 7: '财经',8: '家居', 9: '游戏', 10: '房产', 11: '时尚', 12: '彩票', 13: '星座'}self.label_counter = Counter(data['label'])for label in range(len(self.label_counter)):count = self.label_counter[label]self._id2label.append(label)self.target_names.append(label2name[label])def load_pretrained_embs(self, embfile):with open(embfile, encoding='utf-8') as f:lines = f.readlines()items = lines[0].split()word_count, embedding_dim = int(items[0]), int(items[1])index = len(self._id2extword)embeddings = np.zeros((word_count + index, embedding_dim))for line in lines[1:]:values = line.split()self._id2extword.append(values[0])vector = np.array(values[1:], dtype='float64')embeddings[self.unk] += vectorembeddings[index] = vectorindex += 1embeddings[self.unk] = embeddings[self.unk] / word_countembeddings = embeddings / np.std(embeddings)reverse = lambda x: dict(zip(x, range(len(x))))self._extword2id = reverse(self._id2extword)assert len(set(self._id2extword)) == len(self._id2extword)return embeddingsdef word2id(self, xs):if isinstance(xs, list):return [self._word2id.get(x, self.unk) for x in xs]return self._word2id.get(xs, self.unk)def extword2id(self, xs):if isinstance(xs, list):return [self._extword2id.get(x, self.unk) for x in xs]return self._extword2id.get(xs, self.unk)def label2id(self, xs):if isinstance(xs, list):return [self._label2id.get(x, self.unk) for x in xs]return self._label2id.get(xs, self.unk)@propertydef word_size(self):return len(self._id2word)@propertydef extword_size(self):return len(self._id2extword)@propertydef label_size(self):return len(self._id2label)vocab = Vocab(train_data)

print("结束build vocab")print("开始build module")

# build module

import torch.nn as nn

import torch.nn.functional as Fclass Attention(nn.Module):def __init__(self, hidden_size):super(Attention, self).__init__()self.weight = nn.Parameter(torch.Tensor(hidden_size, hidden_size))self.weight.data.normal_(mean=0.0, std=0.05)self.bias = nn.Parameter(torch.Tensor(hidden_size))b = np.zeros(hidden_size, dtype=np.float32)self.bias.data.copy_(torch.from_numpy(b))self.query = nn.Parameter(torch.Tensor(hidden_size))self.query.data.normal_(mean=0.0, std=0.05)def forward(self, batch_hidden, batch_masks):# batch_hidden: b x len x hidden_size (2 * hidden_size of lstm)# batch_masks: b x len# linearkey = torch.matmul(batch_hidden, self.weight) + self.bias # b x len x hidden# compute attentionoutputs = torch.matmul(key, self.query) # b x lenmasked_outputs = outputs.masked_fill((1 - batch_masks).bool(), float(-1e32))attn_scores = F.softmax(masked_outputs, dim=1) # b x len# 对于全零向量,-1e32的结果为 1/len, -inf为nan, 额外补0masked_attn_scores = attn_scores.masked_fill((1 - batch_masks).bool(), 0.0)# sum weighted sourcesbatch_outputs = torch.bmm(masked_attn_scores.unsqueeze(1), key).squeeze(1) # b x hiddenreturn batch_outputs, attn_scores# build word encoder

csv_path = './bert/bert-mini/'

bert_path = '/students/julyedu_554294/NLP/news/bert/bert-mini'

dropout = 0.18from transformers import BertModelclass WordBertEncoder(nn.Module):def __init__(self):super(WordBertEncoder, self).__init__()self.dropout = nn.Dropout(dropout)self.tokenizer = WhitespaceTokenizer()self.bert = BertModel.from_pretrained(bert_path)self.pooled = Falselogging.info('Build Bert encoder with pooled {}.'.format(self.pooled))def encode(self, tokens):tokens = self.tokenizer.tokenize(tokens)return tokensdef get_bert_parameters(self):no_decay = ['bias', 'LayerNorm.weight']optimizer_parameters = [{'params': [p for n, p in self.bert.named_parameters() if not any(nd in n for nd in no_decay)],'weight_decay': 0.01},{'params': [p for n, p in self.bert.named_parameters() if any(nd in n for nd in no_decay)],'weight_decay': 0.0}]return optimizer_parametersdef forward(self, input_ids, token_type_ids):# input_ids: sen_num x bert_len# token_type_ids: sen_num x bert_len# sen_num x bert_len x 256, sen_num x 256sequence_output, pooled_output = self.bert(input_ids=input_ids, token_type_ids=token_type_ids)#print('sequence_output:', sequence_output)#print('pooled_output:', pooled_output)if self.pooled:reps = pooled_outputelse:reps = sequence_output[:, 0, :] # sen_num x 256if self.training:reps = self.dropout(reps)return repsclass WhitespaceTokenizer():"""WhitespaceTokenizer with vocab."""def __init__(self):vocab_file = csv_path + 'vocab.txt'self._token2id = self.load_vocab(vocab_file)self._id2token = {v: k for k, v in self._token2id.items()}self.max_len = 256self.unk = 1logging.info("Build Bert vocab with size %d." % (self.vocab_size))def load_vocab(self, vocab_file):f = open(vocab_file, 'r')lines = f.readlines()lines = list(map(lambda x: x.strip(), lines))vocab = dict(zip(lines, range(len(lines))))return vocabdef tokenize(self, tokens):assert len(tokens) <= self.max_len - 2tokens = ["[CLS]"] + tokens + ["[SEP]"]output_tokens = self.token2id(tokens)return output_tokensdef token2id(self, xs):if isinstance(xs, list):return [self._token2id.get(x, self.unk) for x in xs]return self._token2id.get(xs, self.unk)@propertydef vocab_size(self):return len(self._id2token)# build sent encoder

sent_hidden_size = 256

sent_num_layers = 2class SentEncoder(nn.Module):def __init__(self, sent_rep_size):super(SentEncoder, self).__init__()self.dropout = nn.Dropout(dropout)self.sent_lstm = nn.LSTM(input_size=sent_rep_size,hidden_size=sent_hidden_size,num_layers=sent_num_layers,batch_first=True,bidirectional=True)def forward(self, sent_reps, sent_masks):# sent_reps: b x doc_len x sent_rep_size# sent_masks: b x doc_lensent_hiddens, _ = self.sent_lstm(sent_reps) # b x doc_len x hidden*2sent_hiddens = sent_hiddens * sent_masks.unsqueeze(2)if self.training:sent_hiddens = self.dropout(sent_hiddens)return sent_hiddens

print("结束build module")print("开始build model")

# build model

class Model(nn.Module):def __init__(self, vocab):super(Model, self).__init__()self.sent_rep_size = 256self.doc_rep_size = sent_hidden_size * 2self.all_parameters = {}parameters = []self.word_encoder = WordBertEncoder()bert_parameters = self.word_encoder.get_bert_parameters()self.sent_encoder = SentEncoder(self.sent_rep_size)self.sent_attention = Attention(self.doc_rep_size)parameters.extend(list(filter(lambda p: p.requires_grad, self.sent_encoder.parameters())))parameters.extend(list(filter(lambda p: p.requires_grad, self.sent_attention.parameters())))self.out = nn.Linear(self.doc_rep_size, vocab.label_size, bias=True)parameters.extend(list(filter(lambda p: p.requires_grad, self.out.parameters())))if use_cuda:self.to(device)if len(parameters) > 0:self.all_parameters["basic_parameters"] = parametersself.all_parameters["bert_parameters"] = bert_parameterslogging.info('Build model with bert word encoder, lstm sent encoder.')para_num = sum([np.prod(list(p.size())) for p in self.parameters()])logging.info('Model param num: %.2f M.' % (para_num / 1e6))def forward(self, batch_inputs):# batch_inputs(batch_inputs1, batch_inputs2): b x doc_len x sent_len# batch_masks : b x doc_len x sent_lenbatch_inputs1, batch_inputs2, batch_masks = batch_inputsbatch_size, max_doc_len, max_sent_len = batch_inputs1.shape[0], batch_inputs1.shape[1], batch_inputs1.shape[2]batch_inputs1 = batch_inputs1.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_lenbatch_inputs2 = batch_inputs2.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_lenbatch_masks = batch_masks.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_lensent_reps = self.word_encoder(batch_inputs1, batch_inputs2) # sen_num x sent_rep_sizesent_reps = sent_reps.view(batch_size, max_doc_len, self.sent_rep_size) # b x doc_len x sent_rep_sizebatch_masks = batch_masks.view(batch_size, max_doc_len, max_sent_len) # b x doc_len x max_sent_lensent_masks = batch_masks.bool().any(2).float() # b x doc_lensent_hiddens = self.sent_encoder(sent_reps, sent_masks) # b x doc_len x doc_rep_sizedoc_reps, atten_scores = self.sent_attention(sent_hiddens, sent_masks) # b x doc_rep_sizebatch_outputs = self.out(doc_reps) # b x num_labelsreturn batch_outputsmodel = Model(vocab)

print("结束build model")print("开始build optimizer")

# build optimizer

learning_rate = 2e-4

bert_lr = 2.5e-5

decay = .95

decay_step = 1000

from transformers import AdamW, get_linear_schedule_with_warmupclass Optimizer:def __init__(self, model_parameters, steps):self.all_params = []self.optims = []self.schedulers = []for name, parameters in model_parameters.items():if name.startswith("basic"):optim = torch.optim.Adam(parameters, lr=learning_rate)self.optims.append(optim)l = lambda step: decay ** (step // decay_step)scheduler = torch.optim.lr_scheduler.LambdaLR(optim, lr_lambda=l)self.schedulers.append(scheduler)self.all_params.extend(parameters)elif name.startswith("bert"):optim_bert = AdamW(parameters, bert_lr, eps=1e-8)self.optims.append(optim_bert)scheduler_bert = get_linear_schedule_with_warmup(optim_bert, 0, steps)self.schedulers.append(scheduler_bert)for group in parameters:for p in group['params']:self.all_params.append(p)else:Exception("no nameed parameters.")self.num = len(self.optims)def step(self):for optim, scheduler in zip(self.optims, self.schedulers):optim.step()scheduler.step()optim.zero_grad()def zero_grad(self):for optim in self.optims:optim.zero_grad()def get_lr(self):lrs = tuple(map(lambda x: x.get_lr()[-1], self.schedulers))lr = ' %.5f' * self.numres = lr % lrsreturn res

print("结束build optimizer")print("开始build dataset")

def sentence_split(text, vocab, max_sent_len=256, max_segment=16):words = text.strip().split()document_len = len(words)index = list(range(0, document_len, max_sent_len))index.append(document_len)segments = []for i in range(len(index) - 1):segment = words[index[i]: index[i + 1]]assert len(segment) > 0segment = [word if word in vocab._id2word else '<UNK>' for word in segment]segments.append([len(segment), segment])assert len(segments) > 0if len(segments) > max_segment:segment_ = int(max_segment / 2)return segments[:segment_] + segments[-segment_:]else:return segmentsdef get_examples(data, word_encoder, vocab, max_sent_len=256, max_segment=8):label2id = vocab.label2idexamples = []for text, label in zip(data['text'], data['label']):# labelid = label2id(label)# wordssents_words = sentence_split(text, vocab, max_sent_len-2, max_segment)doc = []for sent_len, sent_words in sents_words:token_ids = word_encoder.encode(sent_words)sent_len = len(token_ids)token_type_ids = [0] * sent_lendoc.append([sent_len, token_ids, token_type_ids])examples.append([id, len(doc), doc])logging.info('Total %d docs.' % len(examples))return examples

print("结束build dataset")print("开始build loader")

# build loaderdef batch_slice(data, batch_size):batch_num = int(np.ceil(len(data) / float(batch_size)))for i in range(batch_num):cur_batch_size = batch_size if i < batch_num - 1 else len(data) - batch_size * idocs = [data[i * batch_size + b] for b in range(cur_batch_size)]yield docsdef data_iter(data, batch_size, shuffle=True, noise=1.0):"""randomly permute data, then sort by source length, and partition into batchesensure that the length of sentences in each batch"""batched_data = []if shuffle:np.random.shuffle(data)lengths = [example[1] for example in data]noisy_lengths = [- (l + np.random.uniform(- noise, noise)) for l in lengths]sorted_indices = np.argsort(noisy_lengths).tolist()sorted_data = [data[i] for i in sorted_indices]else:sorted_data =databatched_data.extend(list(batch_slice(sorted_data, batch_size)))if shuffle:np.random.shuffle(batched_data)for batch in batched_data:yield batch

print("结束build loader")print("开始some function")

# some function

from sklearn.metrics import f1_score, precision_score, recall_scoredef get_score(y_ture, y_pred):y_ture = np.array(y_ture)y_pred = np.array(y_pred)f1 = f1_score(y_ture, y_pred, average='macro') * 100p = precision_score(y_ture, y_pred, average='macro') * 100r = recall_score(y_ture, y_pred, average='macro') * 100return str((reformat(p, 2), reformat(r, 2), reformat(f1, 2))), reformat(f1, 2)def reformat(num, n):return float(format(num, '0.' + str(n) + 'f'))

print("结束some function")print("开始build trainer")

# build trainerimport time

from sklearn.metrics import classification_reportclip = 5.0

epochs = 20

early_stops = 6

log_interval = 150test_batch_size = 64

train_batch_size = 64save_model = './bert_submission_0306_12_change_canshu_taidaxiugai_kuoda2.bin'

save_test = './bert_submission_0306_12_change_canshu_taidaxiugai_kuoda2.csv'class Trainer():def __init__(self, model, vocab):self.model = modelself.report = Trueself.train_data = get_examples(train_data, model.word_encoder, vocab)self.batch_num = int(np.ceil(len(self.train_data) / float(train_batch_size)))self.dev_data = get_examples(dev_data, model.word_encoder, vocab)self.test_data = get_examples(test_data, model.word_encoder, vocab)# criterionself.criterion = nn.CrossEntropyLoss()# label nameself.target_names = vocab.target_names# optimizerself.optimizer = Optimizer(model.all_parameters, steps=self.batch_num * epochs)# countself.step = 0self.early_stop = -1self.best_train_f1, self.best_dev_f1 = 0, 0self.last_epoch = epochsdef train(self):logging.info('Start training...')for epoch in range(1, epochs + 1):train_f1 = self._train(epoch)dev_f1 = self._eval(epoch)if self.best_dev_f1 <= dev_f1:logging.info("Exceed history dev = %.2f, current dev = %.2f" % (self.best_dev_f1, dev_f1))torch.save(self.model.state_dict(), save_model)self.best_train_f1 = train_f1self.best_dev_f1 = dev_f1self.early_stop = 0else:self.early_stop += 1if self.early_stop == early_stops:logging.info("Eearly stop in epoch %d, best train: %.2f, dev: %.2f" % (epoch - early_stops, self.best_train_f1, self.best_dev_f1))self.last_epoch = epochbreakdef test(self):self.model.load_state_dict(torch.load(save_model))self._eval(self.last_epoch + 1, test=True)def _train(self, epoch):self.optimizer.zero_grad()self.model.train()start_time = time.time()epoch_start_time = time.time()overall_losses = 0losses = 0batch_idx = 1y_pred = []y_true = []for batch_data in data_iter(self.train_data, train_batch_size, shuffle=True):torch.cuda.empty_cache()batch_inputs, batch_labels = self.batch2tensor(batch_data)batch_outputs = self.model(batch_inputs)loss = self.criterion(batch_outputs, batch_labels)loss.backward()loss_value = loss.detach().cpu().item()losses += loss_valueoverall_losses += loss_valuey_pred.extend(torch.max(batch_outputs, dim=1)[1].cpu().numpy().tolist())y_true.extend(batch_labels.cpu().numpy().tolist())nn.utils.clip_grad_norm_(self.optimizer.all_params, max_norm=clip)for optimizer, scheduler in zip(self.optimizer.optims, self.optimizer.schedulers):optimizer.step()scheduler.step()self.optimizer.zero_grad()self.step += 1if batch_idx % log_interval == 0:elapsed = time.time() - start_timelrs = self.optimizer.get_lr()logging.info('| epoch {:3d} | step {:3d} | batch {:3d}/{:3d} | lr{} | loss {:.4f} | s/batch {:.2f}'.format(epoch, self.step, batch_idx, self.batch_num, lrs,losses / log_interval,elapsed / log_interval))losses = 0start_time = time.time()batch_idx += 1overall_losses /= self.batch_numduring_time = time.time() - epoch_start_time# reformatoverall_losses = reformat(overall_losses, 4)score, f1 = get_score(y_true, y_pred)logging.info('| epoch {:3d} | score {} | f1 {} | loss {:.4f} | time {:.2f}'.format(epoch, score, f1,overall_losses,during_time))if set(y_true) == set(y_pred) and self.report:report = classification_report(y_true, y_pred, digits=4, target_names=self.target_names)logging.info('\n' + report)return f1def _eval(self, epoch, test=False):self.model.eval()start_time = time.time()data = self.test_data if test else self.dev_datay_pred = []y_true = []with torch.no_grad():for batch_data in data_iter(data, test_batch_size, shuffle=False):torch.cuda.empty_cache()batch_inputs, batch_labels = self.batch2tensor(batch_data)batch_outputs = self.model(batch_inputs)y_pred.extend(torch.max(batch_outputs, dim=1)[1].cpu().numpy().tolist())y_true.extend(batch_labels.cpu().numpy().tolist())score, f1 = get_score(y_true, y_pred)during_time = time.time() - start_timeif test:df = pd.DataFrame({'label': y_pred})df.to_csv(save_test, index=False, sep=',')else:logging.info('| epoch {:3d} | dev | score {} | f1 {} | time {:.2f}'.format(epoch, score, f1,during_time))if set(y_true) == set(y_pred) and self.report:report = classification_report(y_true, y_pred, digits=4, target_names=self.target_names)logging.info('\n' + report)return f1def batch2tensor(self, batch_data):'''[[label, doc_len, [[sent_len, [sent_id0, ...], [sent_id1, ...]], ...]]'''batch_size = len(batch_data)doc_labels = []doc_lens = []doc_max_sent_len = []for doc_data in batch_data:doc_labels.append(doc_data[0])doc_lens.append(doc_data[1])sent_lens = [sent_data[0] for sent_data in doc_data[2]]max_sent_len = max(sent_lens)doc_max_sent_len.append(max_sent_len)max_doc_len = max(doc_lens)max_sent_len = max(doc_max_sent_len)batch_inputs1 = torch.zeros((batch_size, max_doc_len, max_sent_len), dtype=torch.int64)batch_inputs2 = torch.zeros((batch_size, max_doc_len, max_sent_len), dtype=torch.int64)batch_masks = torch.zeros((batch_size, max_doc_len, max_sent_len), dtype=torch.float32)batch_labels = torch.LongTensor(doc_labels)for b in range(batch_size):for sent_idx in range(doc_lens[b]):sent_data = batch_data[b][2][sent_idx]for word_idx in range(sent_data[0]):batch_inputs1[b, sent_idx, word_idx] = sent_data[1][word_idx]batch_inputs2[b, sent_idx, word_idx] = sent_data[2][word_idx]batch_masks[b, sent_idx, word_idx] = 1if use_cuda:batch_inputs1 = batch_inputs1.to(device)batch_inputs2 = batch_inputs2.to(device)batch_masks = batch_masks.to(device)batch_labels = batch_labels.to(device)return (batch_inputs1, batch_inputs2, batch_masks), batch_labels

print("结束build trainer")print("开始train")

# train

trainer = Trainer(model, vocab)

trainer.train()

print("结束train")print("开始test")

# test

trainer.test()

print("结束test")print("程序结束")logging.basicConfig(level=logging.INFO, format='%(asctime)-15s %(levelname)s: %(message)s')

logging.info("Use cuda: %s, gpu id: %d.", use_cuda, gpu)

score:0.9221

比赛源自:阿里云天池大赛 - 零基础入门NLP - 新闻文本分类

)