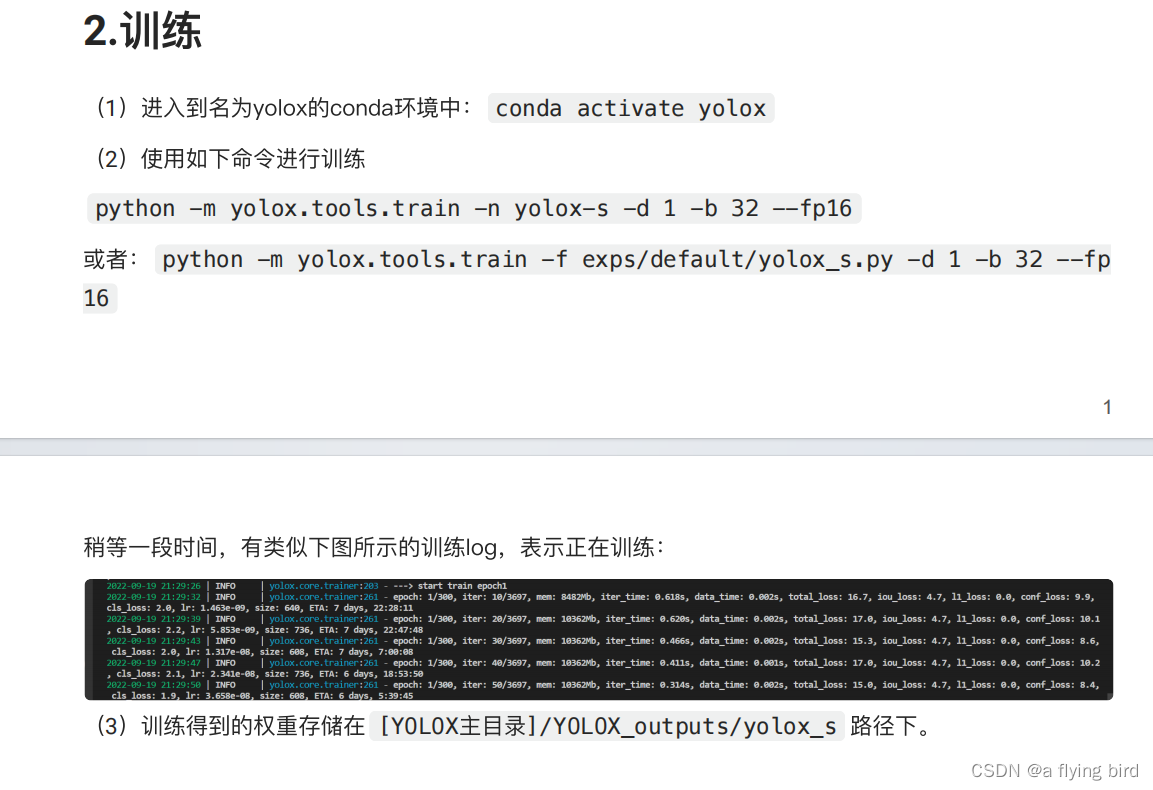

Note: if the GPU runs out of memory during training, reduce the batch size (the `-b` value in the training command).
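As a rough sketch of what a smaller batch size changes besides memory use, the snippet below computes the peak learning rate and the number of iterations per epoch. It assumes YOLOX scales the learning rate as `basic_lr_per_img * batch_size` (the per-image value is taken from the exp table in the log) and that the training set is COCO train2017 with 118,287 images. The image count is not stated in the log, and `batch_size_effects` is a hypothetical helper name.

```python
import math
from typing import Tuple

BASIC_LR_PER_IMG = 0.00015625  # from the "exp value" table in the log
NUM_TRAIN_IMAGES = 118_287     # assumption: COCO train2017 image count


def batch_size_effects(batch_size: int) -> Tuple[float, int]:
    """Return (peak learning rate, iterations per epoch) for a batch size."""
    peak_lr = BASIC_LR_PER_IMG * batch_size
    iters_per_epoch = math.ceil(NUM_TRAIN_IMAGES / batch_size)
    return peak_lr, iters_per_epoch


for b in (16, 8, 4):
    lr, iters = batch_size_effects(b)
    print(f"-b {b}: peak lr = {lr:.6f}, iterations per epoch = {iters}")
```

For `-b 16` this gives 7393 iterations per epoch, which agrees with the `iter: 10/7393` counter in the training log.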

(yolox) xuefei@f123:/mnt/d/BaiduNetdiskDownload/CV/YOLOX$ ls

LICENSE README.md assets checkpoints demo exps requirements.txt setup.py tools yolox

MANIFEST.in YOLOX_outputs build datasets docs hubconf.py setup.cfg tests venv yolox.egg-info

(yolox) xuefei@f123:/mnt/d/BaiduNetdiskDownload/CV/YOLOX$ python -m yolox.tools.train -n yolox-s -d 1 -b 16 --fp16

2024-02-02 08:36:25 | INFO | yolox.core.trainer:130 - args: Namespace(batch_size=16, cache=None, ckpt=None, devices=1, dist_backend='nccl', dist_url=None, exp_file=None, experiment_name='yolox_s', fp16=True, logger='tensorboard', machine_rank=0, name='yolox-s', num_machines=1, occupy=False, opts=[], resume=False, start_epoch=None)

2024-02-02 08:36:25 | INFO | yolox.core.trainer:131 - exp value:

╒═══════════════════╤════════════════════════════╕

│ keys │ values │

╞═══════════════════╪════════════════════════════╡

│ seed │ None │

├───────────────────┼────────────────────────────┤

│ output_dir │ './YOLOX_outputs' │

├───────────────────┼────────────────────────────┤

│ print_interval │ 10 │

├───────────────────┼────────────────────────────┤

│ eval_interval │ 10 │

├───────────────────┼────────────────────────────┤

│ dataset │ None │

├───────────────────┼────────────────────────────┤

│ num_classes │ 80 │

├───────────────────┼────────────────────────────┤

│ depth │ 0.33 │

├───────────────────┼────────────────────────────┤

│ width │ 0.5 │

├───────────────────┼────────────────────────────┤

│ act │ 'silu' │

├───────────────────┼────────────────────────────┤

│ data_num_workers │ 4 │

├───────────────────┼────────────────────────────┤

│ input_size │ (640, 640) │

├───────────────────┼────────────────────────────┤

│ multiscale_range │ 5 │

├───────────────────┼────────────────────────────┤

│ data_dir │ None │

├───────────────────┼────────────────────────────┤

│ train_ann │ 'instances_train2017.json' │

├───────────────────┼────────────────────────────┤

│ val_ann │ 'instances_val2017.json' │

├───────────────────┼────────────────────────────┤

│ test_ann │ 'instances_test2017.json' │

├───────────────────┼────────────────────────────┤

│ mosaic_prob │ 1.0 │

├───────────────────┼────────────────────────────┤

│ mixup_prob │ 1.0 │

├───────────────────┼────────────────────────────┤

│ hsv_prob │ 1.0 │

├───────────────────┼────────────────────────────┤

│ flip_prob │ 0.5 │

├───────────────────┼────────────────────────────┤

│ degrees │ 10.0 │

├───────────────────┼────────────────────────────┤

│ translate │ 0.1 │

├───────────────────┼────────────────────────────┤

│ mosaic_scale │ (0.1, 2) │

├───────────────────┼────────────────────────────┤

│ enable_mixup │ True │

├───────────────────┼────────────────────────────┤

│ mixup_scale │ (0.5, 1.5) │

├───────────────────┼────────────────────────────┤

│ shear │ 2.0 │

├───────────────────┼────────────────────────────┤

│ warmup_epochs │ 5 │

├───────────────────┼────────────────────────────┤

│ max_epoch │ 300 │

├───────────────────┼────────────────────────────┤

│ warmup_lr │ 0 │

├───────────────────┼────────────────────────────┤

│ min_lr_ratio │ 0.05 │

├───────────────────┼────────────────────────────┤

│ basic_lr_per_img │ 0.00015625 │

├───────────────────┼────────────────────────────┤

│ scheduler │ 'yoloxwarmcos' │

├───────────────────┼────────────────────────────┤

│ no_aug_epochs │ 15 │

├───────────────────┼────────────────────────────┤

│ ema │ True │

├───────────────────┼────────────────────────────┤

│ weight_decay │ 0.0005 │

├───────────────────┼────────────────────────────┤

│ momentum │ 0.9 │

├───────────────────┼────────────────────────────┤

│ save_history_ckpt │ True │

├───────────────────┼────────────────────────────┤

│ exp_name │ 'yolox_s' │

├───────────────────┼────────────────────────────┤

│ test_size │ (640, 640) │

├───────────────────┼────────────────────────────┤

│ test_conf │ 0.01 │

├───────────────────┼────────────────────────────┤

│ nmsthre │ 0.65 │

╘═══════════════════╧════════════════════════════╛
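The table lists `warmup_epochs`, `warmup_lr`, `basic_lr_per_img` and the `yoloxwarmcos` scheduler. The sketch below shows how these values could combine into the very small learning rates printed in the iteration log. The quadratic warmup formula is an assumption, chosen because it reproduces the logged values. The 7393 iterations per epoch come from the `iter: 10/7393` counter in the log, and `warmup_lr` is a hypothetical helper name.

```python
BASIC_LR_PER_IMG = 0.00015625  # exp table
BATCH_SIZE = 16                # from "-b 16"
WARMUP_EPOCHS = 5              # exp table
WARMUP_LR_START = 0.0          # exp table ("warmup_lr")
ITERS_PER_EPOCH = 7393         # from the "iter: 10/7393" counter in the log


def warmup_lr(iteration: int) -> float:
    """Learning rate during warmup, assuming a quadratic ramp to the peak lr."""
    peak_lr = BASIC_LR_PER_IMG * BATCH_SIZE
    warmup_iters = WARMUP_EPOCHS * ITERS_PER_EPOCH
    if not 0 <= iteration <= warmup_iters:
        raise ValueError("this sketch only covers the warmup phase")
    progress = iteration / warmup_iters
    return (peak_lr - WARMUP_LR_START) * progress ** 2 + WARMUP_LR_START


for it in (10, 20, 100):
    print(f"iter {it}: lr = {warmup_lr(it):.3e}")
```

Under these assumptions the three values agree with the `lr:` column logged at iterations 10, 20 and 100.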

2024-02-02 08:36:25 | INFO | yolox.core.trainer:137 - Model Summary: Params: 8.97M, Gflops: 26.93

2024-02-02 08:36:28 | INFO | yolox.data.datasets.coco:63 - loading annotations into memory...

2024-02-02 08:36:38 | INFO | yolox.data.datasets.coco:63 - Done (t=10.09s)

2024-02-02 08:36:38 | INFO | pycocotools.coco:86 - creating index...

2024-02-02 08:36:39 | INFO | pycocotools.coco:86 - index created!

2024-02-02 08:36:55 | INFO | yolox.core.trainer:155 - init prefetcher, this might take one minute or less...

2024-02-02 08:36:58 | INFO | yolox.data.datasets.coco:63 - loading annotations into memory...

2024-02-02 08:36:59 | INFO | yolox.data.datasets.coco:63 - Done (t=0.58s)

2024-02-02 08:36:59 | INFO | pycocotools.coco:86 - creating index...

2024-02-02 08:36:59 | INFO | pycocotools.coco:86 - index created!

2024-02-02 08:36:59 | INFO | yolox.core.trainer:191 - Training start...

2024-02-02 08:36:59 | INFO | yolox.core.trainer:192 -

YOLOX(

(backbone): YOLOPAFPN(

(backbone): CSPDarknet(

(stem): Focus(

(conv): BaseConv(

(conv): Conv2d(12, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(dark2): Sequential(

(0): BaseConv(

(conv): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): CSPLayer(

(conv1): BaseConv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv3): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

)

(dark3): Sequential(

(0): BaseConv(

(conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): CSPLayer(

(conv1): BaseConv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv3): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(1): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(2): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

)

(dark4): Sequential(

(0): BaseConv(

(conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): CSPLayer(

(conv1): BaseConv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv3): BaseConv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(1): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(2): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

)

(dark5): Sequential(

(0): BaseConv(

(conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): SPPBottleneck(

(conv1): BaseConv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): ModuleList(

(0): MaxPool2d(kernel_size=5, stride=1, padding=2, dilation=1, ceil_mode=False)

(1): MaxPool2d(kernel_size=9, stride=1, padding=4, dilation=1, ceil_mode=False)

(2): MaxPool2d(kernel_size=13, stride=1, padding=6, dilation=1, ceil_mode=False)

)

(conv2): BaseConv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(2): CSPLayer(

(conv1): BaseConv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv3): BaseConv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

)

)

(upsample): Upsample(scale_factor=2.0, mode=nearest)

(lateral_conv0): BaseConv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(C3_p4): CSPLayer(

(conv1): BaseConv(

(conv): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv3): BaseConv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(reduce_conv1): BaseConv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(C3_p3): CSPLayer(

(conv1): BaseConv(

(conv): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv3): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(bu_conv2): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(C3_n3): CSPLayer(

(conv1): BaseConv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv3): BaseConv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

(bu_conv1): BaseConv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(C3_n4): CSPLayer(

(conv1): BaseConv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv3): BaseConv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): Bottleneck(

(conv1): BaseConv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(conv2): BaseConv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

)

)

(head): YOLOXHead(

(cls_convs): ModuleList(

(0): Sequential(

(0): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(1): Sequential(

(0): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(2): Sequential(

(0): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

(reg_convs): ModuleList(

(0): Sequential(

(0): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(1): Sequential(

(0): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(2): Sequential(

(0): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

)

(cls_preds): ModuleList(

(0): Conv2d(128, 80, kernel_size=(1, 1), stride=(1, 1))

(1): Conv2d(128, 80, kernel_size=(1, 1), stride=(1, 1))

(2): Conv2d(128, 80, kernel_size=(1, 1), stride=(1, 1))

)

(reg_preds): ModuleList(

(0): Conv2d(128, 4, kernel_size=(1, 1), stride=(1, 1))

(1): Conv2d(128, 4, kernel_size=(1, 1), stride=(1, 1))

(2): Conv2d(128, 4, kernel_size=(1, 1), stride=(1, 1))

)

(obj_preds): ModuleList(

(0): Conv2d(128, 1, kernel_size=(1, 1), stride=(1, 1))

(1): Conv2d(128, 1, kernel_size=(1, 1), stride=(1, 1))

(2): Conv2d(128, 1, kernel_size=(1, 1), stride=(1, 1))

)

(stems): ModuleList(

(0): BaseConv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(1): BaseConv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

(2): BaseConv(

(conv): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)

)

(l1_loss): L1Loss()

(bcewithlog_loss): BCEWithLogitsLoss()

(iou_loss): IOUloss()

)

)
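The channel counts and block counts in the printed model follow from the `width` (0.5) and `depth` (0.33) multipliers in the exp table. The sketch below is a reading of the printout, not the library's code: the base values 64 and 3, the rounding rule, and the output strides 8, 16 and 32 are assumptions that happen to match what is printed.

```python
WIDTH = 0.5        # exp table
DEPTH = 0.33       # exp table
NUM_CLASSES = 80   # exp table
INPUT_SIZE = 640   # exp table, input_size = (640, 640)

# Assumed scaling rule: base width 64 and base depth 3, scaled by the multipliers.
base_channels = int(64 * WIDTH)
base_depth = max(round(3 * DEPTH), 1)

# (output channels, number of Bottleneck blocks) per backbone stage.
stages = {
    "dark2": (base_channels * 2, base_depth),
    "dark3": (base_channels * 4, base_depth * 3),
    "dark4": (base_channels * 8, base_depth * 3),
    "dark5": (base_channels * 16, base_depth),
}

# The head predicts on three feature maps; strides 8/16/32 are assumed here.
strides = (8, 16, 32)
num_predictions = sum((INPUT_SIZE // s) ** 2 for s in strides)
values_per_prediction = 4 + 1 + NUM_CLASSES  # box, objectness, class scores

for name, (channels, blocks) in stages.items():
    print(f"{name}: {channels} channels, {blocks} bottleneck block(s)")
print(f"{num_predictions} predictions x {values_per_prediction} values")
```

The per-stage numbers can be checked against the printout: `dark3` and `dark4` each contain three `Bottleneck` blocks, while `dark2` and `dark5` contain one.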

2024-02-02 08:36:59 | INFO | yolox.core.trainer:203 - ---> start train epoch1

2024-02-02 08:37:07 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 10/7393, gpu mem: 3713Mb, mem: 6.5Gb, iter_time: 0.751s, data_time: 0.008s, total_loss: 20.3, iou_loss: 4.8, l1_loss: 0.0, conf_loss: 13.7, cls_loss: 1.9, lr: 1.830e-10, size: 640, ETA: 19 days, 6:56:56

2024-02-02 08:37:12 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 20/7393, gpu mem: 3713Mb, mem: 6.5Gb, iter_time: 0.467s, data_time: 0.001s, total_loss: 15.7, iou_loss: 4.6, l1_loss: 0.0, conf_loss: 9.0, cls_loss: 2.1, lr: 7.318e-10, size: 640, ETA: 15 days, 15:17:49

2024-02-02 08:37:18 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 30/7393, gpu mem: 3713Mb, mem: 6.5Gb, iter_time: 0.616s, data_time: 0.002s, total_loss: 16.7, iou_loss: 4.6, l1_loss: 0.0, conf_loss: 10.0, cls_loss: 2.1, lr: 1.647e-09, size: 576, ETA: 15 days, 16:38:41

2024-02-02 08:37:30 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 40/7393, gpu mem: 4978Mb, mem: 6.5Gb, iter_time: 1.207s, data_time: 0.002s, total_loss: 17.8, iou_loss: 4.7, l1_loss: 0.0, conf_loss: 11.1, cls_loss: 2.0, lr: 2.927e-09, size: 800, ETA: 19 days, 12:21:38

2024-02-02 08:37:36 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 50/7393, gpu mem: 4978Mb, mem: 6.5Gb, iter_time: 0.630s, data_time: 0.002s, total_loss: 15.8, iou_loss: 4.7, l1_loss: 0.0, conf_loss: 8.9, cls_loss: 2.2, lr: 4.574e-09, size: 544, ETA: 18 days, 20:17:13

2024-02-02 08:37:45 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 60/7393, gpu mem: 4978Mb, mem: 6.5Gb, iter_time: 0.916s, data_time: 0.002s, total_loss: 17.5, iou_loss: 4.7, l1_loss: 0.0, conf_loss: 10.9, cls_loss: 1.9, lr: 6.587e-09, size: 736, ETA: 19 days, 14:59:49

2024-02-02 08:37:55 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 70/7393, gpu mem: 5059Mb, mem: 6.5Gb, iter_time: 0.994s, data_time: 0.002s, total_loss: 18.5, iou_loss: 4.6, l1_loss: 0.0, conf_loss: 11.8, cls_loss: 2.1, lr: 8.965e-09, size: 800, ETA: 20 days, 11:11:27

2024-02-02 08:38:03 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 80/7393, gpu mem: 5059Mb, mem: 6.5Gb, iter_time: 0.816s, data_time: 0.003s, total_loss: 20.7, iou_loss: 4.7, l1_loss: 0.0, conf_loss: 13.8, cls_loss: 2.2, lr: 1.171e-08, size: 800, ETA: 20 days, 12:36:00

2024-02-02 08:38:10 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 90/7393, gpu mem: 5059Mb, mem: 6.5Gb, iter_time: 0.631s, data_time: 0.002s, total_loss: 18.8, iou_loss: 4.6, l1_loss: 0.0, conf_loss: 12.3, cls_loss: 1.9, lr: 1.482e-08, size: 640, ETA: 20 days, 1:04:39

2024-02-02 08:38:15 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 100/7393, gpu mem: 5059Mb, mem: 6.5Gb, iter_time: 0.504s, data_time: 0.002s, total_loss: 15.7, iou_loss: 4.6, l1_loss: 0.0, conf_loss: 9.1, cls_loss: 2.0, lr: 1.830e-08, size: 480, ETA: 19 days, 8:00:53

2024-02-02 08:38:22 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 110/7393, gpu mem: 5059Mb, mem: 6.5Gb, iter_time: 0.746s, data_time: 0.002s, total_loss: 18.6, iou_loss: 4.7, l1_loss: 0.0, conf_loss: 11.8, cls_loss: 2.1, lr: 2.214e-08, size: 672, ETA: 19 days, 7:36:25

2024-02-02 08:38:27 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 120/7393, gpu mem: 5059Mb, mem: 6.5Gb, iter_time: 0.519s, data_time: 0.002s, total_loss: 15.7, iou_loss: 4.6, l1_loss: 0.0, conf_loss: 9.0, cls_loss: 2.0, lr: 2.635e-08, size: 512, ETA: 18 days, 19:38:05

2024-02-02 08:38:32 | INFO | yolox.core.trainer:263 - epoch: 1/300, iter: 130/7393, gpu mem: 5059Mb, mem: 6.5Gb, iter_time: 0.463s, data_time: 0.001s, total_loss: 17.6, iou_loss: 4.7, l1_loss: 0.0, conf_loss: 11.1, cls_loss: 1.9, lr: 3.092e-08, size: 640, ETA: 18 days, 6:51:07

^C2024-02-02 08:38:32 | INFO | yolox.core.trainer:196 - Training of experiment is done and the best AP is 0.00
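The ETA column of about 19 days can be roughly reproduced from the other logged values. The sketch below assumes the ETA is the average iteration time multiplied by the number of remaining iterations. That rule is an assumption, not something the log states, and `estimate_eta` is a hypothetical helper name.

```python
from datetime import timedelta

MAX_EPOCH = 300          # exp table
ITERS_PER_EPOCH = 7393   # from the "iter: 10/7393" counter in the log


def estimate_eta(avg_iter_time_s: float, iters_done: int) -> timedelta:
    """Remaining time, assuming every remaining iteration takes the average time."""
    remaining_iters = MAX_EPOCH * ITERS_PER_EPOCH - iters_done
    return timedelta(seconds=int(avg_iter_time_s * remaining_iters))


# First log line: iter 10, iter_time 0.751s
print(estimate_eta(0.751, 10))

# Second log line: iter 20, running average of 0.751s and 0.467s
print(estimate_eta((0.751 + 0.467) / 2, 20))
```

The results land close to, but not exactly on, the logged ETAs because the logged iteration times are rounded. The estimate moves between roughly 15 and 20 days in the log, most likely because the iteration time changes with the multiscale input size (480 to 800 in the `size:` column).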