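Contents

- Error

- Check the installed torch version

- Uninstall

- Install the GPU build of torch

- Check the CUDA version

- Manual installation

- Install manually with pip

- Result

- Update CUDA to 12.1

- Done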

Error

After some searching, I found the cause: conda installs the CPU build of torch by default, but the GPU build is needed here.

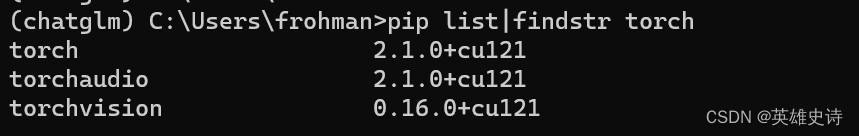

Check the installed torch version

pip list

A version with no suffix, like this one, is the CPU build. A GPU build carries a suffix such as +cu121.
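The same check can be done from Python. This is a minimal sketch; the helper name `build_flavor` is hypothetical. The suffix rule is only a heuristic, so the sketch also prints `torch.version.cuda` (which is `None` on a CPU-only build) and `torch.cuda.is_available()`, which is the authoritative answer to "can torch use the GPU right now".

```python
import importlib.util


def build_flavor(version: str) -> str:
    """Classify a torch version string such as "2.1.0+cu121" by its local suffix.

    Heuristic only: a "+cuXXX" suffix is treated as a CUDA build; "+cpu" or
    no suffix at all is treated as a CPU build.
    """
    _, _, local = version.partition("+")
    return "cuda" if local.startswith("cu") else "cpu"


if __name__ == "__main__":
    if importlib.util.find_spec("torch") is None:
        print("torch is not installed in this environment")
    else:
        import torch

        print("torch.__version__   :", torch.__version__)
        print("suffix heuristic    :", build_flavor(torch.__version__))
        # None on CPU-only builds; a string such as "12.1" on CUDA builds
        print("torch.version.cuda  :", torch.version.cuda)
        print("cuda.is_available() :", torch.cuda.is_available())
```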

Uninstall

pip uninstall torch

Install the GPU build of torch

Note: pay close attention to version compatibility here. Both of the following must match:

1. CUDA: 12.0

2. Python: 3.11
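Both constraints are encoded in the wheel file name. In `torchaudio-2.1.0+cu121-cp311-cp311-win_amd64.whl`, `cu121` is the CUDA version the build targets and `cp311` is the CPython 3.11 tag. The sketch below uses only the standard library and hypothetical helpers (`parse_wheel_name`, `matches_this_python`). It splits a name into those parts and compares the Python tag with the running interpreter. It assumes the usual `name-version-pytag-abitag-platform.whl` layout with no optional build tag.

```python
import sys


def parse_wheel_name(filename: str) -> dict:
    """Split a wheel name of the form name-version-pytag-abitag-platform.whl."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) != 5:
        raise ValueError(f"unexpected wheel name layout: {filename}")
    name, version, py_tag, abi_tag, platform = parts
    _, _, local = version.partition("+")  # e.g. "cu121" from "2.1.0+cu121"
    return {
        "name": name,
        "version": version,
        "cuda_tag": local if local.startswith("cu") else None,
        "py_tag": py_tag,
        "abi_tag": abi_tag,
        "platform": platform,
    }


def matches_this_python(info: dict) -> bool:
    """True if the wheel's CPython tag (e.g. cp311) equals the running interpreter."""
    return info["py_tag"] == f"cp{sys.version_info.major}{sys.version_info.minor}"


if __name__ == "__main__":
    info = parse_wheel_name("torchaudio-2.1.0+cu121-cp311-cp311-win_amd64.whl")
    print(info)
    print("matches this Python:", matches_this_python(info))
```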

Check the CUDA version

Command: nvidia-smi

Mine is 12.0, which is fairly recent, so I decided not to update it.
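To read this number from a script, one option is to run `nvidia-smi` and extract `CUDA Version: X.Y` from its header. That field is the highest CUDA version the installed driver supports, which is not necessarily the same as an installed toolkit. This sketch assumes `nvidia-smi` is on `PATH` and that the header keeps its usual `CUDA Version:` label. `parse_cuda_version`, `driver_cuda_version` and `driver_supports` are hypothetical helper names.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

_CUDA_RE = re.compile(r"CUDA Version:\s*(\d+)\.(\d+)")


def parse_cuda_version(smi_output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from the 'CUDA Version: X.Y' field of nvidia-smi output."""
    m = _CUDA_RE.search(smi_output)
    return (int(m.group(1)), int(m.group(2))) if m else None


def driver_cuda_version() -> Optional[Tuple[int, int]]:
    """Run nvidia-smi if it is available and return the driver's CUDA version."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None
    out = subprocess.run([exe], capture_output=True, text=True, check=False).stdout
    return parse_cuda_version(out)


def driver_supports(required: Tuple[int, int], available: Optional[Tuple[int, int]]) -> bool:
    """Compare versions as integer tuples, so (12, 0) < (12, 1)."""
    return available is not None and available >= required


if __name__ == "__main__":
    found = driver_cuda_version()
    print("driver CUDA version:", found)
    # Compare against 12.1, the CUDA version the cu121 wheels target
    print("at least 12.1:", driver_supports((12, 1), found))
```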

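Manual installation

Installing directly from the package index is very slow, so I recommend downloading the wheel files first and installing them manually.

Download page (SJTU mirror): https://mirror.sjtu.edu.cn/pytorch-wheels/cu121/?mirror_intel_list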

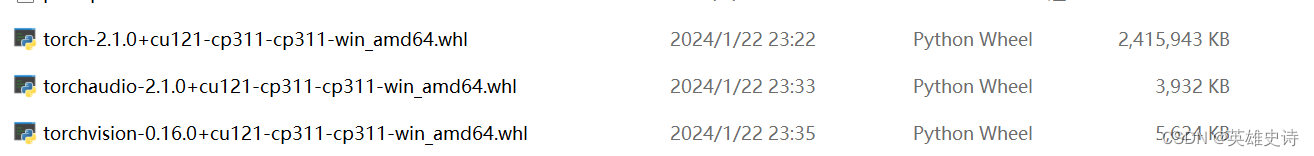

Three files are needed:

Install manually with pip

pip install "d:\ai\torchaudio-2.1.0+cu121-cp311-cp311-win_amd64.whl"
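If you want to install all the downloaded wheels from a script, a minimal sketch follows. Calling pip as `sys.executable -m pip` installs into the interpreter that runs the script (here, the `chatglm` conda environment seen in the log further down) rather than into whichever `pip` comes first on `PATH`. Passing every wheel in one call also lets pip resolve them together. The folder `d:\ai` comes from the command above. The helper names and the `dry_run` default are assumptions for illustration.

```python
import glob
import os
import subprocess
import sys
from typing import List


def pip_install_command(wheels: List[str]) -> List[str]:
    """Build a pip command that installs the given wheels into this interpreter."""
    if not wheels:
        raise ValueError("no wheel files given")
    return [sys.executable, "-m", "pip", "install", *wheels]


def install_local_wheels(folder: str, dry_run: bool = True) -> List[str]:
    """Collect torch*.whl files (torch, torchaudio, torchvision) and install them in one call."""
    wheels = sorted(glob.glob(os.path.join(folder, "torch*.whl")))
    cmd = pip_install_command(wheels)
    if dry_run:
        print(" ".join(cmd))
    else:
        # An argument list (no shell) means paths with spaces need no extra quoting.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    folder = r"d:\ai"  # download folder used in the command above
    if os.path.isdir(folder):
        install_local_wheels(folder, dry_run=True)
```

Switch `dry_run` to `False` once the printed command looks right.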

Result

The previous error is gone.

But a new error appeared:

```
  File "D:\ProgramData\anaconda3\envs\chatglm\Lib\site-packages\cpm_kernels\library\base.py", line 72, in wrapper
    raise RuntimeError("Library %s is not initialized" % self.__name)
RuntimeError: Library cublasLt is not initialized
```

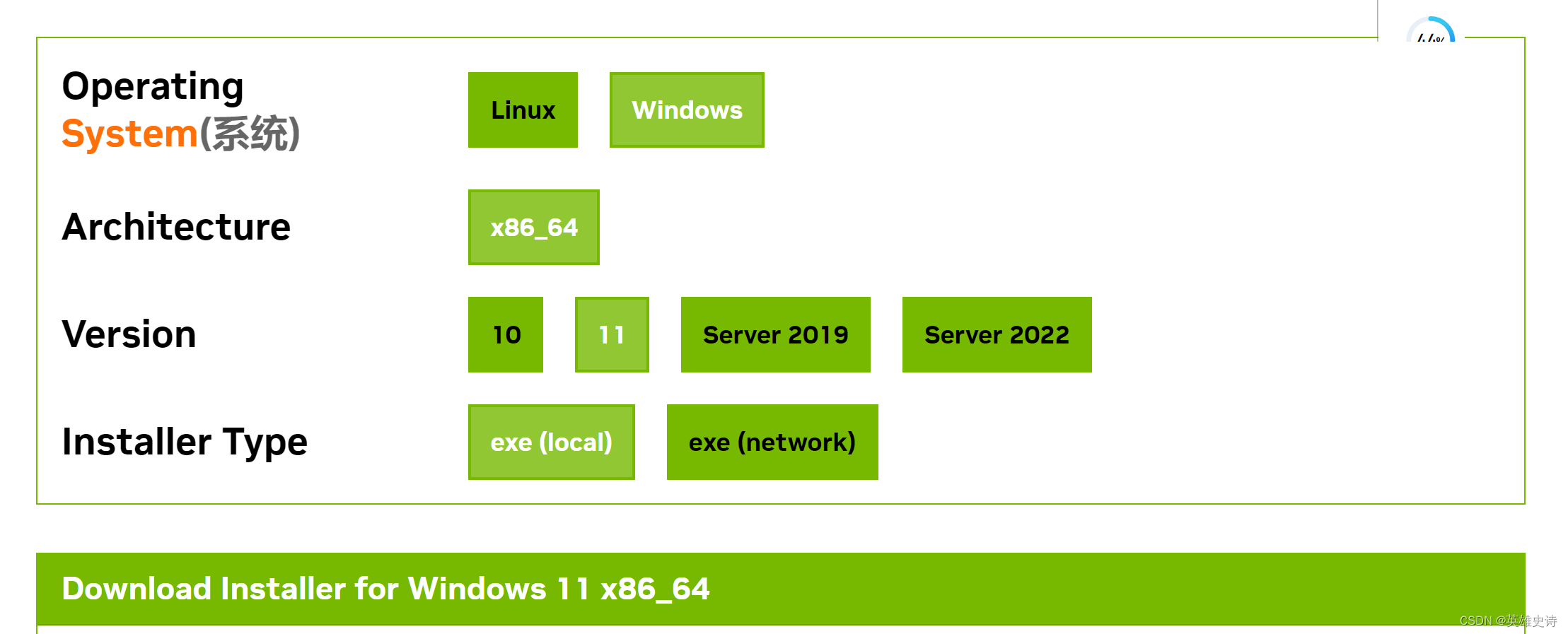

Update CUDA to 12.1

The file cudart64_65.dll was missing under NVIDIA Corporation\PhysX\Common.
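To check whether a DLL such as `cudart64_*.dll` or `cublasLt64_*.dll` can be found before and after updating CUDA, one option is to scan every directory on `PATH`. This is only a rough diagnostic. It assumes the DLLs are located through `PATH`, as is common on Windows, and it does not reproduce exactly how `cpm_kernels` or torch load their libraries. `find_on_path` is a hypothetical helper.

```python
import glob
import os
from typing import List, Optional


def find_on_path(pattern: str, path_value: Optional[str] = None) -> List[str]:
    """Return files matching `pattern` (e.g. 'cudart64_*.dll') in each PATH directory."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    hits: List[str] = []
    for directory in path_value.split(os.pathsep):
        if directory and os.path.isdir(directory):
            hits.extend(sorted(glob.glob(os.path.join(directory, pattern))))
    return hits


if __name__ == "__main__":
    for pattern in ("cudart64_*.dll", "cublasLt64_*.dll"):
        matches = find_on_path(pattern)
        print(pattern, "->", matches if matches else "not found on PATH")
```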

I realized my CUDA was 12.0, slightly behind the 12.1 that this torch build expects, so I updated to 12.1.

The file appeared.


Done

I ran the program again, and it finally worked!

```python
# Load the local ChatGLM3-6B model, quantize it to 4-bit, and run one chat turn on the GPU.
from transformers import AutoModel, AutoTokenizer

# Raw string so the Windows backslashes are not read as escape sequences.
MODEL_PATH = r"D:\AI\chatglm3-6b"

tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH, trust_remote_code=True)
# .cuda() requires a CUDA build of torch; on a CPU-only build it raises an error.
model = AutoModel.from_pretrained(MODEL_PATH, trust_remote_code=True).quantize(4).half().cuda()
model = model.eval()

# model.chat comes from the model's remote code and returns (reply, updated history).
# "你好" means "Hello".
response, history = model.chat(tokenizer, "你好", history=[])
print(response)
```

Output:

```
D:\ProgramData\anaconda3\envs\chatglm\python.exe C:\Users\frohman\PycharmProjects\pythonProject\main.py
Explicitly passing a `revision` is encouraged when loading a model with custom code to ensure no malicious code has been contributed in a newer revision.
Explicitly passing a `revision` is encouraged when loading a configuration with custom code to ensure no malicious code has been contributed in a newer revision.
Explicitly passing a `revision` is encouraged when loading a model with custom code to ensure no malicious code has been contributed in a newer revision.
Loading checkpoint shards: 100%|██████████| 7/7 [00:50<00:00, 7.27s/it]
你好👋!我是人工智能助手 ChatGLM3-6B,很高兴见到你,欢迎问我任何问题。

Process finished with exit code 0
```

The model's reply translates to: "Hello 👋! I'm the AI assistant ChatGLM3-6B. Nice to meet you, feel free to ask me anything."
