1.How is a grayscale image represented on a computer? How about a color image?

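Grayscale image: a single channel, with each pixel stored as an integer from 0 to 255.

Color image: three channels (RGB or HSV), with each value from 0 to 255.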

2.How are the files and folders in the MNIST_SAMPLE dataset structured? Why?

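The dataset is split into a training set and a validation set (the train and valid folders). Each contains a 3 folder and a 7 folder holding images of that handwritten digit. The folder name serves as the label, which is a common layout for machine learning datasets.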

3.Explain how the “pixel similarity” approach to classifying digits works.

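Average all the images of each digit to define an "ideal" 3 and an "ideal" 7. An image that is closer to the ideal 3 is classified as a 3; one that is closer to the ideal 7 is classified as a 7.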
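A minimal sketch of the idea, assuming tiny made-up 2×2 "images" in place of the real MNIST digits. The names `distance` and `is_3` and all the data are illustrative assumptions.

```python
import torch

# Hypothetical toy data: three 2x2 "images" per class instead of real digits.
threes = torch.stack([torch.tensor([[0., 1.], [1., 0.]]) + 0.1 * i for i in range(3)])
sevens = torch.stack([torch.tensor([[1., 0.], [0., 1.]]) + 0.1 * i for i in range(3)])

# The "ideal" digit of each class is the pixel-wise mean over that class.
mean3 = threes.mean(0)
mean7 = sevens.mean(0)

def distance(a, b):
    # Mean absolute difference over the last two axes (the pixels).
    return (a - b).abs().mean((-1, -2))

def is_3(x):
    # Classify as a 3 when x is closer to the ideal 3 than to the ideal 7.
    return distance(x, mean3) < distance(x, mean7)

sample = torch.tensor([[0.1, 0.9], [0.8, 0.2]])
print(is_3(sample))
```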

4.What is a list comprehension? Create one now that selects odd numbers from a list and doubles them.

List Comprehensions:A list comprehension looks like this: new_list = [f(o) for o in a_list if o>0]. This will return every element of a_list that is greater than 0, after passing it to the function f. There are three parts here: the collection you are iterating over (a_list), an optional filter (if o>0), and something to do to each element (f(o)).

list1 = range(20)

list2 = [2*n for n in list1 if n%2 == 1]

5.What is a “rank-3 tensor”?

A rank-3 tensor is a tensor with three axes (a three-dimensional tensor). For example, to compute the average intensity of each pixel position over all the images, we first stack all the images in the list into a single three-dimensional tensor: one axis indexes the images, and the other two index the pixel rows and columns.

6.What is the difference between tensor rank and shape? How do you get the rank from the shape?

The length of a tensor’s shape is its rank.

Rank is the number of axes or dimensions in a tensor; shape is the size of each axis of a tensor.
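A short illustration, assuming a made-up stack of ten 28×28 images:

```python
import torch

# Hypothetical stack of 10 images, each 28x28 pixels.
stacked = torch.zeros(10, 28, 28)

print(stacked.shape)       # the size of each axis
print(len(stacked.shape))  # rank = number of axes
print(stacked.ndim)        # the same rank, read directly
```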

7.What are RMSE and L1 norm?

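RMSE: root mean squared error, the square root of the mean of the squared differences (also called the L2 norm).

L1 norm: the mean of the absolute values of the differences (also called the mean absolute difference).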
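Both can be computed in a line each. The values below are made up for illustration.

```python
import torch

# Hypothetical predictions and targets, chosen only for illustration.
preds = torch.tensor([1.0, 2.0, 3.0, 4.0])
targets = torch.tensor([1.0, 2.0, 3.0, 8.0])

diff = preds - targets
l1 = diff.abs().mean()            # mean absolute difference (L1 norm)
rmse = (diff ** 2).mean().sqrt()  # root mean squared error (L2 norm)
print(l1.item(), rmse.item())
```

Because the differences are squared before averaging, RMSE penalizes the single large error here more heavily than the L1 norm does.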

8.How can you apply a calculation on thousands of numbers at once, many thousands of times faster than a Python loop?

Do the calculation with tensors (or NumPy arrays) instead of a Python loop.

9.Create a 3×3 tensor or array containing the numbers from 1 to 9. Double it. Select the bottom-right four numbers.

from torch import tensor

data = tensor(range(1,10)).view(3,3)

data = data * 2

data[1:,1:]

10.What is broadcasting?

Broadcasting means that, when an operation involves tensors of different ranks, PyTorch automatically expands the tensor with the smaller rank to have the same size as the one with the larger rank. Broadcasting is an important capability that makes tensor code much easier to write.
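A small example, using made-up values, of adding a rank-1 tensor to a rank-2 tensor:

```python
import torch

matrix = torch.tensor([[1, 2, 3], [4, 5, 6]])  # rank 2, shape (2, 3)
row = torch.tensor([10, 20, 30])                # rank 1, shape (3,)

# `row` is treated as if it were repeated for each row of `matrix`.
result = matrix + row
print(result)
```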

11.Are metrics generally calculated using the training set, or the validation set? Why?

validation set

As we’ve discussed, we want to calculate our metric over a validation set. This is so that we don’t inadvertently overfit—that is, train a model to work well only on our training data. This is not really a risk with the pixel similarity model we’re using here as a first try, since it has no trained components, but we’ll use a validation set anyway to follow normal practices and to be ready for our second try later.

12.What is SGD?

Stochastic gradient descent.

We’ll explain stochastic gradient descent (SGD), the mechanism for learning by updating weights automatically. We’ll discuss the choice of a loss function for our basic classification task, and the role of mini-batches. We’ll also describe the math that a basic neural network is actually doing. Finally, we’ll put all these pieces together.

13.Why does SGD use mini-batches?

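Computing the loss over the whole dataset at every step is too expensive, while computing it on a single item gives an imprecise result and an unstable gradient. Computing on a batch of items at a time balances the two, and mini-batches are also more efficient to compute on a GPU.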

14.What are the seven steps in SGD for machine learning?

(1) Initialize the weights.

(2) For each image, use these weights to predict whether it appears to be a 3 or a 7.

(3) Based on these predictions, calculate how good the model is (its loss).

(4) Calculate the gradient, which measures, for each weight, how changing that weight would change the loss.

(5) Step (that is, change) all the weights based on that calculation.

(6) Go back to step 2 and repeat the process.

(7) Iterate until you decide to stop the training process (for instance, because the model is good enough or you don't want to wait any longer).
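The steps can be sketched on a deliberately simplified problem: one "weight" `x` and a made-up loss function `f`, so there are no images or predictions. The function, starting value, learning rate, and step count are all assumptions for illustration.

```python
import torch

def f(x):
    # Hypothetical loss surface with its minimum at x = 3.
    return (x - 3) ** 2

# Step 1: initialize the weight (fixed here; normally random).
x = torch.tensor(-1.0, requires_grad=True)
lr = 0.1

for step in range(100):       # steps 6-7: repeat, then stop after a fixed budget
    loss = f(x)               # steps 2-3: compute the loss for the current weight
    loss.backward()           # step 4: compute the gradient
    with torch.no_grad():
        x -= lr * x.grad      # step 5: step the weight against the gradient
    x.grad.zero_()            # reset the stored gradient before the next pass

print(x.item())
```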

15.How do we initialize the weights in a model?

Set the initial parameters to random values.

16.What is “loss”?

The value of the loss function; the smaller it is, the better the model.

17.Why can’t we always use a high learning rate?

A learning rate that is too high can make the loss oscillate instead of decreasing.
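A plain-Python sketch of the effect, assuming the toy function f(x) = x², whose gradient is 2x. The helper `descend` is hypothetical.

```python
def descend(lr, steps=10, x=1.0):
    # Gradient descent on f(x) = x**2, whose gradient is 2*x.
    for _ in range(steps):
        x -= lr * 2 * x
    return x

small = descend(lr=0.1)  # shrinks steadily toward the minimum at 0
large = descend(lr=1.1)  # overshoots on every step and grows in magnitude
print(small, large)
```

With `lr=1.1`, each step multiplies `x` by -1.2, so the value flips sign and moves further from the minimum every time.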

18.What is a “gradient”?

The gradients tell us how much we have to change each weight to make our model better. A gradient is essentially a measure of how the loss function changes as the weights of the model change (the derivative).

19.Do you need to know how to calculate gradients yourself?

No, PyTorch can calculate them automatically.

20.Why can’t we use accuracy as a loss function?

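Accuracy only changes when a prediction flips from one class to the other. A small change to the model, such as a change in prediction confidence, leaves the predictions unchanged, so the gradient is 0 and the model cannot be optimized any further.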
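A sketch of the difference, assuming a one-weight model and made-up data. `accuracy` and `smooth_loss` are hypothetical helpers, not functions from any library.

```python
import torch

def accuracy(w, x, y):
    # Thresholded predictions: flat almost everywhere as a function of w.
    return ((w * x > 0).float() == y).float().mean()

def smooth_loss(w, x, y):
    # Distance between sigmoid outputs and targets: responds to any change in w.
    return (torch.sigmoid(w * x) - y).abs().mean()

x = torch.tensor([-2.0, -1.0, 1.0, 2.0])
y = torch.tensor([0.0, 0.0, 1.0, 1.0])
w_a, w_b = torch.tensor(0.5), torch.tensor(0.6)

acc_a, acc_b = accuracy(w_a, x, y), accuracy(w_b, x, y)
loss_a, loss_b = smooth_loss(w_a, x, y), smooth_loss(w_b, x, y)
print(acc_a.item(), acc_b.item(), loss_a.item(), loss_b.item())
```

Nudging the weight from 0.5 to 0.6 leaves the accuracy unchanged but lowers the smooth loss, which is the signal gradient descent needs.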

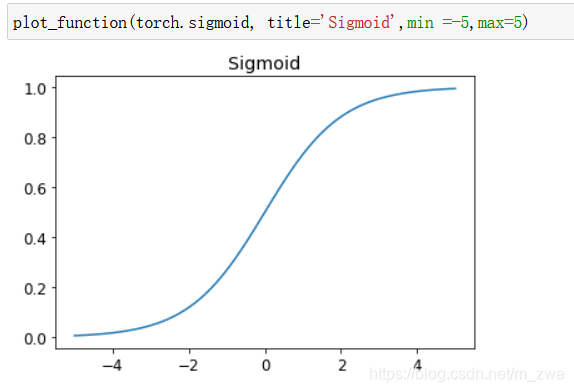

21.Draw the sigmoid function. What is special about its shape?

Its output always lies between 0 and 1. It is a smooth, S-shaped curve that only ever increases.
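A few sample points, chosen arbitrarily, show the shape:

```python
import torch

x = torch.tensor([-4.0, -2.0, 0.0, 2.0, 4.0])
y = torch.sigmoid(x)  # computes 1 / (1 + exp(-x)) element by element
print(y)
```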

22.What is the difference between a loss function and a metric?

The loss function is used to train the model; the metric is used to measure the model's performance.

23.What is the function to calculate new weights using a learning rate?

optimizer step function

24.What does the DataLoader class do?

The DataLoader class can take any Python collection and turn it into an iterator over many batches.
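A minimal sketch using PyTorch's own `torch.utils.data.DataLoader`, on the assumption that it behaves like the class described above for a simple collection. Shuffling is turned off so the batches are predictable.

```python
from torch.utils.data import DataLoader

# Any indexable Python collection works; here, the integers 0..14.
coll = range(15)
dl = DataLoader(coll, batch_size=5, shuffle=False)

# Each batch comes back as a tensor of 5 items.
batches = [b.tolist() for b in dl]
print(batches)
```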

25.Write pseudocode showing the basic steps taken in each epoch for SGD.

    for x, y in dl:
        pred = model(x)
        loss = loss_func(pred, y)
        loss.backward()
        parameter -= parameter.grad * lr

26.Create a function that, if passed two arguments [1,2,3,4] and ‘abcd’, returns [(1, ‘a’), (2, ‘b’), (3, ‘c’), (4, ‘d’)]. What is special about that output data structure?

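def func(x, y): return list(zip(x, y))

The output is a list of tuples, each pairing one element of the first argument with the corresponding element of the second. A dataset is commonly represented the same way, as (input, label) pairs.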

27.What does view do in PyTorch?

It changes the shape of a tensor without changing its contents (a reshape).
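A small example with made-up values. The last lines show that a view shares its data with the original tensor.

```python
import torch

t = torch.arange(6)   # shape (6,)
v = t.view(2, 3)      # same data, viewed as 2 rows of 3
w = t.view(-1, 2)     # -1 lets PyTorch infer that axis (here 3)

v[0, 0] = 100         # writing through the view changes the original too
print(t[0].item(), v.shape, w.shape)
```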

28.What are the “bias” parameters in a neural network? Why do we need them?

Without a bias, the output would always be 0 whenever the input is 0. The bias also makes the model more flexible.

29.What does the @ operator do in Python?

Matrix multiplication.
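For contrast with element-wise `*`, using two made-up 2×2 tensors:

```python
import torch

a = torch.tensor([[1., 2.], [3., 4.]])
b = torch.tensor([[5., 6.], [7., 8.]])

product = a @ b      # matrix multiplication: rows of a times columns of b
elementwise = a * b  # element-by-element multiplication
print(product)
print(elementwise)
```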

30.What does the backward method do?

It calculates the gradients.

31.Why do we have to zero the gradients?

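PyTorch adds the gradients of a variable to any previously stored gradients. If the training loop function is called multiple times without zeroing the gradients, the gradient of the current loss is added to the previously stored gradient value.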
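The accumulation is easy to see on a single made-up weight:

```python
import torch

w = torch.tensor(2.0, requires_grad=True)

(w * 3).backward()
first = w.grad.item()    # gradient of 3*w with respect to w

(w * 3).backward()       # second call without zeroing
second = w.grad.item()   # the new gradient was added to the stored one

w.grad.zero_()
(w * 3).backward()
third = w.grad.item()    # after zeroing, only the current gradient remains
print(first, second, third)
```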

32.What information do we have to pass to Learner?

The DataLoaders, the model, the optimization function, the loss function, and optionally any metrics to print.

33.Show Python or pseudocode for the basic steps of a training loop.

    def train_epoch(model, lr, params):
        for xb, yb in dl:
            calc_grad(xb, yb, model)
            for p in params:
                p.data -= p.grad * lr
                p.grad.zero_()

    for i in range(20):
        train_epoch(model, lr, params)
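The loop above depends on `dl`, `calc_grad`, and `model` being defined elsewhere. A self-contained sketch follows, assuming a made-up linear dataset, a mean squared error loss, and PyTorch's own `DataLoader`. The data, the `model` and `calc_grad` bodies, the learning rate, and the epoch count are all illustrative assumptions.

```python
import torch
from torch.utils.data import DataLoader

torch.manual_seed(0)

# Hypothetical data: y = 2*x + 1, so training should recover w near 2 and b near 1.
xs = torch.linspace(-1, 1, 40).unsqueeze(1)
ys = 2 * xs + 1
dl = DataLoader(list(zip(xs, ys)), batch_size=8, shuffle=True)

w = torch.randn(1, 1, requires_grad=True)
b = torch.zeros(1, requires_grad=True)
params = [w, b]

def model(xb):
    # Linear model: one weight and one bias.
    return xb @ w + b

def calc_grad(xb, yb, model):
    # Assumed loss: mean squared error on the mini-batch.
    loss = ((model(xb) - yb) ** 2).mean()
    loss.backward()

def train_epoch(model, lr, params):
    for xb, yb in dl:
        calc_grad(xb, yb, model)
        for p in params:
            p.data -= p.grad * lr  # step each parameter
            p.grad.zero_()         # reset its gradient for the next batch

for i in range(100):
    train_epoch(model, lr=0.1, params=params)

print(w.item(), b.item())
```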

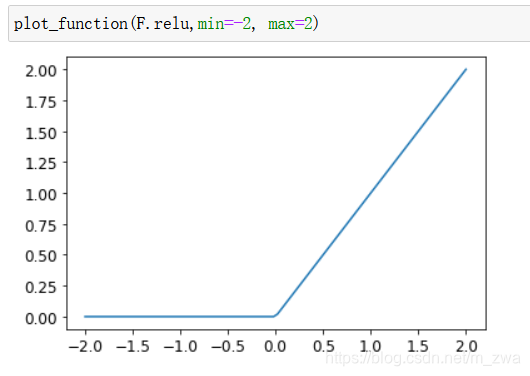

34.What is “ReLU”? Draw a plot of it for values from -2 to +2.

ReLU (rectified linear unit): negative values become 0, and all other values are left unchanged.
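The values at a few points from -2 to +2 give the shape of the plot: flat at 0 for negative inputs, then a straight line with slope 1.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([-2.0, -1.0, 0.0, 1.0, 2.0])
y = F.relu(x)  # negative inputs are replaced with 0
print(y)
```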

35.What is an “activation function”?

A function that gives the neural network its nonlinearity.

36.What’s the difference between F.relu and nn.ReLU?

F.relu is a Python function; nn.ReLU is a PyTorch class (a module).
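Both compute the same thing. The module form is the one that can be placed as a layer inside `nn.Sequential`. The layer sizes below are arbitrary assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.tensor([-1.0, 0.5])

relu_module = nn.ReLU()  # the class must be instantiated before use
same = torch.equal(F.relu(x), relu_module(x))

# A module can be used as a layer; a plain function cannot.
net = nn.Sequential(nn.Linear(2, 4), nn.ReLU(), nn.Linear(4, 1))
out = net(x)
print(same, out.shape)
```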

37.The universal approximation theorem shows that any function can be approximated as closely as needed using just one nonlinearity. So why do we normally use more?

There are practical performance benefits to using more than one nonlinearity. A deeper model can get better performance with fewer parameters, faster training, and lower compute and memory requirements.
