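CUDA installation and testing: https://blog.csdn.net/sunmc1204953974/article/details/51000970

Writing a CUDA HelloWorld program: https://www.cnblogs.com/neopenx/p/4643705.html

An explanation of multithreaded parallel processing: https://blog.csdn.net/sunmc1204953974/article/details/51025801

All of the CUDA material below is reposted from: https://blog.csdn.net/sunmc1204953974

Summing the cubes of an array (a CUDA programming example)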

// A simple CUDA program, reposted from: https://blog.csdn.net/sunmc1204953974/article/details/51025801
// Purpose: compute the sum of the cubes of the numbers in an array
// File: maintest.cu

#include <stdio.h>
#include <time.h>
#include <iostream>
// CUDA Runtime API
#include <cuda_runtime.h>
#include <device_launch_parameters.h>
#include <atomic>
using namespace std;

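// Size of the array
#define DATA_SIZE 1048576
// Number of threads used by the program; must not exceed the device's maxThreadsPerBlock
#define THREAD_NUM 1024
// GPU clock rate (set in InitCUDA from cudaDeviceProp::clockRate, in kHz)
int gpu_clock_rate = 0;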

int data[DATA_SIZE];

void GenerateNumbers(int *number, int size)
{
    for (int i = 0; i < size; i++) {
        number[i] = rand() % 10;
    }
}

void PrintDeviceProp(const cudaDeviceProp &prop)
{
    // size_t fields need %zu; printing them with %d is undefined behavior
    // (this is why totalGlobalMem shows up negative in the output below)
    printf("Device Name : %s.\n", prop.name);
    printf("totalGlobalMem : %zu.\n", prop.totalGlobalMem);
    printf("sharedMemPerBlock : %zu.\n", prop.sharedMemPerBlock);
    printf("regsPerBlock : %d.\n", prop.regsPerBlock);
    printf("warpSize : %d.\n", prop.warpSize);
    printf("memPitch : %zu.\n", prop.memPitch);
    printf("maxThreadsPerBlock : %d.\n", prop.maxThreadsPerBlock);
    printf("maxThreadsDim[0 - 2] : %d %d %d.\n", prop.maxThreadsDim[0], prop.maxThreadsDim[1], prop.maxThreadsDim[2]);
    printf("maxGridSize[0 - 2] : %d %d %d.\n", prop.maxGridSize[0], prop.maxGridSize[1], prop.maxGridSize[2]);
    printf("totalConstMem : %zu.\n", prop.totalConstMem);
    printf("major.minor : %d.%d.\n", prop.major, prop.minor);
    printf("clockRate : %d.\n", prop.clockRate);
    printf("textureAlignment : %zu.\n", prop.textureAlignment);
    printf("deviceOverlap : %d.\n", prop.deviceOverlap);
    printf("multiProcessorCount : %d.\n", prop.multiProcessorCount);
}

// CUDA initialization

bool InitCUDA()
{
    int count = 0;
    // Get the number of CUDA-capable devices
    cudaGetDeviceCount(&count);
    // No suitable hardware
    if (count == 0) {
        fprintf(stderr, "There is no device.\n");
        return false;
    }
    int i;
    for (i = 0; i < count; i++) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, i) == cudaSuccess) {
            gpu_clock_rate = prop.clockRate;  // in kHz
            cout << endl;
            PrintDeviceProp(prop);
            cout << "---" << endl;
            cout << endl;
            if (prop.major >= 1) {
                break;
            }
        }
    }
    if (i == count) {
        fprintf(stderr, "There is no device supporting CUDA 1.x.\n");
        return false;
    }
    cudaSetDevice(i);
    return true;
}

__global__ static void SumOfSquares(int *num, int *result, clock_t *time)

{
    // Index of the current thread, counting from 0
    const int tid = threadIdx.x;
    // Number of elements each thread processes
    const int size = DATA_SIZE / THREAD_NUM;
    int sum = 0;
    int i;
    // Start time of the computation
    clock_t start = 0;
    // Only thread 0 (threadIdx.x == 0) records the time
    if (tid == 0) start = clock();
    // Despite the kernel's name, it sums cubes; each thread handles one contiguous chunk
    for (i = tid * size; i < (tid + 1) * size; i++) {
        sum += num[i] * num[i] * num[i];
    }
    result[tid] = sum;
    // Only thread 0 computes the elapsed time. The difference is a number of GPU
    // clock cycles; dividing by the GPU clock rate converts it to seconds
    if (tid == 0) *time = clock() - start;

}

int maintest()
{
    srand(time(0));
    if (!InitCUDA()) {
        return 0;
    }
    printf("CUDA initialized.\n");
    // ::data names the global array explicitly; plain `data` can be ambiguous
    // with std::data under C++17 and `using namespace std`
    GenerateNumbers(::data, DATA_SIZE);
    // Pointers to device (GPU) memory
    int *gpudata, *result;
    clock_t *time;
    cudaMalloc((void**)&gpudata, sizeof(int) * DATA_SIZE);
    cudaMalloc((void**)&result, sizeof(int) * THREAD_NUM);
    cudaMalloc((void**)&time, sizeof(clock_t));
    // Copy the generated random numbers into GPU memory
    cudaMemcpy(gpudata, ::data, sizeof(int) * DATA_SIZE, cudaMemcpyHostToDevice);
    // Launch the kernel: <<<number of blocks, threads per block, shared memory size>>>(arguments...)
    SumOfSquares<<<1, THREAD_NUM, 0>>>(gpudata, result, time);
    // Copy the results from GPU memory back to host memory
    int sum[THREAD_NUM];
    clock_t time_use;
    cudaMemcpy(sum, result, sizeof(int) * THREAD_NUM, cudaMemcpyDeviceToHost);
    cudaMemcpy(&time_use, time, sizeof(clock_t), cudaMemcpyDeviceToHost);
    // Free device memory
    cudaFree(gpudata);
    cudaFree(result);
    cudaFree(time);
    long final_sum = 0;
    for (int i = 0; i < THREAD_NUM; i++) {
        final_sum += sum[i];
    }
    cout << "gpu sum " << final_sum << endl;
    cout << "gpu time " << time_use << endl;
    // clockRate is in kHz; 1000.0 avoids int overflow on faster GPUs
    cout << "gpu time(second) " << time_use * 1.0 / (gpu_clock_rate * 1000.0) << endl;
    long time_s, time_e;
    time_s = clock();
    final_sum = 0;
    for (int i = 0; i < DATA_SIZE; i++) {
        final_sum += ::data[i] * ::data[i] * ::data[i];
    }
    time_e = clock();
    cout << "cpu sum " << final_sum << endl;
    cout << "cpu time(second) " << (time_e - time_s) * 1.0 / CLOCKS_PER_SEC << endl;
    return 0;
}

Host (CPU-side) source file

#include <stdio.h>

#include <iostream>

using namespace std;

// Declaration of the function defined in the .cu file, so it can be called from here
extern int maintest();

int main()
{
    maintest();
    return 0;
}

Output

Device Name : GeForce GT 630.

totalGlobalMem : -2147483648.

sharedMemPerBlock : 49152.

regsPerBlock : 65536.

warpSize : 32.

memPitch : 2147483647.

maxThreadsPerBlock : 1024.

maxThreadsDim[0 - 2] : 1024 1024 64.

maxGridSize[0 - 2] : 2147483647 65535 65535.

totalConstMem : 65536.

major.minor : 3.0.

clockRate : 875500.

textureAlignment : 512.

deviceOverlap : 1.

multiProcessorCount : 1.

---

CUDA initialized.

gpu sum 212139366

gpu time 6795883

gpu time(second) 0.00776229

cpu sum 212139366

cpu time(second) 0.002

Result analysis

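The "gpu sum" and "cpu sum" lines show that the GPU and the CPU compute the same result, sum = 212139366, and the GPU took 0.00776229 seconds. (The negative totalGlobalMem in the device listing is a printing artifact: the field is a size_t but was printed with %d, so a value of most likely 2147483648 bytes, i.e. 2 GB, wraps around to -2147483648.)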
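The seconds value is the cycle count divided by the clock rate. cudaDeviceProp::clockRate is reported in kilohertz, which is why the code multiplies it by 1000. With the numbers printed above:

$$
t_{\text{GPU}} = \frac{\text{gpu time (cycles)}}{\text{clockRate (kHz)} \times 1000}
= \frac{6\,795\,883}{875\,500 \times 1000}\ \text{s} \approx 0.00776\ \text{s}
$$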

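Here the CPU actually finished faster than the GPU. Keep in mind that the GPU figure is only thread 0's cycle count inside the kernel: it excludes the host-to-device copy and the kernel launch. Computing a cube is also very little work per element. If each element required more computation, the advantage of GPU parallelism would show much more clearly.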

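Explanation of parallel threads

This code computes over mutually independent units, which is the kind of work that can be written as a CUDA kernel and launched. My own understanding of what happens at launch is as follows. Every thread executes its own instance of the __global__ function at nearly the same time (the hardware schedules threads in groups of 32, the warpSize printed above). The threads compute in parallel, independently of each other. The line const int tid = threadIdx.x; gives each instance the ID of the thread running it, and that ID decides which units the thread works on.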
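The following is a hypothetical CPU-only emulation of the kernel above that makes the role of tid concrete. ChunkedThreadBody and EmulateKernelAndReduce are illustrative names, not CUDA APIs. Each call to ChunkedThreadBody stands in for one GPU thread running the same function body. The loop over tid runs one after another what the GPU runs concurrently, and the final addition mirrors the host-side reduction in maintest.

```cpp
// Hypothetical CPU emulation of SumOfSquares: every "thread" runs the same
// function body, and only tid decides which elements it touches.
#include <cassert>
#include <vector>

const int DATA_SIZE = 1048576;
const int THREAD_NUM = 1024;

// Chunked mapping used by the kernel: thread tid owns [tid*size, (tid+1)*size).
int ChunkedThreadBody(const std::vector<int>& num, int tid) {
    assert(DATA_SIZE % THREAD_NUM == 0);  // the chunking assumes an even split
    const int size = DATA_SIZE / THREAD_NUM;
    int sum = 0;
    for (int i = tid * size; i < (tid + 1) * size; i++)
        sum += num[i] * num[i] * num[i];
    return sum;
}

// On the GPU all THREAD_NUM bodies run concurrently and each writes result[tid];
// here they run sequentially, then the partial sums are added as in maintest.
long long EmulateKernelAndReduce(const std::vector<int>& num) {
    std::vector<int> result(THREAD_NUM);
    for (int tid = 0; tid < THREAD_NUM; tid++)
        result[tid] = ChunkedThreadBody(num, tid);
    long long final_sum = 0;
    for (int partial : result) final_sum += partial;
    return final_sum;
}
```

Because every element belongs to exactly one chunk, the emulated total equals a plain sequential sum of cubes, which is what the matching "gpu sum" and "cpu sum" lines show on real hardware.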

Contiguous access to GPU memory

for (i = tid * size; i < (tid + 1) * size; i++) {
    sum += num[i] * num[i] * num[i];
}

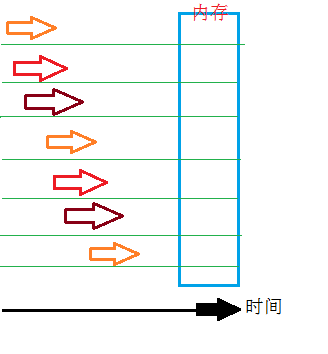

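Written this way, the for loop does not take advantage of contiguous memory access. On the GPU, a thread that is waiting for data is switched out for another thread, and switching threads is much faster than accessing video memory. As a result, many threads wait on reads at the same time, as in the figure. With this loop, at the same step thread 0 reads num[0], thread 1 reads num[1024], thread 2 reads num[2048], and so on. The reads that are in flight together are scattered across memory instead of being contiguous.

Loading scattered addresses like this means many separate memory loads, which costs a lot of time. The loop can instead be rewritten so that the threads interleave: at step k, thread tid reads num[tid + k * THREAD_NUM]. Neighbouring threads then read neighbouring elements, which the GPU can serve with far fewer memory transactions, and the speed improves considerably: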

for (i = tid; i < DATA_SIZE; i += THREAD_NUM) {
    sum += num[i] * num[i] * num[i];
}
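A rough way to see why the interleaved loop helps is to count how many aligned 128-byte memory segments one warp's reads touch at one loop iteration. The warp has 32 threads. This is a simplified, hypothetical model: the 128-byte segment size and the helper names ChunkedIndex, StridedIndex and SegmentsPerWarpLoad are assumptions. Real transaction sizes and caching behaviour vary by GPU architecture.

```cpp
// Simplified model: which aligned 128-byte segments does one warp read at one step?
#include <cassert>
#include <cstddef>
#include <set>

const int DATA_SIZE = 1048576;
const int THREAD_NUM = 1024;
const int WARP_SIZE = 32;               // warpSize reported by the GT 630 above
const std::size_t SEGMENT_BYTES = 128;  // assumed segment size for this model

// Element index read by thread tid at loop iteration k.
int ChunkedIndex(int tid, int k) { return tid * (DATA_SIZE / THREAD_NUM) + k; }  // first loop
int StridedIndex(int tid, int k) { return tid + k * THREAD_NUM; }                // interleaved loop

// Number of distinct segments touched when the 32 lanes of `warp` read at iteration k.
template <typename IndexFn>
std::size_t SegmentsPerWarpLoad(IndexFn index, int warp, int k) {
    std::set<std::size_t> segments;
    for (int lane = 0; lane < WARP_SIZE; lane++) {
        const int tid = warp * WARP_SIZE + lane;
        const int idx = index(tid, k);
        assert(0 <= idx && idx < DATA_SIZE);  // stay inside num[]
        const std::size_t byte_addr = static_cast<std::size_t>(idx) * sizeof(int);
        segments.insert(byte_addr / SEGMENT_BYTES);
    }
    return segments.size();
}
```

With the chunked mapping, neighbouring lanes are 1024 ints (4096 bytes) apart, so each of the 32 reads lands in a different segment. With the interleaved mapping, the lanes read 32 consecutive ints, which fit in a single 128-byte segment. For example, SegmentsPerWarpLoad(ChunkedIndex, 0, 0) gives 32 and SegmentsPerWarpLoad(StridedIndex, 0, 0) gives 1.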

The output is now:

CUDA initialized.

gpu sum 212259240

gpu time 1122455

gpu time(second) 0.00128207

cpu sum 212259240

cpu time(second) 0.002

The GPU now takes 1122455 clock cycles. This simple change makes it about 6 times faster than the 6795883 cycles of the previous run.

At 0.00128207 seconds, the GPU's measured time is finally shorter than the CPU's 0.002 seconds.
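The ratio of the two cycle counts can be checked directly. The same 875500 kHz clock rate gives the corresponding kernel time:

$$
\frac{6\,795\,883}{1\,122\,455} \approx 6.05,
\qquad
\frac{1\,122\,455}{875\,500 \times 1000}\ \text{s} \approx 0.00128\ \text{s}
$$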
