文章目录

- 1. 向管理表中装载数据

- 2. 通过查询语句向表中插入数据

- 3. 动态分区插入

- 4. 从单个查询语句创建表并加载数据

- 5. 导出数据

学习自《Hive编程指南》

1. 向管理表中装载数据

hive (default)> load data local inpath "/home/hadoop/workspace/student.txt"> overwrite into table student1;

分区表可以跟 partition (key1 = v1, key2 = v2, …)

有 local :复制本地路径文件 到 hdfs

无 local:移动 hdfs 文件 到 新的 hdfs 路径

overwrite: 目标文件夹中的数据将会被删除

没有 overwrite : 把新增加的文件添加到目标文件夹中,不删除原数据

inpath 后的路径下,不能包含任何文件夹

2. 通过查询语句向表中插入数据

hadoop@dblab-VirtualBox:~/workspace$ cat stu.txt

1 michael male china

2 ming male china1

3 haha female china

4 huahua female china1

- 创建表,加载数据

hive (default)> create table stu(> id int,> name string,> sex string,> country string)> row format delimited fields terminated by '\t';hive (default)> load data local inpath '/home/hadoop/workspace/stu.txt'> into table stu;

- 通过 select 语句向其他表填入数据

hive (default)> create table employee(> name string,> country string)> row format delimited fields terminated by '\t';

hive (default)> from stu s> insert overwrite table employee> select s.name, s.country where s.id%2=1;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20210408224138_1df23614-7945-40c0-9a4d-df88e4f58ea1

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

Job running in-process (local Hadoop)

2021-04-08 22:41:40,081 Stage-1 map = 100%, reduce = 0%

Ended Job = job_local1437521177_0001

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to directory hdfs://localhost:9000/user/hive/warehouse/employee/.hive-staging_hive_2021-04-08_22-41-38_345_1863326332876590299-1/-ext-10000

Loading data to table default.employee

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 83 HDFS Write: 180 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

hive (default)> select * from employee;

OK

michael china

haha china

- 向多表插入数据

hive (default)> from stu s> insert into table employee> select s.name, s.country where s.sex='female'> insert into table employee1> select s.name, s.country where s.sex='male';

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20210408230623_bc69bccf-348e-467d-b88e-498664f27017

Total jobs = 5

Launching Job 1 out of 5

Number of reduce tasks is set to 0 since there's no reduce operator

Job running in-process (local Hadoop)

2021-04-08 23:06:24,405 Stage-2 map = 100%, reduce = 0%

Ended Job = job_local2065691620_0003

Stage-5 is selected by condition resolver.

Stage-4 is filtered out by condition resolver.

Stage-6 is filtered out by condition resolver.

Stage-11 is selected by condition resolver.

Stage-10 is filtered out by condition resolver.

Stage-12 is filtered out by condition resolver.

Moving data to directory hdfs://localhost:9000/user/hive/warehouse/employee/.hive-staging_hive_2021-04-08_23-06-23_001_7974131043339100692-1/-ext-10000

Moving data to directory hdfs://localhost:9000/user/hive/warehouse/employee1/.hive-staging_hive_2021-04-08_23-06-23_001_7974131043339100692-1/-ext-10002

Loading data to table default.employee

Loading data to table default.employee1

MapReduce Jobs Launched:

Stage-Stage-2: HDFS Read: 470 HDFS Write: 474 SUCCESS

Total MapReduce CPU Time Spent: 0 msechive (default)> select * from employee;

ming china1

huahua china1

haha china

huahua china1hive (default)> select * from employee1;

michael china

ming china1

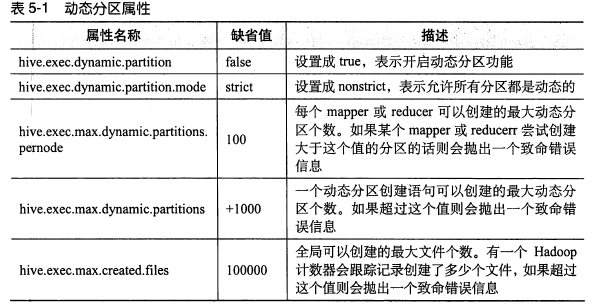

3. 动态分区插入

hive (default)> from stu s> insert overwrite table employee2> partition (country, sex)> select s.id, s.name, s.country, s.sex;hive (default)> select * from employee2;

OK

3 haha china female

1 michael china male

4 huahua china1 female

2 ming china1 male

4. 从单个查询语句创建表并加载数据

表的模式由 select 生成

hive (default)> create table employee3> as select id, name from stu> where country='china';hive (default)> select * from employee3;

1 michael

3 haha

此功能不能用于外部表(数据没有装载,在外部)

5. 导出数据

hive (default)> from stu s> insert overwrite local directory '/tmp/employee'> select s.id, s.name, s.sex> where country='china';

可以同时写入多个文件,insert 重复写几次

hive (default)> ! ls /tmp/employee -r;

000000_0hive (default)> ! cat /tmp/employee/000000_0;

1michaelmale

3hahafemale

)

)

)

)