This article is excerpted from the programming assignments of Andrew Ng's Deep Learning Specialization, with thanks.

Course link: https://www.deeplearning.ai/deep-learning-specialization/

Contents

1 - Package

2 - Non-regularized model

3 - L2 Regularization (must master)

4 - Dropout (must master)

4.1 - Forward propagation with dropout

4.2 - Backward propagation with dropout

1 - Package

    import numpy as np
    import matplotlib.pyplot as plt
    from reg_utils import sigmoid, relu, plot_decision_boundary, initialize_parameters, load_2D_dataset, predict_dec
    from reg_utils import compute_cost, predict, forward_propagation, backward_propagation, update_parameters
    import sklearn
    import sklearn.datasets
    import scipy.io
    from testCases import *

    %matplotlib inline
    plt.rcParams['figure.figsize'] = (7.0, 4.0) # set default size of plots
    plt.rcParams['image.interpolation'] = 'nearest'
    plt.rcParams['image.cmap'] = 'gray'

    train_X, train_Y, test_X, test_Y = load_2D_dataset()

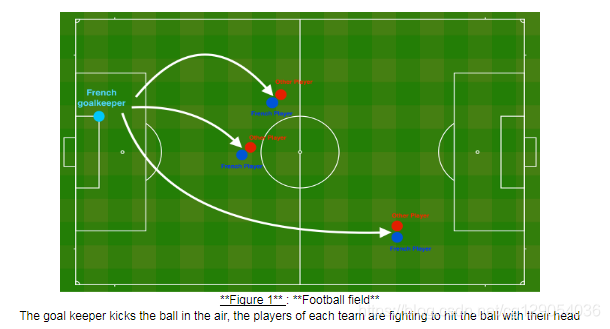

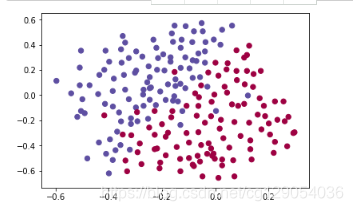

Problem Statement: You have just been hired as an AI expert by the French Football Corporation. They would like you to recommend positions where France's goalkeeper should kick the ball so that the French team's players can then hit it with their head.

Each dot corresponds to a position on the football field where a football player has hit the ball with his/her head after the French goalkeeper has shot the ball from the left side of the football field.

- If the dot is blue, it means the French player managed to hit the ball with his/her head

- If the dot is red, it means the other team's player hit the ball with their head

Your goal: Use a deep learning model to find the positions on the field where the goalkeeper should kick the ball.

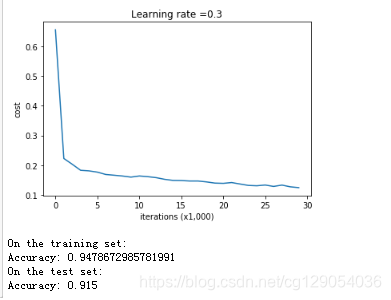

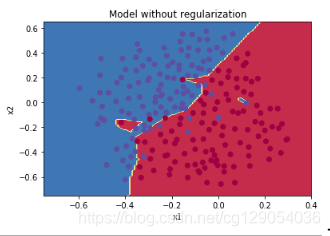

2 - Non-regularized model

You will use the following neural network (already implemented for you below). This model can be used:

- in regularization mode -- by setting the lambd input to a non-zero value. We use "lambd" instead of "lambda" because "lambda" is a reserved keyword in Python.

- in dropout mode -- by setting keep_prob to a value less than one

You will first try the model without any regularization. Then, you will implement:

- L2 regularization -- functions: "compute_cost_with_regularization()" and "backward_propagation_with_regularization()"

- Dropout -- functions: "forward_propagation_with_dropout()" and "backward_propagation_with_dropout()"

In each part, you will run this model with the correct inputs so that it calls the functions you've implemented. Take a look at the code below to familiarize yourself with the model.

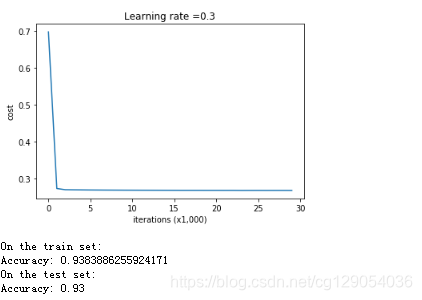

    def model(X, Y, learning_rate = 0.3, num_iterations = 30000, print_cost = True, lambd = 0, keep_prob = 1):
        """
        Implements a three-layer neural network: LINEAR->RELU->LINEAR->RELU->LINEAR->SIGMOID.

        Arguments:
        X -- input data, of shape (input size, number of examples)
        Y -- true "label" vector (1 for blue dot / 0 for red dot), of shape (output size, number of examples)
        learning_rate -- learning rate of the optimization
        num_iterations -- number of iterations of the optimization loop
        print_cost -- If True, print the cost every 10000 iterations
        lambd -- regularization hyperparameter, scalar
        keep_prob - probability of keeping a neuron active during drop-out, scalar.

        Returns:
        parameters -- parameters learned by the model. They can then be used to predict.
        """

        grads = {}
        costs = []                            # to keep track of the cost
        m = X.shape[1]                        # number of examples
        layers_dims = [X.shape[0], 20, 3, 1]

        # Initialize parameters dictionary.
        parameters = initialize_parameters(layers_dims)

        # Loop (gradient descent)
        for i in range(0, num_iterations):

            # Forward propagation: LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SIGMOID.
            if keep_prob == 1:
                a3, cache = forward_propagation(X, parameters)
            elif keep_prob < 1:
                a3, cache = forward_propagation_with_dropout(X, parameters, keep_prob)

            # Cost function
            if lambd == 0:
                cost = compute_cost(a3, Y)
            else:
                cost = compute_cost_with_regularization(a3, Y, parameters, lambd)

            # Backward propagation.
            assert(lambd==0 or keep_prob==1)    # it is possible to use both L2 regularization and dropout,
                                                # but this assignment will only explore one at a time
            if lambd == 0 and keep_prob == 1:
                grads = backward_propagation(X, Y, cache)
            elif lambd != 0:
                grads = backward_propagation_with_regularization(X, Y, cache, lambd)
            elif keep_prob < 1:
                grads = backward_propagation_with_dropout(X, Y, cache, keep_prob)

            # Update parameters.
            parameters = update_parameters(parameters, grads, learning_rate)

            # Print the loss every 10000 iterations
            if print_cost and i % 10000 == 0:
                print("Cost after iteration {}: {}".format(i, cost))
            if print_cost and i % 1000 == 0:
                costs.append(cost)

        # plot the cost
        plt.plot(costs)
        plt.ylabel('cost')
        plt.xlabel('iterations (x1,000)')
        plt.title("Learning rate =" + str(learning_rate))
        plt.show()

        return parameters

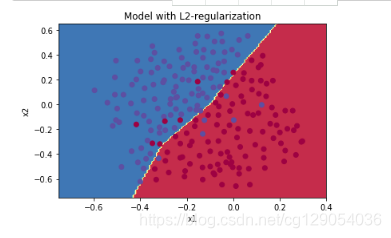

3 - L2 Regularization (must master)

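The standard way to avoid overfitting is called L2 regularization. It consists of appropriately modifying your cost function, from:

$$J = -\frac{1}{m} \sum_{i=1}^{m} \left( y^{(i)} \log a^{[3](i)} + \left(1 - y^{(i)}\right) \log\left(1 - a^{[3](i)}\right) \right) \tag{1}$$

To:

$$J_{regularized} = \underbrace{-\frac{1}{m} \sum_{i=1}^{m} \left( y^{(i)} \log a^{[3](i)} + \left(1 - y^{(i)}\right) \log\left(1 - a^{[3](i)}\right) \right)}_{\text{cross-entropy cost}} + \underbrace{\frac{1}{m} \frac{\lambda}{2} \sum_{l} \sum_{k} \sum_{j} W_{k,j}^{[l]2}}_{\text{L2 regularization cost}} \tag{2}$$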

Let's modify your cost and observe the consequences.

Exercise: Implement compute_cost_with_regularization() which computes the cost given by formula (2). To calculate $\sum_{k} \sum_{j} W_{k,j}^{[l]2}$, use np.sum(np.square(Wl)).

Note that you have to do this for $W^{[1]}$, $W^{[2]}$ and $W^{[3]}$, then sum the three terms and multiply by $\frac{1}{m} \frac{\lambda}{2}$.
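Before turning to the assignment's implementation, the regularized cost can be worked out by hand on a tiny case. The sketch below is illustrative only: the helper names `cross_entropy` and `l2_penalty` and all the numbers are assumptions made for this example, and `cross_entropy` is a stand-in for `compute_cost` from `reg_utils`, whose code is not shown here.

```python
import numpy as np

def cross_entropy(A, Y):
    """Mean binary cross-entropy; A and Y both have shape (1, m). Hypothetical helper."""
    m = Y.shape[1]
    return float(-np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A)) / m)

def l2_penalty(weights, lambd, m):
    """(1/m) * (lambd/2) * sum of squared entries over all weight matrices. Hypothetical helper."""
    return (lambd / (2 * m)) * sum(float(np.sum(np.square(W))) for W in weights)

# Toy values, chosen only for illustration.
A = np.array([[0.8, 0.4]])    # predicted probabilities for m = 2 examples
Y = np.array([[1, 0]])        # true labels
W1 = np.array([[1.0, -2.0]])
W2 = np.array([[0.5]])
lambd = 0.1

# Sum of squares is 1 + 4 + 0.25 = 5.25, scaled by lambd / (2 * m) = 0.025.
penalty = l2_penalty([W1, W2], lambd, m=Y.shape[1])
cost = cross_entropy(A, Y) + penalty
print("penalty:", penalty, "regularized cost:", cost)
```

The penalty depends only on the weights, so it is the same for every input batch of a given size; only the cross-entropy part changes with the predictions.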

    def compute_cost_with_regularization(A3, Y, parameters, lambd):
        """
        Implement the cost function with L2 regularization. See formula (2) above.

        Arguments:
        A3 -- post-activation, output of forward propagation, of shape (output size, number of examples)
        Y -- "true" labels vector, of shape (output size, number of examples)
        parameters -- python dictionary containing parameters of the model

        Returns:
        cost - value of the regularized loss function (formula (2))
        """
        m = Y.shape[1]
        W1 = parameters["W1"]
        W2 = parameters["W2"]
        W3 = parameters["W3"]

        cross_entropy_cost = compute_cost(A3, Y) # This gives you the cross-entropy part of the cost

        L2_regularization_cost = 1./m * lambd/2 * (np.sum(np.square(W1)) + np.sum(np.square(W2)) + np.sum(np.square(W3)))

        cost = cross_entropy_cost + L2_regularization_cost

        return cost

Of course, because you changed the cost, you have to change backward propagation as well! All the gradients have to be computed with respect to this new cost.

Exercise: Implement the changes needed in backward propagation to take into account regularization. The changes only concern dW1, dW2 and dW3. For each, you have to add the regularization term's gradient ($\frac{d}{dW} \left( \frac{1}{2} \frac{\lambda}{m} W^2 \right) = \frac{\lambda}{m} W$).

    # GRADED FUNCTION: backward_propagation_with_regularization

    def backward_propagation_with_regularization(X, Y, cache, lambd):
        """
        Implements the backward propagation of our baseline model to which we added an L2 regularization.

        Arguments:
        X -- input dataset, of shape (input size, number of examples)
        Y -- "true" labels vector, of shape (output size, number of examples)
        cache -- cache output from forward_propagation()
        lambd -- regularization hyperparameter, scalar

        Returns:
        gradients -- A dictionary with the gradients with respect to each parameter, activation and pre-activation variables
        """

        m = X.shape[1]
        (Z1, A1, W1, b1, Z2, A2, W2, b2, Z3, A3, W3, b3) = cache

        dZ3 = A3 - Y

        dW3 = 1./m * np.dot(dZ3, A2.T) + lambd / m * W3
        db3 = 1./m * np.sum(dZ3, axis=1, keepdims = True)

        dA2 = np.dot(W3.T, dZ3)
        dZ2 = np.multiply(dA2, np.int64(A2 > 0))
        dW2 = 1./m * np.dot(dZ2, A1.T) + lambd / m * W2
        db2 = 1./m * np.sum(dZ2, axis=1, keepdims = True)

        dA1 = np.dot(W2.T, dZ2)
        dZ1 = np.multiply(dA1, np.int64(A1 > 0))
        dW1 = 1./m * np.dot(dZ1, X.T) + lambd / m * W1
        db1 = 1./m * np.sum(dZ1, axis=1, keepdims = True)

        gradients = {"dZ3": dZ3, "dW3": dW3, "db3": db3, "dA2": dA2,
                     "dZ2": dZ2, "dW2": dW2, "db2": db2, "dA1": dA1,
                     "dZ1": dZ1, "dW1": dW1, "db1": db1}

        return gradients
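The extra `lambd / m * W` term added to each weight gradient can be sanity-checked against a finite-difference estimate of the penalty's derivative. This is a minimal sketch, not part of the assignment: `l2_penalty` and `numerical_grad` are hypothetical helpers, and the matrix shape and the values of `lambd` and `m` are arbitrary.

```python
import numpy as np

def l2_penalty(W, lambd, m):
    """L2 term for a single weight matrix: (lambd / (2 * m)) * sum(W ** 2)."""
    return (lambd / (2 * m)) * np.sum(np.square(W))

def numerical_grad(f, W, eps=1e-6):
    """Central-difference estimate of df/dW, perturbing one entry at a time."""
    grad = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        W_plus, W_minus = W.copy(), W.copy()
        W_plus[idx] += eps
        W_minus[idx] -= eps
        grad[idx] = (f(W_plus) - f(W_minus)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
W = rng.standard_normal((3, 2))   # arbitrary weight matrix
lambd, m = 0.7, 5                 # arbitrary illustrative values

analytic = lambd / m * W          # the term added to dW in backpropagation
numeric = numerical_grad(lambda V: l2_penalty(V, lambd, m), W)
max_diff = np.max(np.abs(analytic - numeric))
print("largest difference:", max_diff)
```

Because the penalty is quadratic in the weights, the central difference is exact up to rounding, so the two gradients should agree to many decimal places.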

What is L2-regularization actually doing?

L2-regularization relies on the assumption that a model with small weights is simpler than a model with large weights. Thus, by penalizing the squared values of the weights in the cost function, you drive all the weights to smaller values. It becomes too costly for the cost to have large weights! This leads to a smoother model in which the output changes more slowly as the input changes.
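The shrinking effect can be seen directly in the gradient descent update. With the extra gradient term, each step computes W := W - learning_rate * (dW_data + (lambd / m) * W), which multiplies W by (1 - learning_rate * lambd / m) before applying the data gradient. The sketch below isolates that factor by assuming the data gradient is zero; the values of `lambd` and `m` are made up for illustration.

```python
import numpy as np

learning_rate, lambd, m = 0.3, 0.7, 200   # lambd and m are illustrative assumptions
W0 = np.array([[2.0, -1.0], [0.5, 4.0]])
W = W0.copy()

# Per-step shrink factor implied by the regularization term alone.
decay = 1 - learning_rate * lambd / m

for _ in range(1000):
    data_grad = np.zeros_like(W)          # assumption: no data gradient, to isolate the penalty
    W = W - learning_rate * (data_grad + lambd / m * W)

print("shrink factor after 1000 steps:", decay ** 1000)
print("weights:", W)
```

In real training the data gradient pushes back, so the weights settle where the two forces balance rather than decaying to zero.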

**What you should remember** -- the implications of L2-regularization on:

- The cost computation: a regularization term is added to the cost.

- The backpropagation function: there are extra terms in the gradients with respect to weight matrices.

- Weights end up smaller ("weight decay"): weights are pushed to smaller values.

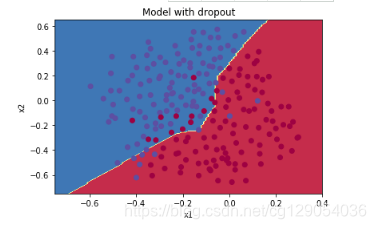

4 - Dropout (must master)

Finally, dropout is a widely used regularization technique that is specific to deep learning. It randomly shuts down some neurons in each iteration.

4.1 - Forward propagation with dropout

Exercise: Implement the forward propagation with dropout. You are using a 3-layer neural network, and will add dropout to the first and second hidden layers. We will not apply dropout to the input layer or output layer.

Instructions: You would like to shut down some neurons in the first and second layers. To do that, you are going to carry out 4 steps:

- In lecture, we discussed creating a variable $d^{[1]}$ with the same shape as $a^{[1]}$ using np.random.rand() to randomly get numbers between 0 and 1. Here, you will use a vectorized implementation, so create a random matrix $D^{[1]} = [d^{[1](1)} \; d^{[1](2)} \; \dots \; d^{[1](m)}]$ of the same dimension as $A^{[1]}$.

- Set each entry of $D^{[1]}$ to be 0 with probability (1-keep_prob) or 1 with probability (keep_prob), by thresholding the values in $D^{[1]}$ appropriately. Hint: to set all the entries of a matrix X to 1 (if the entry is less than 0.5) or 0 (otherwise), you would do: X = (X < 0.5). Note that 0 and 1 are respectively equivalent to False and True.

- Set $A^{[1]}$ to $A^{[1]} * D^{[1]}$. (You are shutting down some neurons.) You can think of $D^{[1]}$ as a mask, so that when it is multiplied with another matrix, it shuts down some of the values.

- Divide $A^{[1]}$ by keep_prob. By doing this you are assuring that the result of the cost will still have the same expected value as without drop-out. (This technique is also called inverted dropout.)
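The four steps above can be tried on a toy activation matrix. The sketch below is illustrative only (the matrix of ones, the seed and `keep_prob = 0.5` are arbitrary choices). It also contrasts `np.random.rand`, which draws uniform numbers in [0, 1), with `np.random.randn`, which draws from a standard normal distribution and therefore does not give a keep probability of `keep_prob` when thresholded the same way.

```python
import numpy as np

np.random.seed(0)
keep_prob = 0.5
A = np.ones((50, 2000))                   # toy activations, all equal to 1

D = np.random.rand(*A.shape)              # Step 1: uniform random numbers in [0, 1)
D = D < keep_prob                         # Step 2: True (keep) with probability keep_prob
A_drop = A * D                            # Step 3: shut down the masked neurons
A_drop = A_drop / keep_prob               # Step 4: rescale the surviving neurons

kept_fraction = D.mean()                  # should be close to keep_prob
mean_after = A_drop.mean()                # should be close to the original mean of 1

# Contrast: thresholding standard normal samples keeps a different fraction.
D_normal = np.random.randn(*A.shape) < keep_prob
normal_fraction = D_normal.mean()

print("kept with rand: ", kept_fraction)
print("mean after step 4:", mean_after)
print("kept with randn:", normal_fraction)
```

With uniform samples the kept fraction tracks `keep_prob`, which is what makes the division in Step 4 restore the expected value.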

    # GRADED FUNCTION: forward_propagation_with_dropout

    def forward_propagation_with_dropout(X, parameters, keep_prob = 0.5):
        """
        Implements the forward propagation: LINEAR -> RELU + DROPOUT -> LINEAR -> RELU + DROPOUT -> LINEAR -> SIGMOID.

        Arguments:
        X -- input dataset, of shape (2, number of examples)
        parameters -- python dictionary containing your parameters "W1", "b1", "W2", "b2", "W3", "b3":
                        W1 -- weight matrix of shape (20, 2)
                        b1 -- bias vector of shape (20, 1)
                        W2 -- weight matrix of shape (3, 20)
                        b2 -- bias vector of shape (3, 1)
                        W3 -- weight matrix of shape (1, 3)
                        b3 -- bias vector of shape (1, 1)
        keep_prob - probability of keeping a neuron active during drop-out, scalar

        Returns:
        A3 -- last activation value, output of the forward propagation, of shape (1, number of examples)
        cache -- tuple, information stored for computing the backward propagation
        """

        np.random.seed(1)

        # retrieve parameters
        W1 = parameters["W1"]
        b1 = parameters["b1"]
        W2 = parameters["W2"]
        b2 = parameters["b2"]
        W3 = parameters["W3"]
        b3 = parameters["b3"]

        # LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SIGMOID
        Z1 = np.dot(W1, X) + b1
        A1 = relu(Z1)
        ### START CODE HERE ### (approx. 4 lines)
        # Steps 1-4 below correspond to the Steps 1-4 described above.
        D1 = np.random.rand(A1.shape[0], A1.shape[1])     # Step 1: initialize matrix D1 = np.random.rand(..., ...)
        D1 = (D1 < keep_prob)                             # Step 2: convert entries of D1 to 0 or 1 (using keep_prob as the threshold)
        A1 = A1 * D1                                      # Step 3: shut down some neurons of A1
        A1 = A1 / keep_prob                               # Step 4: scale the value of neurons that haven't been shut down
        ### END CODE HERE ###
        Z2 = np.dot(W2, A1) + b2
        A2 = relu(Z2)
        ### START CODE HERE ### (approx. 4 lines)
        D2 = np.random.rand(A2.shape[0], A2.shape[1])     # Step 1: initialize matrix D2 = np.random.rand(..., ...)
        D2 = (D2 < keep_prob)                             # Step 2: convert entries of D2 to 0 or 1 (using keep_prob as the threshold)
        A2 = A2 * D2                                      # Step 3: shut down some neurons of A2
        A2 = A2 / keep_prob                               # Step 4: scale the value of neurons that haven't been shut down
        ### END CODE HERE ###
        Z3 = np.dot(W3, A2) + b3
        A3 = sigmoid(Z3)

        cache = (Z1, D1, A1, W1, b1, Z2, D2, A2, W2, b2, Z3, A3, W3, b3)

        return A3, cache

4.2 - Backward propagation with dropout

Exercise: Implement the backward propagation with dropout. As before, you are training a 3-layer network. Add dropout to the first and second hidden layers, using the masks $D^{[1]}$ and $D^{[2]}$ stored in the cache.

Instruction: Backpropagation with dropout is actually quite easy. You will have to carry out 2 Steps:

- You had previously shut down some neurons during forward propagation, by applying a mask $D^{[1]}$ to A1. In backpropagation, you will have to shut down the same neurons, by reapplying the same mask $D^{[1]}$ to dA1.

- During forward propagation, you had divided A1 by keep_prob. In backpropagation, you'll therefore have to divide dA1 by keep_prob again (the calculus interpretation is that if $A^{[1]}$ is scaled by keep_prob, then its derivative $dA^{[1]}$ is also scaled by the same keep_prob).
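The two backward steps can be checked numerically on a single layer. In the sketch below, `upstream` is a made-up stand-in for the gradient arriving from the next layer, and `loss` is a hypothetical scalar built only so that a finite-difference gradient can be compared with the mask-and-rescale rule; none of these names come from the assignment.

```python
import numpy as np

np.random.seed(1)
keep_prob = 0.8
A = np.random.rand(3, 4)                   # activations before dropout
D = np.random.rand(3, 4) < keep_prob       # mask saved during the forward pass
upstream = np.random.randn(3, 4)           # stand-in for the gradient from the next layer

def loss(A_in):
    """Scalar whose gradient with respect to the dropped-out activations equals `upstream`."""
    return np.sum(upstream * (A_in * D / keep_prob))

# Backward pass: reapply the same mask, then divide by keep_prob again.
dA = upstream * D
dA = dA / keep_prob

# Central-difference estimate of d(loss)/dA for comparison.
eps = 1e-6
numeric = np.zeros_like(A)
for idx in np.ndindex(A.shape):
    A_plus, A_minus = A.copy(), A.copy()
    A_plus[idx] += eps
    A_minus[idx] -= eps
    numeric[idx] = (loss(A_plus) - loss(A_minus)) / (2 * eps)

max_diff = np.max(np.abs(dA - numeric))
print("largest difference:", max_diff)
```

Entries that were dropped in the forward pass receive a zero gradient, which is why the same mask has to be reused rather than sampling a new one.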

    # GRADED FUNCTION: backward_propagation_with_dropout

    def backward_propagation_with_dropout(X, Y, cache, keep_prob):
        """
        Implements the backward propagation of our baseline model to which we added dropout.

        Arguments:
        X -- input dataset, of shape (2, number of examples)
        Y -- "true" labels vector, of shape (output size, number of examples)
        cache -- cache output from forward_propagation_with_dropout()
        keep_prob - probability of keeping a neuron active during drop-out, scalar

        Returns:
        gradients -- A dictionary with the gradients with respect to each parameter, activation and pre-activation variables
        """

        m = X.shape[1]
        (Z1, D1, A1, W1, b1, Z2, D2, A2, W2, b2, Z3, A3, W3, b3) = cache

        dZ3 = A3 - Y
        dW3 = 1./m * np.dot(dZ3, A2.T)
        db3 = 1./m * np.sum(dZ3, axis=1, keepdims = True)
        dA2 = np.dot(W3.T, dZ3)
        ### START CODE HERE ### (≈ 2 lines of code)
        dA2 = dA2 * D2              # Step 1: Apply mask D2 to shut down the same neurons as during the forward propagation
        dA2 = dA2 / keep_prob       # Step 2: Scale the value of neurons that haven't been shut down
        ### END CODE HERE ###
        dZ2 = np.multiply(dA2, np.int64(A2 > 0))
        dW2 = 1./m * np.dot(dZ2, A1.T)
        db2 = 1./m * np.sum(dZ2, axis=1, keepdims = True)

        dA1 = np.dot(W2.T, dZ2)
        ### START CODE HERE ### (≈ 2 lines of code)
        dA1 = dA1 * D1              # Step 1: Apply mask D1 to shut down the same neurons as during the forward propagation
        dA1 = dA1 / keep_prob       # Step 2: Scale the value of neurons that haven't been shut down
        ### END CODE HERE ###
        dZ1 = np.multiply(dA1, np.int64(A1 > 0))
        dW1 = 1./m * np.dot(dZ1, X.T)
        db1 = 1./m * np.sum(dZ1, axis=1, keepdims = True)

        gradients = {"dZ3": dZ3, "dW3": dW3, "db3": db3, "dA2": dA2,
                     "dZ2": dZ2, "dW2": dW2, "db2": db2, "dA1": dA1,
                     "dZ1": dZ1, "dW1": dW1, "db1": db1}

        return gradients

Note:

- A common mistake when using dropout is to use it both in training and testing. You should use dropout (randomly eliminate nodes) only in training.

- Deep learning frameworks like TensorFlow, PaddlePaddle, Keras or Caffe come with a dropout layer implementation. Don't stress - you will soon learn some of these frameworks.
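One way to avoid applying dropout at test time is to make the training/testing distinction an explicit argument. The function below is a hypothetical sketch of that idea, not the API of any framework mentioned above; the name `dropout_layer` and its `training` flag are assumptions for illustration.

```python
import numpy as np

def dropout_layer(A, keep_prob, training, rng):
    """Inverted dropout during training; returns A unchanged at test time. Hypothetical helper."""
    if not training or keep_prob >= 1:
        return A
    mask = rng.random(A.shape) < keep_prob   # True with probability keep_prob
    return A * mask / keep_prob

rng = np.random.default_rng(0)
A = np.arange(1.0, 7.0).reshape(2, 3)        # toy activations 1..6

train_out = dropout_layer(A, keep_prob=0.5, training=True, rng=rng)
test_out = dropout_layer(A, keep_prob=0.5, training=False, rng=rng)

print("training output:\n", train_out)       # entries are either 0 or doubled
print("test output:\n", test_out)            # identical to A
```

Because the rescaling already happens during training, nothing needs to be scaled at test time, which is the practical benefit of the inverted form.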

**What you should remember about dropout:**

- Dropout is a regularization technique.

- You only use dropout during training. Don't use dropout (randomly eliminate nodes) during test time.

- Apply dropout both during forward and backward propagation.

- During training time, divide each dropout layer by keep_prob to keep the same expected value for the activations. For example, if keep_prob is 0.5, then we will on average shut down half the nodes, so the output will be scaled by 0.5 since only the remaining half are contributing to the solution. Dividing by 0.5 is equivalent to multiplying by 2. Hence, the output now has the same expected value. You can check that this works even when keep_prob takes values other than 0.5.
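The last point can be checked empirically for several values of keep_prob. This is a rough sketch on constant toy activations; the value 3.0, the matrix size and the seed are arbitrary, and the `results` dictionary is introduced only for this example.

```python
import numpy as np

np.random.seed(0)
A = np.full((100, 1000), 3.0)     # constant activations make the mean easy to read

results = {}
for keep_prob in (0.3, 0.5, 0.86):
    D = np.random.rand(*A.shape) < keep_prob
    unscaled = (A * D).mean()               # mean shrinks to roughly keep_prob * 3.0
    scaled = (A * D / keep_prob).mean()     # mean stays roughly at 3.0
    results[keep_prob] = (unscaled, scaled)
    print(f"keep_prob={keep_prob}: unscaled mean={unscaled:.3f}, scaled mean={scaled:.3f}")
```

The scaled mean only matches the original in expectation, so small deviations from 3.0 are normal and shrink as the matrix grows.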
