PyCaret: The Machine Learning Omnibus

Any machine learning project starts with loading the dataset and ends (continues?!) with finalizing the optimum model, or ensemble of models, for predictions on unseen data and production deployment.

As machine learning practitioners, we know there are several pit stops to make along the way to the best possible prediction performance. These intermediate steps include Exploratory Data Analysis (EDA) and data preprocessing (missing value treatment, outlier treatment, changing data types, encoding categorical features, data transformation, feature engineering/selection, sampling and the train-test split, to name a few) before we can embark on model building, evaluation and then prediction.

We end up importing dozens of Python packages to help us do this, which means getting familiar with the syntax and parameters of multiple function calls within each of these packages.

Have you wished that there could be a single package that can handle the entire journey end to end with a consistent syntax interface? I sure have!

Enter PyCaret

These wishes were answered by the PyCaret package, and it is now even more awesome with the release of pycaret 2.0.

Starting with this article, I will post a series on how pycaret helps us zip through the various stages of an ML project.

Installation

Installation is a breeze and is over in a few minutes, with all dependencies installed as well. It is recommended to install into a virtual environment, such as a python3 virtualenv or a conda environment, to avoid clashes with other pre-installed packages.

pip install pycaret==2.0

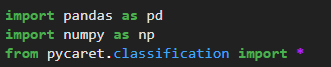

Once installed, we are ready to begin! We import the package into our notebook environment. We will take up a classification problem here. Similarly, the respective PyCaret modules can be imported for scenarios involving regression, clustering, anomaly detection, NLP and association rule mining.
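
As a rough sketch of how the task types listed here map to importable modules: the task labels on the left are made up for this example, while the module paths on the right are the ones I understand PyCaret 2.0 to ship. The helper name `load_pycaret_module` is hypothetical.

```python
import importlib

# Task label -> PyCaret 2.0 module path. The labels are invented for this
# sketch; the module paths are assumed to match the PyCaret 2.0 package layout.
PYCARET_MODULES = {
    "classification": "pycaret.classification",
    "regression": "pycaret.regression",
    "clustering": "pycaret.clustering",
    "anomaly detection": "pycaret.anomaly",
    "nlp": "pycaret.nlp",
    "association rules": "pycaret.arules",
}


def load_pycaret_module(task):
    """Import and return the PyCaret module for the given task label."""
    try:
        module_path = PYCARET_MODULES[task]
    except KeyError:
        raise ValueError(f"unknown task: {task!r}") from None
    # The import happens here, so PyCaret is only needed when this is called.
    return importlib.import_module(module_path)


# In a notebook, the classification module is typically pulled in with:
#   from pycaret.classification import *
```

The star import is the usual notebook convenience; in a longer script, importing only the functions you need keeps the namespace cleaner.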

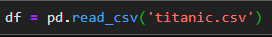

We will use the Titanic dataset from kaggle.com. You can download the dataset from here.

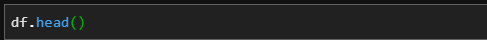

Let's check the first few rows of the dataset using the head() function:
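
A minimal sketch of loading the data and peeking at it, assuming the downloaded file is a CSV and that pandas is installed. The helper name `load_dataset` and the file name in the usage comment are assumptions, not taken from the original notebook.

```python
import pandas as pd


def load_dataset(csv_path):
    """Read a CSV file into a pandas DataFrame."""
    return pd.read_csv(csv_path)


# Usage (the file name is an assumption about where the download was saved):
# df = load_dataset("train.csv")
# df.head()   # shows the first five rows by default
```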

Setup

The setup() function of pycaret does most (correction: ALL) of the heavy lifting that would otherwise take dozens of lines of code, in just a single line!
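
Here is a minimal sketch of that single line, wrapped in a small helper. It assumes the data is already in a DataFrame and that the target column is called "Survived" (the label column in the Kaggle Titanic CSV, as far as I know); `check_target` and `run_setup` are hypothetical names for this example.

```python
def check_target(columns, target):
    """Fail early if the target column is missing from the dataset."""
    if target not in columns:
        raise KeyError(f"target column {target!r} not found in dataset")
    return target


def run_setup(df, target="Survived"):
    """Run PyCaret's setup() on a DataFrame with the given target column.

    "Survived" is an assumed target name; change it to match your data.
    """
    # Imported lazily so this sketch can be loaded without PyCaret installed.
    from pycaret.classification import setup

    check_target(list(df.columns), target)
    # The one-line call: pass the DataFrame and name the target feature.
    return setup(data=df, target=target)
```

The up-front column check is not part of PyCaret; it is only there so a typo in the target name fails with a clear message.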

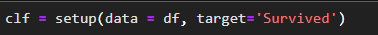

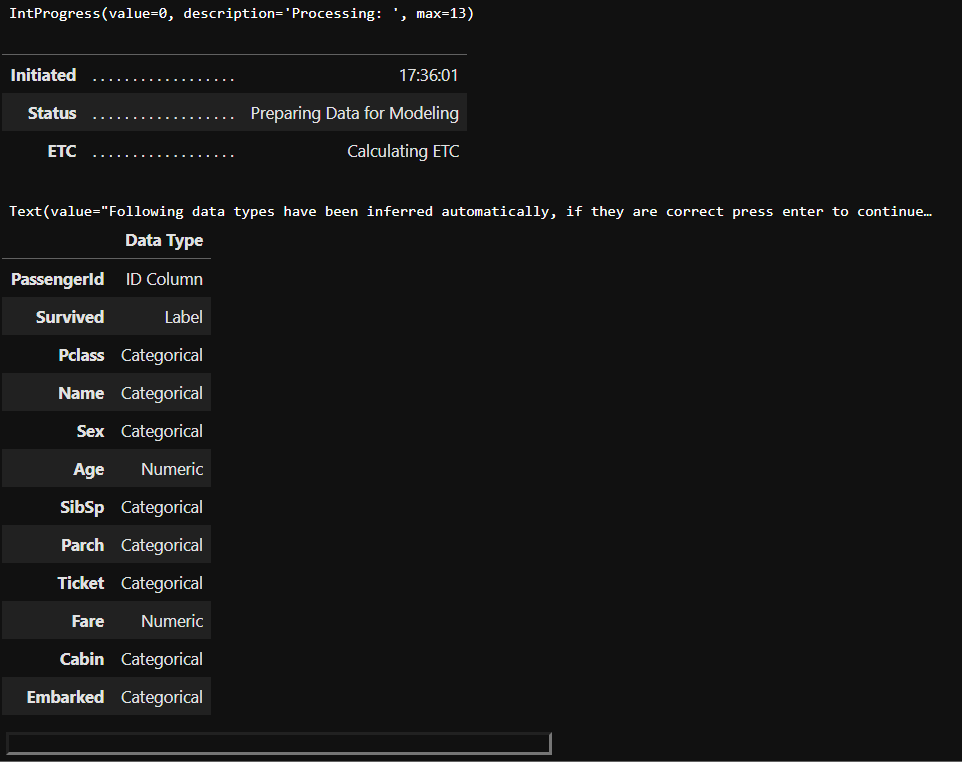

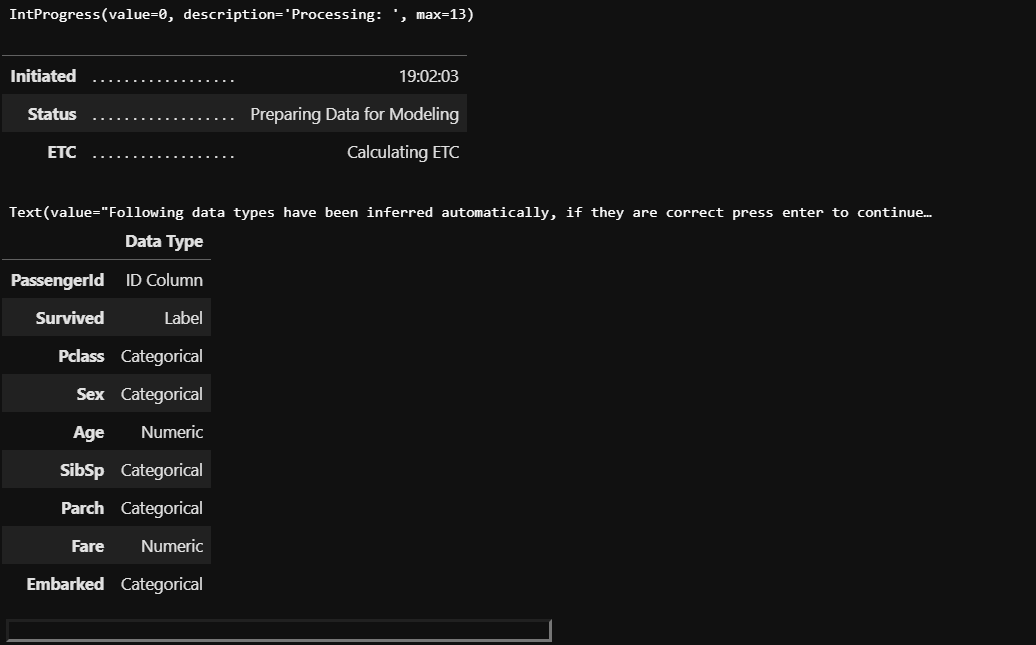

We just need to pass the dataframe and specify the name of the target feature as the arguments. The setup command generates the following output.

setup has helpfully inferred the data types of the features in the dataset. If we agree with them, all we need to do is hit Enter. If you think the data types inferred by setup are not correct, you can type quit in the field at the bottom and go back to the setup function to make changes. We will see how to do that shortly. For now, let's hit Enter and see what happens.

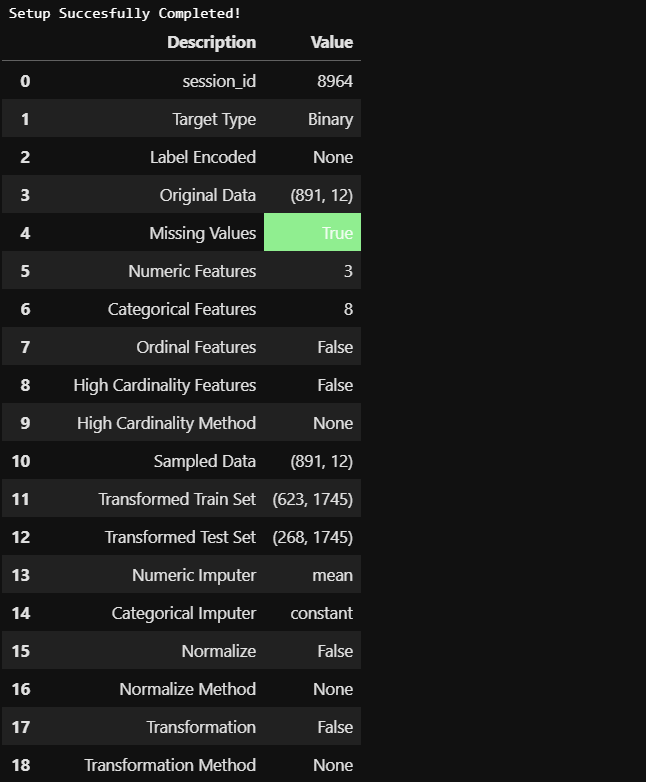

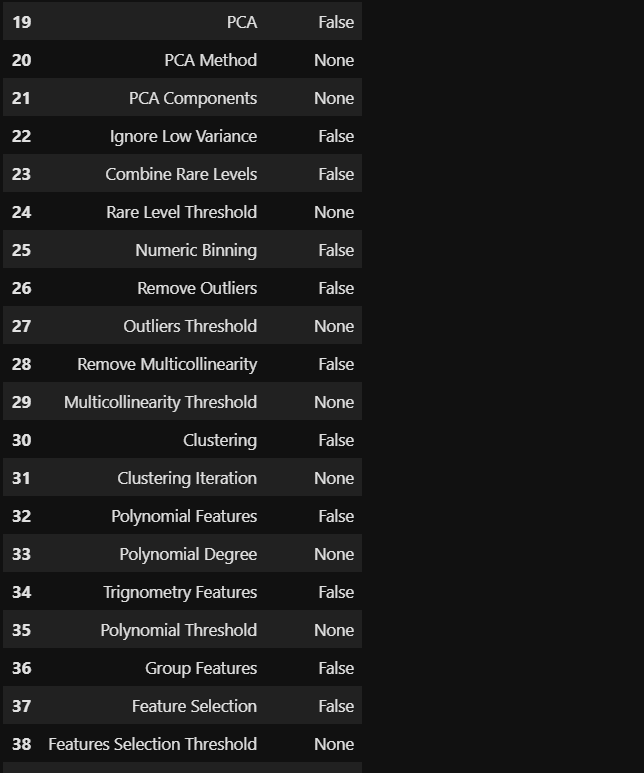

Whew! A whole lot seems to have happened under the hood in just one line of innocuous-looking code! Let's take stock:

- checked for missing values

- identified numeric and categorical features

- created train and test data sets from the original dataset

- imputed missing values in continuous features with the mean

- imputed missing values in categorical features with a constant value

- done label-encoding

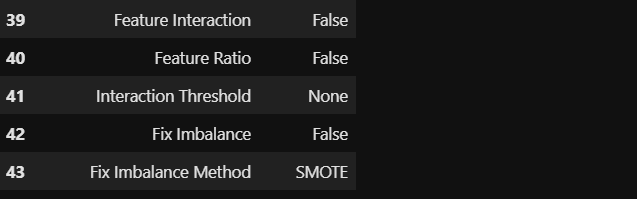

- ...and a whole host of other options seem to be available, including outlier treatment, data scaling, feature transformation, dimensionality reduction, multi-collinearity treatment, feature selection and handling imbalanced data!
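
To make the two imputation steps in the list above concrete, here is a small pure-Python sketch. It illustrates the idea only and is not PyCaret's implementation; the function names, the "not_available" placeholder and the toy values are all assumptions for this example.

```python
from statistics import mean


def impute_numeric_with_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def impute_categorical_with_constant(values, constant="not_available"):
    """Replace None entries with a fixed placeholder category."""
    return [constant if v is None else v for v in values]


ages = [20.0, None, 40.0]   # toy values, not from the Titanic data
ports = ["S", None, "C"]    # toy values, not from the Titanic data

print(impute_numeric_with_mean(ages))            # [20.0, 30.0, 40.0]
print(impute_categorical_with_constant(ports))   # ['S', 'not_available', 'C']
```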

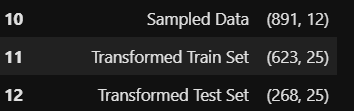

But hey! What is that on lines 11 & 12 of the output? The number of features in the train and test datasets is 1745? This looks like a case of categorical encoding gone berserk, most probably on high-cardinality features like name, ticket and cabin: the column count can only balloon like this if nearly every distinct value ends up as its own column. Later in this article and in the next one, we will look at how to control setup to address such cases proactively.
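
A jump to 1745 columns is what one would expect if each distinct value of a categorical feature became its own column (one-hot style encoding). That is my reading of the output, not something the output states. The sketch below, with made-up toy rows and a hypothetical helper `one_hot_width`, shows how quickly near-unique columns inflate the width.

```python
def one_hot_width(rows, columns):
    """Count the columns one-hot encoding would create for each feature.

    One-hot encoding adds one column per distinct value, so the width of a
    feature is simply its number of distinct values.
    """
    return {col: len({row[col] for row in rows}) for col in columns}


# Toy rows, made up for illustration (not real Titanic records).
rows = [
    {"sex": "male", "ticket": "T-001"},
    {"sex": "female", "ticket": "T-002"},
    {"sex": "male", "ticket": "T-003"},
    {"sex": "female", "ticket": "T-004"},
]

widths = one_hot_width(rows, ["sex", "ticket"])
print(widths)                 # {'sex': 2, 'ticket': 4}
print(sum(widths.values()))   # 6
```

A column such as a ticket number, where almost every row has its own value, contributes roughly one column per row, which is why dropping such features matters.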

Customizing setup

To start with, how can we exclude features from model building, like the three features above? We pass the variables we want to exclude in the ignore_features argument of the setup function. Note that ID and DateTime columns, when inferred as such, are automatically set to be ignored for modelling.
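
A sketch of passing ignore_features, under the assumption that the target is "Survived" and the three columns are spelled "Name", "Ticket" and "Cabin" as in the Kaggle CSV header. `build_setup_kwargs` and `run_setup_ignoring` are hypothetical helper names.

```python
def build_setup_kwargs(target, ignore=()):
    """Assemble keyword arguments for setup(), dropping unwanted features."""
    ignore = list(ignore)
    if target in ignore:
        raise ValueError("the target column cannot be ignored")
    kwargs = {"target": target}
    if ignore:
        kwargs["ignore_features"] = ignore
    return kwargs


def run_setup_ignoring(df, target="Survived", ignore=("Name", "Ticket", "Cabin")):
    """Run setup() while excluding high-cardinality text columns.

    The column names are assumptions based on the Kaggle Titanic CSV header.
    """
    # Imported lazily so this sketch can be loaded without PyCaret installed.
    from pycaret.classification import setup

    return setup(data=df, **build_setup_kwargs(target, ignore))
```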

Note that pycaret, while asking for our confirmation, has dropped the three features mentioned above. Let's press Enter and proceed.

In the resulting output (the truncated version is shown below), we can see that after setup the dataset shape is much more manageable, with encoding applied only to the remaining, more relevant categorical features:

In the next article in this series, we will look in detail at the further data preprocessing tasks we can perform on the dataset by passing additional arguments to this single setup function.

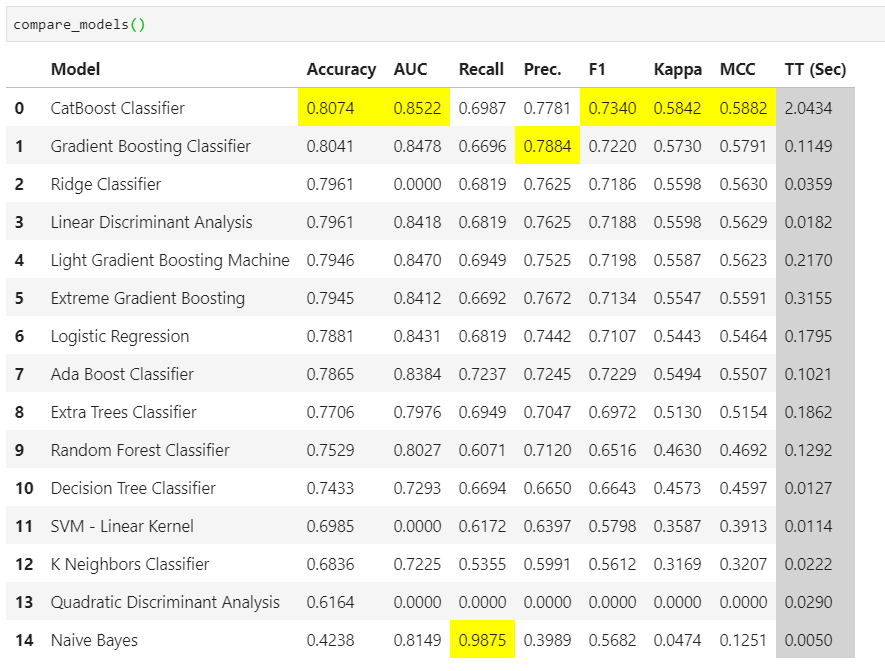

But before we go, let's do a flash-forward to the amazing model comparison capabilities of pycaret using the compare_models() function.

Boom! All it takes is just compare_models() to get the results of 15 classification modelling algorithms compared across various classification metrics on cross-validation. At a glance, we can see that CatBoost classifier performs best across most of the metrics with Naive-Bayes doing well on recall and Gradient Boosting on precision. The top-performing model for each metric is highlighted automatically by pycaret.
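
A minimal sketch of that call, assuming setup() has already been run in the same session. The wrapper name `best_model_by` is hypothetical; `sort` is the compare_models() argument that, as I understand the 2.0 API, selects which metric ranks the score grid.

```python
def best_model_by(metric="Accuracy"):
    """Compare the available classifiers and return the top one for a metric.

    Must be called after setup() has been run in the same session.
    """
    # Imported lazily so this sketch can be loaded without PyCaret installed.
    from pycaret.classification import compare_models

    # `sort` picks the metric used to rank the score grid.
    return compare_models(sort=metric)


# Usage, after setup():
# best = best_model_by("Recall")
```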

Depending on the model evaluation metric(s) we are interested in, pycaret helps us zoom straight in on the top-performing model, which we can then tune further via its hyper-parameters. More on this in the upcoming articles.

In conclusion, we have caught a glimpse of how pycaret can help us fast-track the ML project life cycle through minimal code combined with extensive customization of the critical data pre-processing stages.

You may also be interested in my other articles on awesome packages that use minimal code to deliver maximum results in Exploratory Data Analysis (EDA) and visualization.

Thanks for reading; I would love to hear your feedback. Cheers!

Translated from: https://towardsdatascience.com/pycaret-the-machine-learning-omnibus-dadf6e230f7b
