Parsing Web Pages with Selenium

Web Scraping Series

Overview

Selenium is a portable framework for testing web applications. It is open-source software released under the Apache License 2.0 and runs on Windows, Linux and macOS. Although testing is its main purpose, Selenium is also widely used as a web scraping tool. Without delving into all the components of Selenium, we shall focus on the single component that is useful for web scraping: WebDriver. Selenium WebDriver lets us control a web browser through a programming interface, originally in order to create and execute test cases.

In our case, we shall be using it for scraping data from websites. Selenium comes in handy when websites display content dynamically, i.e. use JavaScript to render content. Even though Scrapy is a powerful web scraping framework, on its own it does not execute JavaScript, so it is of little use with these dynamic websites. My goal for this tutorial is to familiarize you with Selenium and carry out some basic web scraping using it.

Let us start by installing Selenium and a webdriver. Selenium provides official WebDriver bindings for Java, Python, C#, Ruby and JavaScript, and community-maintained bindings exist for other languages such as PHP and Perl. The examples in this tutorial are in Python. Tutorials for the other languages are available on the internet.

This is the third part of a four-part tutorial series on web scraping using Scrapy and Selenium. The other parts are:

Part 1: Web scraping with Scrapy: Theoretical Understanding

Part 2: Web scraping with Scrapy: Practical Understanding

Part 4: Web scraping with Selenium & Scrapy

Installing Selenium and WebDriver

Installing Selenium

Installing Selenium on any Linux OS is easy. Just execute the following command in a terminal and Selenium will be installed automatically.

    pip install selenium

Installing WebDriver

Selenium officially has WebDrivers for 5 web browsers. Here, we shall install the WebDriver for two of the most widely used browsers: Chrome and Firefox.

Installing Chromedriver for Chrome

First, we need to download the latest stable version of chromedriver from Chrome's official site. It comes as a zip file. All we need to do is extract it and put it on the executable path. The commands below use version 83.0.4103.39; pick the release that matches your installed Chrome version.

    wget https://chromedriver.storage.googleapis.com/83.0.4103.39/chromedriver_linux64.zip
    unzip chromedriver_linux64.zip
    sudo mv chromedriver /usr/local/bin/

Installing Geckodriver for Firefox

Installing geckodriver for Firefox is even simpler, since it is maintained by Mozilla, the developer of Firefox. On Ubuntu, all we need to do is execute the following line in a terminal, and we are ready to play around with Selenium and geckodriver.

    sudo apt install firefox-geckodriver

Examples

There are two examples with increasing levels of complexity. The first is a simple one: opening a webpage, typing into textboxes and pressing keys. It showcases how a webpage can be controlled by a program through Selenium. The second is a more complex web scraping example involving mouse scrolling, mouse button clicks and navigating to other pages. The goal here is to make you feel confident enough to start web scraping with Selenium.

Example 1: Logging into Facebook using Selenium

Let us try out a simple automation task using Selenium and chromedriver as our training-wheels exercise. We will log into a Facebook account without performing any kind of data scraping. I am assuming that you have some knowledge of identifying the HTML tags used in a webpage with the browser's developer tools. The following piece of Python code opens a new Chrome browser, opens the Facebook main page, enters a username and password, and clicks the Login button.

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys

    user_name = "Your E-mail"
    password = "Your Password"

    # Creating a chromedriver instance
    driver = webdriver.Chrome()  # For Chrome
    # driver = webdriver.Firefox()  # For Firefox

    # Opening facebook homepage
    driver.get("https://www.facebook.com")

    # Identifying email and password textboxes by their id attribute.
    # find_element(By.ID, ...) replaces find_element_by_id(), which was
    # removed in recent Selenium 4 releases.
    email = driver.find_element(By.ID, "email")
    passwd = driver.find_element(By.ID, "pass")

    # Sending user_name and password to corresponding textboxes
    email.send_keys(user_name)
    passwd.send_keys(password)

    # Sending a signal that RETURN key has been pressed
    passwd.send_keys(Keys.RETURN)

    # driver.quit()

After executing this Python code, your Facebook homepage will open in a new Chrome browser window. Let us examine how this works.

- It all starts with the creation of a webdriver instance for your browser. As I am using Chrome, I have used driver = webdriver.Chrome(). This call is what opens the new browser window.

- Then we open the Facebook webpage using driver.get("https://www.facebook.com"). When Python executes driver.get(URL), the browser navigates to the webpage specified by the URL.

- Once the homepage is loaded, we identify the textboxes for the e-mail and password using their HTML tag's id attribute. This is done by looking the elements up by id: driver.find_element_by_id() in Selenium 3, or driver.find_element(By.ID, ...) in Selenium 4.

- We send the user_name and password values for logging into Facebook using send_keys().

- We then simulate the user's action of pressing the RETURN/ENTER key by sending its corresponding signal using send_keys(Keys.RETURN).

IMPORTANT NOTE: Any instance created in a program should be closed at the end of the program or once it has served its purpose. So, whenever we create a webdriver instance, it has to be terminated using driver.quit(). If we do not terminate the opened instances, they keep using up RAM, which may slow the machine down. In the above example, the termination call has been commented out so that the output stays visible in the browser window; if the instance were terminated, the browser window would close and the reader would not be able to see the output.
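One way to make sure driver.quit() is always called, even when the scraping code raises an exception, is to wrap the driver in a context manager. The following is a minimal sketch of that idea; the helper name managed_driver is hypothetical and not part of Selenium, and the factory argument is assumed to be any callable that returns an object with a quit() method, such as webdriver.Chrome.

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(factory):
    """Create a driver with factory() and always call quit() on exit."""
    driver = factory()
    try:
        yield driver
    finally:
        # Runs on normal exit and also when the body raises an exception
        driver.quit()


# Possible usage with Selenium (assumes selenium and chromedriver are installed):
#
#     from selenium import webdriver
#     with managed_driver(webdriver.Chrome) as driver:
#         driver.get("https://www.facebook.com")
```

With this pattern, the browser window is closed as soon as the with block ends, so the call would have to be left out again whenever the window should stay open for inspection.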

Example 2: Scraping Pollution data from OpenAQ

This is a more complex example. OpenAQ is a non-profit organization that collects and shares air quality data; the data are open and can be accessed in many ways. This openness is evident from the site's robots.txt, which disallows nothing:

    User-agent: *
    Disallow:

Our goal here is to collect PM2.5 readings from all the countries listed on http://openaq.org. PM2.5 refers to particulate matter (PM) with a diameter of less than 2.5 micrometres, which is far smaller than the diameter of a human hair. If the reader is interested in knowing more about PM2.5, please follow this link.
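The robots.txt rules quoted above can also be checked programmatically with Python's standard-library urllib.robotparser. The sketch below parses the two lines from the article directly rather than downloading the file; the helper name is_allowed is an assumption made for illustration.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines, url, user_agent="*"):
    """Return True if the given robots.txt lines permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


# The two lines shown in the article: an empty Disallow permits everything
openaq_rules = ["User-agent: *", "Disallow:"]
locations_allowed = is_allowed(openaq_rules, "https://openaq.org/#/locations")
```

For a live check, RobotFileParser also offers set_url() and read() to fetch the file from the site itself.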

The reason for choosing Selenium over Scrapy is that http://openaq.org uses React JS to render data. If the pages were static, Scrapy would scrape the data efficiently. To scrape data, we first need to analyze the website, manually navigate the pages and note down the user interaction steps required to extract the data.

Understanding the http://openaq.org layout

It is always better to scrape with as few webpage navigations as possible. The website has a page, https://openaq.org/#/locations, which can be used as a starting point for scraping.

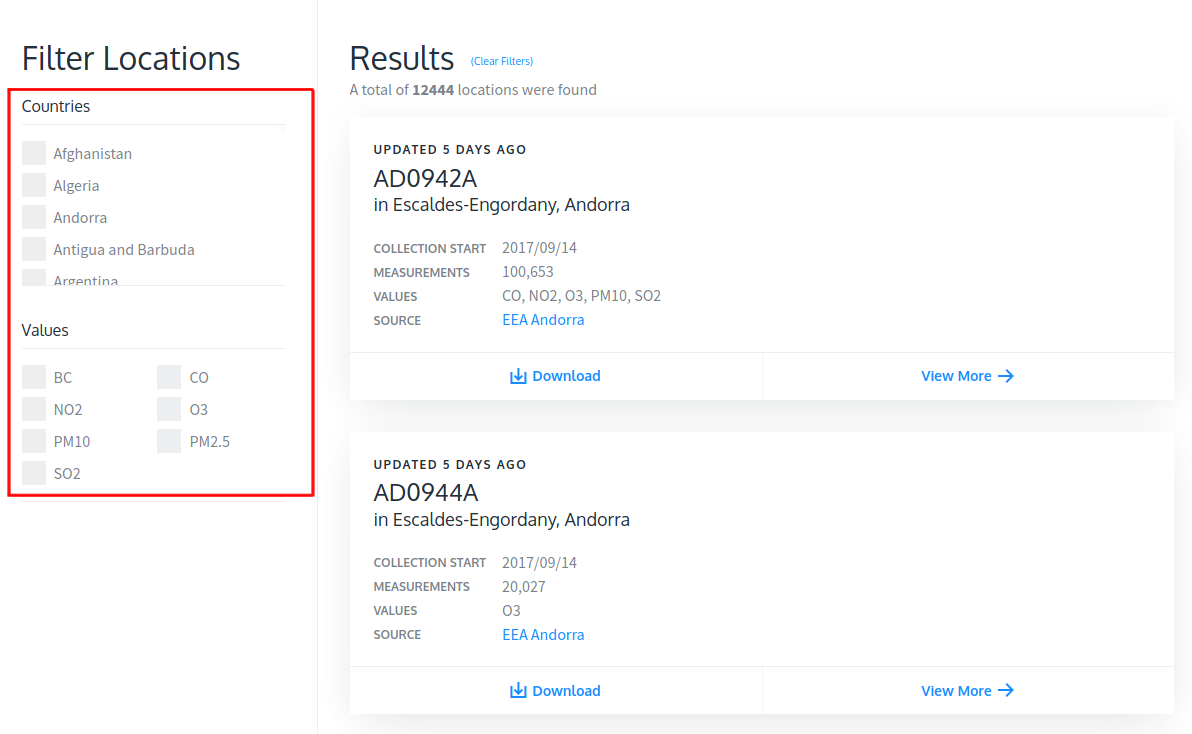

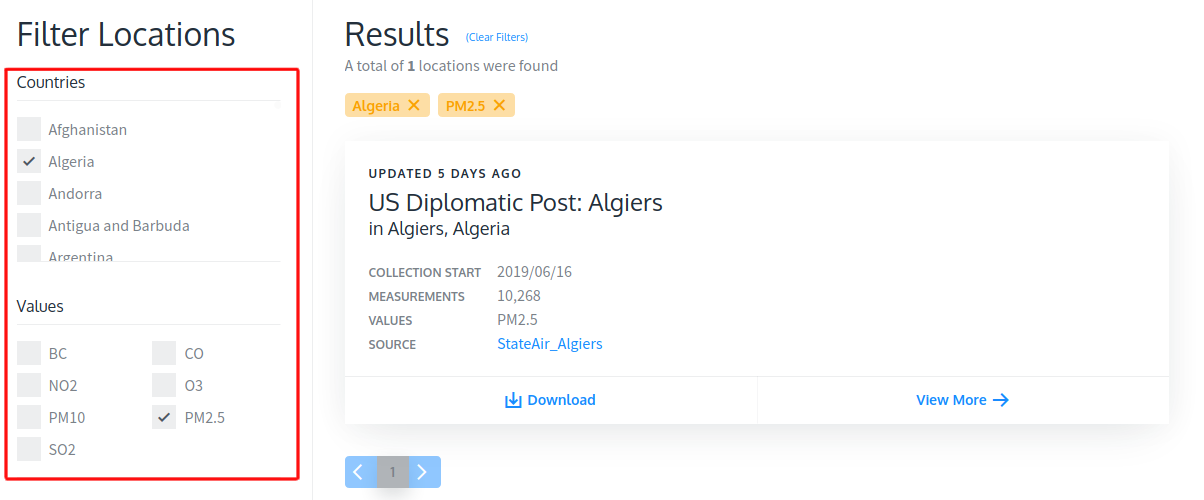

The filter locations option on the left-side panel is used to filter PM2.5 data for each country. The results on the right-side panel are shown as cards; clicking a card opens a new page displaying PM2.5 and other data.

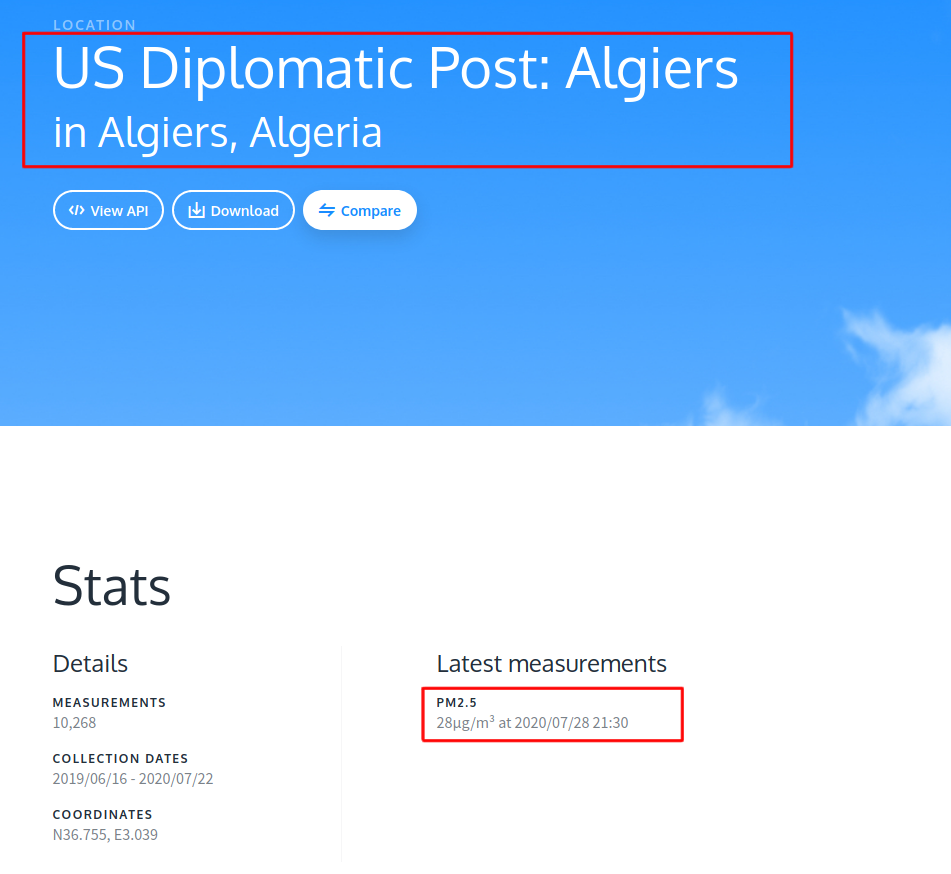

A sample page containing PM2.5 data is shown below. From this page, we can extract the PM2.5 value, location, city and country, as well as the date and time at which the PM2.5 value was recorded, using XPath or CSS selectors.

Similarly, the left-side panel can be used to filter and collect the URLs of all the locations that contain PM2.5 data. The following are the actions that we performed manually to collect the data.

- Open https://openaq.org/#/locations

- From the left-side panel, select/click the checkbox of a country. Let us go through the countries alphabetically.

- Also from the left-side panel, select/click the PM2.5 checkbox.

- Wait for the cards to load in the right-side panel. Each card opens a new webpage when clicked, displaying PM2.5 and other data.

Steps needed to collect PM2.5 data

Based on the manual steps performed, data collection from http://openaq.org is broken down into 3 steps.

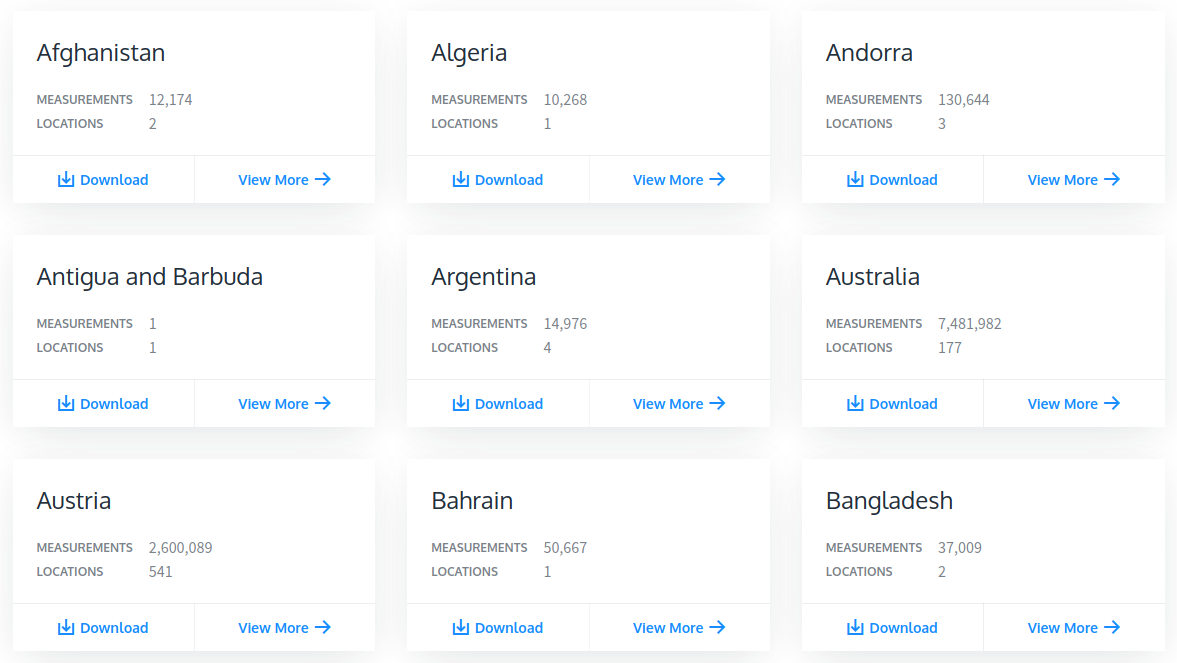

- Collecting country names as displayed on the OpenAQ countries webpage. These are used to select the appropriate checkboxes while filtering.

- Collecting URLs that contain PM2.5 data from each country. Some countries have PM2.5 readings from more than 20 locations. Collecting them requires further manipulation of the webpage, which is explained in the code section.

- Opening the webpage of each individual URL and extracting the PM2.5 data.

Scraping PM2.5 data

Now that we have the steps needed, let us start to code. The example is divided into 3 functions, each performing the task corresponding to one of the aforementioned 3 steps. The Python code for this example can be found in my GitHub repository.
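Since each function hands its result to the next one through a JSON file on disk, the three steps can be rerun independently. The sketch below illustrates that handoff with simple checkpointing: a step is executed only if its output file does not exist yet. The helper run_step is hypothetical, and it assumes that a step returns its data, whereas the functions in this tutorial write their files themselves.

```python
import json
import os


def run_step(output_path, step):
    """Run step() only if output_path is missing, then return the stored data."""
    if not os.path.exists(output_path):
        data = step()
        with open(output_path, "w") as f:
            json.dump(data, f)
    # Always read back from disk so later steps see exactly what was stored
    with open(output_path, "r") as f:
        return json.load(f)
```

A long scraping run that fails halfway through the last step can then be restarted without collecting the country names and URLs again.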

get_countries()

Rather than the OpenAQ locations webpage, we use the https://openaq.org/#/countries webpage, which displays all the countries at once. It is easier to extract country names from this page.

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC
    import json

    def get_countries():
        countries_list = []

        # driver = webdriver.Chrome()  # To open a new browser window and navigate it

        # Use the headless option to avoid opening a new browser window.
        # Passing options= replaces the desired_capabilities argument,
        # which recent Selenium 4 releases no longer accept.
        options = webdriver.ChromeOptions()
        options.add_argument("--headless")
        driver = webdriver.Chrome(options=options)

        # Getting webpage with the list of countries
        driver.get("https://openaq.org/#/countries")

        # Implicit wait
        driver.implicitly_wait(10)

        # Explicit wait
        wait = WebDriverWait(driver, 5)
        wait.until(EC.presence_of_element_located((By.CLASS_NAME, "card__title")))

        # find_elements(By.CLASS_NAME, ...) replaces find_elements_by_class_name()
        countries = driver.find_elements(By.CLASS_NAME, "card__title")
        for country in countries:
            countries_list.append(country.text)

        driver.quit()

        # Write countries_list to json file
        with open("countries_list.json", "w") as f:
            json.dump(countries_list, f)

Let us understand how the code works. As always, the first step is to instantiate the webdriver. Here, instead of opening a new browser window, the webdriver is instantiated in headless mode. This way, no browser window is opened and the burden on RAM is reduced. The second step is to open the webpage containing the list of countries. The code above also uses the concept of waits.

Implicit Wait: Once set, it stays in effect for the lifetime of the WebDriver object and applies to all element lookups. It instructs the webdriver to keep trying for up to the given amount of time when an element is not immediately found on the webpage.

Explicit Wait: An intelligent wait confined to a particular web element, in this case the tag with class name "card__title". It is generally used along with expected conditions (imported as EC in the code above).
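Conceptually, an explicit wait is a polling loop: a condition is checked repeatedly until it holds or a timeout expires. The sketch below is a simplified stand-in written in plain Python to illustrate the idea; it is not Selenium's implementation, and the name wait_until and its parameters are assumptions chosen for this example.

```python
import time


def wait_until(condition, timeout=5.0, poll_interval=0.5):
    """Call condition() until it returns a truthy value or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            # The condition holds, so hand back whatever it produced
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

In the tutorial's code, WebDriverWait(driver, 5).until(...) plays this role, with the expected condition checking whether an element with class name "card__title" is present.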

The third step is to extract the country names using the tag with class name "card__title". Finally, the country names are written to a JSON file for persistence. Below is a glimpse of the JSON file.

countries_list.json

    ["Afghanistan", "Algeria", "Andorra", "Antigua and Barbuda", ... ]

get_urls()

The next step after getting the list of countries is to get the URLs of every location that records PM2.5 data. To do this, we need to open the OpenAQ locations webpage and use the left-side panel to filter by country and by PM2.5. Once the filter is applied, the right-side panel is populated with cards for the individual locations that record PM2.5 data. We extract the URL corresponding to each of these cards and eventually write them to a file that is used in the next step of extracting PM2.5 data. Some countries have more than 20 locations that record PM2.5 data. For example, Australia has 162 locations, Belgium has 69 locations and China has 1602 locations. For these countries, the right-side panel on the locations webpage is subdivided into pages. It is essential that we navigate through these pages and collect the URLs of all the locations. The code below has a while True: loop that performs exactly this task of page navigation.
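The page-navigation loop described above can be sketched independently of Selenium. In this minimal illustration, read_page and go_to_next_page are hypothetical callables supplied by the caller: the first returns the items on the current page, the second moves to the next page and returns False when there is none.

```python
def collect_all_pages(read_page, go_to_next_page):
    """Gather the items from every page of a paginated listing."""
    items = []
    while True:
        # Read the current page first, so the last page is never skipped
        items.extend(read_page())
        if not go_to_next_page():
            break
    return items
```

With Selenium, read_page would typically wrap the lookup of the card links, and go_to_next_page would click the "next" button and return False once that button can no longer be found.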

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.action_chains import ActionChains
    from logzero import logger
    import selenium.common.exceptions as exception
    import time
    import json

    def get_urls():
        # Load the countries list written by get_countries()
        with open("countries_list.json", "r") as f:
            countries_list = json.load(f)

        # driver = webdriver.Chrome()
        # Use headless option to not open a new browser window.
        # Passing options= replaces the desired_capabilities argument,
        # which recent Selenium 4 releases no longer accept.
        options = webdriver.ChromeOptions()
        options.add_argument("--headless")
        driver = webdriver.Chrome(options=options)

        urls_final = []
        for country in countries_list:
            # Opening locations webpage
            driver.get("https://openaq.org/#/locations")
            driver.implicitly_wait(5)
            urls = []

            # Scrolling down the country filter till the country is visible.
            # find_element(By.XPATH, ...) replaces find_element_by_xpath()
            action = ActionChains(driver)
            action.move_to_element(driver.find_element(By.XPATH, f'//span[contains(text(),"{country}")]'))
            action.perform()

            # Identifying country and PM2.5 checkboxes
            country_button = driver.find_element(By.XPATH, f'//label[contains(@for,"{country}")]')
            values_button = driver.find_element(By.XPATH, "//span[contains(text(),'PM2.5')]")

            # Clicking the checkboxes
            country_button.click()
            time.sleep(2)
            values_button.click()
            time.sleep(2)

            while True:
                # Navigating subpages where there are more PM2.5 data. For example,
                # Australia has 162 PM2.5 readings from 162 different locations
                # that are spread across 11 subpages.
                locations = driver.find_elements(By.XPATH, "//h1[@class='card__title']/a")

                for loc in locations:
                    link = loc.get_attribute("href")
                    urls.append(link)

                try:
                    next_button = driver.find_element(By.XPATH, "//li[@class='next']")
                    next_button.click()
                except exception.NoSuchElementException:
                    logger.debug(f"Last page reached for {country}")
                    break

            logger.info(f"{country} has {len(urls)} PM2.5 URLs")
            urls_final.extend(urls)

        logger.info(f"Total PM2.5 URLs: {len(urls_final)}")
        driver.quit()

        # Write the URLs to a file
        with open("urls.json", "w") as f:
            json.dump(urls_final, f)

It is always good practice to log the output of programs that tend to run for longer than 5 minutes. For this purpose, the above code makes use of logzero. The output JSON file containing the URLs looks like this.

urls.json

    [
        "https://openaq.org/#/location/US%20Diplomatic%20Post%3A%20Kabul",
        "https://openaq.org/#/location/Kabul",
        "https://openaq.org/#/location/US%20Diplomatic%20Post%3A%20Algiers",
        ...
    ]

get_pm_data()

Getting PM2.5 data from an individual location is a straightforward web scraping task: identify the HTML tag containing the data and extract it with text processing. That is what the code provided below does. It extracts the country, city, location, PM2.5 value, URL of the location, and the date and time at which the PM2.5 value was recorded. Since there are over 5000 URLs to be opened, there would be a problem with RAM usage unless the installed RAM is over 64GB. To make this program run on machines with as little as 8GB of RAM, the webdriver is terminated and re-instantiated every 200 URLs.
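The text processing involved can be isolated into small pure functions, which makes it testable without a browser. The sketch below assumes two input formats inferred from this tutorial's own code and sample output: a title of the form "location", newline, "in city, country", and a reading of the form "valueµg/m³ at date time". The function names are hypothetical.

```python
def parse_title(title_text):
    """Split the page title into (location, city, country)."""
    # Assumed format: "<location>" on the first line,
    # "in <city>, <country>" on the second line
    lines = title_text.split("\n")
    location = lines[0].strip()
    place = lines[1].strip()
    if place.startswith("in "):
        place = place[len("in "):]
    city, _, country = place.partition(",")
    return location, city.strip(), country.strip()


def parse_pm_reading(pm_text):
    """Split a PM2.5 reading into (value, date, time)."""
    # Assumed format: "<value>µg/m³ at <date> <time>"
    value, _, rest = pm_text.partition("µg/m³")
    parts = rest.replace("at ", "", 1).split()
    return value.strip(), parts[0], parts[1]
```

Stripping whitespace in parse_title also avoids the leading space that otherwise ends up in front of the country name.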

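The idea of restarting the webdriver every 200 URLs can be expressed as a small reusable loop. This is a sketch under stated assumptions rather than the tutorial's own code: make_driver is any callable returning an object with a quit() method, handle_url is a hypothetical callback that scrapes one page, and both names are introduced here for illustration only.

```python
def process_in_batches(urls, make_driver, handle_url, batch_size=200):
    """Visit every URL, replacing the driver after each batch_size URLs."""
    results = []
    driver = make_driver()
    try:
        for i, url in enumerate(urls):
            # Start a fresh driver at the beginning of every new batch
            if i != 0 and i % batch_size == 0:
                driver.quit()
                driver = make_driver()
            results.append(handle_url(driver, url))
    finally:
        # Make sure the last driver is closed even if handle_url raises
        driver.quit()
    return results
```

The batch size trades memory for speed: smaller batches keep RAM usage lower, at the cost of starting the browser more often.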

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from logzero import logger
    import selenium.common.exceptions as exception
    import time
    import json

    def get_pm_data():
        # Load the URLs list written by get_urls()
        with open("urls.json", "r") as f:
            urls = json.load(f)

        # Use headless option to not open a new browser window.
        # Passing options= replaces the desired_capabilities argument,
        # which recent Selenium 4 releases no longer accept.
        options = webdriver.ChromeOptions()
        options.add_argument("--headless")
        driver = webdriver.Chrome(options=options)

        list_data_dict = []
        count = 0

        for i, url in enumerate(urls):
            data_dict = {}

            # Open the webpage corresponding to each URL
            driver.get(url)
            driver.implicitly_wait(10)
            time.sleep(2)

            try:
                # Extract Location and City
                loc = driver.find_element(By.XPATH, "//h1[@class='inpage__title']").text.split("\n")
                logger.info(f"loc: {loc}")
                location = loc[0]
                city_country = loc[1].replace("in ", "", 1).split(",")
                city = city_country[0]
                country = city_country[1]
                data_dict["country"] = country
                data_dict["city"] = city
                data_dict["location"] = location

                pm = driver.find_element(By.XPATH, "//dt[text()='PM2.5']/following-sibling::dd[1]").text

                if pm is not None:
                    # Extract PM2.5 value, Date and Time of recording
                    split = pm.split("µg/m³")
                    pm = split[0]
                    date_time = split[1].replace("at ", "").split(" ")
                    date_pm = date_time[1]
                    time_pm = date_time[2]
                    data_dict["pm25"] = pm
                    data_dict["url"] = url
                    data_dict["date"] = date_pm
                    data_dict["time"] = time_pm

                    list_data_dict.append(data_dict)
                    count += 1

            except exception.NoSuchElementException:
                # Logging the locations that do not have PM2.5 data for manual checking.
                # The URL is logged because location, city and country may be
                # undefined if the title lookup itself failed.
                logger.error(f"{url} does not have PM2.5")

            # Terminating and re-instantiating webdriver every 200 URLs to reduce the load on RAM
            if (i != 0) and (i % 200 == 0):
                driver.quit()
                driver = webdriver.Chrome(options=options)
                logger.info("Chromedriver restarted")

        # Write the extracted data into a JSON file
        with open("openaq_data.json", "w") as f:
            json.dump(list_data_dict, f)

        logger.info(f"Scraped {count} PM2.5 readings.")
        driver.quit()

The outcome of the program looks as shown below. The program has extracted PM2.5 values from 4114 individual locations. Imagine opening these individual webpages and manually extracting the data. It is times like this that make us appreciate the use of web scraping programs, or bots in general.

openaq_data.json

    [
        {
            "country": " Afghanistan",
            "city": "Kabul",
            "location": "US Diplomatic Post: Kabul",
            "pm25": "33",
            "url": "https://openaq.org/#/location/US%20Diplomatic%20Post%3A%20Kabul",
            "date": "2020/07/31",
            "time": "11:00"
        },
        {
            "country": " Algeria",
            "city": "Algiers",
            "location": "US Diplomatic Post: Algiers",
            "pm25": "31",
            "url": "https://openaq.org/#/location/US%20Diplomatic%20Post%3A%20Algiers",
            "date": "2020/07/31",
            "time": "08:30"
        },
        {
            "country": " Australia",
            "city": "Adelaide",
            "location": "CBD",
            "pm25": "9",
            "url": "https://openaq.org/#/location/CBD",
            "date": "2020/07/31",
            "time": "11:00"
        },
        ...
    ]

Closing remarks

I hope this tutorial has given you the confidence to start web scraping with Selenium. The complete code of the example is available in my GitHub repository. In the next tutorial, I shall show you how to integrate Selenium with Scrapy.

Till then, good luck. Stay safe and happy learning!

Translated from: https://towardsdatascience.com/web-scraping-with-selenium-d7b6d8d3265a
