Weighted Accuracy (WA), Unweighted Average Recall (UAR) and Unweighted F1 (UF1)
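For reference, the three metrics can be written in terms of per-class counts. The notation is introduced here for illustration and does not come from the original code: $C$ classes, $N$ samples, $n_c = TP_c + FN_c$ true samples of class $c$, and $TP_c$, $FP_c$, $FN_c$ the true positives, false positives and false negatives of class $c$.

$$
\mathrm{WA} = \frac{1}{N}\sum_{c=1}^{C} TP_c = \sum_{c=1}^{C} \frac{n_c}{N}\, R_c
$$

$$
R_c = \frac{TP_c}{TP_c + FN_c}, \qquad P_c = \frac{TP_c}{TP_c + FP_c}, \qquad F1_c = \frac{2 P_c R_c}{P_c + R_c}
$$

$$
\mathrm{UAR} = \frac{1}{C}\sum_{c=1}^{C} R_c, \qquad \mathrm{UF1} = \frac{1}{C}\sum_{c=1}^{C} F1_c
$$

Because WA weights each class's recall by its support $n_c / N$, it reduces to plain overall accuracy, which is why `accuracy_score` and torchmetrics' `Accuracy(average="weighted")` agree. UAR and UF1 give every class the same weight, so a rare class counts as much as a frequent one. Ratios that come out as 0/0 are treated as 0.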

from sklearn.metrics import classification_report

from sklearn.metrics import precision_recall_curve, average_precision_score,roc_curve, auc, precision_score, recall_score, f1_score, confusion_matrix, accuracy_scoreimport torch

from torchmetrics import MetricTracker, F1Score, Accuracy, Recall, Precision, Specificity, ConfusionMatrix

import warnings

warnings.filterwarnings( "ignore" ) pred_list = [ 2 , 0 , 4 , 1 , 4 , 2 , 4 , 2 , 2 , 0 , 4 , 1 , 4 , 2 , 2 , 0 , 4 , 1 , 4 , 2 , 4 , 2 ]

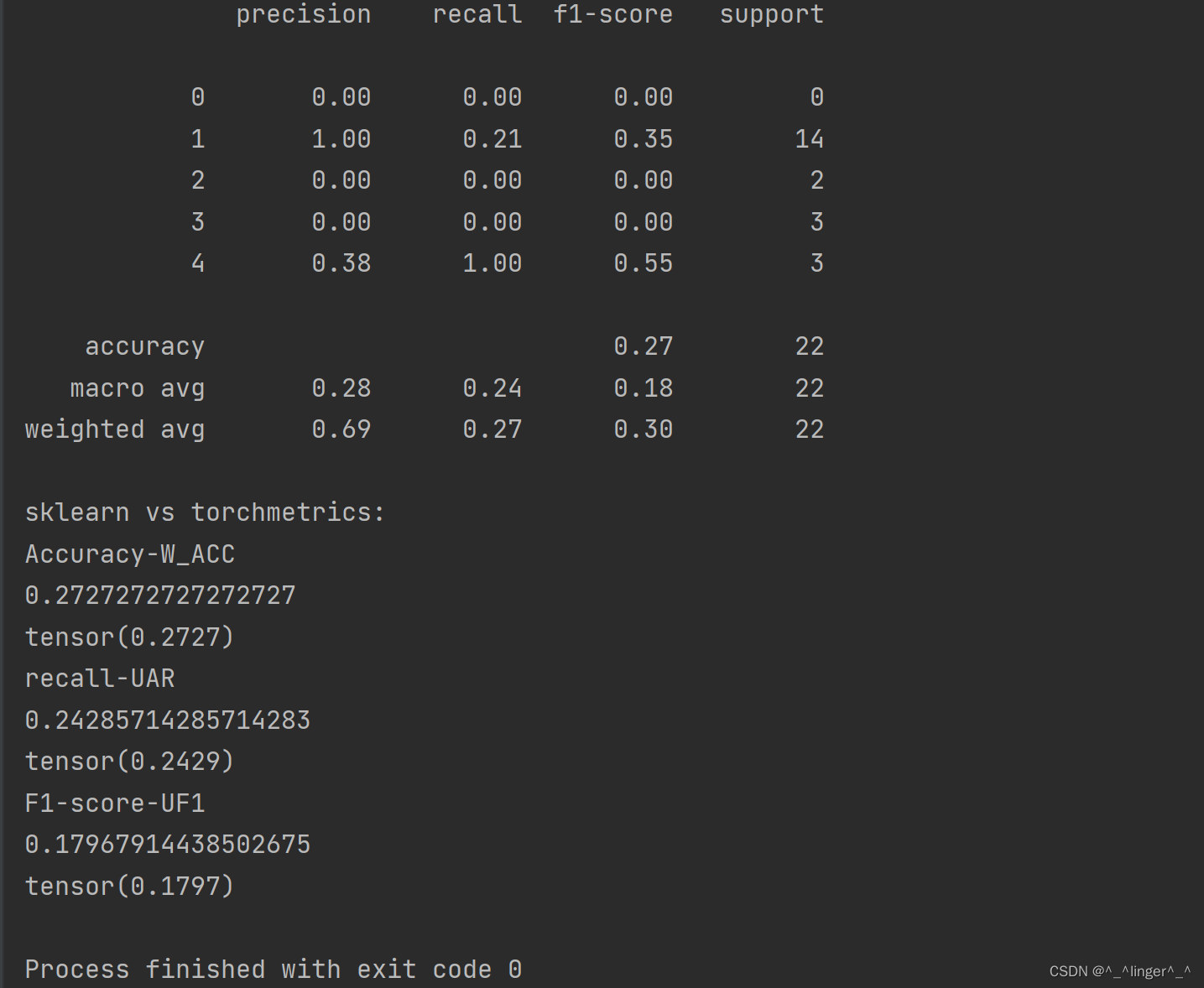

target_list = [ 1 , 1 , 4 , 1 , 3 , 1 , 2 , 1 , 1 , 1 , 4 , 1 , 3 , 1 , 1 , 1 , 4 , 1 , 3 , 1 , 2 , 1 ] print( classification_report( target_list, pred_list))

test_acc_en = Accuracy( task= "multiclass" ,num_classes= 5 , threshold = 1 . / 5 , average = "weighted" )

test_rcl_en = Recall( task= "multiclass" ,num_classes= 5 , threshold = 1 . / 5 , average = "macro" )

test_f1_en = F1Score( task= "multiclass" , num_classes = 5 , threshold = 1 . / 5 , average = "macro" ) preds = torch.tensor( pred_list)

target = torch.tensor( target_list)

print( "sklearn vs torchmetrics: " )

print( "Accuracy-W_ACC" )

print( accuracy_score( y_true= target_list, y_pred = pred_list))

print( test_acc_en( preds, target)) print( "recall-UAR" )

print( recall_score( y_true= target_list,y_pred= pred_list,average= 'macro' ))

print( test_rcl_en( preds, target)) print( "F1-score-UF1" )

print( f1_score( y_true= target_list, y_pred = pred_list, average = 'macro' ))

print( test_f1_en( preds, target))
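To make the definitions concrete, here is a minimal, dependency-free sketch that recomputes WA, UAR and UF1 for the same two lists. The helper names (`per_class_counts`, `safe_div`, `default_labels`, `wa`, `uar`, `uf1`) are hypothetical and not part of sklearn or torchmetrics. As an assumption chosen to mirror sklearn's default behaviour, it averages over every label that appears in either the targets or the predictions and scores 0/0 ratios as 0.

```python
# Minimal from-scratch sketch of WA, UAR and UF1 (hypothetical helpers, stdlib only).


def per_class_counts(y_true, y_pred, labels):
    """Return {label: (tp, fp, fn)} for each label in `labels`."""
    counts = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        counts[c] = (tp, fp, fn)
    return counts


def safe_div(num, den):
    # Treat ill-defined 0/0 ratios as 0
    return num / den if den else 0.0


def default_labels(y_true, y_pred):
    # Assumption: average over the union of labels seen in targets or predictions
    return sorted(set(y_true) | set(y_pred))


def wa(y_true, y_pred):
    # Weighted accuracy = fraction of all samples predicted correctly
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def uar(y_true, y_pred, labels=None):
    # Unweighted average recall = plain mean of per-class TP / (TP + FN)
    labels = default_labels(y_true, y_pred) if labels is None else labels
    counts = per_class_counts(y_true, y_pred, labels)
    recalls = [safe_div(tp, tp + fn) for tp, _, fn in counts.values()]
    return sum(recalls) / len(recalls)


def uf1(y_true, y_pred, labels=None):
    # Unweighted F1 = plain mean of per-class F1; 2TP / (2TP + FP + FN)
    # equals 2PR / (P + R) whenever TP > 0, and is 0 when TP == 0
    labels = default_labels(y_true, y_pred) if labels is None else labels
    counts = per_class_counts(y_true, y_pred, labels)
    f1s = [safe_div(2 * tp, 2 * tp + fp + fn) for tp, fp, fn in counts.values()]
    return sum(f1s) / len(f1s)


pred_list = [2, 0, 4, 1, 4, 2, 4, 2, 2, 0, 4, 1, 4, 2, 2, 0, 4, 1, 4, 2, 4, 2]
target_list = [1, 1, 4, 1, 3, 1, 2, 1, 1, 1, 4, 1, 3, 1, 1, 1, 4, 1, 3, 1, 2, 1]

print("WA :", wa(target_list, pred_list))   # 6/22 ≈ 0.2727
print("UAR:", uar(target_list, pred_list))  # 17/70 ≈ 0.2429
print("UF1:", uf1(target_list, pred_list))  # 168/935 ≈ 0.1797
```

In this data class 0 is only ever predicted, so it enters UAR and UF1 with a score of 0 and pulls both averages down. Averaging over the true classes only (sklearn's `recall_score` and `f1_score` accept a `labels` argument for this; the sketch's `labels` parameter plays the same role) gives a higher UAR of (3/14 + 1)/4 = 17/56 ≈ 0.304.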

