中草药药材图像识别相关的实践在前文中已有对应的实践了,感兴趣的话可以自行移步阅读即可:

《python基于轻量级GhostNet模型开发构建23种常见中草药图像识别系统》

《基于轻量级MnasNet模型开发构建40种常见中草药图像识别系统》

在上一篇文章中,我们提到在自主开发构建大规模的中草药数据集,本文就是建立在这样的背景基础上的,目前已经构建了包含908种中草药数据的基础数据集,整体如下:

首先看下整体效果:

类别实例如下:

车前草

金银花

蒲公英

鸭跖草

垂盆草

酸浆

苍耳

马兰头

荠菜

小蓟

水芹菜

天胡荽

酢浆草

婆婆指甲菜

漆姑草

通泉草

波斯婆婆纳

泽漆

狗尾巴草

旋复花

黄花菜

小飞蓬

金线草

鸭舌草

兰花参

柴胡

麦冬

蛇莓

玉竹

桑白皮

曼陀罗

鬼针草

苦菜

葵菜

荨麻

龙葵

蒺藜

何首乌

野薄荷

棕榈

夏枯草

绞股蓝

紫云英

七星草

芍药

贝母

当归

丹皮

柴胡

车前草

紫苏

益母草

枇杷叶

荷叶

大青叶

艾叶

野菊花

金银花

月季花

旋覆花

莲子

菟丝子

银杏

茴香

天麻

葛根

桔梗

黄柏

杜仲

厚朴

全蝎

地龙

土鳖虫

蟋蟀

贝壳

珍珠

磁石

麻黄

桂枝

生姜

香薷

紫苏叶

藁本

辛夷

防风

白芷

荆芥

羌活

苍耳子

薄荷

牛蒡子

蔓荆子

蝉蜕

桑叶

葛根

柴胡

升麻

淡豆豉

知母

栀子

夏枯草

芦根

天花粉

淡竹叶

黄芩

黄连

黄柏

龙胆

苦参

犀角

生地黄

玄参

牡丹皮

赤芍

金银花

连翘

鱼腥草

熟地黄

党参

桂枝

山药

枸杞子

车前草

紫苏

大青叶

荷叶

青皮薄荷

柴胡

香附

当归

黄芪

西洋参

茯苓

苍术

艾叶

老姜

当归

香附

益母草

玫瑰花

桑枝

薄荷

木瓜

鸡血藤

女贞子

莲子

薏米

百合

人参

太子参

鹿茸

龟板

鳖甲

杏仁

桔梗

陈皮

丹参

川芎

旱莲草

车前子

大黄

夏枯草

连翘

金银花

桂枝

柴胡

香附

薄荷

青皮

香橼

佛手

熟地

当归

川芎

白芍

阿胶

丹参

三七

桃仁

红花

元胡

生地

石斛

沙参

麦冬

巴戟天

锁阳

火炭母

地胆草

崩大碗

绞股蓝

布荆

八角枫

八角茴香

八角金盘

八角莲

八角莲叶

目前数据总类别共有908种,来自我们不同成员的汇总,后续有新的类目可以持续进行扩充累积。

考虑到如此大类目的图像识别,本文选择的是经典的ResNet模型,残差网络(ResNet)是一种深度学习架构,用于解决深度神经网络中的梯度消失和梯度爆炸问题。它引入了残差块(residual block)的概念,使网络能够更轻松地学习恒等映射,从而提高网络的训练效果。

ResNet的构建原理如下:

-

基础模块:ResNet的基础模块是残差块。每个残差块由两个卷积层组成,每个卷积层后面跟着一个批量归一化层(batch normalization)和一个激活函数(通常是ReLU)。这两个卷积层的输出通过跳跃连接(skip connection)相加,然后再通过激活函数。这个跳跃连接允许信息直接流过残差块,从而避免了信息在网络中丢失或衰减。

-

堆叠残差块:ResNet通过堆叠多个残差块来构建更深的网络。这些残差块可以有不同的层数和滤波器数量,以适应不同的任务和网络深度需求。

-

池化层和全连接层:在堆叠残差块之后,可以添加池化层来减小特征图的尺寸,并通过全连接层对最终的特征进行分类或回归。

ResNet的优点:

-

解决梯度消失和梯度爆炸问题:由于残差块中的跳跃连接,ResNet可以更轻松地训练深层网络,避免了梯度在反向传播过程中的消失或爆炸。

-

提高网络的训练效果:残差块允许网络学习恒等映射,即将输入直接传递到输出。这使得网络可以更容易地学习残差部分,从而提高了网络的训练效果。

-

可以构建非常深的网络:由于残差连接的存在,ResNet可以堆叠更多的残差块,构建非常深的网络。这有助于提取更复杂的特征,从而提高模型的表达能力。

ResNet的缺点:

-

参数较多:由于ResNet的深度,网络中存在大量的参数,这会增加模型的复杂度和训练时间。

-

训练困难:尽管ResNet可以解决梯度消失和梯度爆炸问题,但在训练较深的ResNet时,仍然可能出现其他训练困难,如梯度退化问题和过拟合。

ResNet通过引入残差块和跳跃连接的方式,解决了深度神经网络中的梯度消失和梯度爆炸问题,并提高了网络的训练效果。

这里给出对应的代码实现:

# coding=utf-8

from keras.models import Model

from keras.layers import (Input,Dense,BatchNormalization,Conv2D,MaxPooling2D,AveragePooling2D,ZeroPadding2D,

)

from keras.layers import add, Flatten

from keras.optimizers import SGD

import numpy as npseed = 7

np.random.seed(seed)def Conv2d_BN(x, nb_filter, kernel_size, strides=(1, 1), padding="same", name=None):if name is not None:bn_name = name + "_bn"conv_name = name + "_conv"else:bn_name = Noneconv_name = Nonex = Conv2D(nb_filter,kernel_size,padding=padding,strides=strides,activation="relu",name=conv_name,)(x)x = BatchNormalization(axis=3, name=bn_name)(x)return xdef Conv_Block(inpt, nb_filter, kernel_size, strides=(1, 1), with_conv_shortcut=False):x = Conv2d_BN(inpt,nb_filter=nb_filter[0],kernel_size=(1, 1),strides=strides,padding="same",)x = Conv2d_BN(x, nb_filter=nb_filter[1], kernel_size=(3, 3), padding="same")x = Conv2d_BN(x, nb_filter=nb_filter[2], kernel_size=(1, 1), padding="same")if with_conv_shortcut:shortcut = Conv2d_BN(inpt, nb_filter=nb_filter[2], strides=strides, kernel_size=kernel_size)x = add([x, shortcut])return xelse:x = add([x, inpt])return xdef ResNet():inpt = Input(shape=(224, 224, 3))x = ZeroPadding2D((3, 3))(inpt)x = Conv2d_BN(x, nb_filter=64, kernel_size=(7, 7), strides=(2, 2), padding="valid")x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding="same")(x)x = Conv_Block(x,nb_filter=[64, 64, 256],kernel_size=(3, 3),strides=(1, 1),with_conv_shortcut=True,)x = Conv_Block(x, nb_filter=[64, 64, 256], kernel_size=(3, 3))x = Conv_Block(x, nb_filter=[64, 64, 256], kernel_size=(3, 3))x = Conv_Block(x,nb_filter=[128, 128, 512],kernel_size=(3, 3),strides=(2, 2),with_conv_shortcut=True,)x = Conv_Block(x, nb_filter=[128, 128, 512], kernel_size=(3, 3))x = Conv_Block(x, nb_filter=[128, 128, 512], kernel_size=(3, 3))x = Conv_Block(x, nb_filter=[128, 128, 512], kernel_size=(3, 3))x = Conv_Block(x,nb_filter=[256, 256, 1024],kernel_size=(3, 3),strides=(2, 2),with_conv_shortcut=True,)x = Conv_Block(x, nb_filter=[256, 256, 1024], kernel_size=(3, 3))x = Conv_Block(x, nb_filter=[256, 256, 1024], kernel_size=(3, 3))x = Conv_Block(x, nb_filter=[256, 256, 1024], kernel_size=(3, 3))x = Conv_Block(x, nb_filter=[256, 256, 1024], kernel_size=(3, 3))x = Conv_Block(x, nb_filter=[256, 256, 1024], kernel_size=(3, 3))x = Conv_Block(x,nb_filter=[512, 512, 2048],kernel_size=(3, 3),strides=(2, 2),with_conv_shortcut=True,)x = Conv_Block(x, nb_filter=[512, 512, 2048], kernel_size=(3, 3))x = Conv_Block(x, nb_filter=[512, 512, 2048], kernel_size=(3, 3))x = AveragePooling2D(pool_size=(7, 7))(x)x = Flatten()(x)x = Dense(908, activation="softmax")(x)model = Model(inputs=inpt, outputs=x)sgd = SGD(decay=0.0001, momentum=0.9)model.compile(loss="categorical_crossentropy", optimizer=sgd, metrics=["accuracy"])model.summary()

上面是基于Keras框架实现的,当然了也可以基于PyTorch框架实现,如下所示:

import torch

from torch import Tensor

import torch.nn as nn

import numpy as np

from torchvision._internally_replaced_utils import load_state_dict_from_url

from typing import Type, Any, Callable, Union, List, Optionaldef conv3x3(in_planes: int, out_planes: int, stride: int = 1, groups: int = 1, dilation: int = 1

) -> nn.Conv2d:return nn.Conv2d(in_planes,out_planes,kernel_size=3,stride=stride,padding=dilation,groups=groups,bias=False,dilation=dilation,)def conv1x1(in_planes: int, out_planes: int, stride: int = 1) -> nn.Conv2d:return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)class BasicBlock(nn.Module):expansion: int = 1def __init__(self,inplanes: int,planes: int,stride: int = 1,downsample: Optional[nn.Module] = None,groups: int = 1,base_width: int = 64,dilation: int = 1,norm_layer: Optional[Callable[..., nn.Module]] = None,) -> None:super(BasicBlock, self).__init__()if norm_layer is None:norm_layer = nn.BatchNorm2dif groups != 1 or base_width != 64:raise ValueError("BasicBlock only supports groups=1 and base_width=64")if dilation > 1:raise NotImplementedError("Dilation > 1 not supported in BasicBlock")self.conv1 = conv3x3(inplanes, planes, stride)self.bn1 = norm_layer(planes)self.relu = nn.ReLU(inplace=True)self.conv2 = conv3x3(planes, planes)self.bn2 = norm_layer(planes)self.downsample = downsampleself.stride = stridedef forward(self, x: Tensor) -> Tensor:identity = xout = self.conv1(x)out = self.bn1(out)out = self.relu(out)out = self.conv2(out)out = self.bn2(out)if self.downsample is not None:identity = self.downsample(x)out += identityout = self.relu(out)return outclass Bottleneck(nn.Module):expansion: int = 4def __init__(self,inplanes: int,planes: int,stride: int = 1,downsample: Optional[nn.Module] = None,groups: int = 1,base_width: int = 64,dilation: int = 1,norm_layer: Optional[Callable[..., nn.Module]] = None,) -> None:super(Bottleneck, self).__init__()if norm_layer is None:norm_layer = nn.BatchNorm2dwidth = int(planes * (base_width / 64.0)) * groups# Both self.conv2 and self.downsample layers downsample the input when stride != 1self.conv1 = conv1x1(inplanes, width)self.bn1 = norm_layer(width)self.conv2 = conv3x3(width, width, stride, groups, dilation)self.bn2 = norm_layer(width)self.conv3 = conv1x1(width, planes * self.expansion)self.bn3 = norm_layer(planes * self.expansion)self.relu = nn.ReLU(inplace=True)self.downsample = downsampleself.stride = stridedef forward(self, x: Tensor) -> Tensor:identity = xout = self.conv1(x)out = self.bn1(out)out = self.relu(out)out = self.conv2(out)out = self.bn2(out)out = self.relu(out)out = self.conv3(out)out = self.bn3(out)if self.downsample is not None:identity = self.downsample(x)out += identityout = self.relu(out)return outclass ResNet(nn.Module):def __init__(self,block: Type[Union[BasicBlock, Bottleneck]],layers: List[int],num_classes: int = 1000,zero_init_residual: bool = False,groups: int = 1,width_per_group: int = 64,replace_stride_with_dilation: Optional[List[bool]] = None,norm_layer: Optional[Callable[..., nn.Module]] = None,) -> None:super(ResNet, self).__init__()if norm_layer is None:norm_layer = nn.BatchNorm2dself._norm_layer = norm_layerself.inplanes = 64self.dilation = 1if replace_stride_with_dilation is None:replace_stride_with_dilation = [False, False, False]if len(replace_stride_with_dilation) != 3:raise ValueError("replace_stride_with_dilation should be None ""or a 3-element tuple, got {}".format(replace_stride_with_dilation))self.groups = groupsself.base_width = width_per_groupself.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)self.bn1 = norm_layer(self.inplanes)self.relu = nn.ReLU(inplace=True)self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)self.layer1 = self._make_layer(block, 64, layers[0])self.layer2 = self._make_layer(block, 128, layers[1], stride=2, dilate=replace_stride_with_dilation[0])self.layer3 = self._make_layer(block, 256, layers[2], stride=2, dilate=replace_stride_with_dilation[1])self.layer4 = self._make_layer(block, 512, layers[3], stride=2, dilate=replace_stride_with_dilation[2])self.avgpool = nn.AdaptiveAvgPool2d((1, 1))self.fc = nn.Linear(512 * block.expansion, num_classes)for m in self.modules():if isinstance(m, nn.Conv2d):nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):nn.init.constant_(m.weight, 1)nn.init.constant_(m.bias, 0)if zero_init_residual:for m in self.modules():if isinstance(m, Bottleneck):nn.init.constant_(m.bn3.weight, 0) # type: ignore[arg-type]elif isinstance(m, BasicBlock):nn.init.constant_(m.bn2.weight, 0) # type: ignore[arg-type]def _make_layer(self,block: Type[Union[BasicBlock, Bottleneck]],planes: int,blocks: int,stride: int = 1,dilate: bool = False,) -> nn.Sequential:norm_layer = self._norm_layerdownsample = Noneprevious_dilation = self.dilationif dilate:self.dilation *= stridestride = 1if stride != 1 or self.inplanes != planes * block.expansion:downsample = nn.Sequential(conv1x1(self.inplanes, planes * block.expansion, stride),norm_layer(planes * block.expansion),)layers = []layers.append(block(self.inplanes,planes,stride,downsample,self.groups,self.base_width,previous_dilation,norm_layer,))self.inplanes = planes * block.expansionfor _ in range(1, blocks):layers.append(block(self.inplanes,planes,groups=self.groups,base_width=self.base_width,dilation=self.dilation,norm_layer=norm_layer,))return nn.Sequential(*layers)def _forward_impl(self, x: Tensor, need_fea=False) -> Tensor:if need_fea:features, features_fc = self.forward_features(x, need_fea)x = self.fc(features_fc)return features, features_fc, xelse:x = self.forward_features(x)x = self.fc(x)return xdef forward(self, x: Tensor, need_fea=False) -> Tensor:return self._forward_impl(x, need_fea)def forward_features(self, x, need_fea=False):x = self.conv1(x)x = self.bn1(x)x = self.relu(x)x = self.maxpool(x)if need_fea:x1 = self.layer1(x)x2 = self.layer2(x1)x3 = self.layer3(x2)x4 = self.layer4(x3)x = self.avgpool(x4)x = torch.flatten(x, 1)return [x1, x2, x3, x4], xelse:x = self.layer1(x)x = self.layer2(x)x = self.layer3(x)x = self.layer4(x)x = self.avgpool(x)x = torch.flatten(x, 1)return xdef cam_layer(self):return self.layer4def _resnet(block: Type[Union[BasicBlock, Bottleneck]],layers: List[int],pretrained: bool,progress: bool,**kwargs: Any

) -> ResNet:model = ResNet(block, layers, **kwargs)if pretrained:state_dict = load_state_dict_from_url("https://download.pytorch.org/models/resnet50-0676ba61.pth",progress=progress,)model_dict = model.state_dict()weight_dict = {}for k, v in state_dict.items():if k in model_dict:if np.shape(model_dict[k]) == np.shape(v):weight_dict[k] = vpretrained_dict = weight_dictmodel_dict.update(pretrained_dict)model.load_state_dict(model_dict)return modeldef resnet50(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:return _resnet(Bottleneck, [3, 4, 6, 3], pretrained, progress, **kwargs)

可以根据自己的喜好,直接集成到自己的项目中进行使用都是可以的。

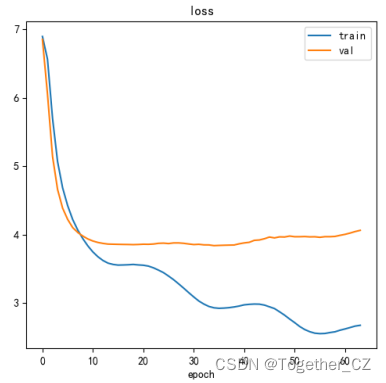

整体训练loss曲线如下所示:

准确率曲线如下所示:

目前仅仅从零开始训练了60多个epoch,效果不是很理想,后续计划基于预训练的模型权重来进行微调训练提升当前的精度。

)

与Android系统 各自特点 架构对比 各自优势)

)

报错:unknown error: unsupported protocol)

单目初始化)

-window、distinct、join等)