Introduction to Audio and Video

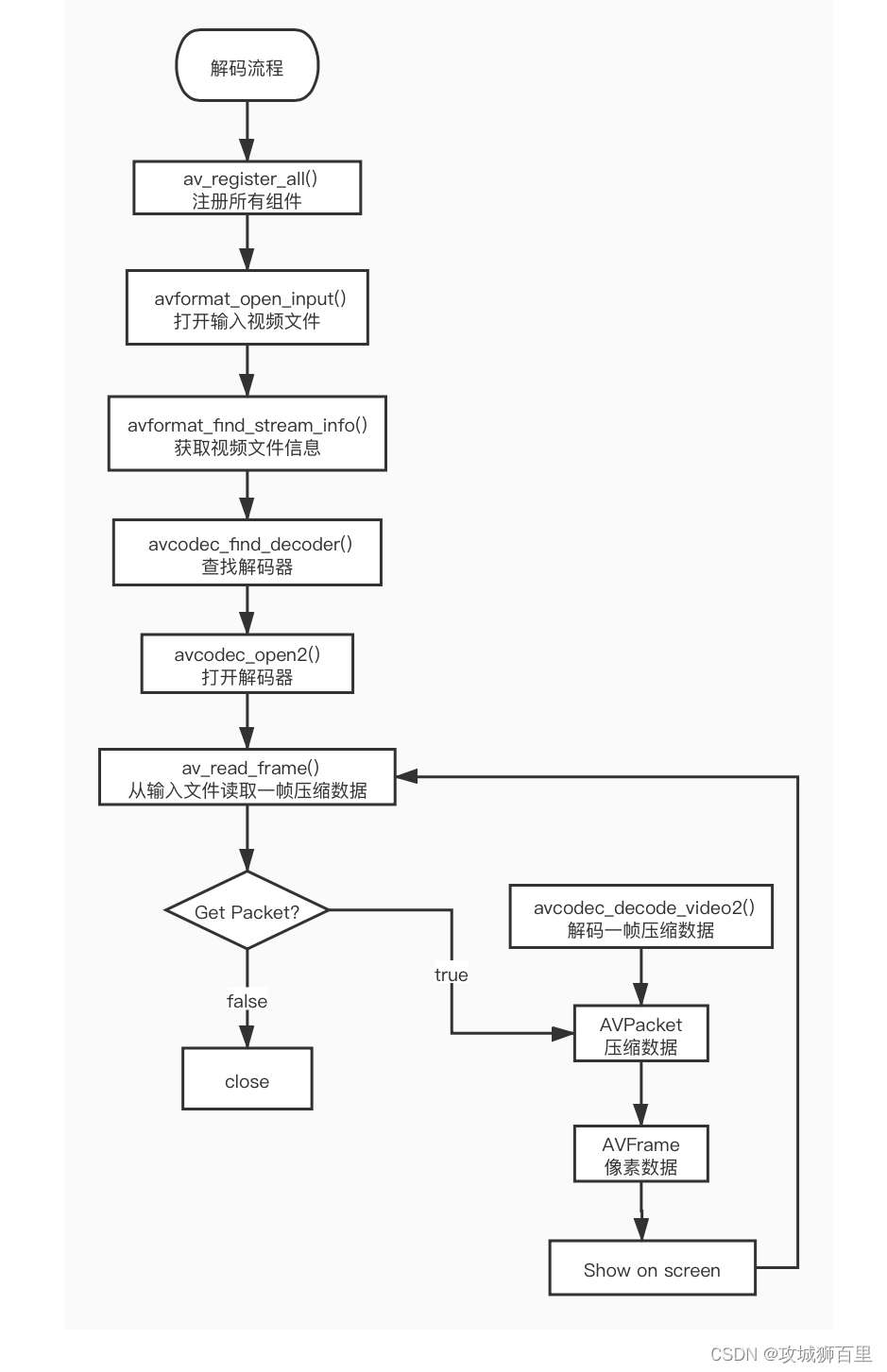

Audio/Video Decoding Flow

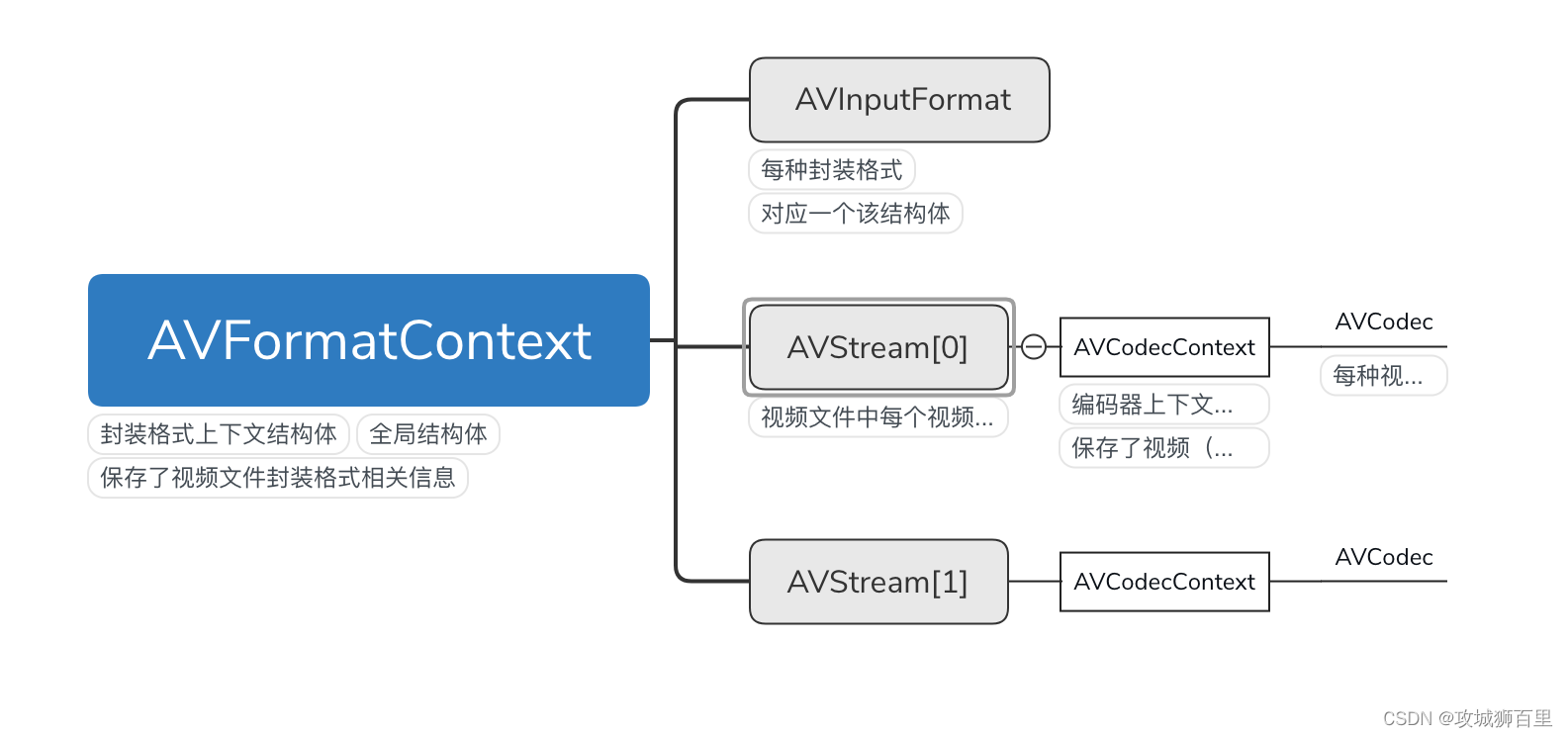

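FFmpeg Decoding Data Structures

- AVFormatContext: the container-format context struct. It is the top-level struct and holds information about the media file's container format

- AVInputFormat: one such struct exists for each container format

- AVStream[0]: each video (or audio) stream in the media file corresponds to one such struct

- AVCodecContext: the codec context struct, which holds the encoding and decoding information for a video (or audio) stream

- AVCodec: one such struct exists for each video (or audio) codec, for example the H.264 decoder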

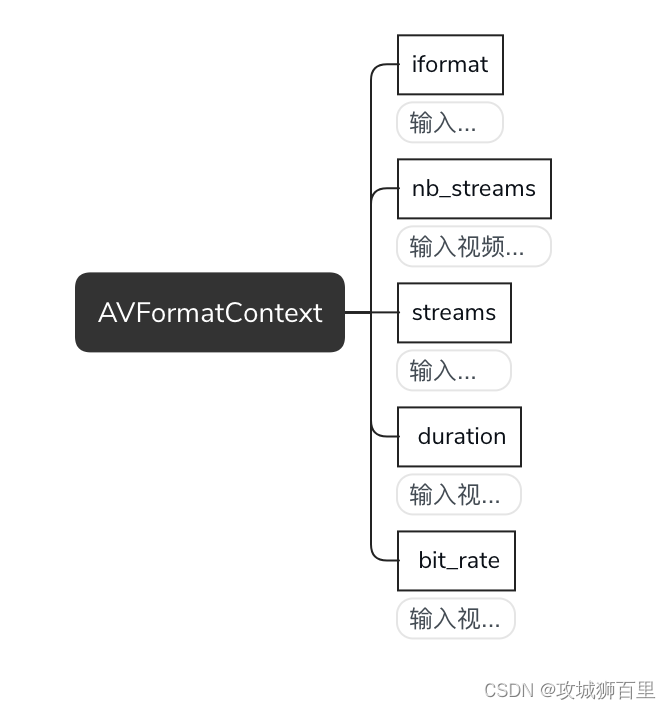

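AVFormatContext Fields

- iformat: the AVInputFormat of the input video

- nb_streams: the number of AVStream entries in the input video

- streams: the AVStream[] array of the input video

- duration: the duration of the input video, in microseconds

- bit_rate: the bit rate of the input video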
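Because `duration` is expressed in microseconds, a player normally converts it to seconds before displaying it. The following is a minimal sketch of that arithmetic with no FFmpeg dependency. `kTimeBase` is an assumed stand-in for FFmpeg's `AV_TIME_BASE`, and `durationToSeconds` is a hypothetical helper name, not something taken from the original project:

```cpp
#include <cstdint>

// Assumed stand-in for FFmpeg's AV_TIME_BASE (1,000,000 units per second).
constexpr int64_t kTimeBase = 1000000;

// Convert an AVFormatContext-style duration (microseconds) to whole seconds.
// Non-positive values are treated as "unknown" (for example a live stream)
// and map to 0.
inline int durationToSeconds(int64_t durationUs) {
    if (durationUs <= 0) {
        return 0;
    }
    return static_cast<int>(durationUs / kTimeBase);
}
```

A result of 0 can then be used by the UI as a signal to hide the seek bar, which is how the Java code later in this article treats a zero duration.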

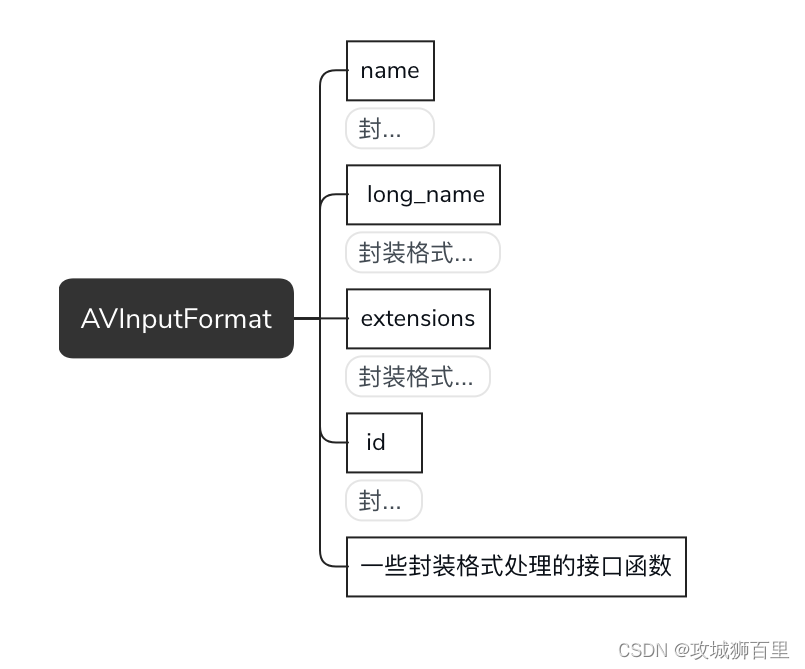

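AVInputFormat Fields

- name: the short name of the container format

- long_name: the long (descriptive) name of the container format

- extensions: the file extensions of the container format

- id: the container format ID

- Several interface functions for handling the container format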

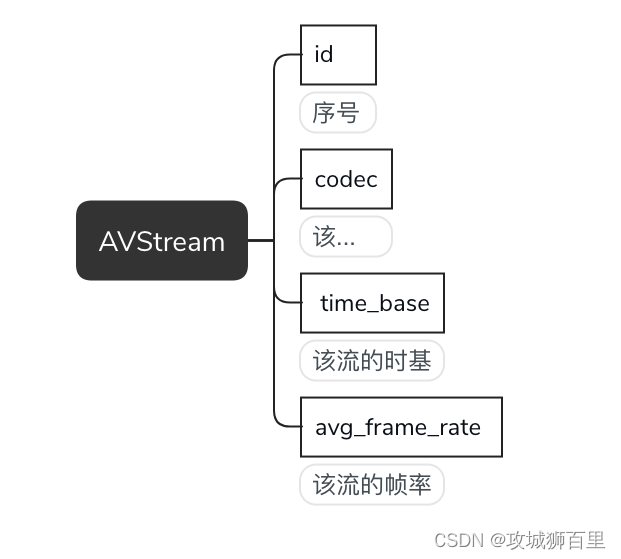

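AVStream Fields

- id: the stream's sequence number

- codec: the AVCodecContext of this stream (deprecated in newer FFmpeg versions, which expose codecpar instead, as the code later in this article does)

- time_base: the time base of this stream

- avg_frame_rate: the average frame rate of this stream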
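Timestamps in a stream are counted in units of `time_base`, which is a fraction of a second, so converting a timestamp to seconds means multiplying by that fraction. Here is a minimal sketch under stated assumptions: `Rational` is a hypothetical stand-in for FFmpeg's `AVRational`, and both helper functions are illustrative names rather than FFmpeg APIs:

```cpp
#include <cstdint>

// Hypothetical stand-in for FFmpeg's AVRational (numerator / denominator).
struct Rational {
    int num;
    int den;
};

// Convert a timestamp counted in time_base units into seconds.
// Returns 0.0 for an invalid time base instead of dividing by zero.
inline double timestampToSeconds(int64_t ts, Rational timeBase) {
    if (timeBase.den == 0) {
        return 0.0;
    }
    return static_cast<double>(ts) * timeBase.num / timeBase.den;
}

// Express a rational such as avg_frame_rate as a floating-point value.
inline double rationalToDouble(Rational r) {
    return r.den == 0 ? 0.0 : static_cast<double>(r.num) / r.den;
}
```

For example, with a time base of 1/90000, a timestamp of 180000 corresponds to 2 seconds.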

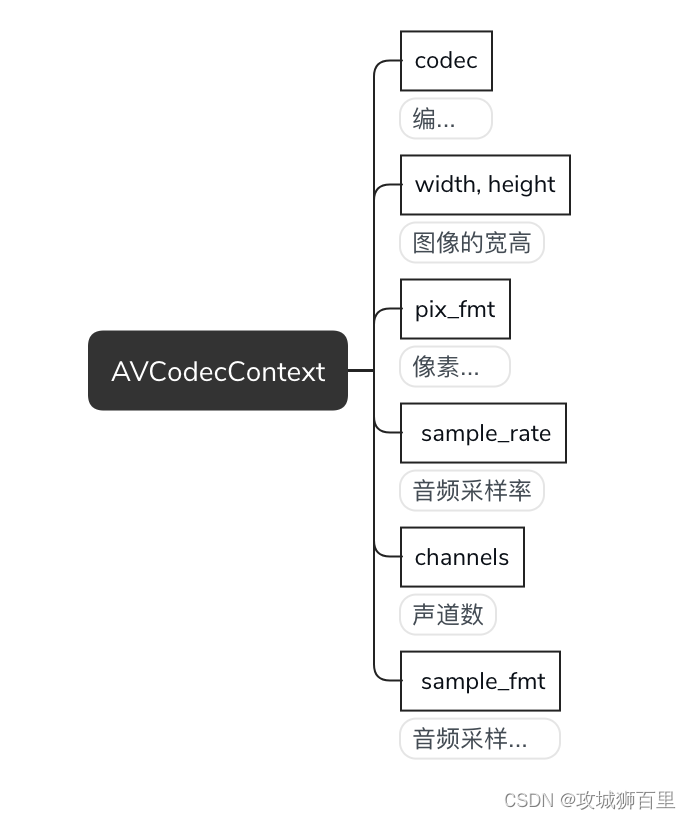

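AVCodecContext Fields

- codec: the AVCodec of the encoder or decoder

- width, height: the width and height of the image

- pix_fmt: the pixel format

- sample_rate: the audio sample rate

- channels: the number of audio channels

- sample_fmt: the audio sample format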

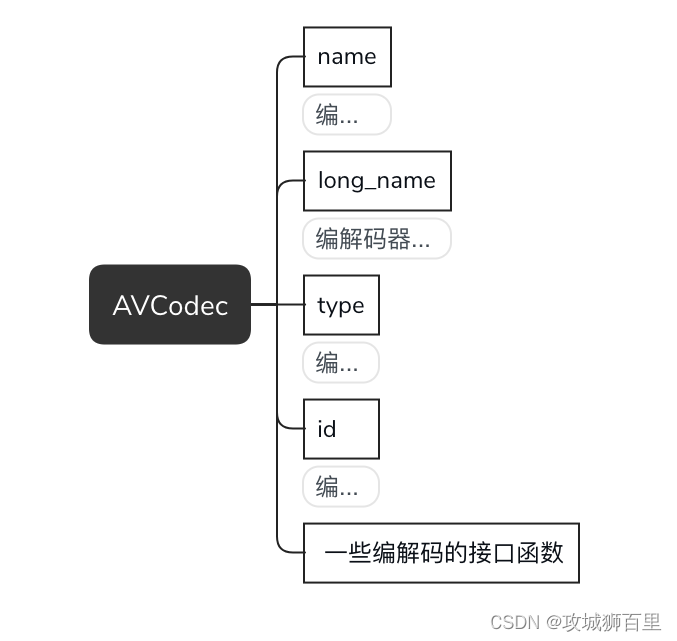

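AVCodec Fields

- name: the short name of the codec

- long_name: the long (descriptive) name of the codec

- type: the codec type

- id: the codec ID

- Several interface functions for encoding and decoding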

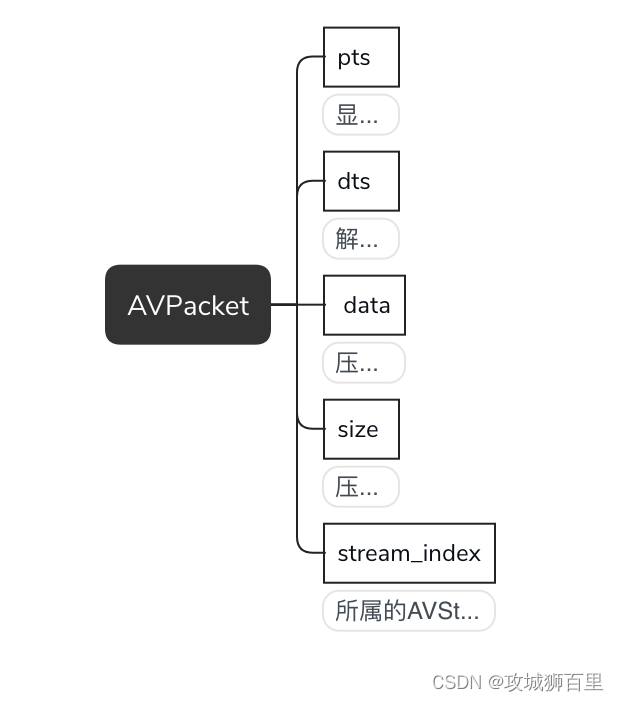

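AVPacket Fields

- pts: the presentation timestamp

- dts: the decoding timestamp

- data: the compressed (encoded) data

- size: the size of the compressed data

- stream_index: the index of the AVStream the packet belongs to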
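The difference between `pts` and `dts` matters when a stream contains B-frames: packets arrive in decoding order, which can differ from the order in which frames are displayed. The sketch below illustrates that relationship using a simplified, hypothetical `PacketInfo` struct (not the real `AVPacket`) and reorders packets by `pts`:

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Hypothetical, simplified stand-in for the timestamp fields of an AVPacket.
struct PacketInfo {
    int64_t pts;  // presentation timestamp: when the frame should be shown
    int64_t dts;  // decoding timestamp: when the packet should be decoded
    char type;    // 'I', 'P' or 'B', for illustration only
};

// Return the packets re-ordered by pts, that is, in display order.
inline std::vector<PacketInfo> inDisplayOrder(std::vector<PacketInfo> packets) {
    std::stable_sort(packets.begin(), packets.end(),
                     [](const PacketInfo &a, const PacketInfo &b) {
                         return a.pts < b.pts;
                     });
    return packets;
}
```

If packets are decoded in the order I, P, B, B with `pts` values 1, 4, 2, 3, the display order becomes I, B, B, P. A real decoder performs this reordering internally; the sketch only shows why the two timestamps exist.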

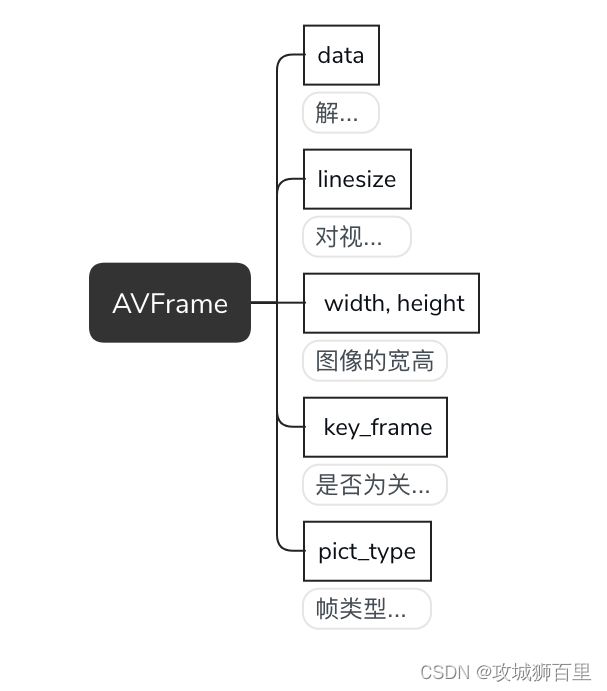

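AVFrame Fields

- data: the decoded image pixel data (or audio sample data)

- linesize: for video, the size of one row of pixels in the image; for audio, the size of the whole audio frame

- width, height: the width and height of the image

- key_frame: whether the frame is a key frame

- pict_type: the frame type (video only), for example I, P, or B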
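One practical consequence of `linesize` is that a row in memory can be wider than the visible image, because decoders often pad rows for alignment. Pixel data therefore has to be copied row by row rather than in one block. The following is a minimal sketch of that technique; `copyPlane` is a hypothetical helper that works on plain byte buffers rather than on a real `AVFrame`:

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy one image plane whose rows are `srcLinesize` bytes apart into a tightly
// packed buffer of `rowBytes` bytes per row. `srcLinesize` may be larger than
// `rowBytes` because of row padding.
inline std::vector<uint8_t> copyPlane(const uint8_t *src, int srcLinesize,
                                      int rowBytes, int height) {
    std::vector<uint8_t> dst(static_cast<std::size_t>(rowBytes) * height);
    for (int y = 0; y < height; ++y) {
        std::memcpy(dst.data() + static_cast<std::size_t>(y) * rowBytes,
                    src + static_cast<std::size_t>(y) * srcLinesize,
                    static_cast<std::size_t>(rowBytes));
    }
    return dst;
}
```

The same row-by-row pattern applies when the destination has its own stride, such as a window buffer: advance the source by its line size and the destination by its stride.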

Audio/Video in Practice

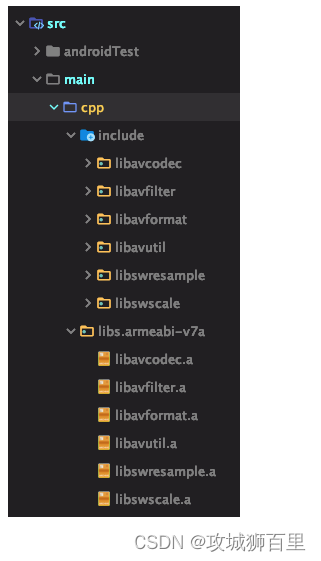

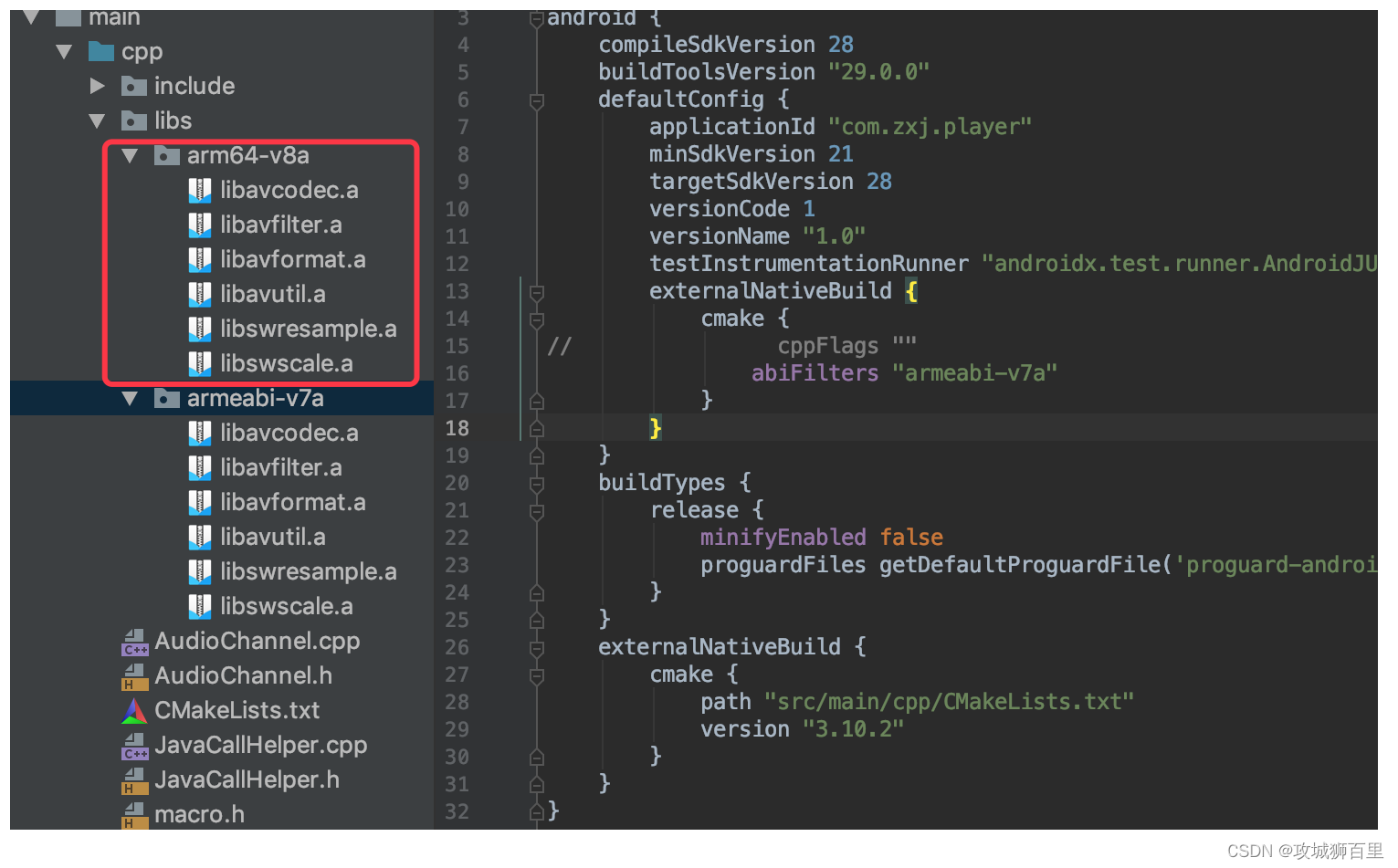

Copy the compiled FFmpeg libraries into the project

Write CMakeLists.txt

    cmake_minimum_required(VERSION 3.4.1)

    file(GLOB source_file *.cpp)
    message("source_file = ${source_file}")

    add_library(z-player SHARED ${source_file})

    include_directories(${CMAKE_SOURCE_DIR}/include)

    set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -L${CMAKE_SOURCE_DIR}/libs/${CMAKE_ANDROID_ARCH_ABI}")

    find_library(log-lib log)

    target_link_libraries(z-player
            # Option 1: use -Wl to ignore the link order
            # -Wl,--start-group  # ignore errors caused by the link order
            # avcodec avfilter avformat avutil swresample swscale
            # -Wl,--end-group
            # Option 2: adjust the order; avformat must come before avcodec
            avformat avcodec avfilter avutil swresample swscale
            ${log-lib}
            z)

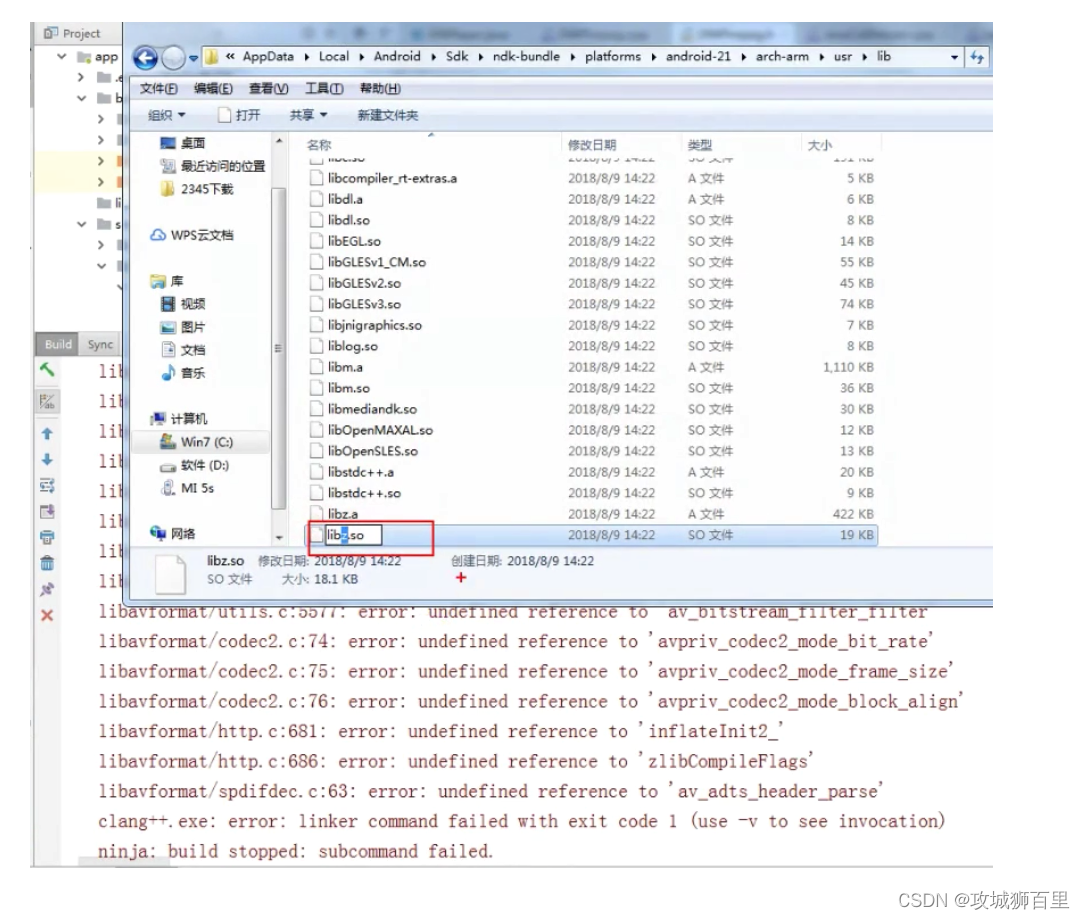

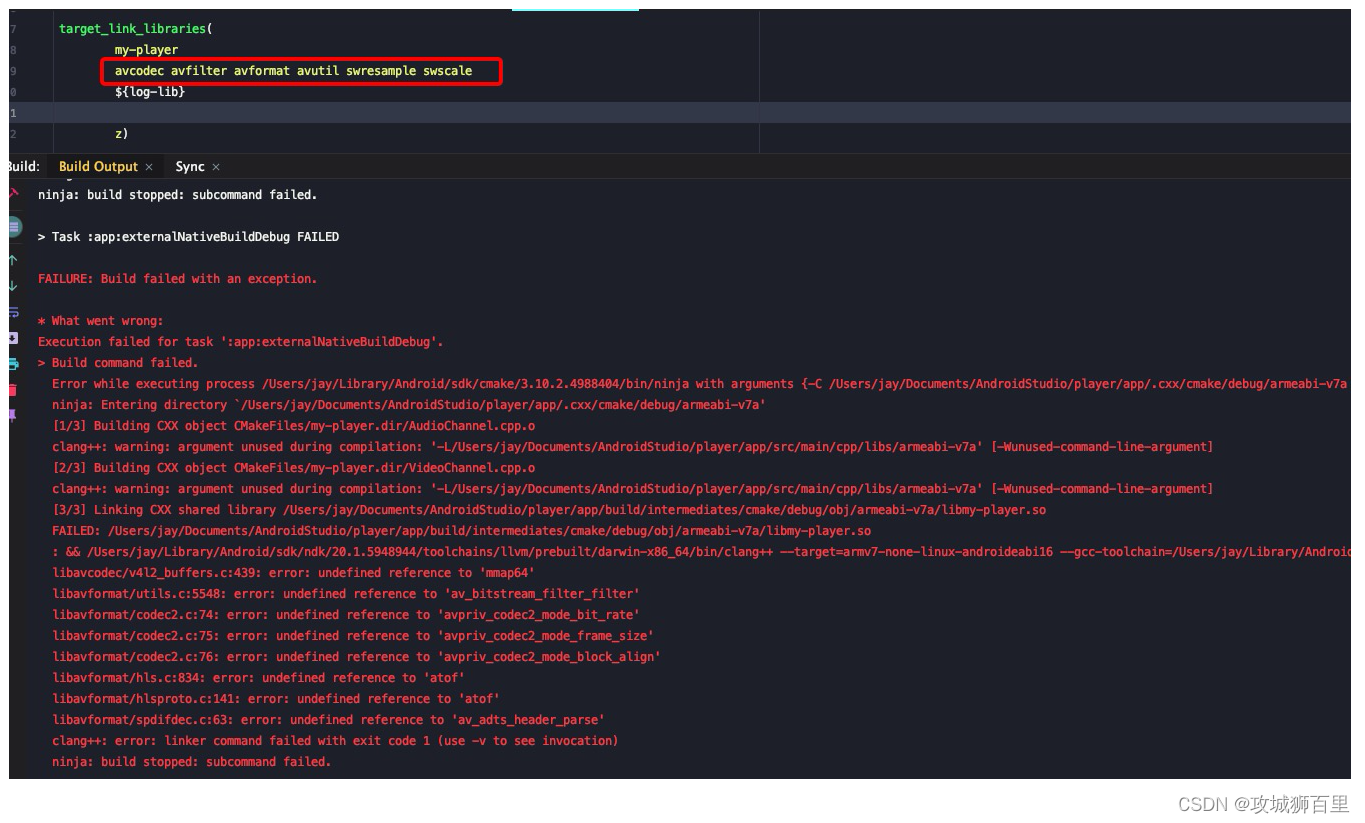

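The target_link_libraries call here involves two issues:

1. FFmpeg depends on libz.so, as the figure shows, so z must be added to target_link_libraries; otherwise the build fails with an error.

2. The order in which the FFmpeg libraries are listed matters. Listing them in the following order causes an error:

avcodec avfilter avformat avutil swresample swscale

The linker resolves static libraries from left to right, and avformat uses symbols from avcodec, so avformat has to be listed first. There are two fixes.

The first: put avformat before avcodec:

avformat avcodec avfilter avutil swresample swscale

The second: use -Wl to make the linker ignore the order:

-Wl,--start-group # ignore errors caused by the link order

avcodec avfilter avformat avutil swresample swscale

-Wl,--end-group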

Writing the Code

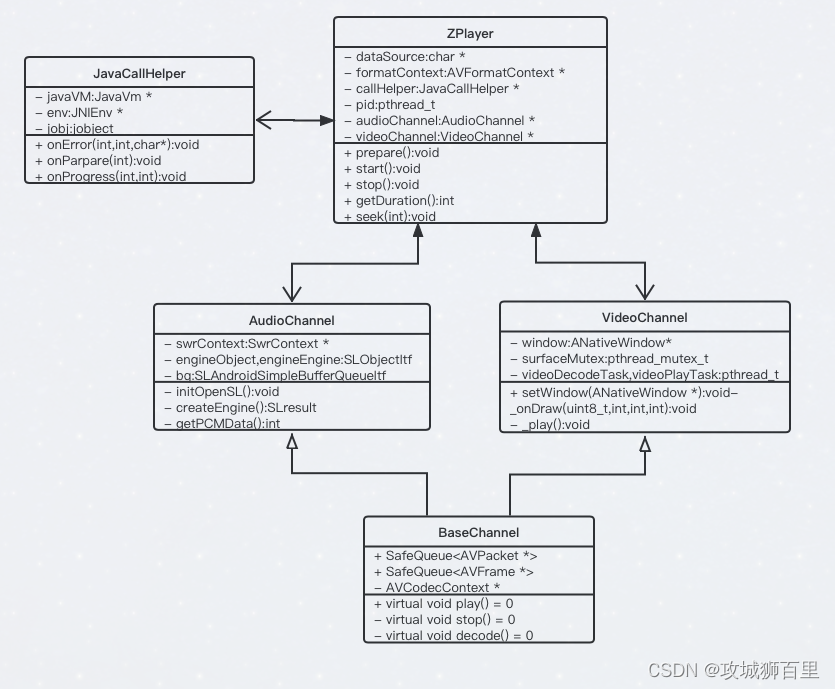

Class diagram of the FFmpeg player

Write the ZPlayer.java class

    public class ZPlayer implements LifecycleObserver, SurfaceHolder.Callback {
        private static final String TAG = ZPlayer.class.getSimpleName();

        static {
            System.loadLibrary("z-player");
        }

        private final long nativeHandle;
        private OnPrepareListener listener;
        private OnErrorListener onErrorListener;
        private SurfaceHolder mHolder;
        private OnProgressListener onProgressListener;

        public ZPlayer() {
            nativeHandle = nativeInit();
        }

        /**
         * Set the surface used to display playback.
         * @param surfaceView
         */
        public void setSurfaceView(SurfaceView surfaceView) {
            if (this.mHolder != null) {
                mHolder.removeCallback(this); // remove the previous callback
            }
            mHolder = surfaceView.getHolder();
            mHolder.addCallback(this);
        }

        /**
         * Let the caller set the file to play, or a live-stream URL.
         * @param dataSource
         */
        public void setDataSource(String dataSource) {
            setDataSource(nativeHandle, dataSource);
        }

        /**
         * Prepare the video for playback.
         */
        @OnLifecycleEvent(Lifecycle.Event.ON_RESUME)
        public void prepare() {
            Log.e(TAG, "ZPlayer->prepare");
            nativePrepare(nativeHandle);
        }

        /**
         * Start playback.
         */
        public void start() {
            Log.e(TAG, "ZPlayer->start");
            nativeStart(nativeHandle);
        }

        /**
         * Stop playback.
         */
        @OnLifecycleEvent(Lifecycle.Event.ON_STOP)
        public void stop() {
            Log.e(TAG, "ZPlayer->stop");
            nativeStop(nativeHandle);
        }

        @OnLifecycleEvent(Lifecycle.Event.ON_DESTROY)
        public void release() {
            Log.e(TAG, "ZPlayer->release");
            if (mHolder != null) { // setSurfaceView may never have been called
                mHolder.removeCallback(this);
            }
            nativeRelease(nativeHandle);
        }

        /**
         * JavaCallHelper calls this method reflectively (through JNI).
         * @param errorCode
         */
        public void onError(int errorCode, String ffmpegError) {
            Log.e(TAG, "Java received callback -> onError: " + errorCode);
            String title = "\nFFmpeg reported the following error:\n";
            String msg = null;
            switch (errorCode) {
                case Constant.FFMPEG_CAN_NOT_OPEN_URL:
                    msg = "Cannot open the video" + title + ffmpegError;
                    break;
                case Constant.FFMPEG_CAN_NOT_FIND_STREAMS:
                    msg = "Cannot find media streams" + title + ffmpegError;
                    break;
                case Constant.FFMPEG_FIND_DECODER_FAIL:
                    msg = "Cannot find a decoder" + title + ffmpegError;
                    break;
                case Constant.FFMPEG_ALLOC_CODEC_CONTEXT_FAIL:
                    msg = "Cannot create a context for the decoder" + title + ffmpegError;
                    break;
                case Constant.FFMPEG_CODEC_CONTEXT_PARAMETERS_FAIL:
                    msg = "Failed to configure the context from the stream parameters" + title + ffmpegError;
                    break;
                case Constant.FFMPEG_OPEN_DECODER_FAIL:
                    msg = "Failed to open the decoder" + title + ffmpegError;
                    break;
                case Constant.FFMPEG_NOMEDIA:
                    msg = "No audio or video found" + title + ffmpegError;
                    break;
            }
            if (onErrorListener != null) {
                onErrorListener.onError(msg);
            }
        }

        /**
         * JavaCallHelper calls this method reflectively (through JNI).
         */
        public void onPrepare() {
            Log.e(TAG, "Java received callback -> onPrepare");
            if (listener != null) {
                listener.onPrepare();
            }
        }

        public void onProgress(int progress) {
            if (onProgressListener != null) {
                onProgressListener.onProgress(progress);
            }
        }

        @Override
        public void surfaceCreated(SurfaceHolder surfaceHolder) {
            Log.e(TAG, "ZPlayer->surfaceCreated");
        }

        @Override
        public void surfaceChanged(SurfaceHolder surfaceHolder, int i, int i1, int i2) {
            Log.e(TAG, "ZPlayer->surfaceChanged");
            nativeSetSurface(nativeHandle, surfaceHolder.getSurface());
        }

        @Override
        public void surfaceDestroyed(SurfaceHolder surfaceHolder) {
            Log.e(TAG, "ZPlayer->surfaceDestroyed");
        }

        public int getDuration() {
            return getNativeDuration(nativeHandle);
        }

        public void seek(int playProgress) {
            nativeSeek(playProgress, nativeHandle);
        }

        public interface OnPrepareListener {
            void onPrepare();
        }

        public void setOnPrepareListener(OnPrepareListener listener) {
            this.listener = listener;
        }

        public interface OnProgressListener {
            void onProgress(int progress);
        }

        public void setOnProgressListener(OnProgressListener listener) {
            this.onProgressListener = listener;
        }

        public interface OnErrorListener {
            void onError(String errorCode);
        }

        public void setOnErrorListener(OnErrorListener listener) {
            this.onErrorListener = listener;
        }

        private native long nativeInit();
        private native void setDataSource(long nativeHandle, String path);
        private native void nativePrepare(long nativeHandle);
        private native void nativeStart(long nativeHandle);
        private native void nativeStop(long nativeHandle);
        private native void nativeRelease(long nativeHandle);
        private native void nativeSetSurface(long nativeHandle, Surface surface);
        private native int getNativeDuration(long nativeHandle);
        private native void nativeSeek(int playValue, long nativeHandle);
    }

Write the MainActivity.java class

    public class MainActivity extends AppCompatActivity implements SeekBar.OnSeekBarChangeListener {
        private ActivityMainBinding binding;
        private int PERMISSION_REQUEST = 0x1001;
        private ZPlayer mPlayer;
        // Whether the user is currently dragging the seek bar
        private boolean isTouch = false;
        // Total duration obtained from the native layer
        private int duration;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            binding = ActivityMainBinding.inflate(getLayoutInflater());
            setContentView(binding.getRoot());
            checkPermission();
            binding.seekBar.setOnSeekBarChangeListener(this);
            mPlayer = new ZPlayer();
            getLifecycle().addObserver(mPlayer);
            mPlayer.setSurfaceView(binding.surfaceView);
            mPlayer.setDataSource("/sdcard/demo.mp4");
            // mPlayer.setDataSource("/sdcard/chengdu.mp4");
            mPlayer.setOnPrepareListener(new ZPlayer.OnPrepareListener() {
                @Override
                public void onPrepare() {
                    duration = mPlayer.getDuration();
                    runOnUiThread(new Runnable() {
                        @Override
                        public void run() {
                            binding.seekBarTimeLayout.setVisibility(duration != 0 ? View.VISIBLE : View.GONE);
                            if (duration != 0) {
                                binding.tvTime.setText("00:00/" + getMinutes(duration) + ":" + getSeconds(duration));
                            }
                            binding.tvState.setTextColor(Color.GREEN);
                            binding.tvState.setText("Initialization succeeded");
                        }
                    });
                    mPlayer.start();
                }
            });
            mPlayer.setOnErrorListener(new ZPlayer.OnErrorListener() {
                @Override
                public void onError(String errorCode) {
                    runOnUiThread(new Runnable() {
                        @Override
                        public void run() {
                            binding.tvState.setTextColor(Color.RED);
                            binding.tvState.setText(errorCode);
                        }
                    });
                }
            });
            mPlayer.setOnProgressListener(new ZPlayer.OnProgressListener() {
                @Override
                public void onProgress(int progress) {
                    if (!isTouch) {
                        runOnUiThread(new Runnable() {
                            @Override
                            public void run() {
                                // Not a live stream: this is a local video file
                                if (duration != 0) {
                                    binding.tvTime.setText(getMinutes(progress) + ":" + getSeconds(progress)
                                            + "/" + getMinutes(duration) + ":" + getSeconds(duration));
                                    binding.seekBar.setProgress(progress * 100 / duration);
                                }
                            }
                        });
                    }
                }
            });
        }

        private void checkPermission() {
            if (ContextCompat.checkSelfPermission(this, Manifest.permission.WRITE_EXTERNAL_STORAGE)
                    != PackageManager.PERMISSION_GRANTED) {
                Log.e("zuo", "No permission; requesting it");
                ActivityCompat.requestPermissions(this,
                        new String[]{Manifest.permission.WRITE_EXTERNAL_STORAGE}, PERMISSION_REQUEST);
            } else {
                Log.e("zuo", "Permission already granted");
            }
        }

        @Override
        public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions,
                                               @NonNull int[] grantResults) {
            super.onRequestPermissionsResult(requestCode, permissions, grantResults);
            if (requestCode == PERMISSION_REQUEST) {
                Log.e("zuo", "Permission result received: " + grantResults.length);
                // grantResults is empty if the request was cancelled, so check the length first
                if (grantResults.length > 0 && grantResults[0] == PackageManager.PERMISSION_GRANTED) {
                    Toast.makeText(this, "Permission granted", Toast.LENGTH_SHORT).show();
                } else {
                    Toast.makeText(this, "Permission denied", Toast.LENGTH_SHORT).show();
                }
            }
        }

        private String getSeconds(int duration) {
            int seconds = duration % 60;
            String str;
            if (seconds <= 9) {
                str = "0" + seconds;
            } else {
                str = "" + seconds;
            }
            return str;
        }

        private String getMinutes(int duration) {
            int minutes = duration / 60;
            String str;
            if (minutes <= 9) {
                str = "0" + minutes;
            } else {
                str = "" + minutes;
            }
            return str;
        }

        /**
         * Called back when the seek bar progress changes.
         * @param seekBar  the control
         * @param progress 0 to 100
         * @param fromUser whether the change was caused by the user dragging
         */
        @Override
        public void onProgressChanged(SeekBar seekBar, int progress, boolean fromUser) {
            if (fromUser) {
                binding.tvTime.setText(getMinutes(progress * duration / 100) + ":"
                        + getSeconds(progress * duration / 100)
                        + "/" + getMinutes(duration) + ":" + getSeconds(duration));
            }
        }

        // Called back when the finger presses down
        @Override
        public void onStartTrackingTouch(SeekBar seekBar) {
            isTouch = true;
        }

        // Called back when the finger is released (with the SeekBar's current value)
        @Override
        public void onStopTrackingTouch(SeekBar seekBar) {
            isTouch = false;
            int seekBarProgress = seekBar.getProgress();
            int playProgress = seekBarProgress * duration / 100;
            mPlayer.seek(playProgress);
        }
    }
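The progress handling in `MainActivity` maps between a playback position in seconds and the 0 to 100 range of the `SeekBar`, and formats positions as `MM:SS`. The same arithmetic is sketched here in C++, matching the native side of the project, so that it can be checked in isolation. The function names are hypothetical and are not part of the original code:

```cpp
#include <cstdio>
#include <string>

// Format a position in seconds as "MM:SS", zero-padded like the Java helpers.
inline std::string formatTime(int totalSeconds) {
    char buf[32];
    std::snprintf(buf, sizeof(buf), "%02d:%02d", totalSeconds / 60, totalSeconds % 60);
    return std::string(buf);
}

// Map a playback position (seconds) to a 0..100 SeekBar value.
inline int secondsToPercent(int seconds, int durationSeconds) {
    if (durationSeconds <= 0) {
        return 0;  // live stream or unknown duration
    }
    return seconds * 100 / durationSeconds;
}

// Map a 0..100 SeekBar value back to a playback position in seconds.
inline int percentToSeconds(int percent, int durationSeconds) {
    return percent * durationSeconds / 100;
}
```

Because both mappings use integer division, a round trip can lose up to one step of precision, which is acceptable for a seek bar with 100 steps.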

Write native-lib.cpp

This is the entry point for Java calls into native methods.

    #include <jni.h>
    #include <string>
    #include <pthread.h>
    #include <android/native_window_jni.h>
    #include "ZPlayer.h"

    #define LOG_TAG "native-lib"

    ZPlayer *zPlayer = nullptr;
    JavaVM *javaVm = nullptr;
    JavaCallHelper *helper = nullptr;
    ANativeWindow *window = nullptr;
    pthread_mutex_t mutex = PTHREAD_MUTEX_INITIALIZER;

    int JNI_OnLoad(JavaVM *vm, void *r) {
        javaVm = vm;
        return JNI_VERSION_1_6;
    }

    extern "C"
    JNIEXPORT jlong JNICALL
    Java_com_zxj_zplayer_ZPlayer_nativeInit(JNIEnv *env, jobject thiz) {
        // Create the player
        helper = new JavaCallHelper(javaVm, env, thiz);
        zPlayer = new ZPlayer(helper);
        return (jlong) zPlayer;
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_com_zxj_zplayer_ZPlayer_setDataSource(JNIEnv *env, jobject thiz, jlong native_handle,
                                               jstring path) {
        const char *dataSource = env->GetStringUTFChars(path, nullptr);
        ZPlayer *zPlayer = reinterpret_cast<ZPlayer *>(native_handle);
        zPlayer->setDataSource(dataSource);
        env->ReleaseStringUTFChars(path, dataSource);
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_com_zxj_zplayer_ZPlayer_nativePrepare(JNIEnv *env, jobject thiz, jlong native_handle) {
        ZPlayer *zPlayer = reinterpret_cast<ZPlayer *>(native_handle);
        zPlayer->prepare();
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_com_zxj_zplayer_ZPlayer_nativeStart(JNIEnv *env, jobject thiz, jlong native_handle) {
        ZPlayer *zPlayer = reinterpret_cast<ZPlayer *>(native_handle);
        zPlayer->start();
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_com_zxj_zplayer_ZPlayer_nativeStop(JNIEnv *env, jobject thiz, jlong native_handle) {
        ZPlayer *zPlayer = reinterpret_cast<ZPlayer *>(native_handle);
        zPlayer->stop();
        // Do not delete helper here: the player still holds it and uses it
        // again if prepare() is called after the Activity resumes.
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_com_zxj_zplayer_ZPlayer_nativeRelease(JNIEnv *env, jobject thiz, jlong native_handle) {
        pthread_mutex_lock(&mutex);
        if (window) {
            ANativeWindow_release(window);
            window = nullptr;
        }
        pthread_mutex_unlock(&mutex);
        // ZPlayer's destructor already deletes the JavaCallHelper it holds,
        // so deleting helper here as well would free it twice.
        DELETE(zPlayer);
        helper = nullptr;
        // javaVm is owned by the runtime and window was released above,
        // so neither of them may be passed to delete.
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_com_zxj_zplayer_ZPlayer_nativeSetSurface(JNIEnv *env, jobject thiz, jlong native_handle,
                                                  jobject surface) {
        pthread_mutex_lock(&mutex);
        // Release the previous display window first
        if (window) {
            LOGE("nativeSetSurface->window=%p", window);
            ANativeWindow_release(window);
            window = nullptr;
        }
        window = ANativeWindow_fromSurface(env, surface);
        ZPlayer *zPlayer = reinterpret_cast<ZPlayer *>(native_handle);
        zPlayer->setWindow(window);
        pthread_mutex_unlock(&mutex);
    }

    extern "C"
    JNIEXPORT jint JNICALL
    Java_com_zxj_zplayer_ZPlayer_getNativeDuration(JNIEnv *env, jobject thiz, jlong native_handle) {
        ZPlayer *zPlayer = reinterpret_cast<ZPlayer *>(native_handle);
        if (zPlayer) {
            return zPlayer->getDuration();
        }
        return 0;
    }

    extern "C"
    JNIEXPORT void JNICALL
    Java_com_zxj_zplayer_ZPlayer_nativeSeek(JNIEnv *env, jobject thiz, jint play_value,
                                            jlong native_handle) {
        ZPlayer *zPlayer = reinterpret_cast<ZPlayer *>(native_handle);
        if (zPlayer) {
            zPlayer->seek(play_value);
        }
    }
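The JNI functions above pass the native player to Java as a `long` handle and cast it back on every call. The core of that pattern can be sketched without JNI. This is an illustration under stated assumptions: `Player` is a hypothetical stand-in for the native player class, and `int64_t` plays the role of `jlong`:

```cpp
#include <cstdint>

// Hypothetical stand-in for the native player class.
struct Player {
    int durationSeconds = 0;
};

// Pack a native pointer into a 64-bit integer that Java can store in a long.
inline int64_t toHandle(Player *player) {
    return static_cast<int64_t>(reinterpret_cast<std::intptr_t>(player));
}

// Recover the native pointer from a handle previously produced by toHandle().
inline Player *fromHandle(int64_t handle) {
    return reinterpret_cast<Player *>(static_cast<std::intptr_t>(handle));
}
```

Keeping the pointer in the Java object, rather than relying only on a global, is what lets each Java instance address its own native player. The handle is only valid until the native object is deleted, so the Java side should not use it after release.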

Write JavaCallHelper.cpp

This class calls back into Java methods to report each state change during audio/video processing.

    #include "JavaCallHelper.h"

    #define LOG_TAG "JavaCallHelper"

    JavaCallHelper::JavaCallHelper(JavaVM *vm, JNIEnv *env, jobject instace) {
        this->vm = vm;
        // Used when calling back on the main thread
        this->env = env;
        // A jobject used across methods or across threads needs a global reference
        this->instace = env->NewGlobalRef(instace);
        jclass clazz = env->GetObjectClass(instace);
        onErrorId = env->GetMethodID(clazz, "onError", "(ILjava/lang/String;)V");
        onPrepareId = env->GetMethodID(clazz, "onPrepare", "()V");
        onProgressId = env->GetMethodID(clazz, "onProgress", "(I)V");
    }

    JavaCallHelper::~JavaCallHelper() {
        env->DeleteGlobalRef(this->instace);
    }

    void JavaCallHelper::onError(int thread, int errorCode, char *ffmpegError) {
        // Main thread
        if (thread == THREAD_MAIN) {
            jstring _ffmpegError = env->NewStringUTF(ffmpegError);
            env->CallVoidMethod(instace, onErrorId, errorCode, _ffmpegError);
        } else {
            // Child thread: attach it to the JVM to obtain a JNIEnv
            JNIEnv *env;
            if (vm->AttachCurrentThread(&env, 0) != JNI_OK) {
                return;
            }
            jstring _ffmpegError = env->NewStringUTF(ffmpegError);
            env->CallVoidMethod(instace, onErrorId, errorCode, _ffmpegError);
            vm->DetachCurrentThread(); // detaching is required
        }
    }

    void JavaCallHelper::onPrepare(int thread) {
        // Main thread
        if (thread == THREAD_MAIN) {
            env->CallVoidMethod(instace, onPrepareId);
        } else {
            // Child thread
            JNIEnv *env;
            if (vm->AttachCurrentThread(&env, 0) != JNI_OK) {
                return;
            }
            env->CallVoidMethod(instace, onPrepareId);
            vm->DetachCurrentThread();
        }
    }

    void JavaCallHelper::onProgress(int thread, int progress) {
        if (thread == THREAD_MAIN) {
            env->CallVoidMethod(instace, onProgressId, progress);
        } else {
            // Child thread
            JNIEnv *env;
            if (vm->AttachCurrentThread(&env, 0) != JNI_OK) {
                return;
            }
            env->CallVoidMethod(instace, onProgressId, progress);
            vm->DetachCurrentThread();
        }
    }

Write ZPlayer.cpp

This file does the main audio/video processing.

    #include <cstring>
    #include "ZPlayer.h"
    #include "macro.h"

    void *task_prepare(void *args) {
        ZPlayer *zFmpeg = static_cast<ZPlayer *>(args);
        zFmpeg->_prepare();
        return 0;
    }

    ZPlayer::ZPlayer(JavaCallHelper *callHelper, const char *dataSource) {
        this->callHelper = callHelper;
        // Storing the pointer directly is a bug: native-lib.cpp releases dataSource
        // when its JNI method returns, which would leave a dangling pointer here.
        // this->dataSource = const_cast<char *>(dataSource);
        // strlen() excludes the terminating '\0', so allocate one extra byte.
        this->dataSource = new char[strlen(dataSource) + 1];
        strcpy(this->dataSource, dataSource);
    }

    ZPlayer::~ZPlayer() {
        // Release resources; dataSource was allocated with new[], so use delete[]
        delete[] dataSource;
        dataSource = nullptr;
        DELETE(callHelper);
    }

    void ZPlayer::prepare() {
        pthread_create(&pid, 0, task_prepare, this);
    }

    void ZPlayer::_prepare() {
        // Initialize networking; without this call FFmpeg cannot access the network
        avformat_network_init();
        // AVFormatContext holds the media information (width, height, etc.)
        formatContext = 0;
        // 1. Open the media address (file path or live-stream URL)
        int ret = avformat_open_input(&formatContext, dataSource, 0, 0);
        // A non-zero ret means the media could not be opened
        if (ret) {
            LOGE("Failed to open media: %s", av_err2str(ret));
            callHelper->onError(THREAD_CHILD, FFMPEG_CAN_NOT_OPEN_URL);
            return;
        }
        // 2. Find the audio and video streams in the media
        ret = avformat_find_stream_info(formatContext, 0);
        // A negative value means failure
        if (ret < 0) {
            LOGE("Failed to find streams: %s", av_err2str(ret));
            callHelper->onError(THREAD_CHILD, FFMPEG_CAN_NOT_FIND_STREAMS);
            return;
        }
        // After avformat_find_stream_info, formatContext->nb_streams is populated
        unsigned int streams = formatContext->nb_streams;
        // nb_streams: the number of streams (video/audio tracks)
        for (unsigned int i = 0; i < streams; ++i) {
            // The stream may be video or audio
            AVStream *stream = formatContext->streams[i];
            // Parameters needed to decode this stream (width, height, bit rate, frame rate)
            AVCodecParameters *codecpar = stream->codecpar;
            // The following steps are needed for both video and audio (obtain a decoder)
            // 1. Find the decoder for the codec used by this stream
            const AVCodec *avCodec = avcodec_find_decoder(codecpar->codec_id);
            if (avCodec == nullptr) {
                // ret holds no error code here, so there is nothing to pass to av_err2str
                LOGE("Failed to find a decoder");
                callHelper->onError(THREAD_CHILD, FFMPEG_FIND_DECODER_FAIL);
                return;
            }
            // 2. Allocate the decoder context
            AVCodecContext *context3 = avcodec_alloc_context3(avCodec);
            if (context3 == nullptr) {
                LOGE("Failed to create the decoder context");
                callHelper->onError(THREAD_CHILD, FFMPEG_ALLOC_CODEC_CONTEXT_FAIL);
                return;
            }
            // 3. Copy the stream parameters into the context (e.g. context->width)
            ret = avcodec_parameters_to_context(context3, codecpar);
            if (ret < 0) {
                LOGE("Failed to set decoder context parameters: %s", av_err2str(ret));
                callHelper->onError(THREAD_CHILD, FFMPEG_CODEC_CONTEXT_PARAMETERS_FAIL);
                return;
            }
            // 4. Open the decoder
            ret = avcodec_open2(context3, avCodec, 0);
            if (ret != 0) {
                LOGE("Failed to open the decoder: %s", av_err2str(ret));
                callHelper->onError(THREAD_CHILD, FFMPEG_OPEN_DECODER_FAIL);
                return;
            }
            // Audio
            if (codecpar->codec_type == AVMEDIA_TYPE_AUDIO) {
                audioChannel = new AudioChannel;
            } else if (codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
                videoChannel = new VideoChannel;
            }
        }
        if (!audioChannel && !videoChannel) {
            LOGE("No audio or video found");
            callHelper->onError(THREAD_CHILD, FFMPEG_NOMEDIA);
            return;
        }
        // Preparation is done; notify Java that playback can start at any time
        callHelper->onPrepare(THREAD_CHILD);
    }
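The constructor copies `dataSource` because the string obtained through JNI is released as soon as the native method returns. When making such a copy by hand, the buffer needs room for the terminating null byte, which `strlen` does not count. The sketch below isolates that step; `copyCString` and `copyAsString` are hypothetical helper names, and the `std::string` variant is an alternative shown for comparison, not what the original project uses:

```cpp
#include <cstring>
#include <string>

// Duplicate a C string into a newly allocated buffer.
// strlen() does not count the terminating '\0', so one extra byte is needed.
inline char *copyCString(const char *src) {
    char *dst = new char[std::strlen(src) + 1];
    std::strcpy(dst, src);
    return dst;  // the caller must release this with delete[]
}

// Alternative: let std::string own the copy so no manual delete[] is needed.
inline std::string copyAsString(const char *src) {
    return std::string(src);
}
```

With the `std::string` variant, the copy is freed automatically when the owning object is destroyed, and `c_str()` provides the `const char *` that FFmpeg functions expect.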

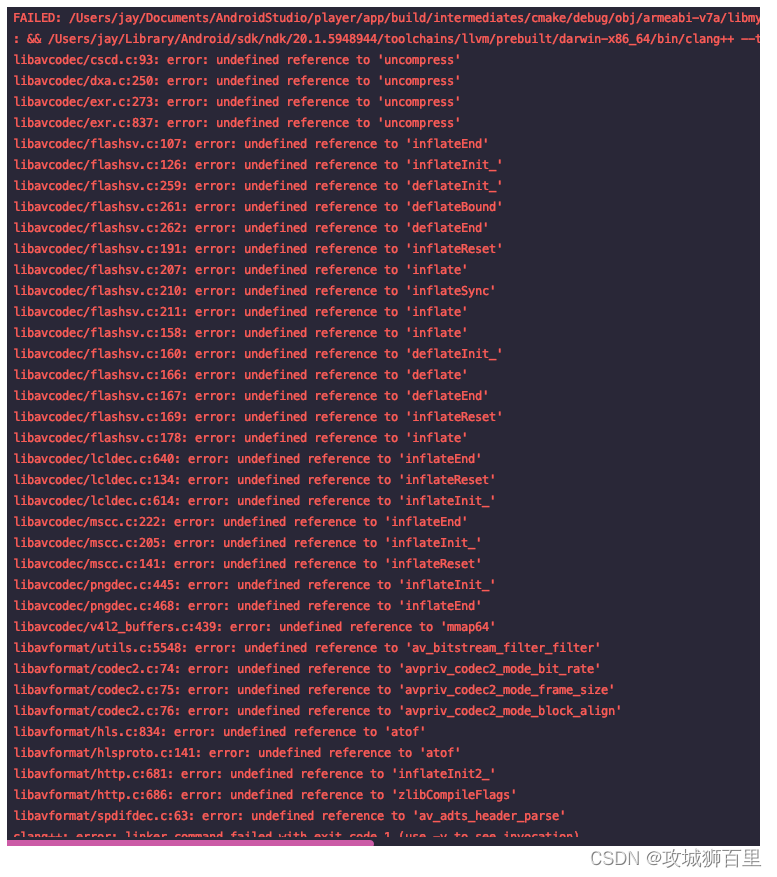

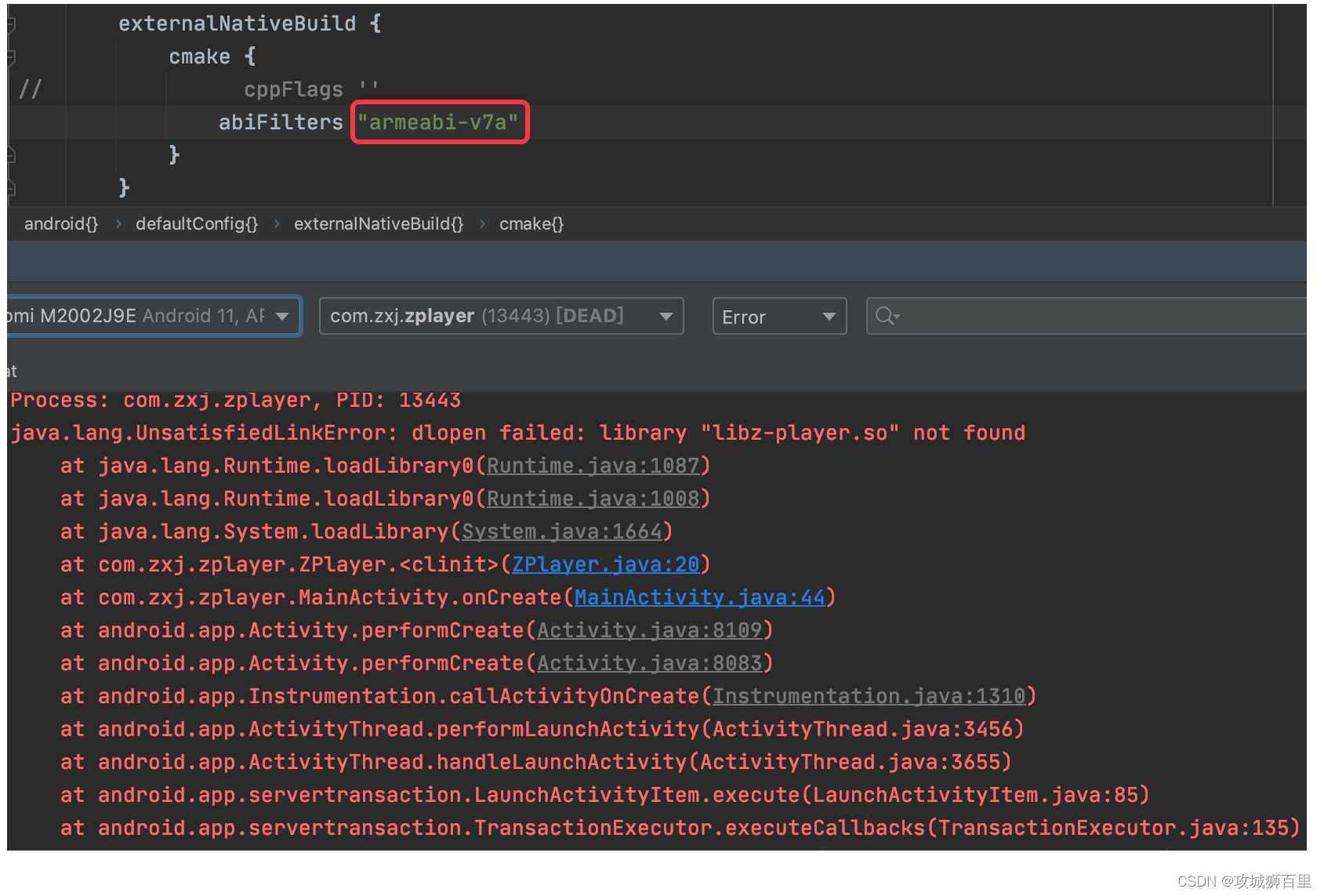

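That is most of the core code. We can test it at this point, but compiling and running it produces an error.

The error comes from an API level mismatch with the FFmpeg build. In the previous article, "FFmpeg编译" (Compiling FFmpeg), we built the FFmpeg libraries with -D__ANDROID_API__=21, while this project's minSdkVersion is 14. Changing minSdkVersion to 21 fixes the problem.

After that change, the app runs and tests without problems.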

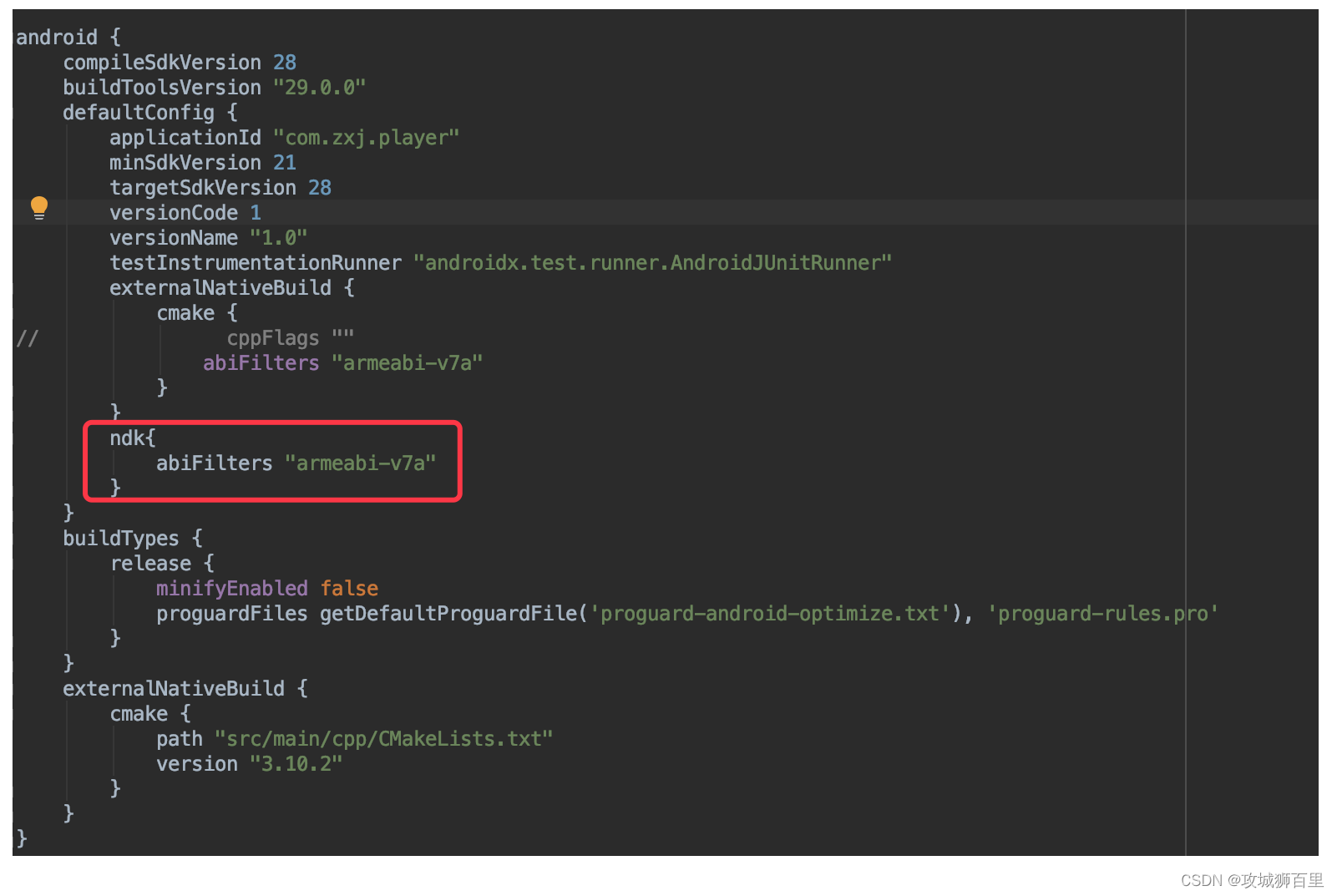

Fixing Crashes on Other Phones

The libraries above were built for "armeabi-v7a", but some phones use the "arm64-v8a" architecture, so running the app on them fails because the .so library cannot be found.

There are two ways to fix this:

1. Add ndk{abiFilters "armeabi-v7a"} to the build.gradle file.

2. Rebuild the static libraries for "arm64-v8a" with a modified build.sh file.

The modified build.sh:

    #!/bin/bash
    # Shell script that runs configure to generate the makefile

    PREFIX=./android/armeabi-v7a2
    NDK_ROOT=/home/zuojie/android-ndk-r17c
    CPU=aarch64-linux-android
    TOOLCHAIN=$NDK_ROOT/toolchains/$CPU-4.9/prebuilt/linux-x86_64

    FLAGS="-isystem $NDK_ROOT/sysroot/usr/include/$CPU -D__ANDROID_API__=21 -g -DANDROID -ffunction-sections -funwind-tables -fstack-protector-strong -no-canonical-prefixes -Wa,--noexecstack -Wformat -Werror=format-security -O0 -fPIC"
    #FLAGS="-isystem $NDK_ROOT/sysroot/usr/include/$CPU -D__ANDROID_API__=21 -g -DANDROID -ffunction-sections -funwind-tables -fstack-protector-strong -no-canonical-prefixes -march=armv7-a -mfloat-abi=softfp -mfpu=vfpv3-d16 -mthumb -Wa,--noexecstack -Wformat -Werror=format-security -O0 -fPIC"

    INCLUDES=" -isystem $NDK_ROOT/sources/android/support/include"

    # "\" is the line-continuation character
    ./configure --prefix=$PREFIX \
        --enable-small \
        --disable-programs \
        --disable-avdevice \
        --disable-postproc \
        --disable-encoders \
        --disable-muxers \
        --disable-filters \
        --enable-cross-compile \
        --cross-prefix=$TOOLCHAIN/bin/$CPU- \
        --disable-shared \
        --enable-static \
        --sysroot=$NDK_ROOT/platforms/android-21/arch-arm64 \
        --extra-cflags="$FLAGS $INCLUDES" \
        --extra-cflags="-isysroot $NDK_ROOT/sysroot/" \
        --arch=arm64 \
        --target-os=android

    # Clean up
    make clean

    # Run the makefile
    make install

Copy the resulting static libraries into the designated directory of the project.

Original article: https://www.cnblogs.com/zuojie/p/16461050.html#autoid-1-2-0
