Building an Auto-Discovering Docker Service Architecture with Nginx and Consul

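Table of Contents

- Building an Auto-Discovering Docker Service Architecture with Nginx and Consul
- Resource List
- Base Environment
- I. Installing Docker
- 1.1 Installing on the Consul node
- 1.2 Installing on the registrator node
- II. Background Knowledge
- 2.1 What is Consul
- III. Building an Auto-Discovering Docker Service Architecture with Nginx and Consul
- 3.1 Setting up the Consul service
- 3.2 Starting the Consul server in the background
- 3.3 Viewing cluster information
- 3.4 Getting cluster information through the HTTP API
- IV. Automatically Adding Container Services to the Nginx Cluster
- 4.1 Installing Gliderlabs/Registrator
- 4.2 Testing that service discovery works
- 4.3 Verifying that the httpd and nginx services are registered in Consul
- V. Installing consul-template
- 5.1 Preparing the nginx template file
- 5.2 Compiling and installing nginx
- 5.3 Configuring nginx
- 5.4 Configuring and starting consul-template
- 5.5 Accessing nginx through the generated configuration
- 5.6 Adding another nginx container node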

Resource List

| Operating system | Specs | Hostname | IP | Required software |
|---|---|---|---|---|
| CentOS 7.9 | 2 CPU / 4 GB RAM | consul | 192.168.93.165 | Docker 26.1.2, Consul, Consul-Template |
| CentOS 7.9 | 2 CPU / 4 GB RAM | registrator | 192.168.93.166 | Docker 20.10.17 |

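Base Environment

- Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

- Disable SELinux (the kernel security module)

setenforce 0

sed -i "s/^SELINUX=.*/SELINUX=disabled/g" /etc/selinux/config

- Set the hostname (run each command on its own node)

# On 192.168.93.165

hostnamectl set-hostname consul

# On 192.168.93.166

hostnamectl set-hostname registrator

If you need the software packages or run into errors, send a private message or leave a comment.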

I. Installing Docker

1.1 Installing on the Consul node

# Install dependencies

yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the official Docker CE repository

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum clean all && yum makecache

# Install Docker

yum -y install docker-ce docker-ce-cli containerd.io

# Start Docker

systemctl start docker

systemctl enable docker

# Configure a Docker registry mirror

cd /etc/docker/

# Use > rather than >> so that rerunning this step cannot append a second JSON object

cat > daemon.json << EOF

{

"registry-mirrors": ["https://8xpk5wnt.mirror.aliyuncs.com"]

}

EOF
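A `daemon.json` that ends up holding two JSON objects will not parse, so it can help to generate the file in a way that is safe to rerun. The following is a minimal sketch under that assumption; the function name `write_docker_mirror` and its two optional parameters are illustrative and not part of Docker.

```shell
# Write a Docker daemon.json that contains a single registry mirror.
# Both arguments are optional; the defaults are the values used in this article.
write_docker_mirror() {
  local target="${1:-/etc/docker/daemon.json}"
  local mirror="${2:-https://8xpk5wnt.mirror.aliyuncs.com}"
  # '>' truncates the file first, so repeated runs always leave exactly one JSON object.
  cat > "$target" << EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}
```

Calling `write_docker_mirror` as root writes the default path; Docker still has to be restarted afterwards for the mirror to take effect.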

systemctl restart docker

# Check the version

[root@consul ~]# docker -v

Docker version 26.1.2, build 211e74b

1.2 Installing on the registrator node

# Remove any existing Docker packages first

yum -y remove docker*

# Download the RPM packages

yum -y install wget

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/containerd.io-1.6.6-3.1.el7.x86_64.rpm

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-20.10.17-3.el7.x86_64.rpm

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-cli-20.10.17-3.el7.x86_64.rpm

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-rootless-extras-20.10.17-3.el7.x86_64.rpm

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-compose-plugin-2.6.0-3.el7.x86_64.rpm

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-scan-plugin-0.17.0-3.el7.x86_64.rpm

# Download the dependency packages from the network (without installing them)

yum -y install --downloadonly --downloaddir=./ *.rpm

# Install Docker

yum -y install *.rpm

# Start Docker

systemctl start docker

systemctl enable docker

# Configure a Docker registry mirror

cd /etc/docker/

# Use > rather than >> so that rerunning this step cannot append a second JSON object

cat > daemon.json << EOF

{

"registry-mirrors": ["https://8xpk5wnt.mirror.aliyuncs.com"]

}

EOF

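systemctl restart docker

# Check the Docker version

[root@registrator ~]# docker -v

Docker version 20.10.17, build 100c701

II. Background Knowledge

2.1 What is Consul

- Consul is an open-source tool from HashiCorp for service discovery and configuration in distributed systems. Compared with other service registration and discovery solutions, such as Airbnb's SmartStack, Consul is more of a one-stop solution: it has built-in service registration and discovery, an implementation of a distributed consensus protocol, health checking, key/value storage, and multi-datacenter support, so it does not depend on other tools (such as ZooKeeper) and is fairly simple to use. Consul is written in Go, which makes it naturally portable (Linux, Windows, and Mac OS X are supported). The install package contains a single executable, which makes deployment easy, and it works seamlessly with lightweight containers such as Docker.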

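III. Building an Auto-Discovering Docker Service Architecture with Nginx and Consul

3.1 Setting up the Consul service

- To use Consul for service registration and discovery, a Consul service has to be set up first. In a Consul deployment, a Consul agent is deployed and run on every node that provides services, and the set of all nodes running a Consul agent forms the Consul cluster. An agent runs in one of two modes: server or client. This distinction exists only at the Consul cluster level and has nothing to do with the application services built on top of Consul. Agents running in server mode maintain the state of the Consul cluster. The recommendation is to run at least three server-mode agents per Consul cluster; the number of client nodes is unlimited.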
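The recommendation of at least three servers follows from how Raft consensus works: a write needs a majority (quorum) of the servers, so a cluster of N servers has a quorum of floor(N/2)+1 and tolerates the loss of the remainder. A small sketch of that arithmetic follows; the function name `consul_quorum` is made up for illustration.

```shell
# Print the Raft quorum size and the number of server failures tolerated
# for a cluster with the given number of server-mode agents.
consul_quorum() {
  local servers="$1"
  local quorum=$(( servers / 2 + 1 ))      # majority of the servers
  local tolerated=$(( servers - quorum ))  # servers that may fail while a majority remains
  echo "servers=$servers quorum=$quorum tolerated_failures=$tolerated"
}

for n in 1 3 5; do
  consul_quorum "$n"
done
```

A single server, as used in this article, has a quorum of 1 and tolerates no failures, which is acceptable for a lab setup but not for production.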

[root@consul ~]# mkdir consul

[root@consul ~]# cd consul/

# Upload the zip archive to this directory, then extract it

[root@consul consul]# yum -y install unzip

[root@consul consul]# unzip consul_0.9.2_linux_amd64.zip

Archive: consul_0.9.2_linux_amd64.zip

inflating: consul

[root@consul consul]# mv consul /usr/bin/

# Check the version

[root@consul consul]# consul -v

Consul v0.9.2

Protocol 2 spoken by default, understands 2 to 3 (agent will automatically use protocol >2 when speaking to compatible agents)

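3.2 Starting the Consul server in the background

- Consul is installed for service registration: containers register information about themselves in Consul, and other programs query Consul for the registered service information. This is service registration and discovery.

[root@consul ~]# nohup consul agent -server -bootstrap -ui -data-dir=/var/lib/consul-data -bind=192.168.93.165 -client=0.0.0.0 -node=consul-server01 &> /var/log/consul.log &

# The command-line options are explained below

-bootstrap: controls whether a server runs in bootstrap mode. Only one server per datacenter may be in bootstrap mode; a server in bootstrap mode can elect itself Raft leader

-data-dir: the directory in which the agent stores its data

-bind: the address used for communication inside the cluster. It must be reachable from every other node in the cluster; the default is 0.0.0.0

-ui: enables the built-in web UI, which is then reachable at an address such as http://localhost:8500/ui

-client: the address to which Consul binds its client interfaces, which serve HTTP, DNS, and RPC; the default is 127.0.0.1

-node: the name of the node in the cluster. It must be unique within the cluster; the default is the node's hostname

# Check the redirected log file. The agent started normally; the single [ERR] line appears before the leader election completes and is expected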

[root@consul ~]# cat /var/log/consul.log

nohup: ignoring input

==> WARNING: Bootstrap mode enabled! Do not enable unless necessary

==> Starting Consul agent...

==> Consul agent running!
Version: 'v0.9.2'
Node ID: '0bb5e004-361b-e1f4-ad19-c67e9074142f'
Node name: 'consul-server01'
Datacenter: 'dc1'
Server: true (bootstrap: true)
Client Addr: 0.0.0.0 (HTTP: 8500, HTTPS: -1, DNS: 8600)
Cluster Addr: 192.168.93.165 (LAN: 8301, WAN: 8302)
Gossip encrypt: false, RPC-TLS: false, TLS-Incoming: false

==> Log data will now stream in as it occurs:

2024/05/31 20:39:18 [INFO] raft: Initial configuration (index=1): [{Suffrage:Voter ID:192.168.93.165:8300 Address:192.168.93.165:8300}]
2024/05/31 20:39:18 [INFO] raft: Node at 192.168.93.165:8300 [Follower] entering Follower state (Leader: "")
2024/05/31 20:39:18 [INFO] serf: EventMemberJoin: consul-server01.dc1 192.168.93.165
2024/05/31 20:39:18 [INFO] serf: EventMemberJoin: consul-server01 192.168.93.165
2024/05/31 20:39:18 [INFO] agent: Started DNS server 0.0.0.0:8600 (udp)
2024/05/31 20:39:18 [INFO] consul: Handled member-join event for server "consul-server01.dc1" in area "wan"
2024/05/31 20:39:18 [INFO] consul: Adding LAN server consul-server01 (Addr: tcp/192.168.93.165:8300) (DC: dc1)
2024/05/31 20:39:18 [INFO] agent: Started DNS server 0.0.0.0:8600 (tcp)
2024/05/31 20:39:18 [INFO] agent: Started HTTP server on [::]:8500
2024/05/31 20:39:25 [ERR] agent: failed to sync remote state: No cluster leader
2024/05/31 20:39:27 [WARN] raft: Heartbeat timeout from "" reached, starting election
2024/05/31 20:39:27 [INFO] raft: Node at 192.168.93.165:8300 [Candidate] entering Candidate state in term 2
2024/05/31 20:39:27 [INFO] raft: Election won. Tally: 1
2024/05/31 20:39:27 [INFO] raft: Node at 192.168.93.165:8300 [Leader] entering Leader state
2024/05/31 20:39:27 [INFO] consul: cluster leadership acquired
2024/05/31 20:39:27 [INFO] consul: New leader elected: consul-server01
2024/05/31 20:39:27 [INFO] consul: member 'consul-server01' joined, marking health alive
2024/05/31 20:39:28 [INFO] agent: Synced node info

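==> Newer Consul version available: 1.18.2 (currently running: 0.9.2)

# After startup, Consul listens on 5 ports by default

8300: server RPC port, used for replication (data synchronization between clients and servers) and leader forwarding

8301: LAN gossip port (communication within the same datacenter)

8302: WAN gossip port (communication between datacenters over the wide-area network)

8500: HTTP API and web UI port

8600: DNS port, for querying node information over the DNS protocol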
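The startup log shows a short window in which the agent is already serving HTTP but reports "No cluster leader". A script that starts the agent and queries it straight away may therefore want to wait for the election first. The sketch below polls the `/v1/status/leader` endpoint, which returns an empty JSON string until a leader exists; the function name `wait_for_leader`, its parameters, and the one-second interval are assumptions for illustration.

```shell
# Poll Consul until a Raft leader is reported, then print the leader address.
# usage: wait_for_leader [base_url] [max_attempts]
wait_for_leader() {
  local base_url="${1:-http://127.0.0.1:8500}"
  local max_attempts="${2:-30}"
  local attempt=1
  local leader=""
  while [ "$attempt" -le "$max_attempts" ]; do
    # The endpoint returns a JSON string such as "192.168.93.165:8300",
    # or "" while no leader has been elected; strip the quotes.
    leader="$(curl -s "$base_url/v1/status/leader" | tr -d '"')"
    if [ -n "$leader" ]; then
      echo "$leader"
      return 0
    fi
    sleep 1
    attempt=$(( attempt + 1 ))
  done
  echo "no leader after $max_attempts attempts" >&2
  return 1
}
```

For example, `wait_for_leader http://127.0.0.1:8500 10 && consul members` would only list members once the election has finished.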

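3.3 Viewing cluster information

# List all member nodes in the cluster

[root@consul ~]# consul members

Node Address Status Type Build Protocol DC

consul-server01 192.168.93.165:8301 alive server 0.9.2 2 dc1

# Node: the name of the node in the cluster

# Address: the address and port of the node

# Status: the state of the node; alive means the node is currently active

# Type: the type of the node; server means this is a Consul server node

# Build: the Consul version

# Protocol: the protocol version Consul is using, here 2

# DC: the datacenter identifier. Consul supports multiple datacenters; here the identifier is dc1

# Get detailed Consul information

[root@consul ~]# consul info | grep leader

leader = true

leader_addr = 192.168.93.165:8300

# leader = true: this node is the leader of the Consul cluster. The leader is responsible for coordinating and managing cluster state, handles all write operations, and replicates data to the other servers

# leader_addr = 192.168.93.165:8300: the address and port of the current leader, which other nodes and clients in the cluster can use to communicate with it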

3.4 Getting cluster information through the HTTP API

# List the server members of the cluster

[root@consul ~]# curl 127.0.0.1:8500/v1/status/peers

["192.168.93.165:8300"]

# Show the cluster's Raft leader

[root@consul ~]# curl 127.0.0.1:8500/v1/status/leader

"192.168.93.165:8300"

# List all registered services (none have been registered yet)

[root@consul ~]# curl 127.0.0.1:8500/v1/catalog/services

{"consul":[]}

# Show the nginx service (nothing nginx-related has been registered yet)

[root@consul ~]# curl 127.0.0.1:8500/v1/catalog/service/nginx

# Show detailed information about the cluster nodes

[root@consul ~]# curl 127.0.0.1:8500/v1/catalog/nodes

[{"ID":"0bb5e004-361b-e1f4-ad19-c67e9074142f","Node":"consul-server01","Address":"192.168.93.165","Datacenter":"dc1","TaggedAddresses":{"lan":"192.168.93.165","wan":"192.168.93.165"},"Meta":{},"CreateIndex":5,"ModifyIndex":6}]
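When these API calls are used in scripts, the service names usually have to be pulled out of the JSON. The sketch below does this with `grep` and `sed` only, assuming the compact single-line output shown above; the function name `list_service_names` is made up for illustration, and where `jq` is installed, `jq -r 'keys[]'` is the more robust choice.

```shell
# Read the JSON object returned by /v1/catalog/services on stdin and
# print one service name per line. Assumes compact output of the form
# {"name":[...],"other":[...]} as shown in this article.
list_service_names() {
  # Keep only the '"name":[' fragments, then strip the surrounding punctuation.
  grep -o '"[^"]*":\[' | sed -e 's/":\[$//' -e 's/^"//'
}

# Sample input copied from the output above.
echo '{"consul":[]}' | list_service_names
```

Against a live server the same function can be fed directly: `curl -s 127.0.0.1:8500/v1/catalog/services | list_service_names`.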

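IV. Automatically Adding Container Services to the Nginx Cluster

4.1 Installing Gliderlabs/Registrator

- Gliderlabs/Registrator watches the state of running containers and automatically registers Docker container services with the service registry, and deregisters them when the containers stop. It currently supports Consul, etcd, and SkyDNS2

- If pulling the Gliderlabs/Registrator image fails, the Docker version may be too new. The image uses the deprecated schema1 manifest format (see the warning in the pull output below), which is probably why this node runs Docker 20.10.17

# Run the following on the 192.168.93.166 node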

[root@registrator ~]# docker run -d --name=registrator --net=host -v /var/run/docker.sock:/tmp/docker.sock --restart=always gliderlabs/registrator:latest -ip=192.168.93.166 consul://192.168.93.165:8500

Unable to find image 'gliderlabs/registrator:latest' locally

latest: Pulling from gliderlabs/registrator

Image docker.io/gliderlabs/registrator:latest uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

c87f684ee1c2: Pull complete

a0559c0b3676: Pull complete

a28552c49839: Pull complete

Digest: sha256:6e708681dd52e28f4f39d048ac75376c9a762c44b3d75b2824173f8364e52c10

Status: Downloaded newer image for gliderlabs/registrator:latest

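e2377a3815a8f8ddcddfed50fd1776542002455f3d1e18fe72249d82ed10b61e

[root@registrator ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e2377a3815a8 gliderlabs/registrator:latest "/bin/registrator -i…" 37 seconds ago Up 36 seconds registrator

# The options are explained below

--name=registrator: assigns a name to the container

--net=host: the container shares the host's network

-v /var/run/docker.sock:/tmp/docker.sock: mounts the host's Docker socket into the container (host path:container path) so that Registrator can watch container start and stop events

--restart=always: the restart policy; Docker always restarts the container if it exits

gliderlabs/registrator:latest: the image to run

-ip=192.168.93.166: an argument passed to Registrator that sets the IP address used when registering services

consul://192.168.93.165:8500: an argument passed to Registrator that specifies the address and port of the Consul server it should connect to. Registrator registers newly started containers with that Consul server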

4.2、测试服务发现功能是否正常

[root@registrator ~]# docker run -itd -p 83:80 --name test-01 -h test01 nginx

[root@registrator ~]# docker run -itd -p 84:80 --name test-02 -h test02 nginx

[root@registrator ~]# docker run -itd -p 88:80 --name test-03 -h test03 httpd

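[root@registrator ~]# docker run -itd -p 89:80 --name test-04 -h test04 httpd

# The options are explained below

-itd: a combination of -i (keep standard input open), -t (allocate a pseudo-terminal), and -d (run in the background)

-p: port mapping, host port:container port

--name: the container name

-h: the container hostname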

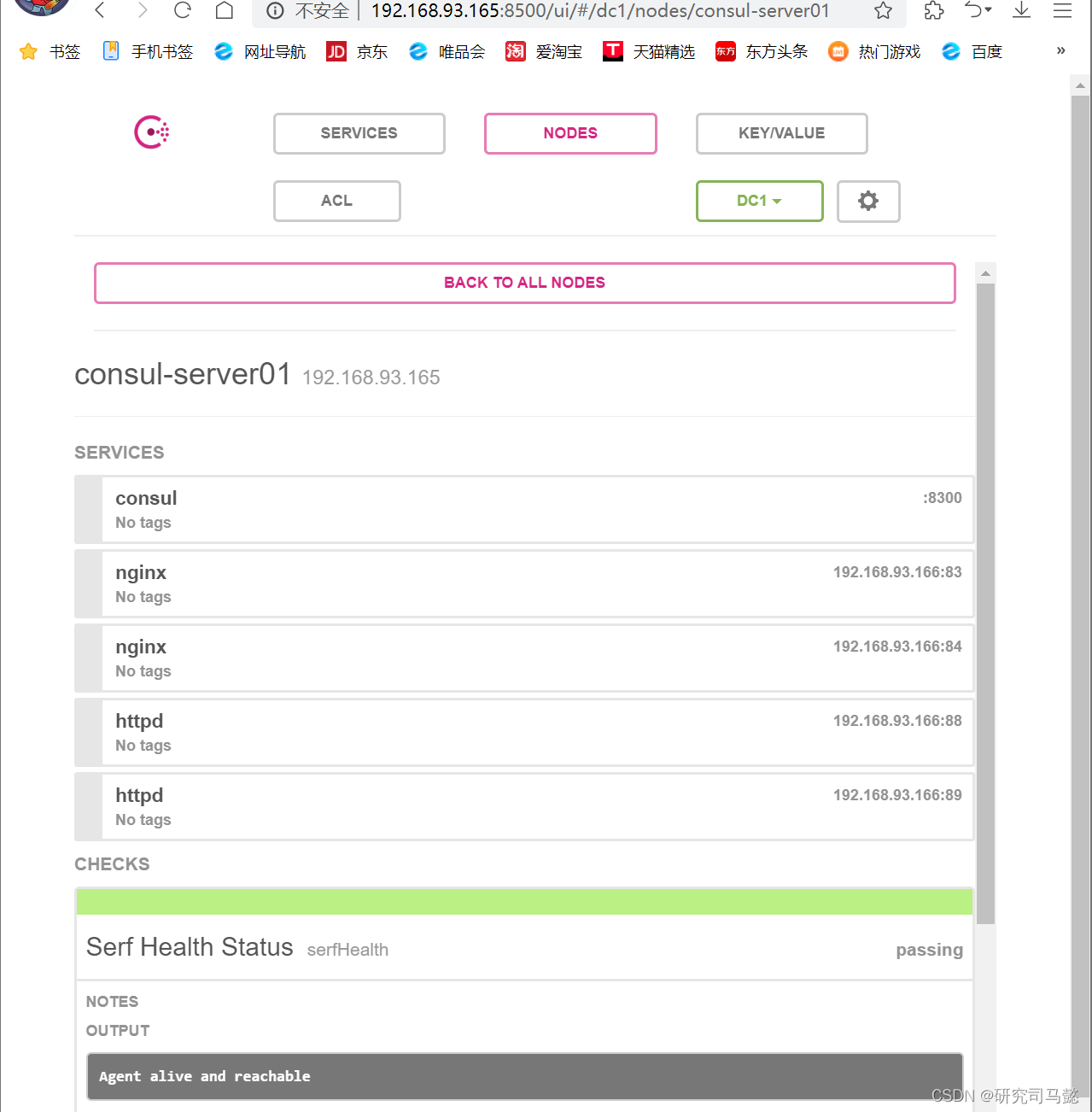

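4.3 Verifying that the httpd and nginx services are registered in Consul

- In a browser, open http://192.168.93.165:8500, click "NODES" on the page, and then click "consul-server01"; 5 services are listed

# Query the server with curl

[root@consul ~]# curl 127.0.0.1:8500/v1/catalog/services

{"consul":[],"httpd":[],"nginx":[]}

# The result shows that httpd and nginx have been registered in Consul, so service discovery is working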
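The service names `httpd` and `nginx` were never configured explicitly. To the best of my understanding of Registrator's defaults, it names a service after the base name of the container image with the tag removed (and appends the port when a container publishes several ports). The sketch below only approximates that default for single-port containers; `default_service_name` is a hypothetical helper and not part of Registrator, and the tagged image names in the loop are invented examples.

```shell
# Approximate Registrator's default service name for a single-port container:
# the last path component of the image name, without the tag.
default_service_name() {
  local image="$1"
  local base="${image##*/}"   # drop any registry or namespace prefix
  echo "${base%%:*}"          # drop the tag, if present
}

for image in nginx httpd:2.4 registry.example.com/team/nginx:1.25; do
  default_service_name "$image"
done
```

This is why the four test containers collapse into just two catalog entries: both nginx containers share the name `nginx`, and both httpd containers share `httpd`.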

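V. Installing consul-template

- Consul-Template is an application that automatically rewrites configuration files based on data in Consul. Before Consul-Template appeared, most setups relied on systems such as ZooKeeper or etcd+confd for this purpose

- Consul-Template is a daemon that queries the Consul cluster in real time, updates any number of specified templates on the file system, and generates configuration files from them. After an update completes, it can optionally run a shell command, for example to reload Nginx

- Consul-Template can query the service catalog, keys, and key-values in Consul. This abstraction and its template query language make Consul-Template particularly well suited to creating configuration files dynamically, for example for Apache/Nginx proxy balancers, HAProxy backends, Varnish servers, and application configurations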
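To make the render step concrete, the sketch below imitates in plain shell what a template loop over service instances produces: one `server` line per registered address and port. It is only an illustration of the idea, not how Consul-Template is implemented; the function name `render_upstream` and its stdin format are assumptions.

```shell
# Read "address port" pairs on stdin and print an nginx upstream block,
# one server line per registered instance.
render_upstream() {
  local name="${1:-http_backend}"
  local address port
  echo "upstream $name {"
  while read -r address port; do
    [ -n "$address" ] || continue   # skip empty lines
    echo "    server $address:$port;"
  done
  echo "}"
}

# Two instances, using the registrator node's address from this article.
printf '%s\n' '192.168.93.166 83' '192.168.93.166 84' | render_upstream
```

Consul-Template adds what this sketch lacks: it watches Consul for changes, rewrites the destination file only when the rendered result differs, and then runs the configured reload command.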

5.1 Preparing the nginx template file

- Run the following on 192.168.93.165:

[root@consul ~]# vim /root/consul/nginx.ctmpl

upstream http_backend {
    {{range service "nginx"}}
    server {{.Address}}:{{.Port}};
    {{end}}
}

server {
    listen 83;
    server_name localhost 192.168.93.165;
    access_log /var/log/nginx/kgc.cn-access.log;
    index index.html index.php;
    location / {
        proxy_set_header HOST $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Client-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://http_backend;
    }
}

# Create the directory for the nginx log files

[root@consul ~]# mkdir /var/log/nginx

5.2 Compiling and installing nginx

[root@consul ~]# yum -y install gcc pcre-devel zlib-devel

[root@consul ~]# tar -zxvf nginx-1.12.0.tar.gz

[root@consul ~]# cd nginx-1.12.0/

[root@consul nginx-1.12.0]# ./configure --prefix=/usr/local/nginx

[root@consul nginx-1.12.0]# make && make install

5.3 Configuring nginx

[root@consul ~]# vim /usr/local/nginx/conf/nginx.conf

# Inside the http block (around line 34), include the virtual host directory

include vhost/*.conf;

[root@consul ~]# mkdir /usr/local/nginx/conf/vhost

# Start the nginx service

[root@consul ~]# /usr/local/nginx/sbin/nginx

[root@consul ~]# netstat -anpt | grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 15667/nginx: master

5.4 Configuring and starting consul-template

[root@consul ~]# unzip consul-template_0.19.3_linux_amd64.zip

Archive: consul-template_0.19.3_linux_amd64.zip

inflating: consul-template

[root@consul ~]# mv consul-template /usr/bin/

# Check the version

[root@consul ~]# consul-template -v

consul-template v0.19.3 (ebf2d3d)

# Start consul-template in the foreground; do not press Ctrl+C after it starts

[root@consul ~]# consul-template -consul-addr 192.168.93.165:8500 -template "/root/consul/nginx.ctmpl:/usr/local/nginx/conf/vhost/kgc.conf:/usr/local/nginx/sbin/nginx -s reload" --log-level=info

2024/06/01 03:21:24.800912 [INFO] consul-template v0.19.3 (ebf2d3d)

2024/06/01 03:21:24.800923 [INFO] (runner) creating new runner (dry: false, once: false)

2024/06/01 03:21:24.801137 [INFO] (runner) creating watcher

2024/06/01 03:21:24.801380 [INFO] (runner) starting

2024/06/01 03:21:24.801394 [INFO] (runner) initiating run

2024/06/01 03:21:24.802638 [INFO] (runner) initiating run

2024/06/01 03:21:24.803310 [INFO] (runner) rendered "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/kgc.conf"

2024/06/01 03:21:24.803321 [INFO] (runner) executing command "/usr/local/nginx/sbin/nginx -s reload" from "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/kgc.conf"

2024/06/01 03:21:24.803343 [INFO] (child) spawning: /usr/local/nginx/sbin/nginx -s reload

# Only the template file and the output path need to be specified. Open a new terminal to view the generated configuration file, shown below

[root@consul ~]# cat /usr/local/nginx/conf/vhost/kgc.conf

upstream http_backend {
    server 192.168.93.166:83;
    server 192.168.93.166:84;
}

server {
    listen 83;
    server_name localhost 192.168.93.165;
    access_log /var/log/nginx/kgc.cn-access.log;
    index index.html index.php;
    location / {
        proxy_set_header HOST $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Client-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://http_backend;
    }
}

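5.5 Accessing nginx through the generated configuration

- In a browser, visit port 83, on which Nginx listens according to the generated configuration (http://192.168.93.165:83). If everything works, the page served by the backend nginx containers is displayed

5.6 Adding another nginx container node

- Add one more nginx container node to test service discovery and the configuration update

[root@registrator ~]# docker run -itd -p 85:80 --name test-05 -h test05 nginx

- Watch the consul-template output: it re-renders /usr/local/nginx/conf/vhost/kgc.conf from the template and reloads nginx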

2024/06/01 03:26:45.744977 [INFO] (runner) initiating run

2024/06/01 03:26:45.745772 [INFO] (runner) rendered "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/kgc.conf"

2024/06/01 03:26:45.745791 [INFO] (runner) executing command "/usr/local/nginx/sbin/nginx -s reload" from "/root/consul/nginx.ctmpl" => "/usr/local/nginx/conf/vhost/kgc.conf"

2024/06/01 03:26:45.745811 [INFO] (child) spawning: /usr/local/nginx/sbin/nginx -s reload

- View the contents of /usr/local/nginx/conf/vhost/kgc.conf

[root@consul ~]# cat /usr/local/nginx/conf/vhost/kgc.conf

upstream http_backend {
    server 192.168.93.166:83;
    server 192.168.93.166:84;
    # The newly added nginx service on port 85
    server 192.168.93.166:85;
}

server {
    listen 83;
    server_name localhost 192.168.93.165;
    access_log /var/log/nginx/kgc.cn-access.log;
    index index.html index.php;
    location / {
        proxy_set_header HOST $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Client-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://http_backend;
    }
}

- Check the logs of the three nginx containers; requests are distributed round-robin across the container nodes

# Access http://192.168.93.165:83 with curl or a browser and watch the nginx container logs

[root@registrator ~]# docker logs -f test-01

192.168.93.165 - - [01/Jun/2024:03:30:10 +0000] "GET / HTTP/1.0" 200 615 "-" "curl/7.29.0" "127.0.0.1"

[root@registrator ~]# docker logs -f test-02

192.168.93.165 - - [01/Jun/2024:03:30:11 +0000] "GET / HTTP/1.0" 200 615 "-" "curl/7.29.0" "127.0.0.1"

[root@registrator ~]# docker logs -f test-05

192.168.93.165 - - [01/Jun/2024:03:30:12 +0000] "GET / HTTP/1.0" 200 615 "-" "curl/7.29.0" "127.0.0.1"
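The one-request-per-container pattern in these logs is what nginx's default round-robin balancing gives when all upstream servers have equal weight. The sketch below is a simplified model of that rotation, not nginx's actual implementation; the function name `round_robin` is made up for illustration.

```shell
# Print which backend would serve each of N requests under plain round-robin.
# usage: round_robin <requests> <backend>...
round_robin() {
  local requests="$1"
  shift
  [ "$#" -gt 0 ] || return 1   # at least one backend is required
  local i=1
  local first
  while [ "$i" -le "$requests" ]; do
    echo "request $i -> $1"
    # Rotate the list: move the backend just used to the end.
    first="$1"
    shift
    set -- "$@" "$first"
    i=$(( i + 1 ))
  done
}

round_robin 6 192.168.93.166:83 192.168.93.166:84 192.168.93.166:85
```

With the three backends from the generated configuration, requests 1 to 3 go to ports 83, 84, and 85 in turn, and request 4 starts again at port 83.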
