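Contents

I. Virtual machine preparation

II. Environment preparation on all nodes

1. Configure hosts resolution on all nodes

2. Set the hostname on each node

3. Install Docker on all nodes

4. Enable Linux kernel IP forwarding for Docker on all nodes

5. Configure Docker on all nodes

6. Disable swap on all nodes

7. Check all nodes for conflicting NIC hardware IDs

8. Allow iptables to see bridged traffic on all nodes

9. Disable the firewall and SELinux on all nodes

III. Install the Harbor image registry on k8s1

1. Install Harbor

2. Verify the installation

IV. Deploy k8s with kubeadm

1. Install the deployment components on all nodes

2. Initialize the master node (k8s1)

3. Verify the master node components

4. Join the worker nodes to the k8s cluster

5. Check the nodes at this point

6. Deploy the network plugin from the master node

7. Check whether the node status changes from NotReady to Ready

8. Bonus: command completion

V. Troubleshooting

Error 1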

For a summary of other ways to set up k8s, see the CSDN blog post "k8s搭建-多种方式汇总".

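I. Virtual machine preparation

This learning environment uses three CentOS 7 virtual machines:

| Hostname | IP address | Resources | Notes |
| --- | --- | --- | --- |
| k8s1 | 10.128.175.251 | 2 cores, 4 GB | master node, Harbor image registry |
| k8s2 | 10.128.173.94 | 2 cores, 4 GB | worker node |
| k8s3 | 10.128.175.196 | 2 cores, 4 GB | worker node |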

II. Environment preparation on all nodes

1. Configure hosts resolution on all nodes

cat >> /etc/hosts <<EOF
10.128.175.251 k8s1 harbor.oslee.com
10.128.173.94 k8s2
10.128.175.196 k8s3
EOF
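Running the `cat >> /etc/hosts` step twice leaves duplicate entries. The following is a minimal sketch of an idempotent variant; `add_host_entry` is a hypothetical helper name, not part of the original steps, and it only checks whether a line already starts with the given IP.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME...
# Appends "IP NAME..." to FILE unless some line already has IP as its first field.
# (Hypothetical helper for illustration; the tutorial itself uses a plain heredoc.)
add_host_entry() {
    _file=$1
    _ip=$2
    shift 2
    # awk exits 0 when the IP is already present, non-zero otherwise
    if ! awk -v ip="$_ip" '$1 == ip { found = 1 } END { exit !found }' "$_file"; then
        printf '%s %s\n' "$_ip" "$*" >> "$_file"
    fi
}

# Example (run as root on every node):
# add_host_entry /etc/hosts 10.128.175.251 k8s1 harbor.oslee.com
# add_host_entry /etc/hosts 10.128.173.94 k8s2
# add_host_entry /etc/hosts 10.128.175.196 k8s3
```

The helper takes the file as a parameter so it can be tried on a scratch copy before it touches /etc/hosts.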

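2. Set the hostname on each node

Run only the matching command on each node:

hostnamectl set-hostname k8s1   # on 10.128.175.251
hostnamectl set-hostname k8s2   # on 10.128.173.94
hostnamectl set-hostname k8s3   # on 10.128.175.196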

3. Install Docker on all nodes

The offline package docker.tar.gz is uploaded to /data beforehand.

[root@k8s1 data]# cd /data
[root@k8s1 data]# ls
docker.tar.gz
[root@k8s1 data]# tar -xvf docker.tar.gz
[root@k8s1 data]# ls
docker.tar.gz download install-docker.sh
[root@k8s1 data]# sh install-docker.sh install

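4. Enable Linux kernel IP forwarding for Docker on all nodes

Docker relies on iptables under the hood. When a container port is mapped to a host port, the host has to translate and forward packets between the container network and the outside, so kernel IP forwarding must be enabled:

echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf
sysctl -p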

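5. Configure Docker on all nodes

Parameter notes:

"registry-mirrors": ["https://embkz39z.mirror.aliyuncs.com"] # Alibaba Cloud registry mirror for faster pulls; you can apply for your own
"insecure-registries": ["http://harbor.oslee.com"] # allows pulling images from the self-hosted Harbor registry over plain HTTP; deploying Harbor is covered later
"exec-opts": ["native.cgroupdriver=systemd"] # kubeadm expects the systemd cgroup driver by default, while Docker defaults to cgroupfs; without this setting the k8s deployment reports an error
"data-root": "/data/lib/docker" # Docker data directory

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://embkz39z.mirror.aliyuncs.com"],
  "insecure-registries": ["http://harbor.oslee.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "data-root": "/data/lib/docker"
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
sudo systemctl enable docker

6. Disable swap on all nodes

# Turn swap off immediately
[root@k8s1 data]# swapoff -a
# Comment out the swap entries in /etc/fstab so swap stays off after a reboot
# (do not combine -n with -i here: only the printed lines would be written back)
[root@k8s1 data]# sed -i '/^[^#]*swap/s@^@#@' /etc/fstab
[root@k8s1 data]# free -h
total used free shared buff/cache available
Mem: 31G 357M 28G 9.1M 1.9G 30G
Swap: 0B 0B 0B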
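Because this step edits /etc/fstab in place, it can be worth rehearsing the sed expression on a copy first. The following is a minimal sketch; `comment_out_swap` is a hypothetical helper name. It deliberately avoids combining `-n` with `-i`, since that combination writes back only the lines printed by `p` and drops the rest of the file.

```shell
#!/bin/sh
# comment_out_swap FSTAB
# Prefixes every not-yet-commented line that mentions "swap" with '#'.
# All other lines are left untouched; a backup is kept as FSTAB.bak.
# (Hypothetical helper for illustration.)
comment_out_swap() {
    sed -i.bak '/^[^#]*swap/s/^/#/' "$1"
}

# Example: rehearse on a copy, inspect the result, then apply for real.
# cp /etc/fstab /tmp/fstab.test
# comment_out_swap /tmp/fstab.test && diff /etc/fstab /tmp/fstab.test
# comment_out_swap /etc/fstab && swapoff -a
```

Running the helper a second time changes nothing, because already commented lines no longer match the address pattern.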

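7. Check all nodes for conflicting NIC hardware IDs

# Run on every node and compare the results; the MAC address and the product_uuid must be unique per node
ifconfig eth0 | grep ether | awk '{print $2}'
cat /sys/class/dmi/id/product_uuid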
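Comparing the values by eye works for three nodes; with more nodes a small filter helps. The following is a minimal sketch in which `find_duplicates` is a hypothetical helper name, and the collection loop in the comment assumes passwordless SSH from k8s1 to every node, which the original steps do not set up.

```shell
#!/bin/sh
# find_duplicates
# Reads "node value" pairs on stdin and prints every value
# that is reported by more than one node (sorted, one per line).
# (Hypothetical helper for illustration.)
find_duplicates() {
    awk '{ count[$2]++ } END { for (v in count) if (count[v] > 1) print v }' | sort
}

# Example (assumes passwordless SSH to each node):
# for n in k8s1 k8s2 k8s3; do
#     printf '%s %s\n' "$n" "$(ssh "$n" cat /sys/class/dmi/id/product_uuid)"
# done | find_duplicates
```

Empty output means no conflict was found among the collected values.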

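8. Allow iptables to see bridged traffic on all nodes

cat > /etc/modules-load.d/k8s.conf << EOF
br_netfilter
EOF
# modules-load.d only takes effect at boot, so load the module now as well
modprobe br_netfilter
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
net.ipv4.ip_forward=1
EOF
sysctl --system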
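kubeadm's preflight checks fail later if these kernel settings are not actually in effect, so it may help to verify them right away. The following is a minimal sketch; `check_bridge_sysctl` is a hypothetical helper name, and the optional directory argument exists only so the check can be exercised against a scratch directory instead of /proc/sys.

```shell
#!/bin/sh
# check_bridge_sysctl [PROC_ROOT]
# Returns 0 only if all three settings read "1" under PROC_ROOT
# (default: /proc/sys); prints one line per problem otherwise.
# (Hypothetical helper for illustration.)
check_bridge_sysctl() {
    _root=${1:-/proc/sys}
    _rc=0
    for _key in net/bridge/bridge-nf-call-iptables \
                net/bridge/bridge-nf-call-ip6tables \
                net/ipv4/ip_forward; do
        if [ ! -r "$_root/$_key" ]; then
            # The bridge entries only exist while br_netfilter is loaded
            echo "missing: $_key (is br_netfilter loaded?)"
            _rc=1
        elif [ "$(cat "$_root/$_key")" != "1" ]; then
            echo "not enabled: $_key"
            _rc=1
        fi
    done
    return "$_rc"
}

# Example: check_bridge_sysctl && echo "kernel settings OK"
```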

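9. Disable the firewall and SELinux on all nodes

#1, stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld
#2, disable SELinux (setenforce 0 takes effect immediately; the config file change applies after a reboot)
setenforce 0
# Match the SELINUX= line by pattern rather than by line number, which can differ between systems
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config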

三、k8s1节点安装harbor镜像仓库

1.安装harbor

#1,创建harbor工作目录

[root@k8s1 harbor]# mkdir -pv /data/harbor

[root@k8s1 harbor]# cd /data/harbor#2,上传harbor本地安装包;

[root@k8s1 harbor]# ls

harbor-offline-installer-v2.3.1.tgz#3,解压harbor安装包

[root@k8s1 harbor]# tar -xvf harbor-offline-installer-v2.3.1.tgz

#4,查看harbor的目录文件

[root@k8s1 harbor]# ll harbor

total 618124

-rw-r--r-- 1 root root 3361 Jul 19 2021 common.sh

-rw-r--r-- 1 root root 632922189 Jul 19 2021 harbor.v2.3.1.tar.gz

-rw-r--r-- 1 root root 7840 Jul 19 2021 harbor.yml.tmpl

-rwxr-xr-x 1 root root 2500 Jul 19 2021 install.sh

-rw-r--r-- 1 root root 11347 Jul 19 2021 LICENSE

-rwxr-xr-x 1 root root 1881 Jul 19 2021 prepare#5,复制修改harbor的配置文件名称

[root@k8s1 harbor]# cp harbor/harbor.yml.tmpl harbor/harbor.yml#6,修改配置文件信息

[root@harbor harbor]# vim harbor/harbor.yml

...

hostname: harbor.oslee.com

...

################################################

#注释掉下面的信息

# https related config

# https:

# # https port for harbor, default is 443

# port: 443

# # The path of cert and key files for nginx

# certificate: /your/certificate/path

# private_key: /your/private/key/path################################################

harbor_admin_password: harbor123

# 启动harbor脚本

[root@k8s1 harbor]# ./harbor/install.sh

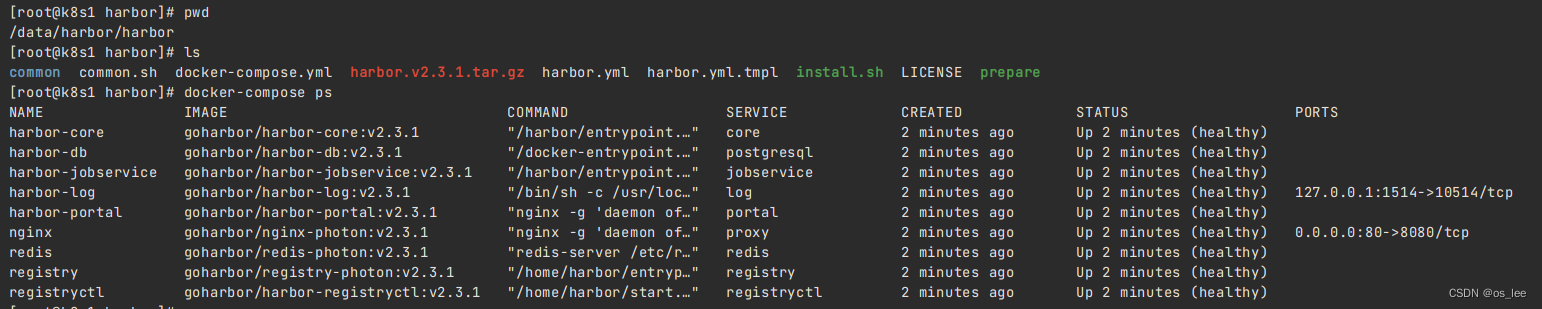

2. Verify the installation

[root@k8s1 harbor]# pwd
/data/harbor/harbor
[root@k8s1 harbor]# ls
common common.sh docker-compose.yml harbor.v2.3.1.tar.gz harbor.yml harbor.yml.tmpl install.sh LICENSE prepare
[root@k8s1 harbor]# docker-compose ps

Then open Harbor in a browser on the local Windows machine.

Note: add the following entry to the local Windows hosts file first:

10.128.175.251 harbor.oslee.com

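IV. Deploy k8s with kubeadm

1. Install the deployment components on all nodes

# Offline local installation
#1, upload and extract the package
[root@k8s1 data]# tar -xvf kubeadm-kubelet-kubectl.tar.gz
#2, install the RPMs locally on all nodes
yum -y localinstall kubeadm-kubelet-kubectl/*.rpm
#3, start kubelet and enable it at boot
systemctl enable --now kubelet.service
#4, check the status (kubelet fails to start at this point; this is expected because the node is not configured yet, and it starts automatically once configuration is complete)
systemctl status kubelet.service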

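2. Initialize the master node (k8s1)

If an earlier initialization failed and you want to start over, reset the node first:

kubeadm reset -f
rm -rf /etc/kubernetes/manifests/kube-apiserver.yaml
rm -rf /etc/kubernetes/manifests/kube-controller-manager.yaml
rm -rf /etc/kubernetes/manifests/kube-scheduler.yaml
rm -rf /etc/kubernetes/manifests/etcd.yaml
rm -rf /var/lib/etcd/*

Initialize the control plane:

[root@k8s1 data]# kubeadm init --kubernetes-version=v1.23.17 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=oslee.com

Parameter notes:

--kubernetes-version=v1.23.17 # Kubernetes version of the control plane
--image-repository registry.aliyuncs.com/google_containers # registry to pull the control-plane images from
--pod-network-cidr=10.100.0.0/16 # CIDR range for pod IPs
--service-cidr=10.200.0.0/16 # CIDR range for Service virtual IPs
--service-dns-domain=oslee.com # DNS domain used for Services in the cluster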
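The same parameters can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long command line. The following is a minimal sketch, assuming the `kubeadm.k8s.io/v1beta3` `ClusterConfiguration` format that kubeadm v1.23 accepts; `write_kubeadm_config` and the output path are hypothetical names, not part of the original steps.

```shell
#!/bin/sh
# write_kubeadm_config FILE
# Writes a ClusterConfiguration equivalent to the kubeadm init flags above.
# (Hypothetical helper for illustration.)
write_kubeadm_config() {
    cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.23.17
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.100.0.0/16
  serviceSubnet: 10.200.0.0/16
  dnsDomain: oslee.com
EOF
}

# Example (the path is an assumption):
# write_kubeadm_config /data/kubeadm-config.yaml
# kubeadm init --config /data/kubeadm-config.yaml
```

When `--config` is used, the networking values come from the file, so the corresponding command-line flags are left out.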

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.35.8.50:6443 --token 19g89m.8susoikd7tl2c2n8 \
--discovery-token-ca-cert-hash sha256:a4342264f0142b43a1ac1f7a1b66aafd04f915194d5298eb96b7b2d5bc292687

# Copy the admin kubeconfig (cluster credentials) into the home directory
[root@k8s1 data]# mkdir -p $HOME/.kube
[root@k8s1 data]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@k8s1 data]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

3. Verify the master node components

#1, check the master components
[root@k8s1 data]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME STATUS MESSAGE ERROR
scheduler Healthy ok
controller-manager Healthy ok
etcd-0 Healthy {"health":"true","reason":""}

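4. Join the worker nodes to the k8s cluster

# Printed by the master initialization above; run it on each worker node
kubeadm join 10.35.8.50:6443 --token 19g89m.8susoikd7tl2c2n8 \
--discovery-token-ca-cert-hash sha256:a4342264f0142b43a1ac1f7a1b66aafd04f915194d5298eb96b7b2d5bc292687
# If the token has expired, generate a new join command on the master
kubeadm token create --print-join-command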

5. Check the nodes at this point

[root@k8s1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s1 NotReady control-plane,master 22m v1.23.17
k8s2 NotReady <none> 4m10s v1.23.17
k8s3 NotReady <none> 15s v1.23.17

The nodes report NotReady because no CNI network plugin has been deployed yet.

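6. Deploy the network plugin from the master node

#1, upload the network plugin manifest
[root@k8s1 data]# ls kube-flannel.yml
kube-flannel.yml
#2, edit the manifest so that "Network" matches the --pod-network-cidr passed to kubeadm init
[root@k8s1 data]# vim kube-flannel.yml
net-conf.json: |
  {
    "Network": "10.100.0.0/16",
    "Backend": {
      "Type": "vxlan"
    }
  }
#3, deploy the flannel components
[root@k8s1 data]# kubectl apply -f kube-flannel.yml
#4, check that the flannel pods were created (there should be one on every node)
[root@k8s1 data]# kubectl get pods -A -o wide | grep flannel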
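The upstream flannel manifest ships with `10.244.0.0/16` as its default network, so forgetting the edit leaves flannel and kubeadm disagreeing about the pod range. The following is a minimal sketch of a pre-apply check; `flannel_network` and `check_flannel_cidr` are hypothetical helper names, and the sed pattern assumes the `"Network": "..."` pair sits on a single line, as in the snippet above.

```shell
#!/bin/sh
# flannel_network FILE
# Prints the first "Network" value found in the manifest's net-conf.json block.
# (Hypothetical helper for illustration.)
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# check_flannel_cidr FILE CIDR
# Succeeds only if the manifest's Network equals the given pod CIDR.
check_flannel_cidr() {
    [ "$(flannel_network "$1")" = "$2" ]
}

# Example:
# check_flannel_cidr kube-flannel.yml 10.100.0.0/16 \
#     && kubectl apply -f kube-flannel.yml \
#     || echo "Network in kube-flannel.yml does not match the pod CIDR"
```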

7. Check whether the node status changes from NotReady to Ready

[root@k8s1 data]# watch -n 2 kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s1 Ready control-plane,master 7m41s v1.23.17
k8s2 Ready <none> 4m33s v1.23.17
k8s3 Ready <none> 3m13s v1.23.17
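Instead of watching the table by eye, the output of `kubectl get nodes` can be filtered for nodes that are not Ready yet. The following is a minimal sketch; `not_ready_nodes` is a hypothetical helper name, and it treats any STATUS other than exactly `Ready` (including `Ready,SchedulingDisabled`) as not ready.

```shell
#!/bin/sh
# not_ready_nodes
# Reads `kubectl get nodes` output on stdin, skips the header line,
# and prints the name of every node whose STATUS column is not "Ready".
# (Hypothetical helper for illustration.)
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example:
# kubectl get nodes | not_ready_nodes
```

Empty output means every listed node reports Ready. `kubectl wait --for=condition=Ready nodes --all --timeout=300s` is an alternative that blocks until the nodes are ready or the timeout expires.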

8. Bonus: command completion

yum -y install bash-completion
kubectl completion bash > ~/.kube/completion.bash.inc
echo "source '$HOME/.kube/completion.bash.inc'" >> $HOME/.bash_profile
source $HOME/.bash_profile

V. Troubleshooting

Error 1

[preflight] Running pre-flight checks
[WARNING Hostname]: hostname "k8s2" could not be reached
[WARNING Hostname]: hostname "k8s2": lookup k8s2 on 10.128.86.49:53: no such host
error execution phase preflight: [preflight] Some fatal errors occurred:
[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1
[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
To see the stack trace of this error execute with --v=5 or higher

The hostname warnings are non-fatal; they show that k8s2 does not resolve on this node, which the /etc/hosts entries from the environment preparation address. The fatal error is the bridge-nf-call-iptables setting.

# Temporary fix
echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables
echo "1" > /proc/sys/net/bridge/bridge-nf-call-ip6tables
# Permanent fix
## Add the following to /etc/sysctl.conf:
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
## Then run sysctl -p

If sysctl reports a missing file, for example:

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

check whether the kernel module has been loaded.

## Load the module
modprobe br_netfilter
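Whether the module is loaded can be checked before running `modprobe`. The following is a minimal sketch that looks the module up in /proc/modules; `module_loaded` is a hypothetical helper name, and the optional second argument exists only so the lookup can be tried against a sample file.

```shell
#!/bin/sh
# module_loaded NAME [MODULES_FILE]
# Succeeds if NAME appears as the first field of a line in MODULES_FILE
# (default: /proc/modules, the kernel's list of loaded modules).
# (Hypothetical helper for illustration.)
module_loaded() {
    awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}

# Example:
# module_loaded br_netfilter || modprobe br_netfilter
```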
