#include <opencv2/core.hpp> // 包含OpenCV核心功能的头文件

#include <opencv2/imgproc.hpp> // 包含OpenCV图像处理功能的头文件

#include <opencv2/features2d.hpp> // 包含OpenCV特征检测相关功能的头文件

#include <opencv2/highgui.hpp> // 包含OpenCV的GUI功能,如窗口显示的头文件

#include <opencv2/calib3d.hpp> // 包含OpenCV进行相机标定和三维重建功能的头文件

#include <iostream> // 包含标准输入输出流库的头文件

#include <iomanip> // 包含输入输出流格式设置的头文件using namespace std; // 使用标准命名空间

using namespace cv; // 使用OpenCV命名空间// 声明帮助函数,该函数会输出使用本程序的方式

static void help(char** argv)

{cout<< "This is a sample usage of AffineFeature detector/extractor.\n"<< "And this is a C++ version of samples/python/asift.py\n"<< "Usage: " << argv[0] << "\n"// 以下是该程序的参数说明<< " [ --feature=<sift|orb|brisk> ] # Feature to use.\n"<< " [ --flann ] # use Flann-based matcher instead of bruteforce.\n"<< " [ --maxlines=<number(50 as default)> ] # The maximum number of lines in visualizing the matching result.\n"<< " [ --image1=<image1(aero1.jpg as default)> ]\n"<< " [ --image2=<image2(aero3.jpg as default)> ] # Path to images to compare."<< endl;

}// 声明计时器函数,用于计算操作的耗时

static double timer()

{return getTickCount() / getTickFrequency();

}// 程序的主函数,argc是参数数量,argv是参数列表

int main(int argc, char** argv)

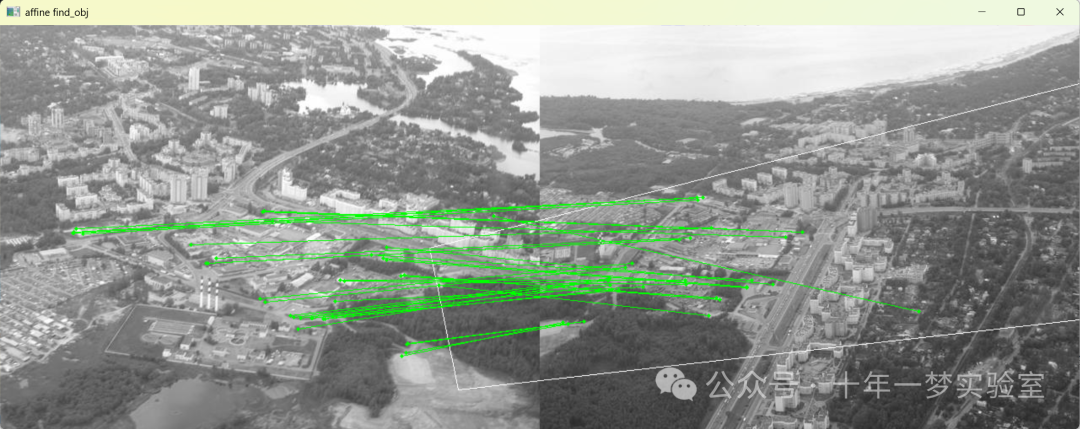

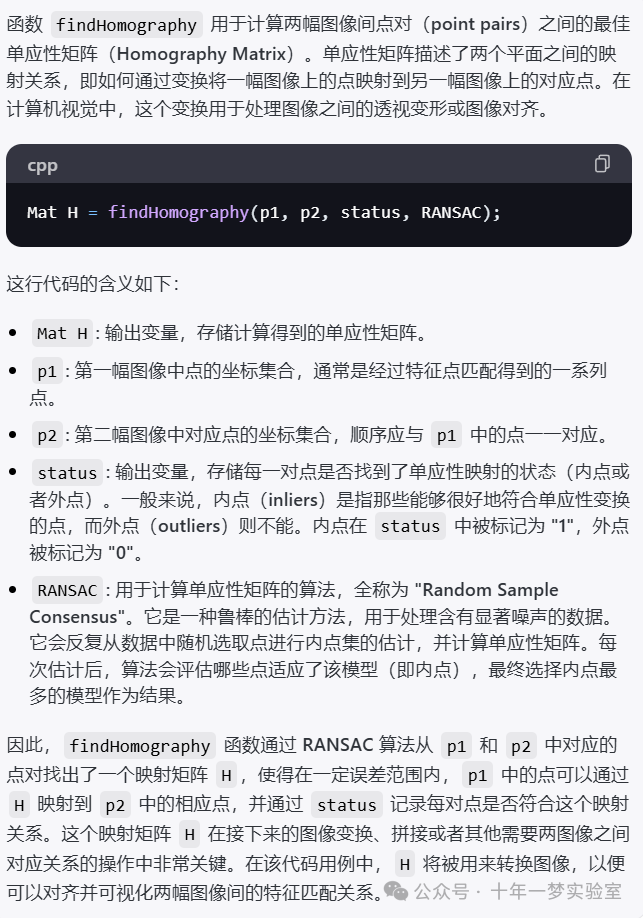

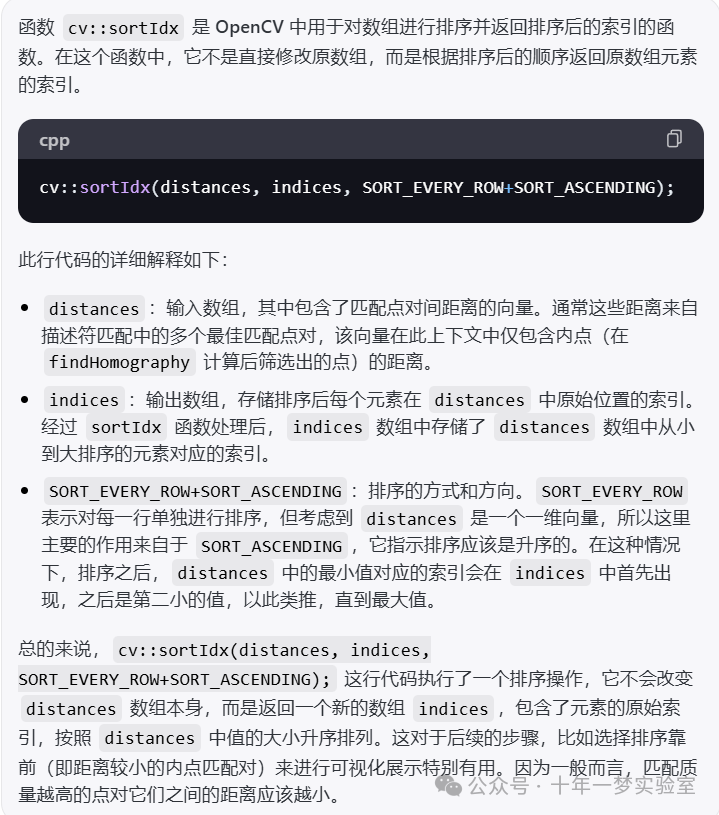

{vector<String> fileName; // 存储文件名的字符串向量// 使用OpenCV的命令行解析器解析输入的命令行参数cv::CommandLineParser parser(argc, argv,"{help h ||}""{feature|brisk|}""{flann||}""{maxlines|50|}""{image1|aero1.jpg|}{image2|aero3.jpg|}");// 如果用户请求帮助,调用help函数并退出程序if (parser.has("help")){help(argv);return 0;}// 从解析器中获取输入的参数string feature = parser.get<string>("feature");bool useFlann = parser.has("flann");int maxlines = parser.get<int>("maxlines");// 查找并存储输入的图像文件路径fileName.push_back(samples::findFile(parser.get<string>("image1")));fileName.push_back(samples::findFile(parser.get<string>("image2")));// 检查参数是否有误if (!parser.check()){parser.printErrors();cout << "See --help (or missing '=' between argument name and value?)" << endl;return 1;}// 读取图像,并将其转换为灰度图Mat img1 = imread(fileName[0], IMREAD_GRAYSCALE);Mat img2 = imread(fileName[1], IMREAD_GRAYSCALE);// 确保图像成功加载if (img1.empty()){cerr << "Image " << fileName[0] << " is empty or cannot be found" << endl;return 1;}if (img2.empty()){cerr << "Image " << fileName[1] << " is empty or cannot be found" << endl;return 1;}// 声明特征检测器和描述符匹配器的指针Ptr<Feature2D> backend;Ptr<DescriptorMatcher> matcher;// 根据用户选择初始化特征检测器和匹配器if (feature == "sift"){backend = SIFT::create();if (useFlann)matcher = DescriptorMatcher::create("FlannBased");elsematcher = DescriptorMatcher::create("BruteForce");}else if (feature == "orb"){backend = ORB::create();if (useFlann)matcher = makePtr<FlannBasedMatcher>(makePtr<flann::LshIndexParams>(6, 12, 1));elsematcher = DescriptorMatcher::create("BruteForce-Hamming");}else if (feature == "brisk"){backend = BRISK::create();if (useFlann)matcher = makePtr<FlannBasedMatcher>(makePtr<flann::LshIndexParams>(6, 12, 1));elsematcher = DescriptorMatcher::create("BruteForce-Hamming");}else{cerr << feature << " is not supported. See --help" << endl;return 1;}// 提取特征点和描述符,并进行匹配cout << "extracting with " << feature << "..." 
<< endl;Ptr<AffineFeature> ext = AffineFeature::create(backend);vector<KeyPoint> kp1, kp2;Mat desc1, desc2;ext->detectAndCompute(img1, Mat(), kp1, desc1);ext->detectAndCompute(img2, Mat(), kp2, desc2);cout << "img1 - " << kp1.size() << " features, "<< "img2 - " << kp2.size() << " features"<< endl;cout << "matching with " << (useFlann ? "flann" : "bruteforce") << "..." << endl;double start = timer(); // 开始计时// 匹配特征点,并筛选出好的匹配vector< vector<DMatch> > rawMatches;vector<Point2f> p1, p2;vector<float> distances;matcher->knnMatch(desc1, desc2, rawMatches, 2);// 筛选出好的匹配点for (size_t i = 0; i < rawMatches.size(); i++){const vector<DMatch>& m = rawMatches[i];if (m.size() == 2 && m[0].distance < m[1].distance * 0.75){p1.push_back(kp1[m[0].queryIdx].pt);p2.push_back(kp2[m[0].trainIdx].pt);distances.push_back(m[0].distance);}}// 利用单应性计算匹配点对的状态vector<uchar> status; // 创建一个uchar类型的向量status,用来存储每对匹配点是否是内点的状态vector< pair<Point2f, Point2f> > pointPairs; // 创建一个存储匹配点对(两个图像中匹配的点)的vectorMat H = findHomography(p1, p2, status, RANSAC); // 利用RANSAC算法计算从图像1到图像2的单应性矩阵Hint inliers = 0; // 初始化内点数量计数器// 遍历status向量,统计内点数量并存储这些点对for (size_t i = 0; i < status.size(); i++){// 如果status向量中的元素为true,则表示该匹配点对是内点if (status[i]){pointPairs.push_back(make_pair(p1[i], p2[i])); // 将内点对添加到pointPairs向量中distances[inliers] = distances[i]; // 将对应内点的距离存储到distances向量中// CV_Assert(inliers <= (int)i); // 断言inliers的值应小于等于当前索引,通常用于调试inliers++; // 内点数量加一}}distances.resize(inliers); // 重新调整distances向量的大小以匹配内点的数量// 输出执行时间cout << "execution time: " << fixed << setprecision(2) << (timer()-start)*1000 << " ms" << endl;// 输出内点与匹配点对的比例cout << inliers << " / " << status.size() << " inliers/matched" << endl;// 可视化匹配结果前的准备工作cout << "visualizing..." 
<< endl;vector<int> indices(inliers); // 创建一个大小等于内点数量的整数型向量indices,用于存储排序后的索引// 将distances向量中元素的索引按照距离从小到大排序并存入indices向量cv::sortIdx(distances, indices, SORT_EVERY_ROW+SORT_ASCENDING);// 创建可视化图像并绘制匹配的特征点int h1 = img1.size().height;int w1 = img1.size().width;int h2 = img2.size().height;int w2 = img2.size().width;Mat vis = Mat::zeros(max(h1, h2), w1+w2, CV_8U);img1.copyTo(Mat(vis, Rect(0, 0, w1, h1)));img2.copyTo(Mat(vis, Rect(w1, 0, w2, h2)));cvtColor(vis, vis, COLOR_GRAY2BGR);vector<Point2f> corners(4);corners[0] = Point2f(0, 0);corners[1] = Point2f((float)w1, 0);corners[2] = Point2f((float)w1, (float)h1);corners[3] = Point2f(0, (float)h1);vector<Point2i> icorners;perspectiveTransform(corners, corners, H); // 对图像1的四个角进行单应性变换transform(corners, corners, Matx23f(1,0,(float)w1,0,1,0)); // 将变换后的角点移到图像2的右侧Mat(corners).convertTo(icorners, CV_32S); // 将角落点的类型转化为整数polylines(vis, icorners, true, Scalar(255,255,255)); // 在可视化图像中绘制边界线// 绘制前maxlines个的匹配对for (int i = 0; i < min(inliers, maxlines); i++){int idx = indices[i];const Point2f& pi1 = pointPairs[idx].first;const Point2f& pi2 = pointPairs[idx].second;circle(vis, pi1, 2, Scalar(0,255,0), -1); // 绘制圆点circle(vis, pi2 + Point2f((float)w1,0), 2, Scalar(0,255,0), -1); // 在图像2相应的位置绘制圆点line(vis, pi1, pi2 + Point2f((float)w1,0), Scalar(0,255,0)); // 绘制连线}if (inliers > maxlines)cout << "only " << maxlines << " inliers are visualized" << endl;imshow("affine find_obj", vis); // 显示最终的可视化结果窗口// 当存在更多的匹配时,输出提示信息// Mat vis2 = Mat::zeros(max(h1, h2), w1+w2, CV_8U); // 创建另一个可视化用的空白图像// Mat warp1; // 存储变换后图像的矩阵// warpPerspective(img1, warp1, H, Size(w1, h1)); // 对图像1应用单应性变换// warp1.copyTo(Mat(vis2, Rect(0, 0, w1, h1))); // 将变换后的图像1复制到可视化图像的左半边// img2.copyTo(Mat(vis2, Rect(w1, 0, w2, h2))); // 将图像2复制到可视化图像的右半边// imshow("warped", vis2); // 显示变换后图像与图像2的对比窗口waitKey(); // 等待任意键按下cout << "done" << endl; // 输出完成提示return 0; // 程序结束

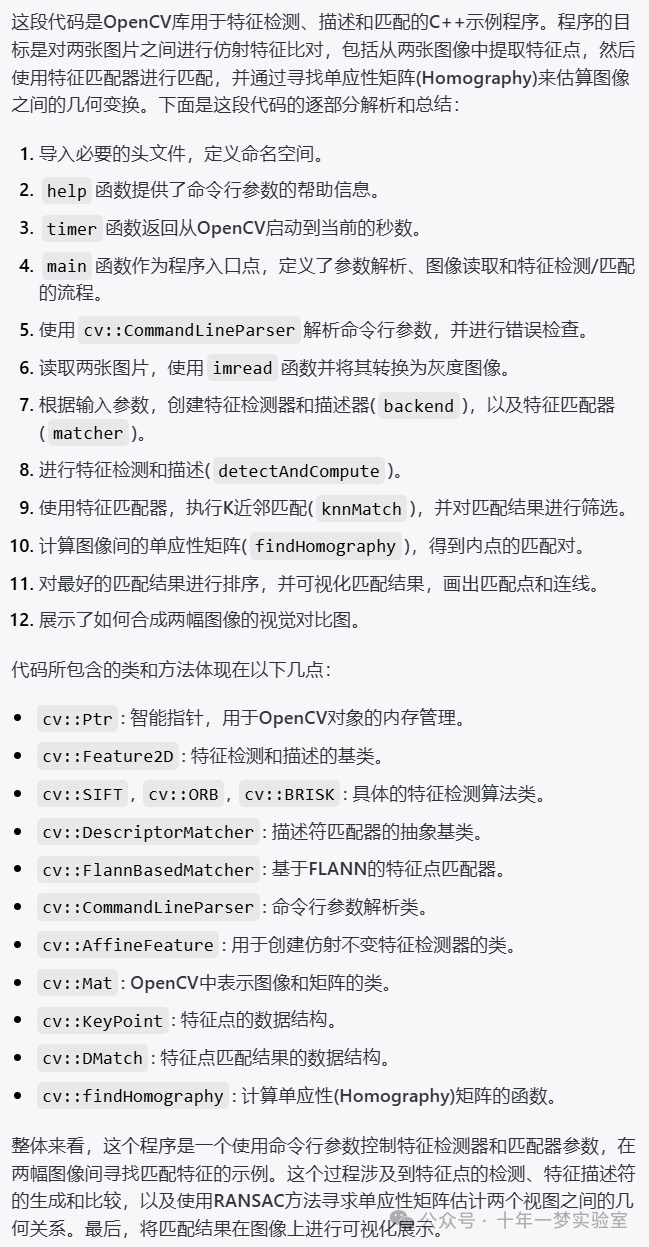

}此段C++代码的主要功能是载入两张图像,通过OpenCV库进行特征点检测和匹配,然后通过单应性变换计算两图像之间的匹配关系,并将匹配结果可视化显示出来。用户可以指定不同的特征检测算法(如SIFT、ORB、BRISK等)以及是否使用FLANN库进行近似最邻近搜索而不是暴力匹配。代码结束时还会显示执行时间和匹配对的数量。

Terminal output:

```
img1 - 39607 features, img2 - 24674 features
matching with bruteforce...
execution time: 35513.65 ms
41 / 105 inliers/matched
visualizing...
```
