LLM在SFT之后会产生大量的冗余参数(delta参数),阿里团队提出DARE方法来消除delta参数,并将其合并到PRE模型中,从而实现多源模型能力的吸收。

DARE无需GPU重新训练,其思路非常简单,就跟dropout类似:

m t ∼ Bernoulli ( p ) δ ~ t = ( 1 − m t ) ⊙ δ t δ ^ t = δ ~ t / ( 1 − p ) θ D A R E t = δ ^ t + θ P R E \begin{gathered} \boldsymbol{m}^t \sim \operatorname{Bernoulli}(p) \\ \widetilde{\boldsymbol{\delta}}^t=\left(\mathbf{1}-\boldsymbol{m}^t\right) \odot \boldsymbol{\delta}^t \\ \hat{\boldsymbol{\delta}}^t=\widetilde{\boldsymbol{\delta}}^t /(1-p) \\ \boldsymbol{\theta}_{\mathrm{DARE}}^t=\hat{\boldsymbol{\delta}}^t+\boldsymbol{\theta}_{\mathrm{PRE}} \end{gathered} mt∼Bernoulli(p)δ t=(1−mt)⊙δtδ^t=δ t/(1−p)θDAREt=δ^t+θPRE

两个步骤:

- drop:随机mask参数为0

- rescale:对保存的参数rescale,这样可以保证神经元期望值不变: E n o t m a s k = x , E m a s k = p ∗ x p E_{not_{mask}}=x,E_{mask}=\frac{p*x}{p} Enotmask=x,Emask=pp∗x

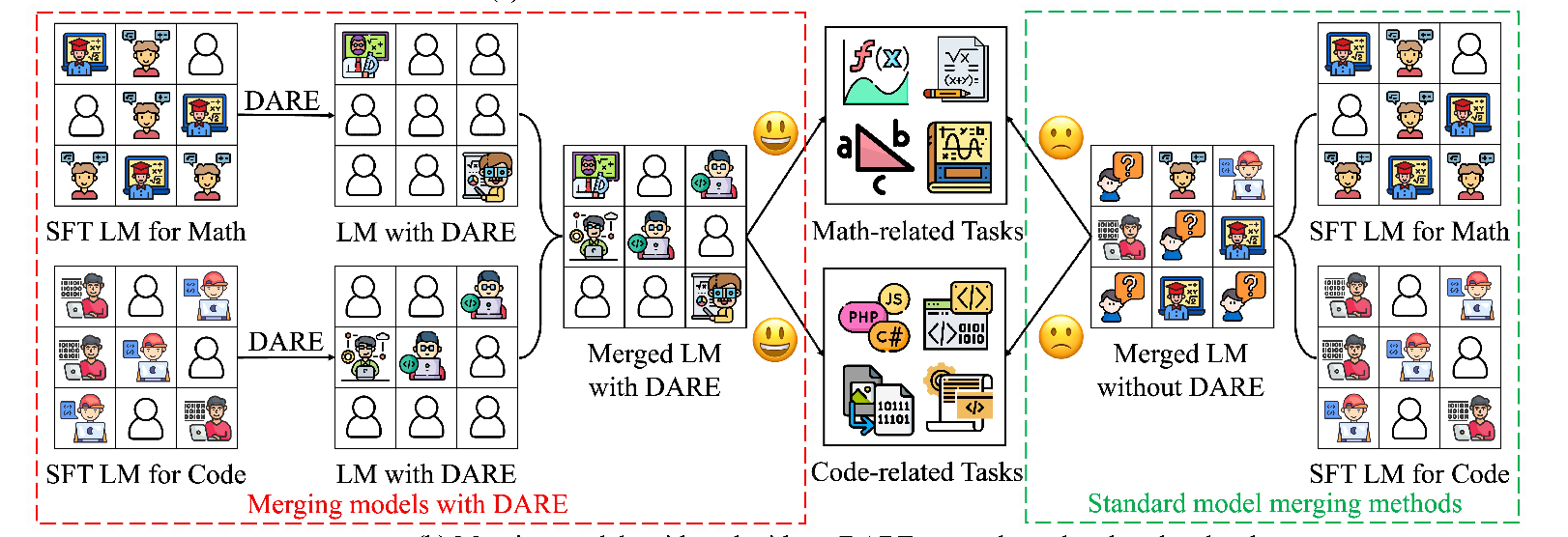

传统的模型融合只是对神经元进行加权求和,这样会导致模型能力骤降。DARE方法通过dropout避免了这种问题。

多源模型融合

θ D A R E t k = DARE ( θ S F T t k , θ P R E ) , for 1 ≤ k ≤ K , θ M = θ P R E + λ ⋅ ∑ k = 1 K δ ^ t k = θ P R E + λ ⋅ ∑ k = 1 K ( θ D A R E t k − θ P R E ) . \begin{gathered} \boldsymbol{\theta}_{\mathrm{DARE}}^{t_k}=\operatorname{DARE}\left(\boldsymbol{\theta}_{\mathrm{SFT}}^{t_k}, \boldsymbol{\theta}_{\mathrm{PRE}}\right), \text { for } 1 \leq k \leq K, \\ \boldsymbol{\theta}_{\mathrm{M}}=\boldsymbol{\theta}_{\mathrm{PRE}}+\lambda \cdot \sum_{k=1}^K \hat{\boldsymbol{\delta}}^{t_k}=\boldsymbol{\theta}_{\mathrm{PRE}}+\lambda \cdot \sum_{k=1}^K\left(\boldsymbol{\theta}_{\mathrm{DARE}}^{t_k}-\boldsymbol{\theta}_{\mathrm{PRE}}\right) . \end{gathered} θDAREtk=DARE(θSFTtk,θPRE), for 1≤k≤K,θM=θPRE+λ⋅k=1∑Kδ^tk=θPRE+λ⋅k=1∑K(θDAREtk−θPRE).

流程图:

实验结果

参考

- 丢弃99%的参数!阿里团队提出语言模型合体术,性能暴涨且无需重新训练和GPU

- MergeLM

)

)

中QKV的含义理解)