C# with ONNX Runtime: YOWOv2 video action detection

Contents

Introduction

Results

Model information

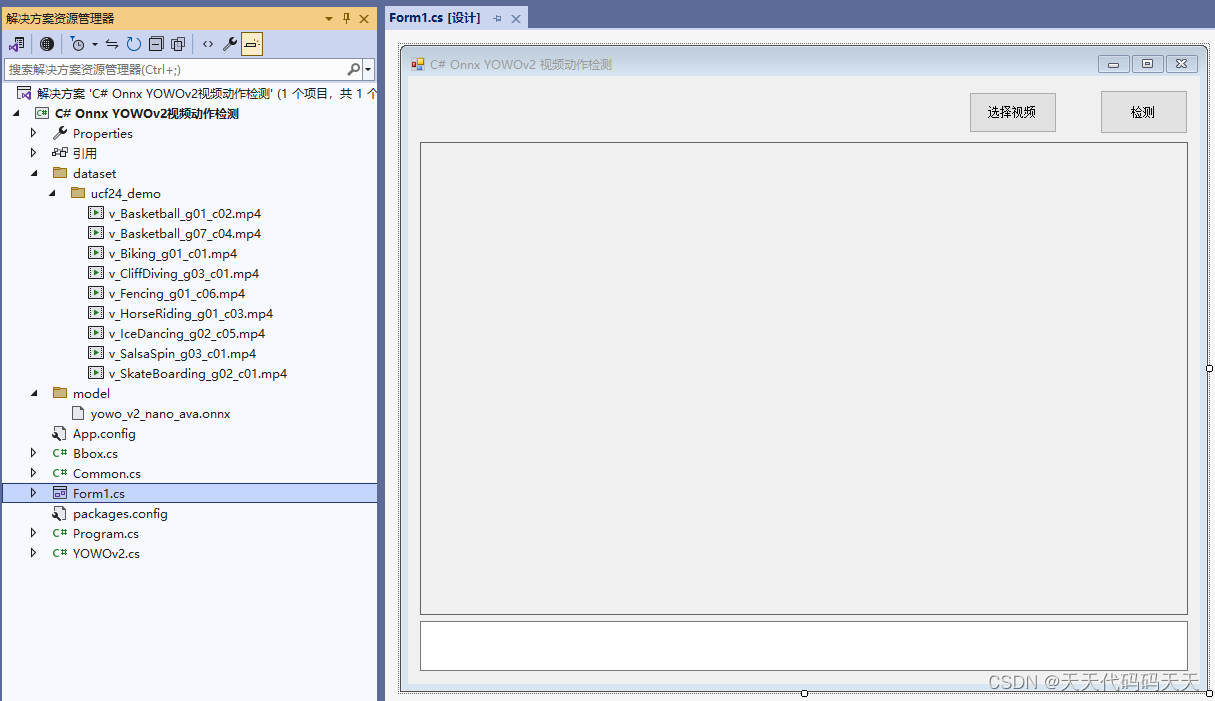

Project

Code

Form1.cs

YOWOv2.cs

Download

Introduction

YOWOv2: A Stronger yet Efficient Multi-level Detection Framework for Real-time Spatio-temporal Action Detection

Reference implementation

https://github.com/hpc203/YOWOv2-video-action-detect-onnxrun

Training source code

GitHub - yjh0410/YOWOv2: The second generation of YOWO action detector.

Introduction to YOWOv2

https://blog.csdn.net/weixin_46687145/article/details/136488363

Results

C# Onnx YOWOv2 video action detection

Model information

Model Properties

-------------------------

---------------------------------------------------------------

Inputs

-------------------------

name:input

tensor:Float[1, 3, 16, 224, 224]

---------------------------------------------------------------

Outputs

-------------------------

name:conf_preds0

tensor:Float[1, 784, 1]

name:conf_preds1

tensor:Float[1, 196, 1]

name:conf_preds2

tensor:Float[1, 49, 1]

name:cls_preds0

tensor:Float[1, 784, 80]

name:cls_preds1

tensor:Float[1, 196, 80]

name:cls_preds2

tensor:Float[1, 49, 80]

name:reg_preds0

tensor:Float[1, 784, 4]

name:reg_preds1

tensor:Float[1, 196, 4]

name:reg_preds2

tensor:Float[1, 49, 4]

---------------------------------------------------------------
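The row counts of the three output levels (784, 196 and 49) can be checked by hand. The sketch below assumes they are the grid-cell counts of the 224 x 224 input at the strides 8, 16 and 32 that YOWOv2.cs hard-codes, computed with the same ceiling division that file uses. `GridSizes` and `CellCount` are illustrative names, not part of the project.

```csharp
using System;

// Illustrative helper (not from the article's project).
public static class GridSizes
{
    // Number of grid cells for one detection level:
    // ceil(inputHeight / stride) * ceil(inputWidth / stride).
    public static int CellCount(int inputHeight, int inputWidth, int stride)
    {
        int featH = (int)Math.Ceiling((double)inputHeight / stride);
        int featW = (int)Math.Ceiling((double)inputWidth / stride);
        return featH * featW;
    }
}
```

`GridSizes.CellCount(224, 224, 8)` gives 28 x 28 = 784, stride 16 gives 14 x 14 = 196, and stride 32 gives 7 x 7 = 49, which matches the second dimension of conf_preds0, conf_preds1 and conf_preds2.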

Project

Code

Form1.cs

using OpenCvSharp;
using OpenCvSharp.Extensions;
using System;
using System.Collections.Generic;
using System.Windows.Forms;

namespace C__Onnx_YOWOv2视频动作检测
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        YOWOv2 mynet = new YOWOv2("model/yowo_v2_nano_ava.onnx", "ava");
        string videopath = "";
        Mat currentFrame = new Mat();
        VideoCapture capture;

        // Runs detection on the selected video, writes result.mp4, then plays it back.
        private void button1_Click(object sender, EventArgs e)
        {
            if (videopath == "")
            {
                return;
            }

            int len_clip = mynet.len_clip;
            float vis_thresh = 0.2f;
            textBox1.Text = "Detecting, please wait...";
            //videopath = "dataset/ucf24_demo/v_Basketball_g01_c02.mp4";
            string savepath = "result.mp4";

            VideoCapture vcapture = new VideoCapture(videopath);
            if (!vcapture.IsOpened())
            {
                MessageBox.Show("Failed to open the video file");
                return;
            }

            VideoWriter vwriter = new VideoWriter(savepath, FourCC.X264, vcapture.Fps, new OpenCvSharp.Size(vcapture.FrameWidth, vcapture.FrameHeight));
            Mat frame = new Mat();
            List<Mat> video_clip = new List<Mat>();
            int index = 0;
            while (vcapture.Read(frame))
            {
                if (frame.Empty())
                {
                    MessageBox.Show("Failed to read a video frame");
                    vcapture.Release();
                    vwriter.Release();
                    return;
                }

                // Read() reuses the same Mat object on every iteration, so the clip must store
                // clones; otherwise all len_clip entries would point at the latest frame.
                if (video_clip.Count <= 0)
                {
                    // Pad the clip with copies of the first frame.
                    for (int i = 0; i < len_clip; i++)
                    {
                        video_clip.Add(frame.Clone());
                    }
                }
                video_clip.Add(frame.Clone());
                video_clip[0].Dispose();
                video_clip.RemoveAt(0);

                if (mynet.multi_hot)
                {
                    List<Bbox> boxes = new List<Bbox>();
                    List<float> det_conf = new List<float>();
                    List<List<float>> cls_conf = new List<List<float>>();
                    // keep_inds holds the indices of the valid detection boxes in the lists.
                    List<int> keep_inds = mynet.detect_multi_hot(video_clip, boxes, det_conf, cls_conf);
                    Mat dstimg = Common.vis_multi_hot(frame, boxes, det_conf, cls_conf, keep_inds, vis_thresh);
                    //Cv2.ImWrite("img/" + (index++).ToString() + ".jpg", dstimg);
                    vwriter.Write(dstimg);
                    dstimg.Dispose();
                }
                else
                {
                    List<Bbox> boxes = new List<Bbox>();
                    List<float> det_conf = new List<float>();
                    List<int> cls_id = new List<int>();
                    // keep_inds holds the indices of the valid detection boxes in the lists.
                    List<int> keep_inds = mynet.detect_one_hot(video_clip, boxes, det_conf, cls_id);
                    Mat dstimg = Common.vis_one_hot(frame, boxes, det_conf, cls_id, keep_inds, vis_thresh, 0.4f);
                    vwriter.Write(dstimg);
                    dstimg.Dispose();
                }
            }

            // Release the cloned frames and the video handles.
            foreach (Mat clipFrame in video_clip)
            {
                clipFrame.Dispose();
            }
            frame.Dispose();
            vcapture.Release();
            vwriter.Release();

            MessageBox.Show("Detection finished. Click OK to play the result.");
            textBox1.Text = "Playing result.mp4";
            videopath = "result.mp4";
            capture = new VideoCapture(videopath);
            if (!capture.IsOpened())
            {
                MessageBox.Show("Failed to open the video file");
                return;
            }
            capture.Read(currentFrame);
            if (!currentFrame.Empty())
            {
                pictureBox1.Image = BitmapConverter.ToBitmap(currentFrame);
                timer1.Interval = (int)(1000.0 / capture.Fps);
                timer1.Enabled = true;
            }
        }

        // Lets the user pick an MP4 file and starts playing it.
        private void button2_Click(object sender, EventArgs e)
        {
            OpenFileDialog ofd = new OpenFileDialog();
            ofd.Filter = "Video files MP4 files (*.mp4)|*.mp4";
            ofd.InitialDirectory = Application.StartupPath;
            if (ofd.ShowDialog() == DialogResult.OK)
            {
                videopath = ofd.FileName;
                capture = new VideoCapture(videopath);
                if (!capture.IsOpened())
                {
                    MessageBox.Show("Failed to open the video file");
                    return;
                }
                capture.Read(currentFrame);
                if (!currentFrame.Empty())
                {
                    pictureBox1.Image = BitmapConverter.ToBitmap(currentFrame);
                    timer1.Interval = (int)(1000.0 / capture.Fps);
                    timer1.Enabled = true;
                }
            }
        }

        // Shows the next frame on every tick and stops at the end of the video.
        private void timer1_Tick(object sender, EventArgs e)
        {
            capture.Read(currentFrame);
            if (currentFrame.Empty())
            {
                //pictureBox1.Image = null;
                timer1.Enabled = false;
                capture.Release();
                textBox1.Text = "Playback finished.";
                return;
            }
            pictureBox1.Image = BitmapConverter.ToBitmap(currentFrame);
        }

        // Plays the bundled demo video when the form loads.
        private void Form1_Load(object sender, EventArgs e)
        {
            videopath = "dataset/ucf24_demo/v_Basketball_g01_c02.mp4";
            capture = new VideoCapture(videopath);
            if (!capture.IsOpened())
            {
                MessageBox.Show("Failed to open the video file");
                return;
            }
            textBox1.Text = "Playing v_Basketball_g01_c02.mp4";
            capture.Read(currentFrame);
            if (!currentFrame.Empty())
            {
                pictureBox1.Image = BitmapConverter.ToBitmap(currentFrame);
                timer1.Interval = (int)(1000.0 / capture.Fps);
                timer1.Enabled = true;
            }
        }
    }
}
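The detection loop in button1_Click feeds the model a sliding window of len_clip frames: the first frame is repeated to fill the window, then each new frame pushes the oldest one out. The sketch below isolates that idea in a small generic class so it can be tried without OpenCvSharp. `ClipBuffer<T>` and its `copy` delegate are hypothetical names introduced here for illustration. The delegate is there because VideoCapture.Read writes into the Mat object it is given, so a window of frames should hold an independent copy of each one.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the article's project: keeps the most recent
// `capacity` items, padding with copies of the first item until the window is full.
public sealed class ClipBuffer<T>
{
    private readonly int capacity;
    private readonly Func<T, T> copy;
    private readonly List<T> items = new List<T>();

    public ClipBuffer(int capacity, Func<T, T> copy)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException(nameof(capacity));
        this.capacity = capacity;
        this.copy = copy ?? throw new ArgumentNullException(nameof(copy));
    }

    // Oldest item first, newest item last.
    public IReadOnlyList<T> Items => items;

    public void Push(T item)
    {
        if (items.Count == 0)
        {
            // First push: fill the whole window with copies of the first item.
            for (int i = 0; i < capacity; i++)
            {
                items.Add(copy(item));
            }
        }

        // Append the newest item and drop the oldest, so the size stays at `capacity`.
        items.Add(copy(item));
        T oldest = items[0];
        items.RemoveAt(0);
        if (oldest is IDisposable disposable)
        {
            disposable.Dispose();
        }
    }
}
```

With OpenCvSharp the buffer could be created as `new ClipBuffer<Mat>(16, m => m.Clone())`, calling `Push(frame)` once per decoded frame. With plain integers and a capacity of 3, pushing 1, 2, 3, 4 leaves the window holding 2, 3, 4.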


YOWOv2.cs

using Microsoft.ML.OnnxRuntime;
using Microsoft.ML.OnnxRuntime.Tensors;
using OpenCvSharp;
using System;
using System.Collections.Generic;
using System.Linq;
using System.Runtime.InteropServices;

namespace C__Onnx_YOWOv2视频动作检测
{
    public class YOWOv2
    {
        public int len_clip;
        public bool multi_hot;

        List<float> input_tensor_data = new List<float>();
        int inpWidth;
        int inpHeight;
        float nms_thresh;
        float conf_thresh;
        int num_class;
        int topk = 40;
        int[] strides = new int[] { 8, 16, 32 };
        bool act_pose;

        SessionOptions options;
        InferenceSession onnx_session;

        public YOWOv2(string modelpath, string dataset = "ava_v2.2", float nms_thresh_ = 0.5f, float conf_thresh_ = 0.1f, bool act_pose_ = false)
        {
            // Create the session options; the log level controls how much model-loading information is printed.
            options = new SessionOptions();
            options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;
            options.AppendExecutionProvider_CPU(0); // run on the CPU

            // Create the inference session from the local model file; modelpath is the path to the ONNX model.
            onnx_session = new InferenceSession(modelpath, options);

            this.len_clip = 16;
            this.inpHeight = 224;
            this.inpWidth = 224;
            if (dataset == "ava_v2.2" || dataset == "ava")
            {
                this.num_class = 80;
                this.multi_hot = true;
            }
            else
            {
                this.num_class = 24;
                this.multi_hot = false;
            }
            this.conf_thresh = conf_thresh_;
            this.nms_thresh = nms_thresh_;
            this.act_pose = act_pose_;
        }

        // Splits an interleaved float Mat (H x W x C) into channel-planar data (C, H, W).
        float[] ExtractMat(Mat src)
        {
            OpenCvSharp.Size size = src.Size();
            int channels = src.Channels();
            float[] result = new float[size.Width * size.Height * channels];
            GCHandle resultHandle = default;
            try
            {
                resultHandle = GCHandle.Alloc(result, GCHandleType.Pinned);
                IntPtr resultPtr = resultHandle.AddrOfPinnedObject();
                for (int i = 0; i < channels; ++i)
                {
                    // Wrap the i-th plane of the result array in a Mat header and extract into it.
                    Mat cmat = new Mat(src.Height, src.Width, MatType.CV_32FC1, resultPtr + i * size.Width * size.Height * sizeof(float));
                    Cv2.ExtractChannel(src, cmat, i);
                    cmat.Dispose();
                }
            }
            finally
            {
                resultHandle.Free();
            }
            return result;
        }

        // Resizes the clip frames and packs them into input_tensor_data.
        void preprocess(List<Mat> video_clip)
        {
            int image_area = this.inpHeight * this.inpWidth;
            int chn_area = this.len_clip * image_area;
            // The input tensor is declared as [1, 3, len_clip, H, W], so channel c of frame i
            // is written at offset c * chn_area + i * image_area (channel first, then time).
            float[] buffer = new float[3 * chn_area];
            for (int i = 0; i < this.len_clip; i++)
            {
                Mat resizeimg = new Mat();
                Cv2.Resize(video_clip[i], resizeimg, new Size(this.inpWidth, this.inpHeight));
                resizeimg.ConvertTo(resizeimg, MatType.CV_32FC3);
                float[] data = ExtractMat(resizeimg); // planar (C, H, W) data of this frame
                resizeimg.Dispose();
                for (int c = 0; c < 3; c++)
                {
                    Array.Copy(data, c * image_area, buffer, c * chn_area + i * image_area, image_area);
                }
            }
            input_tensor_data.Clear();
            input_tensor_data.AddRange(buffer);
        }

        // Decodes the outputs of one stride level for the multi-label (AVA) model.
        void generate_proposal_multi_hot(int stride, float[] conf_pred, float[] cls_pred, float[] reg_pred, List<Bbox> boxes, List<float> det_conf, List<List<float>> cls_conf)
        {
            int feat_h = (int)Math.Ceiling((float)this.inpHeight / stride);
            int feat_w = (int)Math.Ceiling((float)this.inpWidth / stride);
            int area = feat_h * feat_w;
            float[] conf_pred_i = new float[area];
            for (int i = 0; i < area; i++)
            {
                conf_pred_i[i] = Common.sigmoid(conf_pred[i]);
            }
            List<int> topk_inds = Common.TopKIndex(conf_pred_i.ToList(), this.topk);
            int length = this.num_class;
            if (this.act_pose)
            {
                length = 14;
            }

            for (int i = 0; i < topk_inds.Count; i++)
            {
                int ind = topk_inds[i];
                if (conf_pred_i[ind] > this.conf_thresh)
                {
                    int row = 0, col = 0;
                    Common.ind2sub(ind, feat_w, feat_h, ref row, ref col);

                    // Box center is the cell center plus the predicted offset; size is exp(prediction), both scaled by the stride.
                    float cx = (col + 0.5f + reg_pred[ind * 4]) * stride;
                    float cy = (row + 0.5f + reg_pred[ind * 4 + 1]) * stride;
                    float w = (float)(Math.Exp(reg_pred[ind * 4 + 2]) * stride);
                    float h = (float)(Math.Exp(reg_pred[ind * 4 + 3]) * stride);
                    boxes.Add(new Bbox((int)(cx - 0.5 * w), (int)(cy - 0.5 * h), (int)(cx + 0.5 * w), (int)(cy + 0.5 * h)));
                    det_conf.Add(conf_pred_i[ind]);

                    float[] cls_conf_i = new float[length];
                    for (int j = 0; j < length; j++)
                    {
                        cls_conf_i[j] = Common.sigmoid(cls_pred[ind * this.num_class + j]);
                    }
                    cls_conf.Add(cls_conf_i.ToList());
                }
            }
        }

        // Decodes the outputs of one stride level for the single-label model.
        void generate_proposal_one_hot(int stride, float[] conf_pred, float[] cls_pred, float[] reg_pred, List<Bbox> boxes, List<float> det_conf, List<int> cls_id)
        {
            int feat_h = (int)Math.Ceiling((float)inpHeight / stride);
            int feat_w = (int)Math.Ceiling((float)inpWidth / stride);
            int area = feat_h * feat_w;

            // Per-cell, per-class score: sqrt(sigmoid(conf) * sigmoid(cls)).
            float[] det_scores_i = new float[area * this.num_class];
            for (int i = 0; i < area; i++)
            {
                for (int j = 0; j < this.num_class; j++)
                {
                    det_scores_i[i * this.num_class + j] = (float)Math.Sqrt(Common.sigmoid(conf_pred[i]) * Common.sigmoid(cls_pred[i * this.num_class + j]));
                }
            }

            int num_topk = Math.Min(this.topk, area);
            List<int> topk_inds = Common.TopKIndex(det_scores_i.ToList(), num_topk);
            for (int i = 0; i < topk_inds.Count; i++)
            {
                int ind = topk_inds[i];
                if (det_scores_i[ind] > this.conf_thresh)
                {
                    det_conf.Add(det_scores_i[ind]);
                    int idx = ind % this.num_class;
                    cls_id.Add(idx);

                    int row_ind = ind / this.num_class;
                    int row = 0, col = 0;
                    Common.ind2sub(row_ind, feat_w, feat_h, ref row, ref col);

                    float cx = (col + 0.5f + reg_pred[row_ind * 4]) * stride;
                    float cy = (row + 0.5f + reg_pred[row_ind * 4 + 1]) * stride;
                    float w = (float)(Math.Exp(reg_pred[row_ind * 4 + 2]) * stride);
                    float h = (float)(Math.Exp(reg_pred[row_ind * 4 + 3]) * stride);
                    boxes.Add(new Bbox((int)(cx - 0.5 * w), (int)(cy - 0.5 * h), (int)(cx + 0.5 * w), (int)(cy + 0.5 * h)));
                }
            }
        }

        public List<int> detect_multi_hot(List<Mat> video_clip, List<Bbox> boxes, List<float> det_conf, List<List<float>> cls_conf)
        {
            if (video_clip.Count != this.len_clip)
            {
                Console.WriteLine("input frame number is not " + this.len_clip);
                throw new Exception("input frame number is not " + this.len_clip);
            }

            int origin_h = video_clip[0].Rows;
            int origin_w = video_clip[0].Cols;
            this.preprocess(video_clip);

            // Input tensor
            Tensor<float> input_tensor = new DenseTensor<float>(input_tensor_data.ToArray(), new[] { 1, 3, this.len_clip, this.inpHeight, this.inpWidth });
            List<NamedOnnxValue> input_container = new List<NamedOnnxValue>
            {
                // Put input_tensor into the input container under the model's input name.
                NamedOnnxValue.CreateFromTensor("input", input_tensor)
            };

            var ort_outputs = onnx_session.Run(input_container).ToArray();
            float[] conf_preds0 = ort_outputs[0].AsTensor<float>().ToArray();
            float[] conf_preds1 = ort_outputs[1].AsTensor<float>().ToArray();
            float[] conf_preds2 = ort_outputs[2].AsTensor<float>().ToArray();
            float[] cls_preds0 = ort_outputs[3].AsTensor<float>().ToArray();
            float[] cls_preds1 = ort_outputs[4].AsTensor<float>().ToArray();
            float[] cls_preds2 = ort_outputs[5].AsTensor<float>().ToArray();
            float[] reg_preds0 = ort_outputs[6].AsTensor<float>().ToArray();
            float[] reg_preds1 = ort_outputs[7].AsTensor<float>().ToArray();
            float[] reg_preds2 = ort_outputs[8].AsTensor<float>().ToArray();

            this.generate_proposal_multi_hot(this.strides[0], conf_preds0, cls_preds0, reg_preds0, boxes, det_conf, cls_conf);
            this.generate_proposal_multi_hot(this.strides[1], conf_preds1, cls_preds1, reg_preds1, boxes, det_conf, cls_conf);
            this.generate_proposal_multi_hot(this.strides[2], conf_preds2, cls_preds2, reg_preds2, boxes, det_conf, cls_conf);

            List<int> keep_inds = Common.multiclass_nms_class_agnostic(boxes, det_conf, this.nms_thresh);

            // Scale the kept boxes from network input coordinates back to the original frame size.
            int max_hw = Math.Max(this.inpHeight, this.inpWidth);
            float ratio_h = (float)((float)origin_h / max_hw);
            float ratio_w = (float)((float)origin_w / max_hw);
            for (int i = 0; i < keep_inds.Count; i++)
            {
                int ind = keep_inds[i];
                boxes[ind].xmin = (int)(boxes[ind].xmin * ratio_w);
                boxes[ind].ymin = (int)(boxes[ind].ymin * ratio_h);
                boxes[ind].xmax = (int)(boxes[ind].xmax * ratio_w);
                boxes[ind].ymax = (int)(boxes[ind].ymax * ratio_h);
            }
            return keep_inds;
        }

        public List<int> detect_one_hot(List<Mat> video_clip, List<Bbox> boxes, List<float> det_conf, List<int> cls_id)
        {
            if (video_clip.Count != this.len_clip)
            {
                Console.WriteLine("input frame number is not " + this.len_clip);
                throw new Exception("input frame number is not " + this.len_clip);
            }

            int origin_h = video_clip[0].Rows;
            int origin_w = video_clip[0].Cols;
            this.preprocess(video_clip);

            // Input tensor
            Tensor<float> input_tensor = new DenseTensor<float>(input_tensor_data.ToArray(), new[] { 1, 3, this.len_clip, this.inpHeight, this.inpWidth });
            List<NamedOnnxValue> input_container = new List<NamedOnnxValue>
            {
                // Put input_tensor into the input container under the model's input name.
                NamedOnnxValue.CreateFromTensor("input", input_tensor)
            };

            var ort_outputs = onnx_session.Run(input_container).ToArray();
            float[] conf_preds0 = ort_outputs[0].AsTensor<float>().ToArray();
            float[] conf_preds1 = ort_outputs[1].AsTensor<float>().ToArray();
            float[] conf_preds2 = ort_outputs[2].AsTensor<float>().ToArray();
            float[] cls_preds0 = ort_outputs[3].AsTensor<float>().ToArray();
            float[] cls_preds1 = ort_outputs[4].AsTensor<float>().ToArray();
            float[] cls_preds2 = ort_outputs[5].AsTensor<float>().ToArray();
            float[] reg_preds0 = ort_outputs[6].AsTensor<float>().ToArray();
            float[] reg_preds1 = ort_outputs[7].AsTensor<float>().ToArray();
            float[] reg_preds2 = ort_outputs[8].AsTensor<float>().ToArray();

            this.generate_proposal_one_hot(this.strides[0], conf_preds0, cls_preds0, reg_preds0, boxes, det_conf, cls_id);
            this.generate_proposal_one_hot(this.strides[1], conf_preds1, cls_preds1, reg_preds1, boxes, det_conf, cls_id);
            this.generate_proposal_one_hot(this.strides[2], conf_preds2, cls_preds2, reg_preds2, boxes, det_conf, cls_id);

            List<int> keep_inds = Common.multiclass_nms_class_aware(boxes, det_conf, cls_id, this.nms_thresh, 24);

            // Scale the kept boxes from network input coordinates back to the original frame size.
            int max_hw = Math.Max(this.inpHeight, this.inpWidth);
            float ratio_h = (float)((float)origin_h / max_hw);
            float ratio_w = (float)((float)origin_w / max_hw);
            for (int i = 0; i < keep_inds.Count; i++)
            {
                int ind = keep_inds[i];
                boxes[ind].xmin = (int)(boxes[ind].xmin * ratio_w);
                boxes[ind].ymin = (int)(boxes[ind].ymin * ratio_h);
                boxes[ind].xmax = (int)(boxes[ind].xmax * ratio_w);
                boxes[ind].ymax = (int)(boxes[ind].ymax * ratio_h);
            }
            return keep_inds;
        }
    }
}
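YOWOv2.cs calls several helpers on a `Common` class and uses a `Bbox` type, neither of which is listed in this article. The sketch below is a guess at what such helpers could look like, inferred only from the call sites: a sigmoid, a top-k index selection, a flat-index to row/column conversion, and a greedy class-agnostic NMS. The names `BboxSketch` and `CommonSketch`, the `out`-parameter signature of `Ind2Sub`, and the row-major grid layout are all assumptions; the project's real implementations may differ.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Assumed box shape: the call sites only show a four-int constructor
// and settable xmin/ymin/xmax/ymax members.
public class BboxSketch
{
    public int xmin, ymin, xmax, ymax;

    public BboxSketch(int xmin, int ymin, int xmax, int ymax)
    {
        this.xmin = xmin; this.ymin = ymin; this.xmax = xmax; this.ymax = ymax;
    }
}

// Hypothetical stand-in for the article's Common class.
public static class CommonSketch
{
    public static float Sigmoid(float x)
    {
        return (float)(1.0 / (1.0 + Math.Exp(-x)));
    }

    // Indices of the k largest scores, highest score first (ties keep index order).
    public static List<int> TopKIndex(List<float> scores, int k)
    {
        return Enumerable.Range(0, scores.Count)
            .OrderByDescending(i => scores[i])
            .Take(k)
            .ToList();
    }

    // Flat index -> (row, col), assuming a row-major grid that is featW cells wide.
    public static void Ind2Sub(int ind, int featW, out int row, out int col)
    {
        row = ind / featW;
        col = ind % featW;
    }

    // Intersection over union of two axis-aligned boxes.
    public static float IoU(BboxSketch a, BboxSketch b)
    {
        int iw = Math.Max(0, Math.Min(a.xmax, b.xmax) - Math.Max(a.xmin, b.xmin));
        int ih = Math.Max(0, Math.Min(a.ymax, b.ymax) - Math.Max(a.ymin, b.ymin));
        float inter = (float)iw * ih;
        float areaA = (float)(a.xmax - a.xmin) * (a.ymax - a.ymin);
        float areaB = (float)(b.xmax - b.xmin) * (b.ymax - b.ymin);
        float union = areaA + areaB - inter;
        return union > 0 ? inter / union : 0f;
    }

    // Greedy class-agnostic NMS: visit boxes from highest to lowest score and keep a box
    // only if its IoU with every box kept so far is at most nmsThresh.
    public static List<int> NmsClassAgnostic(List<BboxSketch> boxes, List<float> scores, float nmsThresh)
    {
        List<int> order = TopKIndex(scores, scores.Count);
        List<int> keep = new List<int>();
        foreach (int i in order)
        {
            bool suppressed = keep.Any(j => IoU(boxes[i], boxes[j]) > nmsThresh);
            if (!suppressed)
            {
                keep.Add(i);
            }
        }
        return keep;
    }
}
```

As in the article's code, the returned list holds indices into the original box list rather than the boxes themselves, which is why the detect methods rescale `boxes[ind]` only for the indices in `keep_inds`.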

Download

Source code download
