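Celery's configuration has a setting called task_routes, which sends different tasks to different queues (in other words, routing), so that each queue can be consumed by its own worker.

The format is:

{'task name': {'queue': 'queue name'}}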

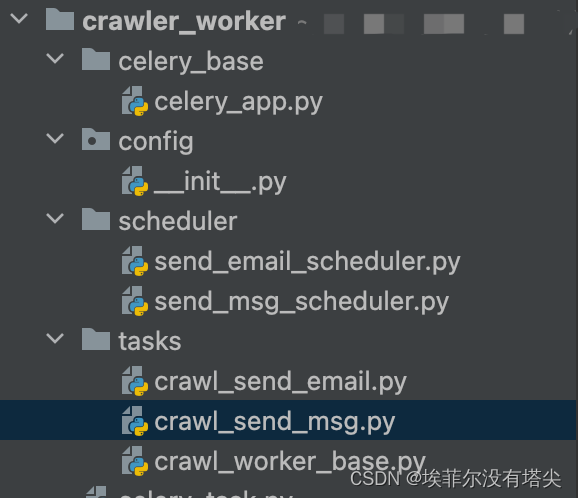
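
To make the mapping concrete, here is a minimal pure-Python sketch of how an exact-name entry in task_routes selects a queue, with unrouted tasks falling back to the default queue. The `resolve_queue` helper is hypothetical and only illustrates the lookup; it is not Celery's router, which is more capable (it also accepts glob patterns, regular expressions, and router functions).

```python
# Hypothetical helper: mimics an exact-name lookup in a task_routes mapping.
# Illustration only; this is not Celery's actual routing code.
def resolve_queue(task_name, task_routes, default_queue="celery"):
    """Return the queue configured for task_name, or the default queue."""
    route = task_routes.get(task_name, {})
    return route.get("queue", default_queue)


task_routes = {
    "crawl_service.crawl.send_email_task": {
        "queue": "crawl_service.crawl.send_email_task_queue"
    },
}

# A routed task goes to its dedicated queue.
routed = resolve_queue("crawl_service.crawl.send_email_task", task_routes)
# A task without a route falls back to the default queue.
unrouted = resolve_queue("some.other.task", task_routes, default_queue="normal")

print(routed)
print(unrouted)
```

The `default_queue="normal"` argument mirrors the `task_default_queue` value used in the configuration later in this post.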

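Let's go straight to the code, which keeps things simple. The directory layout is as follows:

First, the configuration file, `config/__init__.py`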

    import sys
    from pathlib import Path

    BASE_DIR = Path(__file__).resolve().parent.parent
    sys.path.append(str(BASE_DIR))


    class Config(object):
        """Base configuration class."""

        # Project name
        PROJECT_NAME = "crawler_worker"

        # Celery backend, where results are stored
        CELERY_BACKEND_URL = "redis://127.0.0.1:6379/4"

        # Celery broker
        CELERY_BROKER_URL = "redis://127.0.0.1:6379/5"

        # Worker (task) names
        CRAWL_SEND_EMAIL_TASK = "crawl_service.crawl.send_email_task"  # crawl: send-email task
        CRAWL_SEND_MSG_TASK = "crawl_service.crawl.send_msg_task"  # crawl: send-SMS task


    settings = Config()

The Celery application module and its configuration, celery_base/celery_app.py

    import sys
    from pathlib import Path

    import celery

    BASE_DIR = Path(__file__).resolve().parent.parent
    sys.path.append(str(BASE_DIR))

    from config import settings

    # Instantiate the Celery app
    celery_app = celery.Celery(
        settings.PROJECT_NAME,
        backend=settings.CELERY_BACKEND_URL,
        broker=settings.CELERY_BROKER_URL,
        include=[
            "tasks.crawl_send_email",
            "tasks.crawl_send_msg",
        ],
    )

    # Task routing: each task gets its own queue, named "<task name>_queue"
    task_routes = {
        settings.CRAWL_SEND_EMAIL_TASK: {"queue": f"{settings.CRAWL_SEND_EMAIL_TASK}_queue"},
        settings.CRAWL_SEND_MSG_TASK: {"queue": f"{settings.CRAWL_SEND_MSG_TASK}_queue"},
    }

    # Task deduplication (celery_once)
    celery_once = {
        "backend": "celery_once.backends.Redis",
        "settings": {"url": settings.CELERY_BACKEND_URL, "default_timeout": 60 * 60},
    }

    # Apply the configuration
    celery_app.conf.update(
        task_serializer="json",
        result_serializer="json",
        accept_content=["json"],
        task_default_queue="normal",
        timezone="Asia/Shanghai",
        enable_utc=False,
        task_routes=task_routes,
        task_ignore_result=True,
        redis_max_connections=100,
        result_expires=3600,
        ONCE=celery_once,
    )
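
The routing table in this module derives each queue name by appending `_queue` to the task name. As a sketch, the same table could be generated from a list of task names. `build_task_routes` is a hypothetical helper introduced here for illustration; it is not part of Celery or of the original project.

```python
def build_task_routes(task_names, suffix="_queue"):
    """Map each task name to a dedicated queue named '<task name><suffix>'."""
    return {name: {"queue": f"{name}{suffix}"} for name in task_names}


task_routes = build_task_routes([
    "crawl_service.crawl.send_email_task",
    "crawl_service.crawl.send_msg_task",
])

# Show which queue each task would be routed to.
for task_name, route in task_routes.items():
    print(task_name, "->", route["queue"])
```

Generating the table this way keeps the queue naming rule in one place, which helps when more tasks are added later.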

The crawl base class, crawl_worker_base.py

    from celery_once import QueueOnce


    class CrawlBase(QueueOnce):
        """Base class for crawl workers."""

        name = None
        once = {"graceful": True}
        ignore_result = True
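
QueueOnce comes from the celery_once package, which holds a lock (stored in Redis in this setup) so that the same task with the same arguments is not queued again while the lock is held. With once = {"graceful": True}, a duplicate submission returns None instead of raising AlreadyQueued. The sketch below imitates that idea in memory. `OnceRegistry` and its methods are hypothetical and are not how celery_once is actually implemented.

```python
import time


class OnceRegistry:
    """In-memory stand-in for a lock store keyed by task name and arguments."""

    def __init__(self, timeout_seconds, clock=time.monotonic):
        self.timeout_seconds = timeout_seconds
        self.clock = clock
        self._locks = {}  # key -> time at which the lock expires

    def submit(self, task_name, *args):
        """Return the lock key if accepted, or None if a duplicate is pending."""
        key = (task_name, args)
        now = self.clock()
        expiry = self._locks.get(key)
        if expiry is not None and expiry > now:
            return None  # "graceful" behaviour: drop the duplicate quietly
        self._locks[key] = now + self.timeout_seconds
        return key

    def finish(self, task_name, *args):
        """Release the lock once the task has run."""
        self._locks.pop((task_name, args), None)


# A controllable clock so the example does not need to sleep.
fake_now = [0.0]
registry = OnceRegistry(timeout_seconds=60 * 60, clock=lambda: fake_now[0])

first = registry.submit("send_email_task", "alice")       # accepted
duplicate = registry.submit("send_email_task", "alice")   # rejected: lock held
other_args = registry.submit("send_email_task", "bob")    # accepted: different args
fake_now[0] = 3601.0                                       # the lock has timed out
after_timeout = registry.submit("send_email_task", "alice")
```

The one-hour timeout matches the default_timeout value in the celery_once settings above.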

The send-email task, crawl_send_email.py

    import sys
    import time
    from pathlib import Path

    from loguru import logger

    BASE_DIR = Path(__file__).resolve().parent.parent
    sys.path.append(str(BASE_DIR))

    from config import settings
    from celery_base.celery_app import celery_app
    from tasks.crawl_worker_base import CrawlBase

    # Run with:
    # celery -A tasks.crawl_send_email worker -l info -Q crawl_service.crawl.send_email_task_queue


    class SendEmailClass(CrawlBase):
        name = settings.CRAWL_SEND_EMAIL_TASK

        def __init__(self, *args, **kwargs):
            super().__init__(*args, **kwargs)

        def run(self, name):
            logger.info("Class-based task: sending email to %s..." % name)
            time.sleep(5)  # simulate the work
            logger.info("Class-based task: finished sending email to %s" % name)
            return f"Successfully got the email sent by {name}!"


    # Register the class-based task with the app
    send_email = celery_app.register_task(SendEmailClass())

The send-SMS task, crawl_send_msg.py

    import sys
    import time
    from pathlib import Path

    from loguru import logger

    BASE_DIR = Path(__file__).resolve().parent.parent
    sys.path.append(str(BASE_DIR))

    from config import settings
    from celery_base.celery_app import celery_app
    from tasks.crawl_worker_base import CrawlBase

    # Run with:
    # celery -A tasks.crawl_send_msg worker -l info -Q crawl_service.crawl.send_msg_task_queue


    class SendMsgClass(CrawlBase):
        name = settings.CRAWL_SEND_MSG_TASK

        def __init__(self, *args, **kwargs):
            super().__init__(*args, **kwargs)

        def run(self, name):
            logger.info("Class-based task: sending SMS to %s..." % name)
            time.sleep(5)  # simulate the work
            logger.info("Class-based task: finished sending SMS to %s" % name)
            return f"Successfully got the SMS sent by {name}!"


    # Register the class-based task with the app
    send_msg = celery_app.register_task(SendMsgClass())

The scheduler for the send-email task, send_email_scheduler.py

    import sys
    from pathlib import Path

    BASE_DIR = Path(__file__).resolve().parent.parent
    sys.path.append(str(BASE_DIR))

    from config import settings
    from celery_base.celery_app import celery_app

    if __name__ == "__main__":
        # Send 100 email tasks by name; task_routes decides the queue
        for i in range(100):
            result = celery_app.send_task(
                name=settings.CRAWL_SEND_EMAIL_TASK, args=(f"Zhang San hehe {i}",)
            )
            print(result.id)

The scheduler for the send-SMS task, send_msg_scheduler.py

    import sys
    from pathlib import Path

    BASE_DIR = Path(__file__).resolve().parent.parent
    sys.path.append(str(BASE_DIR))

    from config import settings
    from celery_base.celery_app import celery_app

    if __name__ == "__main__":
        # Send 400 SMS tasks by name; task_routes decides the queue
        for i in range(100, 500):
            result = celery_app.send_task(
                name=settings.CRAWL_SEND_MSG_TASK, args=(f"Li Si hahaha {i}",)
            )
            print(result.id)

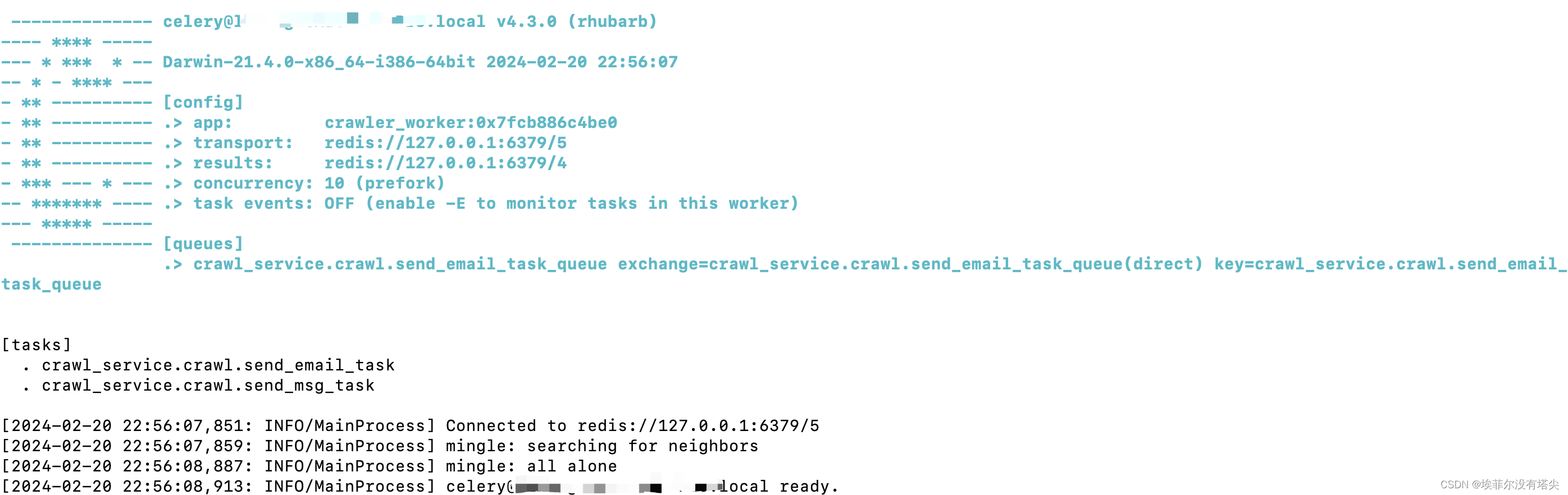

With the preparation done, run the following two commands, each in its own terminal:

celery -A tasks.crawl_send_email worker -l info -Q crawl_service.crawl.send_email_task_queue

celery -A tasks.crawl_send_msg worker -l info -Q crawl_service.crawl.send_msg_task_queue
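
Each worker's -Q value has to match the queue name in task_routes; otherwise the worker would listen on a queue that never receives those tasks. As a small sketch, the command can be assembled from the task name so the two stay in sync. The `worker_command` helper is hypothetical; the flags are the ones used in the commands above.

```python
import shlex


def worker_command(app_module, task_name, log_level="info"):
    """Build the worker command for the queue dedicated to task_name."""
    queue = f"{task_name}_queue"  # same naming rule as in task_routes
    args = ["celery", "-A", app_module, "worker", "-l", log_level, "-Q", queue]
    return shlex.join(args)


email_cmd = worker_command(
    "tasks.crawl_send_email", "crawl_service.crawl.send_email_task"
)
msg_cmd = worker_command(
    "tasks.crawl_send_msg", "crawl_service.crawl.send_msg_task"
)
print(email_cmd)
print(msg_cmd)
```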

If you see output like the following, Celery has started successfully:

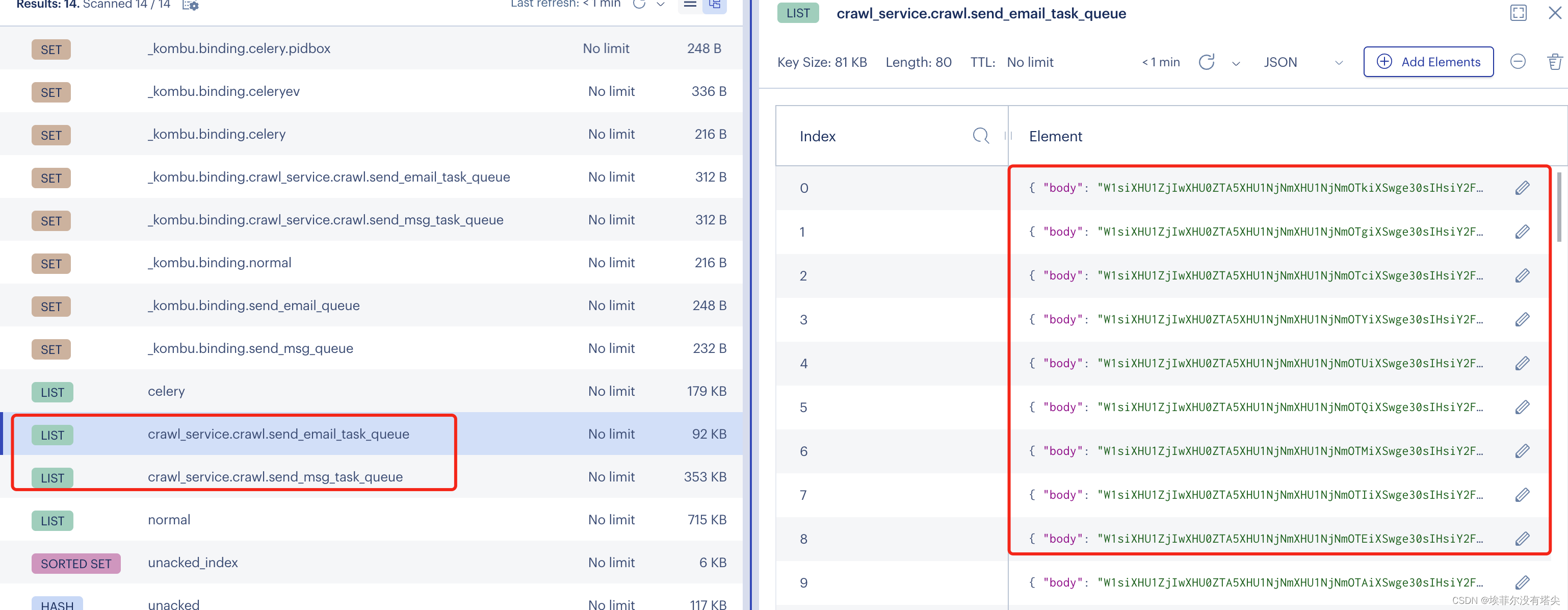

Finally, just send the tasks by running the two scheduler scripts; the two dedicated queues then show up in Redis.

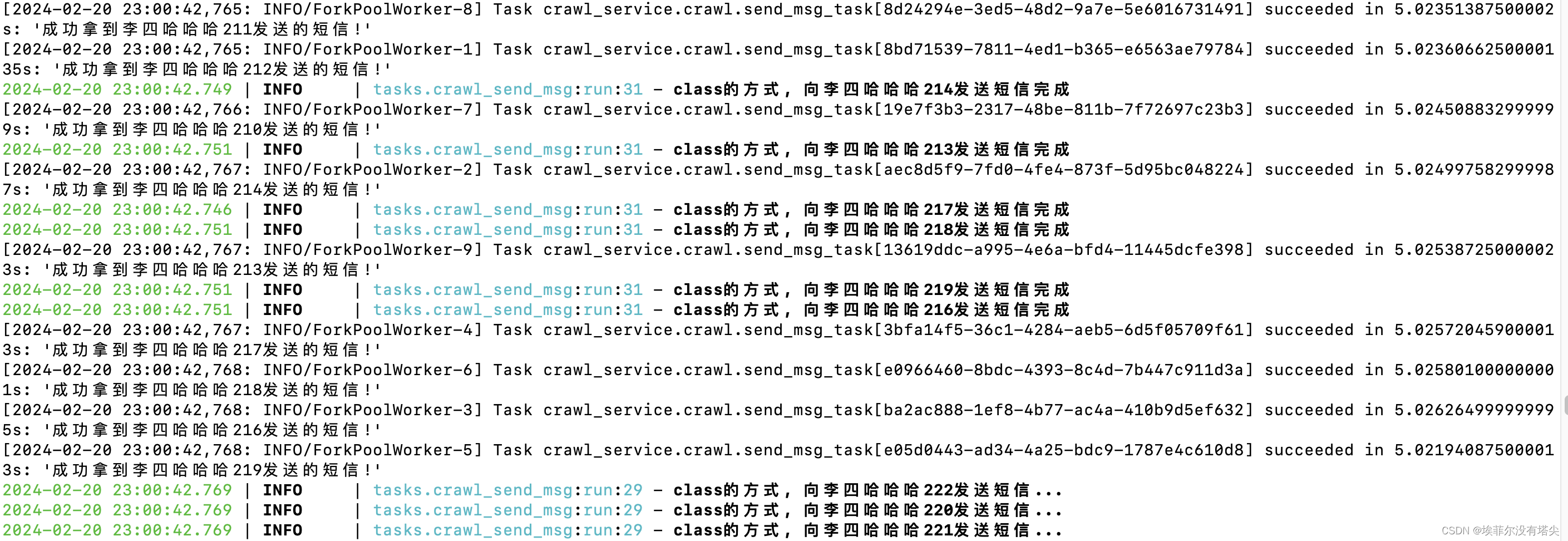
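
With the Redis broker, each queue's waiting messages are stored in a Redis list whose key is the queue name, so the backlog can be read with LLEN. The sketch below assumes a redis-py style client that exposes `llen()`. `queue_lengths` is a hypothetical helper, and `FakeClient` exists only so the example runs without a Redis server.

```python
def queue_lengths(client, queue_names):
    """Return {queue name: number of waiting messages} using LLEN."""
    return {name: client.llen(name) for name in queue_names}


class FakeClient:
    """Tiny stand-in exposing the llen() method of a redis-py client."""

    def __init__(self, lists):
        self._lists = lists

    def llen(self, key):
        # Like Redis, report 0 for a key that does not exist.
        return len(self._lists.get(key, []))


client = FakeClient({
    "crawl_service.crawl.send_email_task_queue": ["msg-1", "msg-2"],
})
lengths = queue_lengths(client, [
    "crawl_service.crawl.send_email_task_queue",
    "crawl_service.crawl.send_msg_task_queue",
])
print(lengths)
```

Against a real server, the client would instead be created with something like `redis.Redis.from_url("redis://127.0.0.1:6379/5")`, using the broker URL from the configuration above.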

Take a look at the logs produced while the tasks run.

That completes a simple Celery setup with a dedicated queue per task.
