Basic Assignment

- Evaluate the InternLM2-Chat-7B model on the C-Eval dataset using OpenCompass

Environment Setup

conda create --name opencompass --clone=/root/share/conda_envs/internlm-base

source activate opencompass

git clone https://github.com/open-compass/opencompass

cd opencompass

pip install -e .

Data Preparation

# Unzip the evaluation dataset into data/

cp /share/temp/datasets/OpenCompassData-core-20231110.zip /root/opencompass/

unzip OpenCompassData-core-20231110.zip

# A data folder should now appear under opencompass

List Supported Datasets and Models

# List all configs related to internlm and ceval

python tools/list_configs.py internlm ceval

+--------------------------+--------------------------------------------------------+

| Model | Config Path |

|--------------------------+--------------------------------------------------------|

| hf_internlm_20b | configs/models/hf_internlm/hf_internlm_20b.py |

| hf_internlm_7b | configs/models/hf_internlm/hf_internlm_7b.py |

| hf_internlm_chat_20b | configs/models/hf_internlm/hf_internlm_chat_20b.py |

| hf_internlm_chat_7b | configs/models/hf_internlm/hf_internlm_chat_7b.py |

| hf_internlm_chat_7b_8k | configs/models/hf_internlm/hf_internlm_chat_7b_8k.py |

| hf_internlm_chat_7b_v1_1 | configs/models/hf_internlm/hf_internlm_chat_7b_v1_1.py |

| internlm_7b | configs/models/internlm/internlm_7b.py |

| ms_internlm_chat_7b_8k | configs/models/ms_internlm/ms_internlm_chat_7b_8k.py |

+--------------------------+--------------------------------------------------------+

+----------------------------+------------------------------------------------------+

| Dataset | Config Path |

|----------------------------+------------------------------------------------------|

| ceval_clean_ppl | configs/datasets/ceval/ceval_clean_ppl.py |

| ceval_gen | configs/datasets/ceval/ceval_gen.py |

| ceval_gen_2daf24 | configs/datasets/ceval/ceval_gen_2daf24.py |

| ceval_gen_5f30c7 | configs/datasets/ceval/ceval_gen_5f30c7.py |

| ceval_ppl | configs/datasets/ceval/ceval_ppl.py |

| ceval_ppl_578f8d | configs/datasets/ceval/ceval_ppl_578f8d.py |

| ceval_ppl_93e5ce | configs/datasets/ceval/ceval_ppl_93e5ce.py |

| ceval_zero_shot_gen_bd40ef | configs/datasets/ceval/ceval_zero_shot_gen_bd40ef.py |

+----------------------------+------------------------------------------------------+

Launch the Evaluation

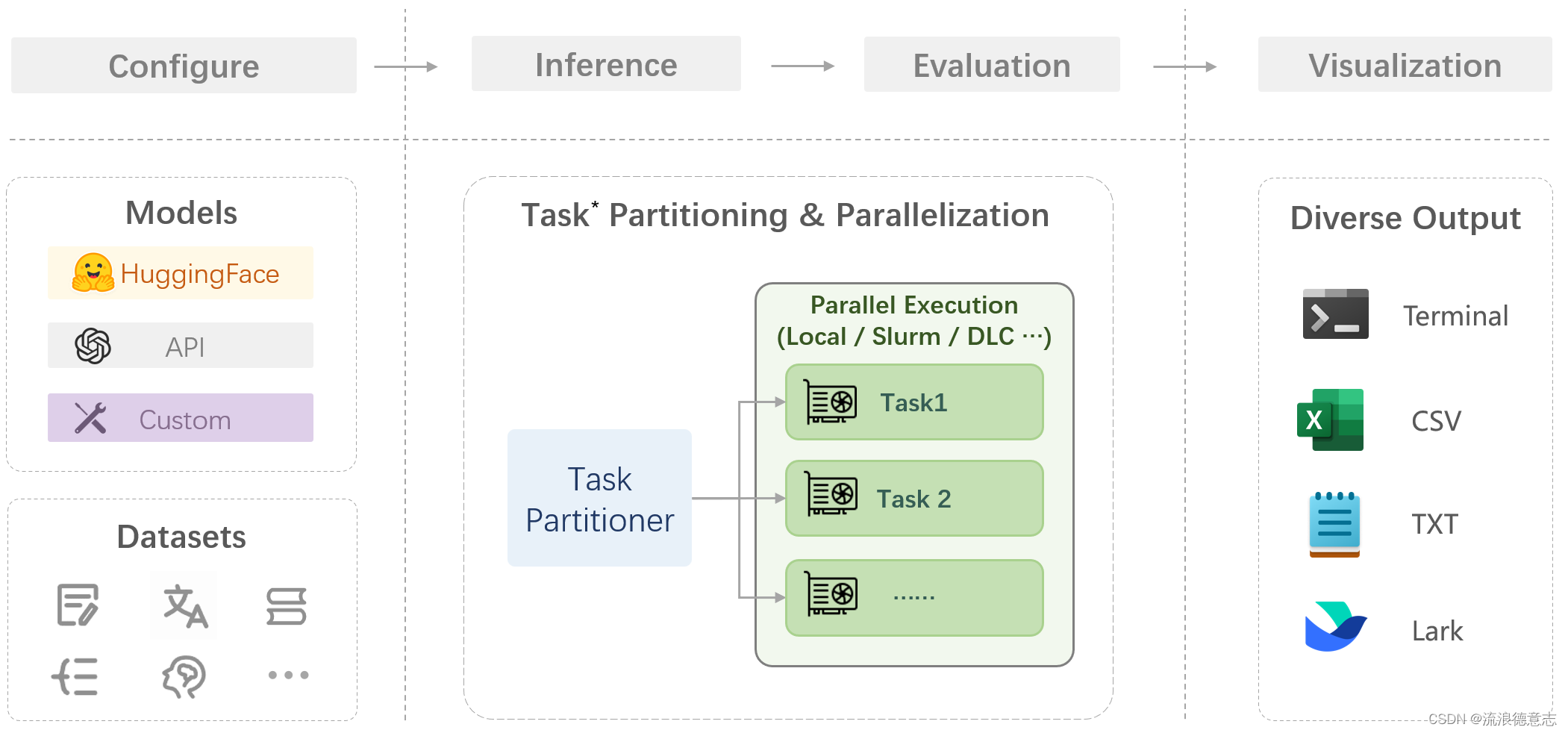

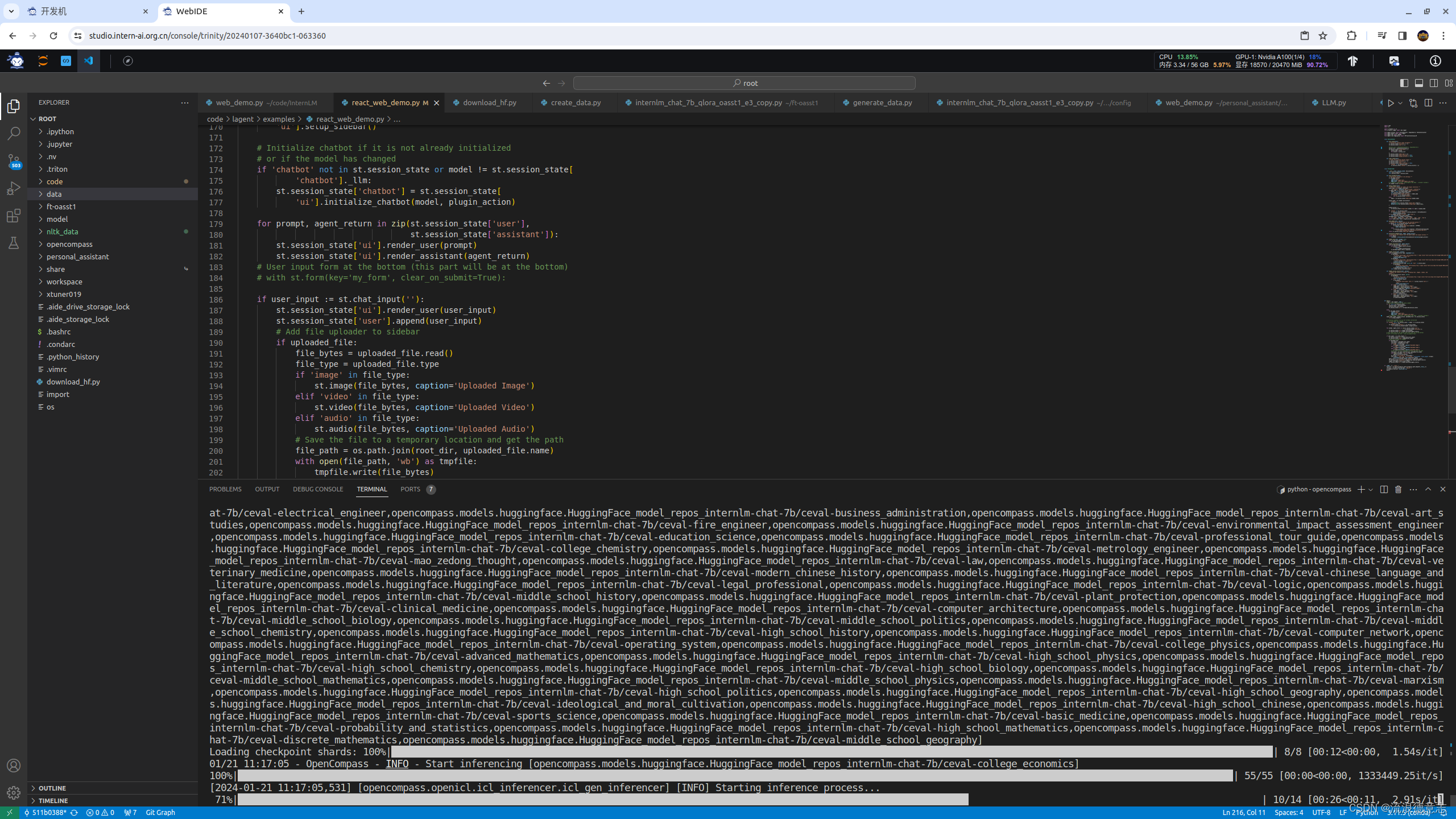

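After installing OpenCompass and preparing the dataset as described above, the following command evaluates the InternLM-Chat-7B model on the C-Eval dataset. Because OpenCompass launches evaluation tasks in parallel by default, it is worth starting the first run in --debug mode to check for problems. In --debug mode, tasks run sequentially and output is printed in real time.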

python run.py --datasets ceval_gen --hf-path /share/temp/model_repos/internlm-chat-7b/ --tokenizer-path /share/temp/model_repos/internlm-chat-7b/ --tokenizer-kwargs padding_side='left' truncation='left' trust_remote_code=True --model-kwargs trust_remote_code=True device_map='auto' --max-seq-len 2048 --max-out-len 16 --batch-size 4 --num-gpus 1 --debug

The flags in this command are:

- --datasets ceval_gen: the dataset config to evaluate
- --hf-path /share/temp/model_repos/internlm-chat-7b/: path to the HuggingFace model
- --tokenizer-path /share/temp/model_repos/internlm-chat-7b/: path to the HuggingFace tokenizer (can be omitted if it is the same as the model path)
- --tokenizer-kwargs padding_side='left' truncation='left' trust_remote_code=True: arguments used to build the tokenizer
- --model-kwargs device_map='auto' trust_remote_code=True: arguments used to build the model
- --max-seq-len 2048: maximum sequence length the model can accept
- --max-out-len 16: maximum number of tokens to generate
- --batch-size 4: batch size
- --num-gpus 1: number of GPUs required to run the model
- --debug: run tasks sequentially and print output in real time
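The flag list above can also be assembled programmatically, which is convenient when the same evaluation is rerun with different settings. The following is a minimal sketch using only the Python standard library; build_run_command is a hypothetical helper, not part of OpenCompass, and it only constructs and prints the command rather than executing it.

```python
import shlex

MODEL_DIR = "/share/temp/model_repos/internlm-chat-7b/"


def build_run_command(model_dir, debug=True):
    """Hypothetical helper: build the argv list for the run.py call above."""
    argv = [
        "python", "run.py",
        "--datasets", "ceval_gen",
        "--hf-path", model_dir,
        "--tokenizer-path", model_dir,
        # The shell strips the single quotes in the original command,
        # so run.py receives plain key=value strings such as padding_side=left.
        "--tokenizer-kwargs", "padding_side=left", "truncation=left",
        "trust_remote_code=True",
        "--model-kwargs", "trust_remote_code=True", "device_map=auto",
        "--max-seq-len", "2048",
        "--max-out-len", "16",
        "--batch-size", "4",
        "--num-gpus", "1",
    ]
    if debug:
        # Sequential execution with live output, useful for a first run.
        argv.append("--debug")
    return argv


command = build_run_command(MODEL_DIR)
print(shlex.join(command))
```

Keeping the arguments in a list avoids quoting mistakes; the list could be handed to subprocess.run with the OpenCompass checkout as the working directory, which is left out here.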

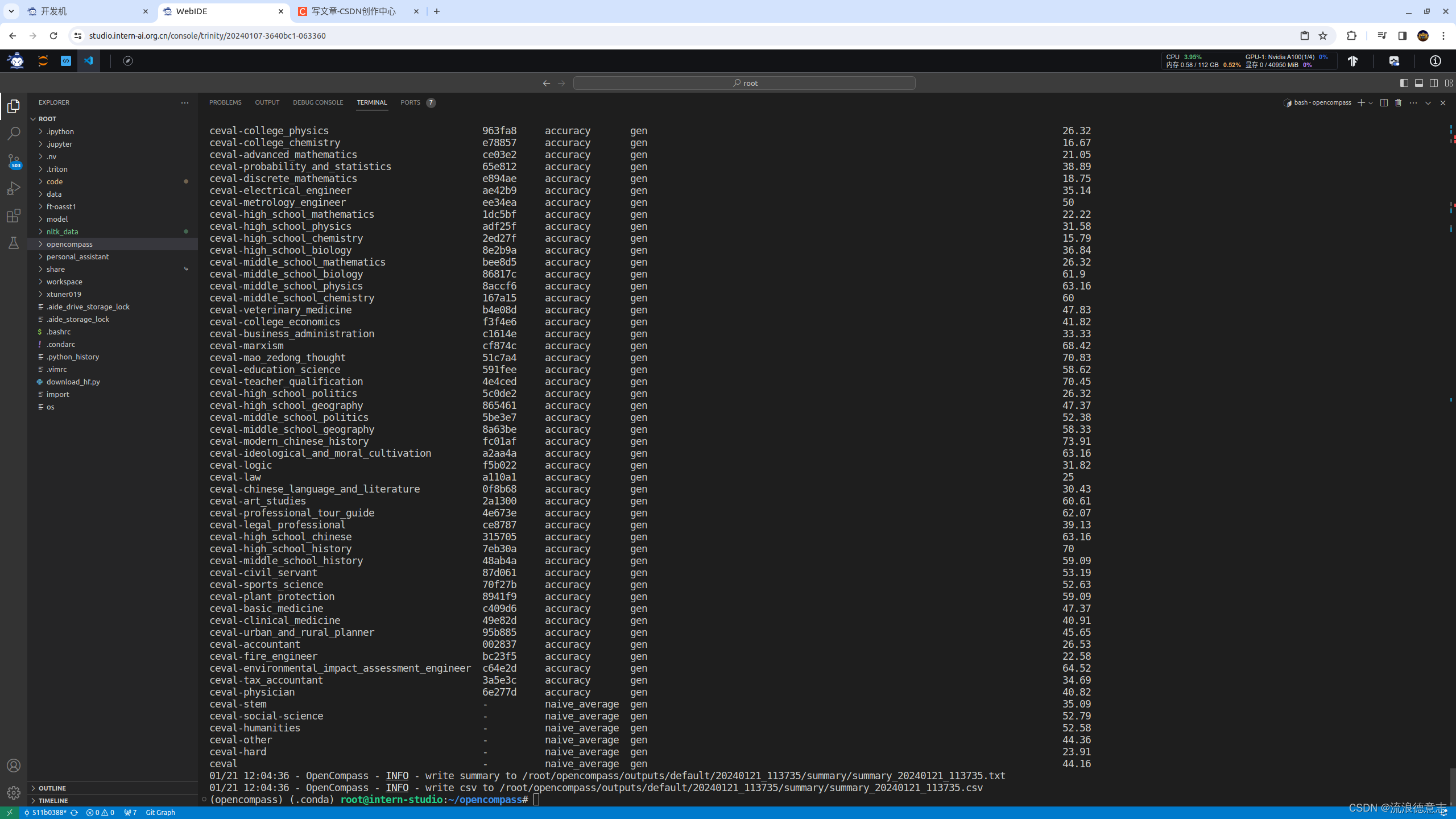

| dataset | version | metric | mode | opencompass.models.huggingface.HuggingFace_model_repos_internlm-chat-7b |
|---|---|---|---|---|
| ceval-computer_network | db9ce2 | accuracy | gen | 31.58 |
| ceval-operating_system | 1c2571 | accuracy | gen | 36.84 |
| ceval-computer_architecture | a74dad | accuracy | gen | 28.57 |
| ceval-college_programming | 4ca32a | accuracy | gen | 32.43 |
| ceval-college_physics | 963fa8 | accuracy | gen | 26.32 |
| ceval-college_chemistry | e78857 | accuracy | gen | 16.67 |
| ceval-advanced_mathematics | ce03e2 | accuracy | gen | 21.05 |
| ceval-probability_and_statistics | 65e812 | accuracy | gen | 38.89 |
| ceval-discrete_mathematics | e894ae | accuracy | gen | 18.75 |
| ceval-electrical_engineer | ae42b9 | accuracy | gen | 35.14 |
| ceval-metrology_engineer | ee34ea | accuracy | gen | 50 |
| ceval-high_school_mathematics | 1dc5bf | accuracy | gen | 22.22 |
| ceval-high_school_physics | adf25f | accuracy | gen | 31.58 |
| ceval-high_school_chemistry | 2ed27f | accuracy | gen | 15.79 |
| ceval-high_school_biology | 8e2b9a | accuracy | gen | 36.84 |
| ceval-middle_school_mathematics | bee8d5 | accuracy | gen | 26.32 |
| ceval-middle_school_biology | 86817c | accuracy | gen | 61.9 |
| ceval-middle_school_physics | 8accf6 | accuracy | gen | 63.16 |
| ceval-middle_school_chemistry | 167a15 | accuracy | gen | 60 |
| ceval-veterinary_medicine | b4e08d | accuracy | gen | 47.83 |
| ceval-college_economics | f3f4e6 | accuracy | gen | 41.82 |
| ceval-business_administration | c1614e | accuracy | gen | 33.33 |
| ceval-marxism | cf874c | accuracy | gen | 68.42 |
| ceval-mao_zedong_thought | 51c7a4 | accuracy | gen | 70.83 |
| ceval-education_science | 591fee | accuracy | gen | 58.62 |
| ceval-teacher_qualification | 4e4ced | accuracy | gen | 70.45 |
| ceval-high_school_politics | 5c0de2 | accuracy | gen | 26.32 |
| ceval-high_school_geography | 865461 | accuracy | gen | 47.37 |
| ceval-middle_school_politics | 5be3e7 | accuracy | gen | 52.38 |
| ceval-middle_school_geography | 8a63be | accuracy | gen | 58.33 |
| ceval-modern_chinese_history | fc01af | accuracy | gen | 73.91 |
| ceval-ideological_and_moral_cultivation | a2aa4a | accuracy | gen | 63.16 |
| ceval-logic | f5b022 | accuracy | gen | 31.82 |
| ceval-law | a110a1 | accuracy | gen | 25 |
| ceval-chinese_language_and_literature | 0f8b68 | accuracy | gen | 30.43 |
| ceval-art_studies | 2a1300 | accuracy | gen | 60.61 |
| ceval-professional_tour_guide | 4e673e | accuracy | gen | 62.07 |
| ceval-legal_professional | ce8787 | accuracy | gen | 39.13 |
| ceval-high_school_chinese | 315705 | accuracy | gen | 63.16 |
| ceval-high_school_history | 7eb30a | accuracy | gen | 70 |
| ceval-middle_school_history | 48ab4a | accuracy | gen | 59.09 |
| ceval-civil_servant | 87d061 | accuracy | gen | 53.19 |
| ceval-sports_science | 70f27b | accuracy | gen | 52.63 |
| ceval-plant_protection | 8941f9 | accuracy | gen | 59.09 |
| ceval-basic_medicine | c409d6 | accuracy | gen | 47.37 |
| ceval-clinical_medicine | 49e82d | accuracy | gen | 40.91 |
| ceval-urban_and_rural_planner | 95b885 | accuracy | gen | 45.65 |
| ceval-accountant | 002837 | accuracy | gen | 26.53 |
| ceval-fire_engineer | bc23f5 | accuracy | gen | 22.58 |
| ceval-environmental_impact_assessment_engineer | c64e2d | accuracy | gen | 64.52 |
| ceval-tax_accountant | 3a5e3c | accuracy | gen | 34.69 |
| ceval-physician | 6e277d | accuracy | gen | 40.82 |
| ceval-stem | - | naive_average | gen | 35.09 |
| ceval-social-science | - | naive_average | gen | 52.79 |
| ceval-humanities | - | naive_average | gen | 52.58 |
| ceval-other | - | naive_average | gen | 44.36 |
| ceval-hard | - | naive_average | gen | 23.91 |
| ceval | - | naive_average | gen | 44.16 |
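The naive_average rows appear to be plain arithmetic means of the per-subject accuracies in each group. As a minimal check, assuming the ceval-hard group consists of the eight subjects listed below (the grouping is an assumption here and is not stated in the output), the mean of the values copied from the table reproduces the reported 23.91:

```python
from statistics import mean

# Subject accuracies copied from the result table above.
# Assumption: these eight subjects form the ceval-hard group.
hard_subject_accuracy = {
    "ceval-advanced_mathematics": 21.05,
    "ceval-discrete_mathematics": 18.75,
    "ceval-probability_and_statistics": 38.89,
    "ceval-college_chemistry": 16.67,
    "ceval-college_physics": 26.32,
    "ceval-high_school_mathematics": 22.22,
    "ceval-high_school_chemistry": 15.79,
    "ceval-high_school_physics": 31.58,
}

# Unweighted mean: every subject counts equally, regardless of its size.
hard_average = round(mean(hard_subject_accuracy.values()), 2)
print(hard_average)  # 23.91
```

Because the average is unweighted, a subject with few questions influences the group score as much as a large one.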

01/21 12:04:36 - OpenCompass - INFO - write summary to /root/opencompass/outputs/default/20240121_113735/summary/summary_20240121_113735.txt

01/21 12:04:36 - OpenCompass - INFO - write csv to /root/opencompass/outputs/default/20240121_113735/summary/summary_20240121_113735.csv

Advanced Assignment

- Evaluate the InternLM2-Chat-7B model on the C-Eval dataset using OpenCompass after deploying it with LMDeploy 0.2.0

Model Conversion and Deployment

conda activate opencompass

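pip install lmdeploy==0.2.0

lmdeploy convert internlm2-chat-7b /root/share/model_repos/internlm2-chat-7b --dst-path /root/ws_lmdeploy2.0

cd /root/opencompass

cd configs

# Create eval_internlm2-7b-deploy2.0.py here with the contents listed below

# Return to the repository root, where run.py lives, before launching

cd /root/opencompass

python run.py configs/eval_internlm2-7b-deploy2.0.py --debug --num-gpus 1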

from mmengine.config import read_base
from opencompass.models.turbomind import TurboMindModel

with read_base():
    # choose a list of datasets
    from .datasets.ceval.ceval_gen_5f30c7 import ceval_datasets
    # and output the results in a chosen format
    from .summarizers.medium import summarizer

datasets = sum((v for k, v in locals().items() if k.endswith('_datasets')), [])

internlm_meta_template = dict(
    round=[
        dict(role='HUMAN', begin='<|User|>:', end='\n'),
        dict(role='BOT', begin='<|Bot|>:', end='<eoa>\n', generate=True),
    ],
    eos_token_id=103028)

# config for internlm2-chat-7b
internlm2_chat_7b = dict(
    type=TurboMindModel,
    abbr='internlm2-chat-7b-turbomind',
    path="/root/ws_lmdeploy2.0",
    engine_config=dict(session_len=2048,
                       max_batch_size=32,
                       rope_scaling_factor=1.0),
    gen_config=dict(top_k=1,
                    top_p=0.8,
                    temperature=1.0,
                    max_new_tokens=100),
    max_out_len=100,
    max_seq_len=2048,
    batch_size=32,
    concurrency=32,
    run_cfg=dict(num_gpus=1, num_procs=1),
)

models = [internlm2_chat_7b]
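The datasets line in the config relies on a compact idiom: it collects every variable whose name ends in _datasets and concatenates those lists into one. The sketch below illustrates the idiom in isolation; the namespace dictionary stands in for locals(), and its entries (including mmlu-demo) are placeholders rather than real OpenCompass configs.

```python
# Stand-in for the names that read_base() imports into the config namespace.
# All entries are illustrative placeholders.
namespace = {
    "ceval_datasets": [{"abbr": "ceval-computer_network"}, {"abbr": "ceval-law"}],
    "mmlu_datasets": [{"abbr": "mmlu-demo"}],
    "summarizer": {"unrelated": True},  # ignored: name lacks the suffix
}

# Same expression as in the config, with `namespace` in place of locals().
# sum(..., []) starts from an empty list and appends each matching list.
datasets = sum((v for k, v in namespace.items() if k.endswith("_datasets")), [])

print([d["abbr"] for d in datasets])
# ['ceval-computer_network', 'ceval-law', 'mmlu-demo']
```

With this pattern, evaluating an additional dataset only requires importing another *_datasets list inside the read_base() block; the datasets line itself stays unchanged.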

20240121_143942

tabulate format

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

dataset version metric mode internlm2-chat-7b-turbomind

-------------------------------------- --------- ------------- ------ -----------------------------

--------- 考试 Exam --------- - - - -

ceval - naive_average gen 43.97

agieval - - - -

mmlu - - - -

GaokaoBench - - - -

ARC-c - - - -

--------- 语言 Language --------- - - - -

WiC - - - -

summedits - - - -

chid-dev - - - -

afqmc-dev - - - -

bustm-dev - - - -

cluewsc-dev - - - -

WSC - - - -

winogrande - - - -

flores_100 - - - -

--------- 知识 Knowledge --------- - - - -

BoolQ - - - -

commonsense_qa - - - -

nq - - - -

triviaqa - - - -

--------- 推理 Reasoning --------- - - - -

cmnli - - - -

ocnli - - - -

ocnli_fc-dev - - - -

AX_b - - - -

AX_g - - - -

CB - - - -

RTE - - - -

story_cloze - - - -

COPA - - - -

ReCoRD - - - -

hellaswag - - - -

piqa - - - -

siqa - - - -

strategyqa - - - -

math - - - -

gsm8k - - - -

TheoremQA - - - -

openai_humaneval - - - -

mbpp - - - -

bbh - - - -

--------- 理解 Understanding --------- - - - -

C3 - - - -

CMRC_dev - - - -

DRCD_dev - - - -

MultiRC - - - -

race-middle - - - -

race-high - - - -

openbookqa_fact - - - -

csl_dev - - - -

lcsts - - - -

Xsum - - - -

eprstmt-dev - - - -

Model: internlm2-chat-7b-turbomind

ceval-computer_network: {'accuracy': 47.368421052631575}

ceval-operating_system: {'accuracy': 63.1578947368421}

ceval-computer_architecture: {'accuracy': 38.095238095238095}

ceval-college_programming: {'accuracy': 24.324324324324326}

ceval-college_physics: {'accuracy': 10.526315789473683}

ceval-college_chemistry: {'accuracy': 0.0}

ceval-advanced_mathematics: {'accuracy': 15.789473684210526}

ceval-probability_and_statistics: {'accuracy': 11.11111111111111}

ceval-discrete_mathematics: {'accuracy': 18.75}

ceval-electrical_engineer: {'accuracy': 21.62162162162162}

ceval-metrology_engineer: {'accuracy': 41.66666666666667}

ceval-high_school_mathematics: {'accuracy': 0.0}

ceval-high_school_physics: {'accuracy': 31.57894736842105}

ceval-high_school_chemistry: {'accuracy': 31.57894736842105}

ceval-high_school_biology: {'accuracy': 31.57894736842105}

ceval-middle_school_mathematics: {'accuracy': 31.57894736842105}

ceval-middle_school_biology: {'accuracy': 71.42857142857143}

ceval-middle_school_physics: {'accuracy': 52.63157894736842}

ceval-middle_school_chemistry: {'accuracy': 80.0}

ceval-veterinary_medicine: {'accuracy': 43.47826086956522}

ceval-college_economics: {'accuracy': 23.636363636363637}

ceval-business_administration: {'accuracy': 33.33333333333333}

ceval-marxism: {'accuracy': 84.21052631578947}

ceval-mao_zedong_thought: {'accuracy': 70.83333333333334}

ceval-education_science: {'accuracy': 62.06896551724138}

ceval-teacher_qualification: {'accuracy': 77.27272727272727}

ceval-high_school_politics: {'accuracy': 26.31578947368421}

ceval-high_school_geography: {'accuracy': 57.89473684210527}

ceval-middle_school_politics: {'accuracy': 57.14285714285714}

ceval-middle_school_geography: {'accuracy': 50.0}

ceval-modern_chinese_history: {'accuracy': 65.21739130434783}

ceval-ideological_and_moral_cultivation: {'accuracy': 89.47368421052632}

ceval-logic: {'accuracy': 13.636363636363635}

ceval-law: {'accuracy': 41.66666666666667}

ceval-chinese_language_and_literature: {'accuracy': 47.82608695652174}

ceval-art_studies: {'accuracy': 66.66666666666666}

ceval-professional_tour_guide: {'accuracy': 79.3103448275862}

ceval-legal_professional: {'accuracy': 26.08695652173913}

ceval-high_school_chinese: {'accuracy': 10.526315789473683}

ceval-high_school_history: {'accuracy': 70.0}

ceval-middle_school_history: {'accuracy': 68.18181818181817}

ceval-civil_servant: {'accuracy': 40.42553191489361}

ceval-sports_science: {'accuracy': 57.89473684210527}

ceval-plant_protection: {'accuracy': 63.63636363636363}

ceval-basic_medicine: {'accuracy': 57.89473684210527}

ceval-clinical_medicine: {'accuracy': 45.45454545454545}

ceval-urban_and_rural_planner: {'accuracy': 60.86956521739131}

ceval-accountant: {'accuracy': 32.6530612244898}

ceval-fire_engineer: {'accuracy': 16.129032258064516}

ceval-environmental_impact_assessment_engineer: {'accuracy': 35.483870967741936}

ceval-tax_accountant: {'accuracy': 38.775510204081634}

ceval-physician: {'accuracy': 55.10204081632652}

ceval-stem: {'naive_average': 33.313263390065444}

ceval-social-science: {'naive_average': 54.2708632867435}

ceval-humanities: {'naive_average': 52.59929952379182}

ceval-other: {'naive_average': 45.8471813980099}

ceval-hard: {'naive_average': 14.916849415204679}

ceval: {'naive_average': 44.07471520785698}

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$
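The raw-format lines above are easy to load for further analysis, since each one is a dataset name followed by a Python dict literal. The following is a minimal sketch under that assumption; parse_raw_results is a hypothetical helper, and the sample text holds a few lines copied from the block above.

```python
import ast

# A few lines copied from the raw-format block above.
raw_lines = """\
Model: internlm2-chat-7b-turbomind
ceval-computer_network: {'accuracy': 47.368421052631575}
ceval-college_chemistry: {'accuracy': 0.0}
ceval-hard: {'naive_average': 14.916849415204679}
ceval: {'naive_average': 44.07471520785698}
"""


def parse_raw_results(text):
    """Hypothetical helper: map each dataset name to its single metric value."""
    results = {}
    for line in text.splitlines():
        name, sep, payload = line.partition(": ")
        if not sep or not payload.startswith("{"):
            continue  # skip the Model header, separators, and blank lines
        metrics = ast.literal_eval(payload)  # safely parse the dict literal
        # Each row carries exactly one metric (accuracy or naive_average).
        results[name] = next(iter(metrics.values()))
    return results


scores = parse_raw_results(raw_lines)
for name, value in scores.items():
    print(f"{name}: {value:.2f}")
```

ast.literal_eval is used instead of eval so that only literals are accepted from the file.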
