1. Cluster planning and preparation

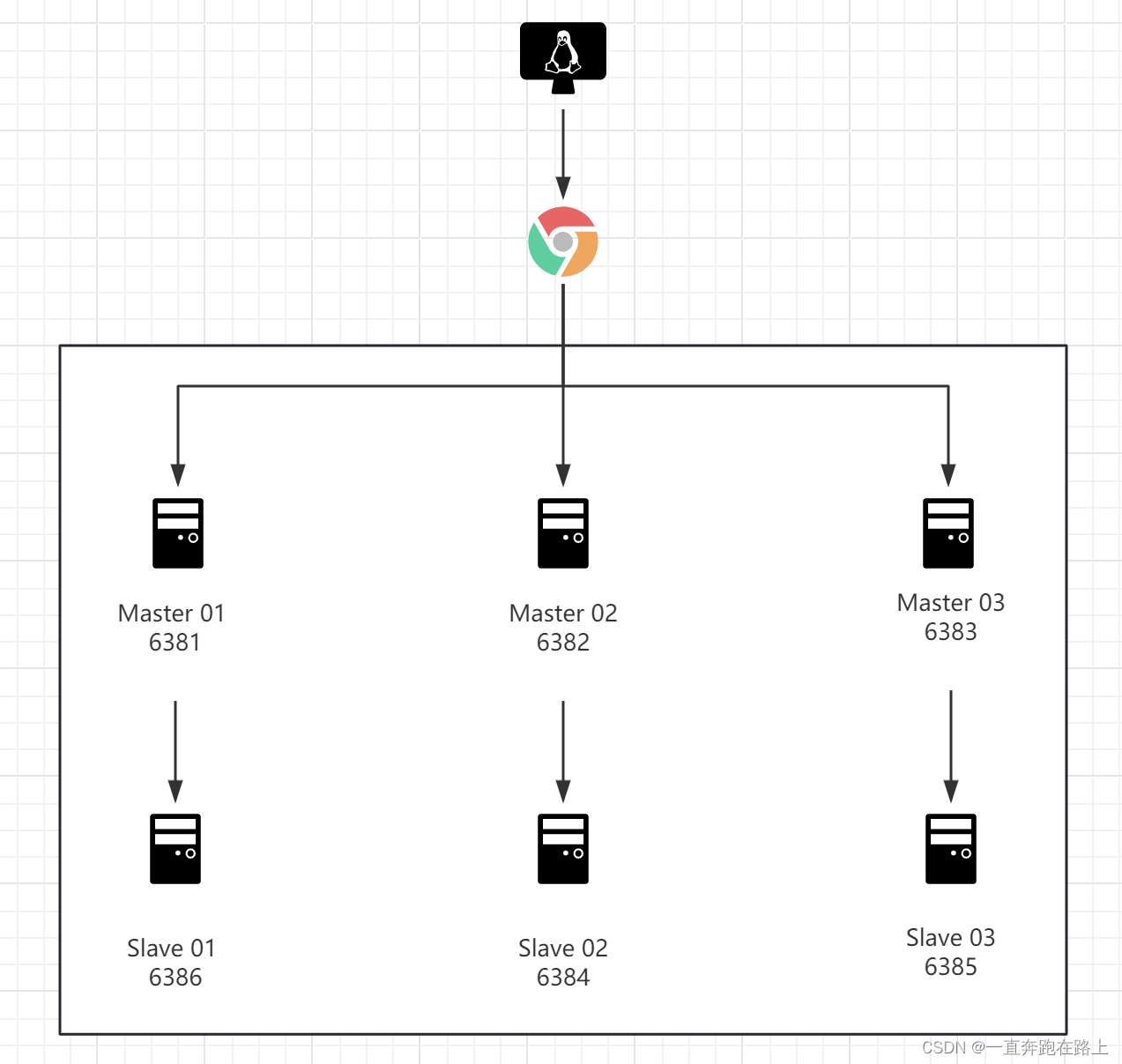

Architecture: a Redis Cluster with 3 masters and 3 slaves (replicas).

2. Setup commands

Step 1: create the six Redis containers:

```shell
docker run -d --name redis-node-1 --net host --privileged=true -v /data/redis/share/redis-node-1:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6381
docker run -d --name redis-node-2 --net host --privileged=true -v /data/redis/share/redis-node-2:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6382
docker run -d --name redis-node-3 --net host --privileged=true -v /data/redis/share/redis-node-3:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6383
docker run -d --name redis-node-4 --net host --privileged=true -v /data/redis/share/redis-node-4:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6384
docker run -d --name redis-node-5 --net host --privileged=true -v /data/redis/share/redis-node-5:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6385
docker run -d --name redis-node-6 --net host --privileged=true -v /data/redis/share/redis-node-6:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6386
```
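Since the six commands differ only in the node number and the port, they can be generated in a loop. A minimal sketch, assuming bash; the function name `gen_docker_run_cmds` is hypothetical. It only prints the commands, so they can be reviewed before being piped into `bash`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the six `docker run` commands from Step 1, one per line.
# Node i listens on port 6380+i and keeps its data in /data/redis/share/redis-node-i.
gen_docker_run_cmds() {
  local i port
  for i in 1 2 3 4 5 6; do
    port=$((6380 + i))
    echo "docker run -d --name redis-node-$i --net host --privileged=true" \
         "-v /data/redis/share/redis-node-$i:/data" \
         "redis:6.0.8 --cluster-enabled yes --appendonly yes --port $port"
  done
}

# Preview only; to actually start the containers run: gen_docker_run_cmds | bash
gen_docker_run_cmds
```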

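Parameter notes (`--net`, `--privileged` and `-v` are `docker run` options; the flags after the image name `redis:6.0.8` are passed to `redis-server`):

`--cluster-enabled yes`: enable Redis Cluster mode

`--privileged=true`: run the container in privileged mode, so that root inside the container has root privileges on the host

`--net host`: use the host's network stack (its IP and ports) directly; note that this is not Docker's default, which is the `bridge` network

`--appendonly yes`: enable AOF persistence

`--port 6381` ... `--port 6386`: the port each Redis node listens on

`-v /data/redis/share/redis-node-N:/data`: mount a host directory at `/data`, the Redis data directory, so the AOF file and the cluster's `nodes.conf` survive container restarts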

Check that the containers were created and are running:

```
[root@localhost ~]# docker ps
CONTAINER ID   IMAGE         COMMAND                  CREATED         STATUS         PORTS     NAMES
528b29c8330b   redis:6.0.8   "docker-entrypoint.s…"   5 seconds ago   Up 5 seconds             redis-node-6
fd9184cd6761   redis:6.0.8   "docker-entrypoint.s…"   7 seconds ago   Up 7 seconds             redis-node-5
44397301e3ac   redis:6.0.8   "docker-entrypoint.s…"   7 seconds ago   Up 7 seconds             redis-node-4
18720ac44b13   redis:6.0.8   "docker-entrypoint.s…"   8 seconds ago   Up 7 seconds             redis-node-3
2b2987704c6d   redis:6.0.8   "docker-entrypoint.s…"   8 seconds ago   Up 8 seconds             redis-node-2
e2304c8acbd6   redis:6.0.8   "docker-entrypoint.s…"   9 seconds ago   Up 8 seconds             redis-node-1
```
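To run the same check in a script instead of reading the table by eye, the node containers can be counted from the names that `docker ps` prints. A minimal sketch: the helper name `count_redis_nodes` is hypothetical; only `docker ps --format '{{.Names}}'`, which lists the names of running containers, comes from Docker itself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count how many of redis-node-1 .. redis-node-6 appear in a list
# of container names read from stdin (one name per line).
count_redis_nodes() {
  # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps the 0 as output
  grep -cE '^redis-node-[1-6]$' || true
}

# Usage on the Docker host; all six nodes are up when this prints 6:
#   docker ps --format '{{.Names}}' | count_redis_nodes
```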

Step 2: enter the redis-node-1 container and build the cluster from the six nodes

```shell
# Enter any one of the containers (redis-node-1 here)
docker exec -it redis-node-1 /bin/bash

# Build the master/replica relationships (run inside the container)
redis-cli --cluster create 12.114.161.16:6381 12.114.161.16:6382 12.114.161.16:6383 12.114.161.16:6384 12.114.161.16:6385 12.114.161.16:6386 --cluster-replicas 1
```

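`--cluster-replicas 1`: create one slave (replica) for each master, so the six nodes become three masters and three replicas. `12.114.161.16` is the Docker host's IP; because every container uses host networking, all nodes share this IP and differ only by port.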
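If the host IP differs from the one used here, the six `ip:port` arguments can be built from a variable instead of being edited by hand. A minimal sketch, assuming bash; `HOST_IP` and `cluster_nodes_arg` are hypothetical names, and the resulting command is still meant to be run inside a node container as in Step 2.

```shell
#!/usr/bin/env bash
# Hypothetical variable: set HOST_IP to your own Docker host's address.
HOST_IP="${HOST_IP:-12.114.161.16}"

# Hypothetical helper: print "ip:6381 ip:6382 ... ip:6386" for the given IP.
cluster_nodes_arg() {
  local ip="$1" nodes="" port
  for port in 6381 6382 6383 6384 6385 6386; do
    nodes="$nodes $ip:$port"
  done
  echo "${nodes# }"   # strip the single leading space
}

# Print the full create command for review
echo "redis-cli --cluster create $(cluster_nodes_arg "$HOST_IP") --cluster-replicas 1"
```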

Output of the `redis-cli --cluster create` command (type `yes` at the confirmation prompt). Because all six nodes run on the same host, redis-cli warns that some replicas share a host with their master, and the final replica assignment in the `M:`/`S:` lines differs from the initial `Adding replica` lines because of the anti-affinity step:

```
>>> Performing hash slots allocation on 6 nodes...
Master[0] -> Slots 0 - 5460
Master[1] -> Slots 5461 - 10922
Master[2] -> Slots 10923 - 16383
Adding replica 12.114.161.16:6385 to 12.114.161.16:6381
Adding replica 12.114.161.16:6386 to 12.114.161.16:6382
Adding replica 12.114.161.16:6384 to 12.114.161.16:6383
>>> Trying to optimize slaves allocation for anti-affinity
[WARNING] Some slaves are in the same host as their master
M: 6e961a4765b555189708bebb69badf7dfad25cd5 12.114.161.16:6381
   slots:[0-5460] (5461 slots) master
M: f097fec937f54d147d316c1c62e26cb67c9fd059 12.114.161.16:6382
   slots:[5461-10922] (5462 slots) master
M: 78ac7be13522bd4ffd6fcf900c6c149c6938ecc2 12.114.161.16:6383
   slots:[10923-16383] (5461 slots) master
S: 0e34147ce2544cd90f5cce78d5493ae9e8625dfe 12.114.161.16:6384
   replicates f097fec937f54d147d316c1c62e26cb67c9fd059
S: 9bc2417a9cd6545ef2445eb4aa0610d586acd73b 12.114.161.16:6385
   replicates 78ac7be13522bd4ffd6fcf900c6c149c6938ecc2
S: 4ed46e0368698cd9d5af2ee84631c878b8ebc4d0 12.114.161.16:6386
   replicates 6e961a4765b555189708bebb69badf7dfad25cd5
Can I set the above configuration? (type 'yes' to accept): yes
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join
.
>>> Performing Cluster Check (using node 12.114.161.16:6381)
M: 6e961a4765b555189708bebb69badf7dfad25cd5 12.114.161.16:6381
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
S: 4ed46e0368698cd9d5af2ee84631c878b8ebc4d0 12.114.161.16:6386
   slots: (0 slots) slave
   replicates 6e961a4765b555189708bebb69badf7dfad25cd5
M: f097fec937f54d147d316c1c62e26cb67c9fd059 12.114.161.16:6382
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
S: 0e34147ce2544cd90f5cce78d5493ae9e8625dfe 12.114.161.16:6384
   slots: (0 slots) slave
   replicates f097fec937f54d147d316c1c62e26cb67c9fd059
M: 78ac7be13522bd4ffd6fcf900c6c149c6938ecc2 12.114.161.16:6383
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 9bc2417a9cd6545ef2445eb4aa0610d586acd73b 12.114.161.16:6385
   slots: (0 slots) slave
   replicates 78ac7be13522bd4ffd6fcf900c6c149c6938ecc2
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.   # all 16384 hash slots are covered
```
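The slot ranges printed in the allocation step are an even split of the 16384 hash slots over the three masters. The bash sketch below recomputes them for illustration; it is not redis-cli's own code. It assumes that master i (counting from 0) ends at round((i+1) * 16384 / N - 1) and that the last master always ends at 16383, which reproduces the 0-5460 / 5461-10922 / 10923-16383 split shown above. `slot_ranges` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the slot range of each of N masters (assumed even split).
slot_ranges() {
  local masters="$1" total=16384 first=0 last num i
  for ((i = 0; i < masters; i++)); do
    if ((i == masters - 1)); then
      last=$((total - 1))                             # last master takes the remainder
    else
      num=$(( (i + 1) * total - masters ))            # (i+1)*total/masters - 1, times masters
      last=$(( (2 * num + masters) / (2 * masters) )) # integer division, rounded half up
    fi
    echo "Master[$i] -> Slots $first - $last"
    first=$((last + 1))
  done
}

slot_ranges 3
```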

Step 3: connect to Redis inside any container and check the cluster state

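Two important commands:

```
# Connect to Redis (add -c if you also want to read/write keys, so that redis-cli follows cluster redirects)
redis-cli -p 6381

# View the cluster state, including hash slot statistics
cluster info
```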

Sample output (this one was taken on node 6382, as the prompt shows):

```
127.0.0.1:6382> cluster info
cluster_state:ok
cluster_slots_assigned:16384   # 16384 hash slots
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3
cluster_current_epoch:6
cluster_my_epoch:2
cluster_stats_messages_ping_sent:62177
cluster_stats_messages_pong_sent:64979
cluster_stats_messages_meet_sent:1
cluster_stats_messages_sent:127157
cluster_stats_messages_ping_received:64979
cluster_stats_messages_pong_received:62178
cluster_stats_messages_received:127157
```
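The fields that matter most here are `cluster_state:ok` and the 16384 assigned and ok slots. To script that check, for example after restarting the containers, the output can be parsed with awk. A minimal sketch: `cluster_healthy` is a hypothetical helper, and it assumes the output is piped in from `redis-cli -p 6381 cluster info`, which can end lines with `\r\n`, so carriage returns are stripped first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `cluster info` output on stdin and exit 0 only if
# cluster_state is ok and all 16384 slots are assigned and ok.
cluster_healthy() {
  awk -F: '
    { gsub(/\r/, "") }                                  # drop CR from CRLF line endings
    $1 == "cluster_state"          { state = $2 }
    $1 == "cluster_slots_assigned" { assigned = $2 }
    $1 == "cluster_slots_ok"       { slots_ok = $2 }
    END { exit !(state == "ok" && assigned + 0 == 16384 && slots_ok + 0 == 16384) }
  '
}

# Usage: redis-cli -p 6381 cluster info | cluster_healthy && echo "cluster is healthy"
```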

```
# View node information, especially how masters and replicas are paired
cluster nodes
```

```
127.0.0.1:6381> cluster nodes
4ed46e0368698cd9d5af2ee84631c878b8ebc4d0 12.114.161.16:6386@16386 slave 6e961a4765b555189708bebb69badf7dfad25cd5 0 1705242300000 1 connected
f097fec937f54d147d316c1c62e26cb67c9fd059 12.114.161.16:6382@16382 master - 0 1705242299110 2 connected 5461-10922
0e34147ce2544cd90f5cce78d5493ae9e8625dfe 12.114.161.16:6384@16384 slave f097fec937f54d147d316c1c62e26cb67c9fd059 0 1705242300113 2 connected
78ac7be13522bd4ffd6fcf900c6c149c6938ecc2 12.114.161.16:6383@16383 master - 0 1705242298000 3 connected 10923-16383
6e961a4765b555189708bebb69badf7dfad25cd5 12.114.161.16:6381@16381 myself,master - 0 1705242297000 1 connected 0-5460
9bc2417a9cd6545ef2445eb4aa0610d586acd73b 12.114.161.16:6385@16385 slave 78ac7be13522bd4ffd6fcf900c6c149c6938ecc2 0 1705242301116 3 connected
```

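This confirms the 3-master/3-slave layout: the fourth field of each slave line is its master's node ID, which shows which replica belongs to which master (6386 → 6381, 6384 → 6382, 6385 → 6383).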
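Matching the IDs by eye gets tedious, so the same pairing can be extracted with awk. A minimal sketch: `replica_map` is a hypothetical helper, and it relies on the `cluster nodes` field order visible above (node ID, `ip:port@bus-port`, flags, master ID).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `cluster nodes` output on stdin and print one line per
# replica in the form "<replica ip:port> -> <master ip:port>", sorted by replica.
replica_map() {
  awk '
    { gsub(/\r/, "") }
    { addr[$1] = $2; sub(/@.*/, "", addr[$1]) }     # node ID -> ip:port, bus port dropped
    ("," $3 ",") ~ /,slave,/ { slaves[$1] = $4 }     # replica ID -> master ID
    END { for (id in slaves) print addr[id] " -> " addr[slaves[id]] }
  ' | sort
}

# Usage: redis-cli -p 6381 cluster nodes | replica_map
```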

At this point the cluster has been created successfully and all hash slots are assigned.
