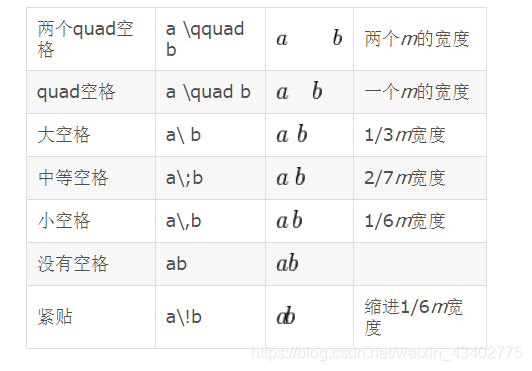

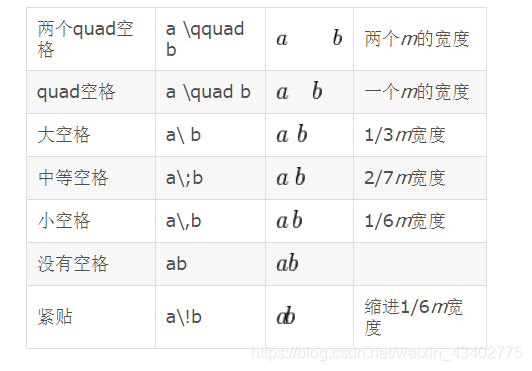

Methods for adding spaces in LaTeX

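This article was submitted by an internet user. Its views are the author's own and do not represent this site. The site only provides information storage space, does not own the copyright, and assumes no related legal liability. If you repost it, please credit the source: http://www.mzph.cn/news/535216.shtml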

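If the content infringes rights, breaks laws or regulations, or is factually wrong, please contact 多彩编程网 by email at 809451989@qq.com to file a complaint. It will be removed once verified.

Related articles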

Table notes in LaTeX

Use the threeparttable package.

Add the following command to the preamble:

\usepackage{threeparttable}

Then insert the following where the table goes:

\begin{table*}

\centering

\caption{....} \label{table:number}

\begin{threeparttable}

\begin{tabular}{lc} \hline A & B \\ \hline

A & B\tnote{*} \…

LaTeX: inserting a table of contents and leaving the first page without a page number

Inserting the table of contents

\begin{document}

\maketitle

\tableofcontents

\newpage % start the rest of the document on a new page

\end{document}

\maketitle

\tableofcontents

\textbf{\ \\\\\\\\\\$*$ means that it is a supplementary experimental result for the manuscript.\\ $**$ means that it is a newly added …

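PyTorch: high GPU memory usage but low GPU utilization

1. GPU memory usage and GPU utilization

Run nvidia-smi to check the GPU memory usage (Memory-Usage) and the GPU utilization (GPU-Util).

GPU memory usage (Memory-Usage) is mostly determined by the size of the model and the batch size…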
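A small helper for reading both numbers from Python. It assumes nvidia-smi is on PATH and uses its `--query-gpu` CSV mode. The 50% memory and 30% utilization thresholds in the hypothetical `looks_underutilized` function are arbitrary illustration values, and the sample string is made up. GPUs that report "[N/A]" for a field would need extra handling.

```python
import shutil
import subprocess

# nvidia-smi CSV query: one row per GPU, no header, numbers without units
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total,utilization.gpu",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(csv_text):
    """Parse rows of 'index, memory.used [MiB], memory.total [MiB], utilization.gpu [%]'."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, used, total, util = (field.strip() for field in line.split(","))
        stats.append({
            "index": int(index),
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
            "memory_pct": 100.0 * int(used) / int(total),
            "gpu_util_pct": int(util),
        })
    return stats


def query_gpu_stats():
    """Run nvidia-smi if it is installed; return an empty list otherwise."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)


def looks_underutilized(stats, mem_pct=50.0, util_pct=30.0):
    """Hypothetical check: GPUs with a lot of memory in use but little compute activity."""
    return [g["index"] for g in stats
            if g["memory_pct"] >= mem_pct and g["gpu_util_pct"] < util_pct]


sample = "0, 8000, 11019, 15\n1, 300, 11019, 0"  # made-up nvidia-smi output
print(looks_underutilized(parse_gpu_stats(sample)))  # [0]
```

On a real machine, replace `parse_gpu_stats(sample)` with `query_gpu_stats()`.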

smbd ERROR: Job for smbd.service failed

sudo service smbd restart

The following problem appears:

Solution

sudo cp /usr/share/samba/smb.conf /etc/samba/

After this, the service can be restarted.

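PyTorch backpropagation example: gradient descent

1. Updating the weight by hand

# Fit y = w * x

x_data = [1.0, 2.0, 3.0, 4.0]

y_data = [2.0, 4.0, 6.0, 8.0]
w = 1.0

def grad(x, y):  # compute the gradient
    # loss = (y_hat - y)^2 = (w*x - y)^2, so d(loss)/dw = 2x(w*x - y)
    return 2 * x * (w * x - y)

def loss(x, y):
    return (y - (w * x)) * (y - (w * x))

for i in range(30):
    for …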
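The training loop above is cut off. The following is a complete sketch of the same hand-written update. It assumes a learning rate of 0.01, which is not visible in the original, and one update per sample. It passes `w` explicitly instead of reading a global.

```python
x_data = [1.0, 2.0, 3.0, 4.0]
y_data = [2.0, 4.0, 6.0, 8.0]


def loss(w, x, y):
    """Squared error of the model y_hat = w * x on one sample."""
    return (w * x - y) ** 2


def grad(w, x, y):
    """d(loss)/dw = 2 * x * (w * x - y)."""
    return 2 * x * (w * x - y)


def train(w=1.0, lr=0.01, epochs=30):
    for epoch in range(epochs):
        for x, y in zip(x_data, y_data):
            w -= lr * grad(w, x, y)  # one gradient-descent step per sample
        if epoch % 10 == 0:
            mean_loss = sum(loss(w, x, y) for x, y in zip(x_data, y_data)) / len(x_data)
            print(f"epoch {epoch:2d}: w = {w:.6f}, mean loss = {mean_loss:.2e}")
    return w


w = train()
print(f"learned w = {w:.6f}")  # approaches the true slope 2.0
```

Each step multiplies the error `w - 2` by `1 - 2 * lr * x**2`. With lr = 0.01 every factor lies between 0 and 1, so `w` converges. A much larger learning rate would make some factors negative or larger than 1 in magnitude and could diverge.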

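Quick offline installation of OpenCV in a Python environment

1. Go to the Tsinghua University mirror of the opencv-python package:

https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple/opencv-python/

Download the OpenCV build that matches your environment.

How can you tell which build matches your environment? Just run

pip install opencv-python  # too slow to download this way, so we don't use it for the download itself. Then download from the website…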
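"Matching your environment" comes down to the tags in the wheel filename (PEP 427: name-version[-build]-python-abi-platform.whl). The following stdlib sketch splits a filename into those tags and prints a rough description of the running interpreter. The filenames are hypothetical examples, and `local_tags` assumes CPython. It ignores manylinux and abi3 compatibility rules, so treat it as a first check only. In recent pip versions, `pip debug --verbose` lists the exact compatible tags.

```python
import sys
import sysconfig


def parse_wheel_filename(filename):
    """Split a wheel filename into its PEP 427 components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel: {filename}")
    parts = filename[:-4].split("-")
    if len(parts) == 6:
        name, version, build, py, abi, plat = parts
    elif len(parts) == 5:
        name, version, py, abi, plat = parts
        build = None
    else:
        raise ValueError(f"unexpected wheel filename: {filename}")
    return {"name": name, "version": version, "build": build,
            "python": py, "abi": abi, "platform": plat}


def local_tags():
    """Rough python/platform tags of this interpreter (CPython assumed)."""
    py = f"cp{sys.version_info.major}{sys.version_info.minor}"
    plat = sysconfig.get_platform().replace("-", "_").replace(".", "_")
    return py, plat


# Hypothetical filename, as listed on a mirror page
print(parse_wheel_filename("opencv_python-4.5.1.48-cp38-cp38-win_amd64.whl"))
print("this interpreter:", local_tags())
```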

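PyTorch: offline installation of matplotlib

Because installing with pip is too slow, we install from an offline package instead.

1. Download the package

Go to https://pypi.org/project/matplotlib/#files and choose the package that matches your environment.

2. cd into the folder that contains the downloaded file

Then run

pip install FILENAME.whl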
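To confirm which version was actually installed, you can use the standard library alone (`importlib.metadata`, Python 3.8+). The helper name `installed_version` is illustrative. From the shell, `python -m pip show matplotlib` gives the same information.

```python
from importlib import metadata


def installed_version(package):
    """Version string of an installed distribution, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


print("matplotlib:", installed_version("matplotlib"))
```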

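Normalization methods: BN, LN, IN, GN

1. Categories

BN normalizes over N, H and W across the batch and keeps the channel dimension C. BN works poorly with small batch sizes. It suits fixed-depth feed-forward networks such as CNNs and is not suitable for RNNs. LN normalizes over C, H and W along the channel direction and is mainly used for RN…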
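The following pure-Python sketch makes "which dimensions are normalized" concrete for an N×C×H×W input stored as a dict of index tuples. It only computes mean/variance normalization, with no learnable scale and shift and no running statistics. The GN group count (2) and the toy sizes are arbitrary assumptions.

```python
import itertools
import math
import random


def normalize(x, shape, group_of, eps=1e-5):
    """Normalize x (dict: (n, c, h, w) -> float). Elements with the same
    group_of(index) share one mean and one (biased) variance."""
    groups = {}
    for idx in itertools.product(*(range(s) for s in shape)):
        groups.setdefault(group_of(idx), []).append(idx)
    out = {}
    for members in groups.values():
        vals = [x[i] for i in members]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        for i in members:
            out[i] = (x[i] - mean) / math.sqrt(var + eps)
    return out, len(groups)


def stat_group(method, channels, num_groups=2):
    """Which elements share statistics for each method (idx = (n, c, h, w))."""
    if method == "BN":
        return lambda idx: idx[1]                 # per channel, over N, H, W
    if method == "LN":
        return lambda idx: idx[0]                 # per sample, over C, H, W
    if method == "IN":
        return lambda idx: (idx[0], idx[1])       # per sample and channel, over H, W
    if method == "GN":
        per_group = channels // num_groups
        return lambda idx: (idx[0], idx[1] // per_group)  # per sample and channel group
    raise ValueError(f"unknown method: {method}")


shape = (2, 4, 3, 3)  # N, C, H, W (toy sizes)
rng = random.Random(0)
x = {idx: rng.gauss(0.0, 1.0) for idx in itertools.product(*(range(s) for s in shape))}

for method in ("BN", "LN", "IN", "GN"):
    _, n_groups = normalize(x, shape, stat_group(method, channels=shape[1]))
    print(method, "statistic groups:", n_groups)
```

BN gets one statistic group per channel (C), LN one per sample (N), IN one per sample and channel (N×C), and GN one per sample and channel group (N×G). BN's groups are the only ones that span the batch, which is why it depends on the batch size.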

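Linux server: copying remote files with SCP

1. Copying files

(1) Copy a local file to the remote machine (sometimes blocked by permissions): scp FILE USER@HOST:REMOTE_PATH, where HOST is the IP address or hostname of the remote machine

scp /root/install.* root@192.168.1.12:/usr/local/src

(2) Copy a file from the remote machine back to the local one: scp USER…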
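The `USER@HOST:PATH` argument order can be captured in a short Python sketch that builds the argument list for both directions. The helper names and the file name `install.log` are hypothetical, and `-r` is scp's flag for copying directories. Without a shell, wildcards such as `install.*` are not expanded, so the sketch uses a concrete file name.

```python
import shlex


def scp_upload(local_path, user, host, remote_dir, recursive=False):
    """Local -> remote: scp [-r] LOCAL USER@HOST:REMOTE_DIR."""
    cmd = ["scp"] + (["-r"] if recursive else [])  # -r copies whole directories
    return cmd + [local_path, f"{user}@{host}:{remote_dir}"]


def scp_download(user, host, remote_path, local_dir, recursive=False):
    """Remote -> local: scp [-r] USER@HOST:REMOTE_PATH LOCAL_DIR."""
    cmd = ["scp"] + (["-r"] if recursive else [])
    return cmd + [f"{user}@{host}:{remote_path}", local_dir]


print(shlex.join(scp_upload("/root/install.log", "root", "192.168.1.12", "/usr/local/src")))
print(shlex.join(scp_download("root", "192.168.1.12", "/usr/local/src/install.log", ".")))
```

To actually run a command, pass the list to `subprocess.run(cmd, check=True)`.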

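PyTorch: model.eval() and model.train() explained

The two only behave differently when the model contains dropout or batch normalization layers.

1. model.eval()

Used in the testing phase of the model.

PyTorch automatically freezes BN and Dropout: instead of using the statistics of each batch, BN uses the trained…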
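The following pure-Python sketch shows why the mode matters for Dropout. The class is a hypothetical stand-in, not `torch.nn.Dropout`. It only mirrors nn.Module's `training` flag and its `train()`/`eval()` method names. It uses inverted dropout (survivors scaled by 1/(1-p) during training), which matches the documented behavior of PyTorch's dropout.

```python
import random


class Dropout:
    """Hypothetical stand-in for nn.Dropout, written in plain Python."""

    def __init__(self, p=0.5, seed=0):
        self.p = p
        self.training = True          # modules start in training mode
        self.rng = random.Random(seed)

    def train(self):
        self.training = True
        return self

    def eval(self):
        self.training = False
        return self

    def __call__(self, xs):
        if not self.training or self.p == 0.0:
            return list(xs)           # eval mode: identity
        keep = 1.0 - self.p
        # zero each element with probability p, scale survivors by 1/(1-p)
        return [x / keep if self.rng.random() < keep else 0.0 for x in xs]


drop = Dropout(p=0.5)
x = [1.0] * 8
train_out = drop(x)           # random zeros, survivors become 2.0
eval_out = drop.eval()(x)     # unchanged input
print(train_out, eval_out)
```

BatchNorm follows the same pattern: it uses batch statistics in training mode and running estimates in eval mode.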

Job for smbd.service failed because the control process exited with error code. See “systemctl statu

Error

$ sudo service smbd restart
Job for smbd.service failed because the control process exited with error code. See "systemctl status smbd.service" and "journalctl -xe" for details.
$ systemctl status smbd.service
smbd.service - Samba SM…

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace o

Problem

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.FloatTensor [4]] is at version 1; expected version 0 instead

Analysis

nn.ReLU(True)  # this caused the problem: the original variable was overwritten…
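The "is at version 1; expected version 0" part of the message comes from the version counter that PyTorch keeps on tensors. Autograd records the version of each tensor it saves for backward, and an in-place op bumps that version. The following toy model of that check is written in plain Python. `Tensor` and `SavedTensor` are hypothetical stand-ins, not PyTorch classes.

```python
class Tensor:
    """Toy tensor with a version counter."""

    def __init__(self, data):
        self.data = list(data)
        self._version = 0

    def relu(self):
        # out-of-place: new tensor, self untouched
        return Tensor(max(0.0, v) for v in self.data)

    def relu_(self):
        # in-place: overwrite the existing buffer and bump the version
        for i, v in enumerate(self.data):
            self.data[i] = max(0.0, v)
        self._version += 1
        return self


class SavedTensor:
    """What a backward node keeps: the tensor plus its version at save time."""

    def __init__(self, tensor):
        self.tensor = tensor
        self.saved_version = tensor._version

    def unpack(self):
        if self.tensor._version != self.saved_version:
            raise RuntimeError(
                "one of the variables needed for gradient computation has been "
                f"modified by an inplace operation: is at version {self.tensor._version}; "
                f"expected version {self.saved_version} instead")
        return self.tensor.data


x = Tensor([-1.0, 2.0])
saved = SavedTensor(x)   # e.g. y = x * x saves x, since dy/dx = 2 * x
y = x.relu()             # out-of-place: x stays at version 0
print(saved.unpack())    # [-1.0, 2.0]

x.relu_()                # in-place: x is now at version 1
error_message = None
try:
    saved.unpack()
except RuntimeError as err:
    error_message = str(err)
print(error_message)
```

The usual fix follows from this analysis. Use `nn.ReLU(inplace=False)` or out-of-place ops on tensors that the backward pass still needs.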

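Effect of batch size on training speed

1. Does a larger batch size mean faster training?

GPU: one 2080 Ti; platform: PyTorch + CUDA; number of images: 2700

| Batch size | Time per iteration (one batch) | GPU memory | GPU utilization | Time per epoch |
|---|---|---|---|---|
| 1 | 0.117 s | 2.5 GB | 20% | 318 s (2700 × 0.117) |
| 5 | 0.516 s | 8 GB | 90% | 279 s (2700 × 0.516 / 5) |

A larger batch size…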
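The last column is iterations per epoch × time per iteration. The following sketch shows that arithmetic. It assumes the second column is the time for one batch, which is what the batch-size-5 formula implies, and rounds the iteration count up for a partial last batch. For batch size 1 it gives 315.9 s, slightly below the 318 s reported in the table.

```python
import math


def epoch_time(num_images, batch_size, seconds_per_iteration):
    """Seconds per epoch = number of iterations x time per iteration."""
    iterations = math.ceil(num_images / batch_size)  # last batch may be partial
    return iterations * seconds_per_iteration


def throughput(batch_size, seconds_per_iteration):
    """Images processed per second."""
    return batch_size / seconds_per_iteration


for bs, t in [(1, 0.117), (5, 0.516)]:  # measurements from the table above
    print(f"batch {bs}: {epoch_time(2700, bs, t):.1f} s/epoch, "
          f"{throughput(bs, t):.1f} img/s")
```

The per-iteration time grows with the batch, but less than linearly, so throughput rises. That rise is what shortens the epoch here.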

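Setting an environment variable with os.environ['CUDA_VISIBLE_DEVICES'] = '0'

os.environ['ENV_VAR_NAME'] = 'value'  # both the key and the value must be strings

import os

os.environ["CUDA_VISIBLE_DEVICES"] = "6,7"

This statement sets which GPU IDs are visible to the program.

Note: if "CUDA_VISIBLE_DEVICES" is misspelled, no error is reported…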
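The following stdlib sketch covers both notes. It shows the value format (comma-separated IDs, strings only) and what happens with a misspelled key. The helper `set_visible_gpus` is hypothetical. CUDA reads the variable when it initializes, so set it before the first CUDA call. The safest place is before importing torch.

```python
import os


def set_visible_gpus(gpu_ids):
    """Expose only the given GPU IDs to CUDA-based libraries such as PyTorch."""
    value = ",".join(str(i) for i in gpu_ids)  # os.environ accepts str values only
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


set_visible_gpus([6, 7])
print(os.environ["CUDA_VISIBLE_DEVICES"])  # 6,7

# A misspelled key is accepted silently: it just creates an unrelated variable.
os.environ["CUDA_VISIBLE_DEVICE"] = "0"    # missing final "S"
print(os.environ["CUDA_VISIBLE_DEVICES"])  # still 6,7

# Non-string values are rejected before anything is changed.
try:
    os.environ["CUDA_VISIBLE_DEVICES"] = 0
except TypeError as exc:
    print("TypeError:", exc)
```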

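The role of inplace in nn.ReLU() vs. nn.ReLU(inplace=True)

inplace

Effects: 1. The ReLU output replaces the variable, overwriting the original directly. 2. It saves memory.

Drawback: gradient backpropagation sometimes fails, because the earlier variable has been overwritten and can no longer be found. See this article.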
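Plain Python lists are enough to show both effects above, memory reuse and an overwritten input. `relu` and `relu_` are hypothetical helpers that only mimic `nn.ReLU(inplace=False)` and `nn.ReLU(inplace=True)`. The trailing underscore follows PyTorch's naming convention for in-place ops.

```python
def relu(values):
    """Out-of-place: allocates a new list and leaves the input untouched."""
    return [max(0.0, v) for v in values]


def relu_(values):
    """In-place: reuses the input's storage and overwrites it."""
    for i, v in enumerate(values):
        values[i] = max(0.0, v)
    return values


x = [-1.5, 0.5, 3.0]
y = relu(x)
print(y is x, x)     # False [-1.5, 0.5, 3.0]  (extra buffer, original kept)

x2 = [-1.5, 0.5, 3.0]
y2 = relu_(x2)
print(y2 is x2, x2)  # True [0.0, 0.5, 3.0]  (no extra buffer, original lost)
```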

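PyTorch: loading pretrained models (multi-GPU model loading a single-GPU pretrained model, multi-GPU, single-GPU)

When loading a pretrained model in PyTorch, you may run into the following cases:
1. A multi-GPU model loading a single-GPU pretrained model
2. A multi-GPU model loading a multi-GPU pretrained model
3. A single-GPU model loading a single-GPU pretrained model
4. A single-GPU model loading a multi-GPU pretrain…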
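These cases usually come down to one naming difference. Models wrapped in `nn.DataParallel` (or `DistributedDataParallel`) save their parameters under keys prefixed with `module.`. The following sketch renames keys in either direction. The checkpoint below is a hypothetical stand-in with plain numbers instead of tensors. Real state dicts are ordered mappings of tensors, and the renaming logic is the same.

```python
from collections import OrderedDict

PREFIX = "module."  # added by nn.DataParallel / DistributedDataParallel


def strip_module_prefix(state_dict):
    """Multi-GPU checkpoint -> plain (single-GPU) model: drop 'module.'."""
    return OrderedDict(
        (key[len(PREFIX):] if key.startswith(PREFIX) else key, value)
        for key, value in state_dict.items()
    )


def add_module_prefix(state_dict):
    """Single-GPU checkpoint -> model wrapped for multi-GPU: add 'module.'."""
    return OrderedDict(
        (key if key.startswith(PREFIX) else PREFIX + key, value)
        for key, value in state_dict.items()
    )


multi_gpu_ckpt = OrderedDict([("module.conv1.weight", 0.1), ("module.fc.bias", 0.2)])
single = strip_module_prefix(multi_gpu_ckpt)
print(list(single))                     # ['conv1.weight', 'fc.bias']
print(list(add_module_prefix(single)))  # ['module.conv1.weight', 'module.fc.bias']
```

With PyTorch, case 4 would look roughly like `model.load_state_dict(strip_module_prefix(torch.load(path, map_location="cpu")))`. For case 1, you can either add the prefix or load into the model before wrapping it, or into `model.module` after wrapping.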

Converting between NumPy arrays and lists in Python

list to numpy

import numpy as np

a = [1, 2]

b = np.array(a)

numpy to list

a = np.zeros((1, 1))

a.tolist()  # [[0.0]]

Knowledge distillation: notes on related papers

Papers related to knowledge distillation:
1. FitNets: Hints for Thin Deep Nets (ICLR 2015)
2. A Gift from Knowledge Distillation: Fast Optimization, Network Minimization and Transfer Learning (CVPR 2017)
3. Matching Guided Distillation(…

Model compression: notes on related papers

Papers related to model compression:

Learning both Weights and Connections for Efficient Neural Networks (NIPS 2015)

Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding (ICLR 2016)

Learning both Weights and …
