Papers on model compression

- Learning both Weights and Connections for Efficient Neural Networks (NIPS2015)

- Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding (ICLR2016)

Learning both Weights and Connections for Efficient Neural Networks (NIPS2015)

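Goal of the paper:

During training, learn not only the weights but also which connections matter, then remove the unimportant connections.

Paper content: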

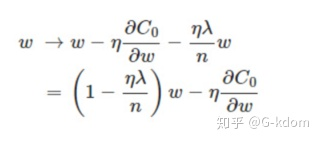

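1. Use L2 regularization

2. Adjust the dropout ratio: lower it during retraining, because the pruned network has fewer connections

3. Parameter co-adaptation: when retraining after pruning, keeping the surviving weights at their trained values works better than re-initializing them.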

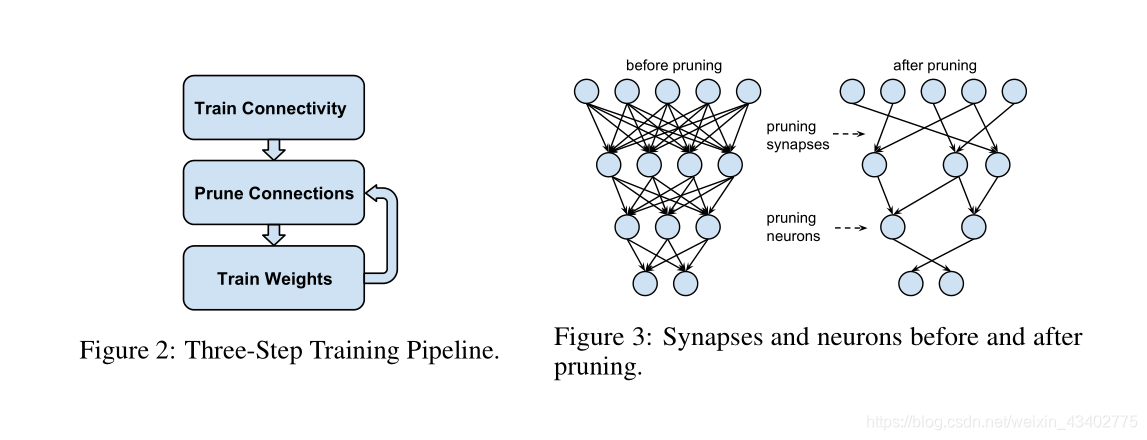

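4. Prune connections iteratively: prune -> retrain -> prune -> retrain

5. Prune zero-valued neurons, i.e. neurons whose input or output connections have all been removed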
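
The prune -> retrain loop in points 2 to 4 can be sketched in plain Python. This is a minimal illustration, not the paper's implementation: weights are a flat list, pruning is by absolute magnitude, and `magnitude_mask`, `adjusted_dropout`, `iterative_prune` and `fake_retrain` are hypothetical names. The dropout adjustment assumes the square-root scaling D_r = D_o * sqrt(C_ir / C_io), where C_io and C_ir are the connection counts before and after pruning.

```python
import math


def magnitude_mask(weights, sparsity):
    """Return a 0/1 mask that drops the `sparsity` fraction of smallest-|w| weights."""
    num_pruned = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first. Already-pruned
    # weights are zero, so they stay pruned as long as sparsity does not decrease.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:num_pruned]:
        mask[i] = 0
    return mask


def adjusted_dropout(original_rate, conns_before, conns_after):
    """Scale the dropout rate down for the sparser network (assumed formula)."""
    return original_rate * math.sqrt(conns_after / conns_before)


def iterative_prune(weights, sparsity_schedule, retrain):
    """Alternate pruning and retraining, one round per entry in the schedule."""
    weights = list(weights)
    mask = [1] * len(weights)
    for sparsity in sparsity_schedule:
        mask = magnitude_mask(weights, sparsity)
        weights = [w * m for w, m in zip(weights, mask)]  # prune
        # Retrain starting from the surviving values, not from a fresh init.
        weights = retrain(weights, mask)
        weights = [w * m for w, m in zip(weights, mask)]  # keep pruned weights at zero
    return weights, mask


def fake_retrain(weights, mask):
    """Hypothetical stand-in for a real training loop; returns weights unchanged."""
    return weights


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2, -0.03, 0.6]
pruned_weights, mask = iterative_prune(weights, [0.25, 0.5], fake_retrain)
dropout = adjusted_dropout(0.5, conns_before=len(weights), conns_after=sum(mask))
print(mask, dropout)
```

In a real network, `retrain` would run gradient descent with the mask applied, and the schedule would usually be set per layer.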

Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding (ICLR2016)

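Paper content:

The network is compressed in three stages: pruning, reducing parameter precision (trained quantization), and Huffman coding.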
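
The three stages can be sketched as a toy pipeline over a flat list of weights. This is a simplified illustration under several assumptions, not the paper's implementation: pruning uses a fixed magnitude threshold, quantization is 1-D k-means with linearly spaced initial centroids and no fine-tuning of the codebook, and only the surviving weights are quantized and coded. The sparse index format is left out. The function names `prune`, `quantize` and `huffman_code` are hypothetical.

```python
import heapq
from collections import Counter


def prune(weights, threshold):
    """Stage 1: zero out weights whose magnitude is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize(values, num_clusters, iterations=10):
    """Stage 2: share weights via 1-D k-means; returns (codebook, cluster indices)."""
    lo, hi = min(values), max(values)
    step = (hi - lo) / max(num_clusters - 1, 1)
    centroids = [lo + step * c for c in range(num_clusters)]  # linear init

    def assign():
        return [min(range(num_clusters), key=lambda c: abs(v - centroids[c]))
                for v in values]

    for _ in range(iterations):
        indices = assign()
        for c in range(num_clusters):
            members = [v for v, i in zip(values, indices) if i == c]
            if members:  # leave empty clusters where they are
                centroids[c] = sum(members) / len(members)
    return centroids, assign()


def huffman_code(symbols):
    """Stage 3: build a prefix code in which frequent symbols get shorter codes."""
    counts = Counter(symbols)
    if len(counts) == 1:
        return {next(iter(counts)): "0"}
    # Heap entries are (count, tiebreak, partial code table).
    heap = [(n, i, {sym: ""}) for i, (sym, n) in enumerate(counts.items())]
    heapq.heapify(heap)
    tiebreak = len(heap)
    while len(heap) > 1:
        n1, _, codes1 = heapq.heappop(heap)
        n2, _, codes2 = heapq.heappop(heap)
        merged = {s: "0" + code for s, code in codes1.items()}
        merged.update({s: "1" + code for s, code in codes2.items()})
        heapq.heappush(heap, (n1 + n2, tiebreak, merged))
        tiebreak += 1
    return heap[0][2]


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2, -0.03, 0.6, 0.85, 0.45]
pruned = prune(weights, threshold=0.1)
survivors = [w for w in pruned if w != 0.0]
codebook, indices = quantize(survivors, num_clusters=4)
codes = huffman_code(indices)
bitstream = "".join(codes[i] for i in indices)
print(codebook, indices, bitstream)
```

Each surviving weight is stored as a short cluster index plus a shared codebook entry instead of a full-precision value, and Huffman coding then shortens the indices that occur most often.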