Table of Contents

- KL divergence

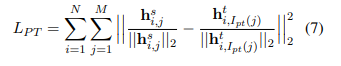

- L2 loss

- Normalization

- CEloss

- CTCLoss

- AdaptiveAvgPool2d

KL divergence

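When computing the KL divergence, watch the argument order and remember the log: nn.KLDivLoss expects its first argument (the student output) as log-probabilities and its second argument (the teacher target) as plain probabilities.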

import torch.nn as nn

import torch.nn.functional as F

d_loss = nn.KLDivLoss(reduction=reduction_kd)(F.log_softmax(y / T, dim=1), F.softmax(teacher_scores / T, dim=1)) * T * T
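To make the two cautions (argument order and the log) concrete, here is a minimal pure-Python sketch of what the expression computes for a single sample. The helper names `softmax`, `kl_div`, and `distill_loss` and the logit values are made up for illustration, and batch reduction is ignored.

```python
import math

def softmax(logits, T=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_div(log_q, p):
    """sum(p * (log p - log q)): log_q plays the role of the first
    (input) argument of nn.KLDivLoss, p the second (target)."""
    return sum(pi * (math.log(pi) - lqi) for pi, lqi in zip(p, log_q) if pi > 0)

def distill_loss(student_logits, teacher_logits, T):
    log_q = [math.log(q) for q in softmax(student_logits, T)]  # student: log-probabilities
    p = softmax(teacher_logits, T)                             # teacher: probabilities
    return kl_div(log_q, p) * T * T

student = [2.0, 0.5, -1.0]  # illustrative logits, not from the post
teacher = [1.5, 1.0, -0.5]

forward = distill_loss(student, teacher, T=2.0)
swapped = distill_loss(teacher, student, T=2.0)  # arguments in the wrong order
print(forward, swapped)
```

The two printed values differ because KL divergence is not symmetric, which is why swapping student and teacher silently changes the loss.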

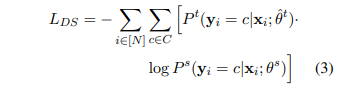

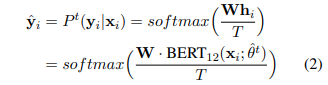

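In the distillation loss, T is the softmax temperature. The loss is multiplied by T * T so that gradient magnitudes stay comparable as T changes.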

L2 loss

import torch.nn.functional as F

F.mse_loss(teacher_patience.float(), student_patience.float()).half()

Normalization

if normalized_patience:
    teacher_patience = F.normalize(teacher_patience, p=2, dim=2)
    student_patience = F.normalize(student_patience, p=2, dim=2)

L2 norm

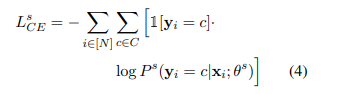
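As a rough illustration of why the features are normalized before the L2 loss, the sketch below mirrors `F.normalize(..., p=2)` and `F.mse_loss` on plain Python lists. The helpers `l2_normalize` and `mse` and the two vectors are hypothetical stand-ins, not the tensors used in the original training code.

```python
import math

def l2_normalize(vec, eps=1e-12):
    """Divide a vector by its L2 norm (clamped below by eps to avoid division by zero)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / max(norm, eps) for x in vec]

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

teacher_vec = [3.0, 4.0]  # illustrative feature vectors:
student_vec = [6.0, 8.0]  # same direction, different scale

raw_loss = mse(teacher_vec, student_vec)
normalized_loss = mse(l2_normalize(teacher_vec), l2_normalize(student_vec))
print(raw_loss, normalized_loss)
```

After normalization only the direction of each feature vector matters, so a student whose features are merely a rescaled copy of the teacher's is no longer penalized.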

CEloss

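Used for classification problems. F.cross_entropy takes raw (unnormalized) logits and integer class labels and applies log_softmax internally, so the model output should not be passed through softmax first.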

nll_loss = F.cross_entropy(y, labels, reduction=reduction_nll)

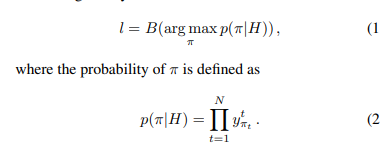
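The following pure-Python sketch shows what cross entropy on logits amounts to, assuming integer class labels and mean reduction. The function name `cross_entropy` and the sample logits are illustrative only.

```python
import math

def cross_entropy(logits, labels):
    """Mean over the batch of -log softmax(logits)[label]."""
    losses = []
    for row, label in zip(logits, labels):
        m = max(row)  # log-sum-exp with the max subtracted for stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in row))
        losses.append(log_sum_exp - row[label])
    return sum(losses) / len(losses)

uniform = cross_entropy([[0.0, 0.0]], [0])          # model has no preference
confident_right = cross_entropy([[5.0, 0.0]], [0])  # strongly favors the true class
confident_wrong = cross_entropy([[5.0, 0.0]], [1])  # strongly favors the wrong class
print(uniform, confident_right, confident_wrong)
```

With two classes and equal logits the loss is log 2. It shrinks toward zero as the logit of the true class grows, and grows roughly linearly with the logit gap when the model is confidently wrong.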

CTCLoss

Computes the loss between a continuous (unsegmented) time series and a target sequence.

torch.nn.CTCLoss(blank=0, reduction='mean', zero_infinity=False)

>>> import torch

>>> import torch.nn as nn

>>> # Targets are to be padded

>>> T = 50 # Input sequence length

>>> C = 20 # Number of classes (including blank)

>>> N = 16 # Batch size

>>> S = 30 # Target sequence length of longest target in batch (padding length)

>>> S_min = 10 # Minimum target length, for demonstration purposes

>>>

>>> # Initialize random batch of input vectors, for *size = (T,N,C)

>>> input = torch.randn(T, N, C).log_softmax(2).detach().requires_grad_()

>>>

>>> # Initialize random batch of targets (0 = blank, 1:C = classes)

>>> target = torch.randint(low=1, high=C, size=(N, S), dtype=torch.long)

>>>

>>> input_lengths = torch.full(size=(N,), fill_value=T, dtype=torch.long)

>>> target_lengths = torch.randint(low=S_min, high=S, size=(N,), dtype=torch.long)

>>> ctc_loss = nn.CTCLoss()

>>> loss = ctc_loss(input, target, input_lengths, target_lengths)

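Pipeline: convolve the image into a feature sequence, then compute the loss on that sequence.

(31, 1, 64, 256) → conv → (31, 512, 3, 65) → permute → (31, 65, 512, 3) → pooling → (31, 65, 512) → linear → (31, 65, 103) → permute to (T, N, C) → (65, 31, 103)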
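The shape bookkeeping in this pipeline can be traced with plain tuples. This is only a sketch under stated assumptions: `permute` is a hypothetical helper that mimics `Tensor.permute` on shapes, pooling is assumed to average away the height axis of size 3, and 103 is assumed to be the number of classes including the blank.

```python
def permute(shape, order):
    """Reorder the axes of a shape tuple, as Tensor.permute does for a tensor."""
    return tuple(shape[i] for i in order)

N, T, C = 31, 65, 103  # batch size, time steps, classes (numbers taken from the note)

images = (N, 1, 64, 256)   # input batch: (N, channels, H, W)
features = (N, 512, 3, T)  # CNN output as stated in the note (not computed here)

seq = permute(features, (0, 3, 1, 2))   # the width axis becomes the time axis
pooled = seq[:-1]                       # pooling removes the last axis (height 3)
logits = pooled[:-1] + (C,)             # linear layer maps 512 features to C classes
ctc_input = permute(logits, (1, 0, 2))  # CTCLoss expects (T, N, C)

input_lengths = [ctc_input[0]] * ctc_input[1]  # every sample uses all T time steps
print(seq, pooled, logits, ctc_input)
```

In real PyTorch code the (T, N, C) tensor would also go through `log_softmax` over the class axis before being passed to `nn.CTCLoss`, since the loss expects log-probabilities.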

AdaptiveAvgPool2d

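Adaptive pooling: you specify only the desired output H and W, and the batch dimension B and the channel dimension N (C) stay unchanged.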
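A minimal sketch of adaptive average pooling on a single H x W plane is shown below. In a real tensor the same operation is applied independently to every (batch, channel) plane, which is why B and C do not change. The function name and the window-boundary rule (start = floor(i * in / out), end = ceil((i + 1) * in / out)) reflect my understanding of how PyTorch picks windows and should be read as an assumption.

```python
def adaptive_avg_pool2d(plane, out_h, out_w):
    """Average-pool a 2D list of numbers down to exactly out_h x out_w."""
    in_h, in_w = len(plane), len(plane[0])
    out = []
    for i in range(out_h):
        h0 = (i * in_h) // out_h                    # floor(i * in_h / out_h)
        h1 = ((i + 1) * in_h + out_h - 1) // out_h  # ceil((i + 1) * in_h / out_h)
        row = []
        for j in range(out_w):
            w0 = (j * in_w) // out_w
            w1 = ((j + 1) * in_w + out_w - 1) // out_w
            window = [plane[r][c] for r in range(h0, h1) for c in range(w0, w1)]
            row.append(sum(window) / len(window))
        out.append(row)
    return out

# A 4 x 6 plane holding the values 0..23 in row-major order.
plane = [[float(r * 6 + c) for c in range(6)] for r in range(4)]

pooled = adaptive_avg_pool2d(plane, 2, 3)        # each output cell averages a 2 x 2 window
global_mean = adaptive_avg_pool2d(plane, 1, 1)   # output size (1, 1) is global average pooling
print(pooled, global_mean)
```

Because the window sizes are derived from the input and output sizes, the caller never specifies a kernel size or stride, only the target H and W.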
