Previous post: Enterprise Practice_20_MyCat: Load Balancing Mycat with HAProxy

https://gblfy.blog.csdn.net/article/details/100087884

| Hostname | IP address | Roles |
|---|---|---|
| mycat | 192.168.43.32 | MYCAT, MYSQL, ZK, Haproxy, Keepalived |
| node1 | 192.168.43.104 | MYSQL, ZK |
| node2 | 192.168.43.217 | MYSQL, ZK |
| node3 | 192.168.43.172 | MYSQL, MYCAT, Haproxy, Keepalived |

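Contents

- I. mycat01 node
  - 1. Install keepalived online
  - 2. Get the network interface name
  - 3. Configure keepalived.conf
  - 4. Write the monitoring script
  - 5. Make the script executable
  - 6. Start keepalived
  - 7. Check keepalived status
  - 8. Check the VIP
- II. mycat02 node
  - 2.1. Install keepalived online
  - 2.2. Get the network interface name
  - 2.3. Configure keepalived.conf
  - 2.4. Write the monitoring script
  - 2.5. Make the script executable
  - 2.6. Start keepalived
  - 2.7. Check keepalived status
  - 2.8. Check the VIP
- III. VIP failover simulation
  - 3.1. Verification approach
  - 3.2. Stop keepalived on the master node
  - 3.3. Verify on the backup node
  - 3.4. Restart keepalived on the master node
  - 3.5. Log in to the backup node
  - 3.6. Conclusion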

I. mycat01 node

1. Install keepalived online

# Install the dependency packages
yum install -y curl gcc openssl-devel libnl3-devel net-snmp-devel
# Install keepalived with yum
yum install -y keepalived

2. 获取网卡名

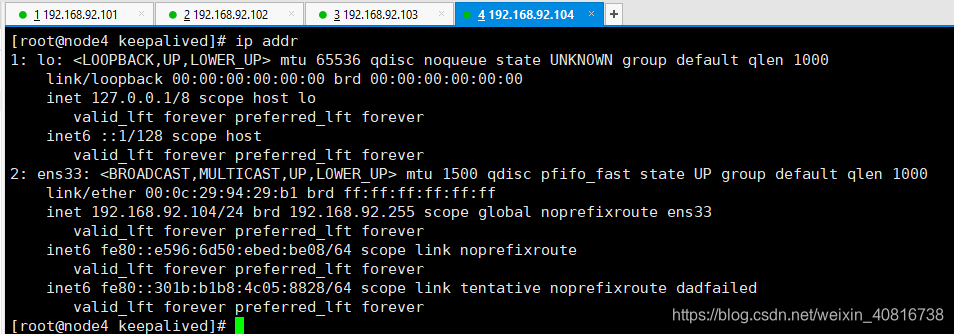

# 获取网卡名,等会用于绑定

ip addr
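If you would rather not pick the interface name out of the `ip addr` output by eye, a small filter can read it from the default route instead. The following is only a sketch: `default_iface` is a hypothetical helper name introduced here, and it assumes the interface you want is the one carrying the default route, which may not be true on multi-homed hosts.

```shell
#!/bin/bash
# default_iface: read `ip route` output on stdin and print the device of the
# first default route. Hypothetical helper, not part of keepalived or iproute2.
default_iface() {
    awk '$1 == "default" {
        for (i = 1; i < NF; i++) {
            if ($i == "dev") { print $(i + 1); exit }
        }
    }'
}

# Typical use on the node itself:
#   ip -4 route show default | default_iface
```

The printed name is what goes into the `interface` line of keepalived.conf in the next step.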

3. Configure keepalived.conf

# Change to /etc/keepalived/
cd /etc/keepalived/
# Edit keepalived.conf
vim keepalived.conf

! Configuration File for keepalived

vrrp_script chk_http_port {
    script "/etc/keepalived/check_haproxy.sh"
    interval 2
    weight 2
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_http_port
    }
    virtual_ipaddress {
        192.168.92.127 dev ens33 scope global
    }
}

Note: where does the virtual IP (VIP) come from?

On your own virtual machines: pick any unused IP in the same subnet as the host.

In a company environment: you need to request one by email.
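As a quick sanity check on a candidate VIP, the sketch below compares it with the host address. It assumes a /24 netmask, which matches the `192.168.92.101/24` address shown on ens33 later in this post; `same_subnet24` is a hypothetical helper name, and other netmasks would need a different comparison.

```shell
#!/bin/bash
# same_subnet24: succeed if two IPv4 addresses share their first three octets,
# i.e. they are in the same /24 network. Hypothetical helper; assumes a /24 mask.
same_subnet24() {
    [ "${1%.*}" = "${2%.*}" ]
}

HOST_IP="192.168.92.101"   # address of ens33 in this walkthrough
VIP="192.168.92.127"       # candidate virtual IP

if same_subnet24 "$HOST_IP" "$VIP"; then
    echo "$VIP is in the same /24 as $HOST_IP"
else
    echo "$VIP is outside the /24 of $HOST_IP"
fi

# Before using the candidate, check that nothing already answers on it, e.g.:
#   ping -c 2 -W 1 "$VIP"
# No reply suggests the address is free, although a firewall can hide a live host.
```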

4. Write the monitoring script

# Create check_haproxy.sh
vim /etc/keepalived/check_haproxy.sh
# Add the following content

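#!/bin/bash
# Health check run by keepalived: restart HAProxy if it is down, then report its state.
STARTHAPROXY="/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg"
#STOPKEEPALIVED="/etc/init.d/keepalived stop"
STOPKEEPALIVED="/usr/bin/systemctl stop keepalived"   # defined but not called below
LOGFILE="/var/log/keepalived-haproxy-state.log"

echo "[check_haproxy status]" >> "$LOGFILE"
date >> "$LOGFILE"

# Count the running haproxy processes.
A=$(ps -C haproxy --no-header | wc -l)

# HAProxy is down: log the start command, run it, and give it time to come up.
if [ "$A" -eq 0 ]; then
    echo "$STARTHAPROXY" >> "$LOGFILE"
    $STARTHAPROXY >> "$LOGFILE" 2>&1
    sleep 5
fi

# keepalived treats exit 0 as a passing check and non-zero as a failure.
if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then
    exit 1    # HAProxy is still not running
else
    exit 0    # HAProxy is running
fi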

5. Make the script executable

# Give the script execute permission

chmod a+x /etc/keepalived/check_haproxy.sh

6. Start keepalived

# Start keepalived

systemctl start keepalived

7. Check keepalived status

# Check the status of keepalived

systemctl status keepalived

8. Check the VIP

ip addr
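The same check can be scripted so that it yields a yes/no answer instead of output to read through. This is a minimal sketch: `has_vip` is a hypothetical helper name, and it simply looks for the address in the `inet` lines of whatever `ip addr` output it is given on stdin.

```shell
#!/bin/bash
# has_vip: read `ip addr` output on stdin and succeed if the given IPv4
# address is assigned to an interface. Hypothetical helper for this walkthrough.
has_vip() {
    # -F matches the string literally, so the dots are not regex wildcards;
    # the trailing "/" prevents 192.168.92.12 from matching 192.168.92.127.
    grep -qF "inet $1/"
}

# Typical use on either node:
#   ip -4 addr show dev ens33 | has_vip 192.168.92.127 && echo "VIP is on this node"
```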

II. mycat02 node

2.1. Install keepalived online

# Install the dependency packages
yum install -y curl gcc openssl-devel libnl3-devel net-snmp-devel
# Install keepalived with yum
yum install -y keepalived

2.2. Get the network interface name

# Get the interface name; it is needed later to bind the VIP

ip addr

2.3. Configure keepalived.conf

# Change to /etc/keepalived/
cd /etc/keepalived/
# Edit keepalived.conf and replace the default contents with the configuration below
vim keepalived.conf

! Configuration File for keepalived

vrrp_script chk_http_port {
    script "/etc/keepalived/check_haproxy.sh"
    interval 2
    weight 2
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 120
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_http_port
    }
    virtual_ipaddress {
        192.168.92.127 dev ens33 scope global
    }
}

2.4. Write the monitoring script

# Create check_haproxy.sh
vim /etc/keepalived/check_haproxy.sh
# Add the following content

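#!/bin/bash
# Health check run by keepalived: restart HAProxy if it is down, then report its state.
STARTHAPROXY="/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg"
#STOPKEEPALIVED="/etc/init.d/keepalived stop"
STOPKEEPALIVED="/usr/bin/systemctl stop keepalived"   # defined but not called below
LOGFILE="/var/log/keepalived-haproxy-state.log"

echo "[check_haproxy status]" >> "$LOGFILE"
date >> "$LOGFILE"

# Count the running haproxy processes.
A=$(ps -C haproxy --no-header | wc -l)

# HAProxy is down: log the start command, run it, and give it time to come up.
if [ "$A" -eq 0 ]; then
    echo "$STARTHAPROXY" >> "$LOGFILE"
    $STARTHAPROXY >> "$LOGFILE" 2>&1
    sleep 5
fi

# keepalived treats exit 0 as a passing check and non-zero as a failure.
if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then
    exit 1    # HAProxy is still not running
else
    exit 0    # HAProxy is running
fi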

2.5. Make the script executable

# Give the script execute permission

chmod a+x /etc/keepalived/check_haproxy.sh

2.6. Start keepalived

# Start keepalived

systemctl start keepalived

2.7. Check keepalived status

# Check the status of keepalived

systemctl status keepalived

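2.8. Check the VIP

ip addr

Notice that the VIP is not on this node.

Explanation:

The keepalived MASTER node is configured with priority 150 and the keepalived BACKUP node with priority 120, so by default the VIP sits on the machine with the higher priority.

When does the VIP move to the keepalived BACKUP node?

When the keepalived master node goes down, the VIP automatically moves to the keepalived backup node.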
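The priority arithmetic behind this can be sketched in a few lines. The sketch reflects my understanding of keepalived's documented rule for a tracked script with a positive `weight`: the weight is added to the configured priority only while the script exits 0. `effective_priority` is a hypothetical helper, not a keepalived command.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT SCRIPT_EXIT
# Sketch of the priority adjustment for a tracked script with a positive
# weight: the weight is added only while the script succeeds (exit 0).
effective_priority() {
    if [ "$3" -eq 0 ]; then
        echo $(( $1 + $2 ))
    else
        echo "$1"
    fi
}

# Values taken from the two keepalived.conf files above (weight 2).
echo "master, check passing: $(effective_priority 150 2 0)"
echo "backup, check passing: $(effective_priority 120 2 0)"
echo "master, check failing: $(effective_priority 150 2 1)"
```

With these numbers the master stays ahead of the backup even when its check fails, because the 30-point priority gap is much larger than the weight of 2. Under that reading, the VIP moves only when keepalived on the master stops advertising, which is what the simulation in the next part does.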

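III. VIP failover simulation

3.1. Verification approach

Stop the service on the node that currently holds the VIP (the steps below stop keepalived on the master node); keepalived then moves the VIP to the other HAProxy machine. When the stopped node is started again, the VIP moves back, because that node is configured with the higher priority.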

3.2. Stop keepalived on the master node

[root@node1 ~]# systemctl stop keepalived
[root@node1 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:72:fe:1f brd ff:ff:ff:ff:ff:ff
    inet 192.168.92.101/24 brd 192.168.92.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::e596:6d50:ebed:be08/64 scope link tentative noprefixroute dadfailed
       valid_lft forever preferred_lft forever
    inet6 fe80::301b:b1b8:4c05:8828/64 scope link tentative noprefixroute dadfailed
       valid_lft forever preferred_lft forever
    inet6 fe80::dec4:912f:fd7f:bbd5/64 scope link tentative noprefixroute dadfailed
       valid_lft forever preferred_lft forever
[root@node1 ~]#

The VIP is gone from this node.

3.3. Verify on the backup node

ip addr

The VIP has automatically moved to the backup node.

3.4. Restart keepalived on the master node

[root@node1 ~]# systemctl start keepalived
You have new mail in /var/spool/mail/root
[root@node1 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:72:fe:1f brd ff:ff:ff:ff:ff:ff
    inet 192.168.92.101/24 brd 192.168.92.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet 192.168.92.127/32 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::e596:6d50:ebed:be08/64 scope link tentative noprefixroute dadfailed
       valid_lft forever preferred_lft forever
    inet6 fe80::301b:b1b8:4c05:8828/64 scope link tentative noprefixroute dadfailed
       valid_lft forever preferred_lft forever
    inet6 fe80::dec4:912f:fd7f:bbd5/64 scope link tentative noprefixroute dadfailed
       valid_lft forever preferred_lft forever
[root@node1 ~]#

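3.5. Log in to the backup node

ip addr

The VIP is no longer on the backup node; it has automatically moved back to the master node.

3.6. Conclusion

The master node's keepalived priority is higher than the backup node's. As a result, while keepalived is running normally on both nodes the VIP stays on the master node, and when the master node goes down the VIP automatically moves to the backup node.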

Next post: Enterprise Practice_22_MyCat SQL Interception

https://gblfy.blog.csdn.net/article/details/100073474
