
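Application-level scaling is defined in contrast to ops-level scaling. Metrics such as CPU and memory utilization are application-agnostic, purely operational metrics, and an HPA configuration that scales on them performs ops-level scaling. Metrics such as request count, request latency, and the P99 latency distribution, on the other hand, are application-related (or business-aware) monitoring metrics.

This article shows how to configure three kinds of application-level metrics in an HPA to achieve application-level autoscaling.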

Set up HPA

1 Deploy metrics-adapter

Run the following command to deploy kube-metrics-adapter (for the complete script, see demo_hpa.sh):

helm --kubeconfig "$USER_CONFIG" -n kube-system install asm-custom-metrics \
  $KUBE_METRICS_ADAPTER_SRC/deploy/charts/kube-metrics-adapter \
  --set prometheus.url=http://prometheus.istio-system.svc:9090

Run the following commands to verify the deployment:

# Verify the Pod

kubectl --kubeconfig "$USER_CONFIG" get po -n kube-system | grep metrics-adapter
asm-custom-metrics-kube-metrics-adapter-6fb4949988-ht8pv   1/1     Running   0          30s

# Verify the autoscaling API versions

kubectl --kubeconfig "$USER_CONFIG" api-versions | grep "autoscaling/v2beta"
autoscaling/v2beta1
autoscaling/v2beta2

# Verify the external metrics API

kubectl --kubeconfig "$USER_CONFIG" get --raw "/apis/external.metrics.k8s.io/v1beta1" | jq .
{
  "kind": "APIResourceList",
  "apiVersion": "v1",
  "groupVersion": "external.metrics.k8s.io/v1beta1",
  "resources": []
}

2 Deploy loadtester

Run the following commands to deploy the Flagger loadtester:

kubectl --kubeconfig "$USER_CONFIG" apply -f $FLAAGER_SRC/kustomize/tester/deployment.yaml -n test

kubectl --kubeconfig "$USER_CONFIG" apply -f $FLAAGER_SRC/kustomize/tester/service.yaml -n test

3 Deploy HPA

3.1 Scaling on application request count

First, create a HorizontalPodAutoscaler configuration that is aware of the application request count (istio_requests_total):

apiVersion: autoscaling/v2beta2

kind: HorizontalPodAutoscaler

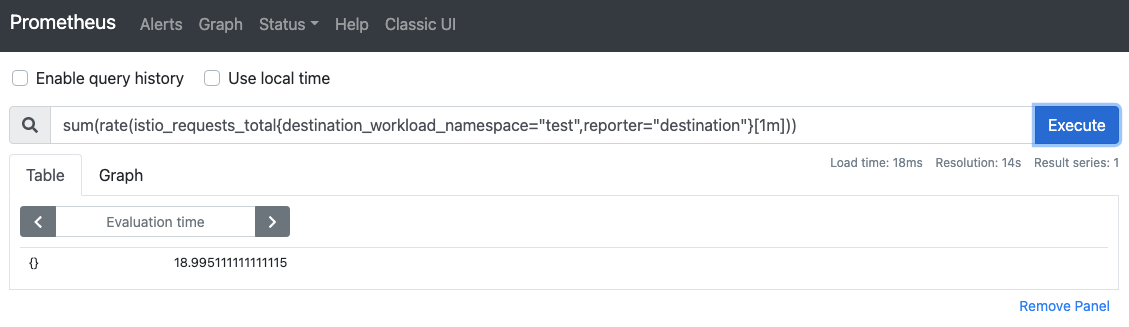

metadata:name: podinfo-totalnamespace: testannotations:metric-config.external.prometheus-query.prometheus/processed-requests-per-second: |sum(rate(istio_requests_total{destination_workload_namespace="test",reporter="destination"}[1m]))

spec:maxReplicas: 5minReplicas: 1scaleTargetRef:apiVersion: apps/v1kind: Deploymentname: podinfometrics:- type: Externalexternal:metric:name: prometheus-queryselector:matchLabels:query-name: processed-requests-per-secondtarget:type: AverageValueaverageValue: "10"执行如下命令部署这个HPA配置:

kubectl --kubeconfig "$USER_CONFIG" apply -f resources_hpa/requests_total_hpa.yaml

Run the following command to verify:

kubectl --kubeconfig "$USER_CONFIG" get --raw "/apis/external.metrics.k8s.io/v1beta1" | jq .

The result is as follows:

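{
  "kind": "APIResourceList",
  "apiVersion": "v1",
  "groupVersion": "external.metrics.k8s.io/v1beta1",
  "resources": [
    {
      "name": "prometheus-query",
      "singularName": "",
      "namespaced": true,
      "kind": "ExternalMetricValueList",
      "verbs": [
        "get"
      ]
    }
  ]
}

Unlike the check run right after installing the adapter, the external metrics API now lists the prometheus-query resource.

In the same way, HPAs can be configured on other dimensions of application-level metrics. Two more examples follow. The deployment and verification steps are the same, so they are not repeated.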
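The HPAs in this section differ only in four fields: the HPA name, the query name (which appears both in the annotation key and in the query-name selector), the PromQL expression, and the target value. Because so little varies, the manifests can also be generated from a template. The sketch below uses only the Python 3 standard library. Its hypothetical helper external_hpa renders such a manifest as JSON, a format that kubectl apply -f also accepts. The defaults (namespace test, Deployment podinfo, 1 to 5 replicas) are taken from the examples here. The apiVersion autoscaling/v2beta2 follows the article; current clusters use autoscaling/v2, because v2beta2 was removed in Kubernetes 1.26.

```python
import json


def external_hpa(name: str, query_name: str, promql: str, target: str,
                 namespace: str = "test", deployment: str = "podinfo",
                 min_replicas: int = 1, max_replicas: int = 5) -> dict:
    """Hypothetical helper: build an HPA manifest in the shape used in this
    article (External metric served by kube-metrics-adapter's Prometheus
    collector, configured through a metadata annotation)."""
    annotation_key = f"metric-config.external.prometheus-query.prometheus/{query_name}"
    return {
        "apiVersion": "autoscaling/v2beta2",  # as in the article; newer clusters: autoscaling/v2
        "kind": "HorizontalPodAutoscaler",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {annotation_key: promql},
        },
        "spec": {
            "maxReplicas": max_replicas,
            "minReplicas": min_replicas,
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "metrics": [{
                "type": "External",
                "external": {
                    # The selector's query-name must match the annotation key suffix.
                    "metric": {
                        "name": "prometheus-query",
                        "selector": {"matchLabels": {"query-name": query_name}},
                    },
                    "target": {"type": "AverageValue", "averageValue": target},
                },
            }],
        },
    }


if __name__ == "__main__":
    hpa = external_hpa(
        name="podinfo-total",
        query_name="processed-requests-per-second",
        promql='sum(rate(istio_requests_total{destination_workload_namespace="test",reporter="destination"}[1m]))',
        target="10",
    )
    # JSON is valid YAML, so this output can be piped to `kubectl apply -f -`.
    print(json.dumps(hpa, indent=2))
```

For example, assuming the script is saved under the hypothetical name render_hpa.py, running `python3 render_hpa.py | kubectl --kubeconfig "$USER_CONFIG" apply -f -` applies the request-count HPA from 3.1.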

3.2 Scaling on average latency

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: podinfo-latency-avg
  namespace: test
  annotations:
    metric-config.external.prometheus-query.prometheus/latency-average: |
      sum(rate(istio_request_duration_milliseconds_sum{destination_workload_namespace="test",reporter="destination"}[1m]))/sum(rate(istio_request_duration_milliseconds_count{destination_workload_namespace="test",reporter="destination"}[1m]))
spec:
  maxReplicas: 5
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  metrics:
    - type: External
      external:
        metric:
          name: prometheus-query
          selector:
            matchLabels:
              query-name: latency-average
        target:
          type: AverageValue
          averageValue: "0.005"

3.3 Scaling on P95 latency
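The P95 query first sums the per-second bucket increases by le and then passes the result to histogram_quantile. That function estimates a quantile from cumulative bucket counts by linear interpolation inside the bucket that contains the requested rank. The minimal Python sketch below mirrors that interpolation on already-aggregated bucket values. It assumes 0 < q < 1 and positive finite bucket bounds, as is the case for latency buckets. The function name and the bucket layout in the example are hypothetical, not Istio's actual bucket boundaries.

```python
import math
from typing import List, Tuple


def histogram_quantile(q: float, buckets: List[Tuple[float, float]]) -> float:
    """Estimate the q-quantile (0 < q < 1) from cumulative histogram buckets.

    buckets: (upper bound "le", cumulative count) pairs; the last must be
    le=+Inf. Follows the linear interpolation used by PromQL's
    histogram_quantile, assuming all finite upper bounds are positive.
    """
    buckets = sorted(buckets)
    total = buckets[-1][1]
    if total == 0:
        return math.nan  # no observations
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0  # the lowest bucket starts at 0
    for upper_bound, count in buckets:
        if count >= rank:
            if math.isinf(upper_bound):
                # Rank falls in the +Inf bucket: return the highest finite bound.
                return lower_bound
            # Interpolate linearly inside the bucket that contains the rank.
            fraction = (rank - lower_count) / (count - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return math.nan


# Hypothetical latency buckets in milliseconds: (le, cumulative count)
buckets = [(1.0, 10.0), (5.0, 50.0), (10.0, 90.0), (math.inf, 100.0)]
print(histogram_quantile(0.5, buckets))   # 5.0
print(histogram_quantile(0.75, buckets))  # 8.125
print(histogram_quantile(0.95, buckets))  # 10.0
```

The estimate never exceeds the highest finite bucket bound. Its precision near a target such as "4" therefore depends on where the bucket boundaries lie around that value. The HPA configuration for P95-based scaling is: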

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: podinfo-p95
  namespace: test
  annotations:
    metric-config.external.prometheus-query.prometheus/p95-latency: |
      histogram_quantile(0.95,sum(irate(istio_request_duration_milliseconds_bucket{destination_workload_namespace="test",destination_canonical_service="podinfo"}[5m]))by (le))
spec:
  maxReplicas: 5
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  metrics:
    - type: External
      external:
        metric:
          name: prometheus-query
          selector:
            matchLabels:
              query-name: p95-latency
        target:
          type: AverageValue
          averageValue: "4"

Verify HPA

1 Generate load

Run the following commands to generate test traffic and verify that the HPA configuration triggers automatic scale-out.

alias k="kubectl --kubeconfig $USER_CONFIG"

loadtester=$(k -n test get pod -l "app=flagger-loadtester" -o jsonpath='{.items..metadata.name}')

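k -n test exec -it ${loadtester} -c loadtester -- hey -z 5m -c 2 -q 10 http://podinfo:9898

This runs a 5-minute load with 2 concurrent workers. Because hey's -q limit applies per worker, each worker sends at most 10 requests per second, for roughly 20 QPS in total.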
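To see what replica count this load should produce, the sketch below combines the hey arithmetic with a simplified form of the rule the Kubernetes HPA controller applies to an External metric with an AverageValue target. The controller first compares the metric total with target × current replicas. If the ratio is within the tolerance (0.1 by default, set by the controller manager's --horizontal-pod-autoscaler-tolerance flag), it keeps the current count; otherwise it sets replicas to ceil(total / target), bounded by minReplicas and maxReplicas. Stabilization windows and scaling policies are ignored here. hey_aggregate_qps and desired_replicas are hypothetical helpers.

```python
import math


def hey_aggregate_qps(workers: int, qps_per_worker: float) -> float:
    """hey's -q flag limits each worker, so the offered load is c * q."""
    return workers * qps_per_worker


def desired_replicas(metric_total: float, target_average: float,
                     current_replicas: int, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """Simplified HPA rule for an External metric with an AverageValue target.

    Ignores stabilization windows and scaling policies (spec.behavior).
    """
    usage_ratio = metric_total / (target_average * current_replicas)
    if abs(1.0 - usage_ratio) > tolerance:
        # Change is large enough: size the Deployment so that each pod
        # accounts for roughly target_average of the total metric value.
        desired = math.ceil(metric_total / target_average)
    else:
        # Within tolerance: keep the current replica count.
        desired = current_replicas
    return max(min_replicas, min(max_replicas, desired))


load = hey_aggregate_qps(workers=2, qps_per_worker=10.0)  # -c 2 -q 10
print(load)          # 20.0 requests per second in total
print(load * 5 * 60)  # 6000.0, the upper bound on requests over -z 5m
print(desired_replicas(load, 10.0, current_replicas=1,
                       min_replicas=1, max_replicas=5))  # 2
```

The reading shown in the scale-out step below is 10056m/10 (avg) at 2 replicas, which is about 20.1 requests per second in total. That gives a usage ratio of about 1.006, inside the 10% tolerance, so under this rule the HPA stays at 2 replicas.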

For reference, the hey usage text is as follows:

Usage: hey [options...] <url>

Options:
  -n  Number of requests to run. Default is 200.
  -c  Number of workers to run concurrently. Total number of requests cannot
      be smaller than the concurrency level. Default is 50.
  -q  Rate limit, in queries per second (QPS) per worker. Default is no rate limit.
  -z  Duration of application to send requests. When duration is reached,
      application stops and exits. If duration is specified, n is ignored.
      Examples: -z 10s -z 3m.
  -o  Output type. If none provided, a summary is printed.
      "csv" is the only supported alternative. Dumps the response
      metrics in comma-separated values format.
  -m  HTTP method, one of GET, POST, PUT, DELETE, HEAD, OPTIONS.
  -H  Custom HTTP header. You can specify as many as needed by repeating the flag.
      For example, -H "Accept: text/html" -H "Content-Type: application/xml" .
  -t  Timeout for each request in seconds. Default is 20, use 0 for infinite.
  -A  HTTP Accept header.
  -d  HTTP request body.
  -D  HTTP request body from file. For example, /home/user/file.txt or ./file.txt.
  -T  Content-type, defaults to "text/html".
  -a  Basic authentication, username:password.
  -x  HTTP Proxy address as host:port.
  -h2 Enable HTTP/2.
  -host  HTTP Host header.
  -disable-compression  Disable compression.
  -disable-keepalive    Disable keep-alive, prevents re-use of TCP
                        connections between different HTTP requests.
  -disable-redirects    Disable following of HTTP redirects
  -cpus                 Number of used cpu cores.
                        (default for current machine is 4 cores)

2 Automatic scale-out

Run the following command to watch the scale-out:

watch kubectl --kubeconfig $USER_CONFIG -n test get hpa/podinfo-total

The result is as follows:

Every 2.0s: kubectl --kubeconfig /Users/han/shop_config/ack_zjk -n test get hpa/podinfo    East6C16G: Tue Jan 26 18:01:30 2021

NAME      REFERENCE            TARGETS           MINPODS   MAXPODS   REPLICAS   AGE
podinfo   Deployment/podinfo   10056m/10 (avg)   1         5         2          4m45s

The other two HPAs work the same way. The commands are:

kubectl --kubeconfig $USER_CONFIG -n test get hpa
watch kubectl --kubeconfig $USER_CONFIG -n test get hpa/podinfo-latency-avg
watch kubectl --kubeconfig $USER_CONFIG -n test get hpa/podinfo-p95

3 Monitoring metrics

At the same time, the real-time values of these application-level metrics can be viewed in Prometheus, as illustrated below:


This article is original content from Alibaba Cloud and may not be reproduced without permission.
