I. Cluster environment configuration

# Debug the Master: add the SPARK_MASTER_OPTS variable to spark-env.sh on the master node

export SPARK_MASTER_OPTS="-Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=y,address=10000"

# Debug the Worker: add the SPARK_WORKER_OPTS variable to spark-env.sh on the worker node

export SPARK_WORKER_OPTS="-Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=y,address=10001"
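
The `-Xdebug -Xrunjdwp:...` spelling used above is the legacy form; current JVMs usually load the same agent with `-agentlib:jdwp=...`. Also, since JDK 9 a bare port in `address=` binds to localhost only, so attaching from another machine needs `address=*:PORT`. The following is a minimal sketch of building these option strings; the helper name `jdwp_opts` is hypothetical and not part of Spark.

```shell
#!/bin/sh
# Hypothetical helper (not part of Spark): print a JDWP agent option string.
#   $1 = port
#   $2 = suspend flag, y or n (default: y, the JVM waits for the debugger)
#   $3 = bind host, optional (pass '*' on JDK 9+ to accept remote debuggers)
jdwp_opts() {
    port="$1"
    suspend="${2:-y}"
    host="${3:-}"
    if [ -n "$host" ]; then
        address="${host}:${port}"
    else
        address="${port}"
    fi
    printf '%s\n' "-agentlib:jdwp=transport=dt_socket,server=y,suspend=${suspend},address=${address}"
}

# Same ports as above; these lines would go into spark-env.sh on each node.
export SPARK_MASTER_OPTS="$(jdwp_opts 10000)"
export SPARK_WORKER_OPTS="$(jdwp_opts 10001)"
```

With `suspend=y` the Master or Worker does not start working until a debugger attaches; passing `n` as the second argument lets the daemon start normally and accept a debugger later.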


# Debug spark-submit + app (the driver)

bin/spark-submit --class cn.daxin.spark.WordCount --master spark://node-1.daxin.cn:7077 --driver-java-options "-Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=y,address=10002" /root/wc.jar hdfs://node-1.daxin.cn:9000/words.txt hdfs://node-1.daxin.cn:9000/out2

# Debug spark-submit + app + executor

bin/spark-submit --class cn.daxin.spark.WordCount --master spark://node-1.daxin.cn:7077 --conf "spark.executor.extraJavaOptions=-Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=y,address=10003" --driver-java-options "-Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=y,address=10002" /root/wc.jar hdfs://node-1.daxin.cn:9000/words.txt hdfs://node-1.daxin.cn:9000/out2

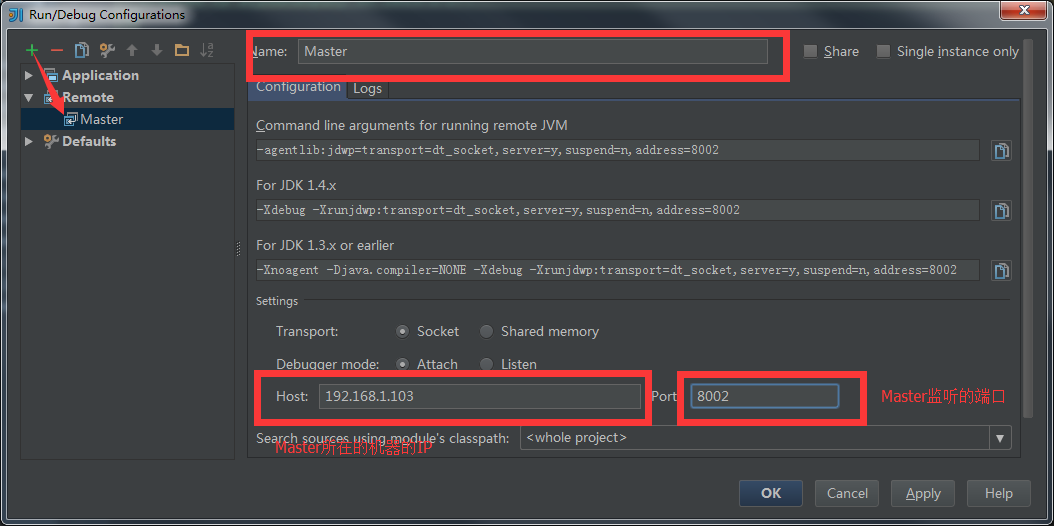

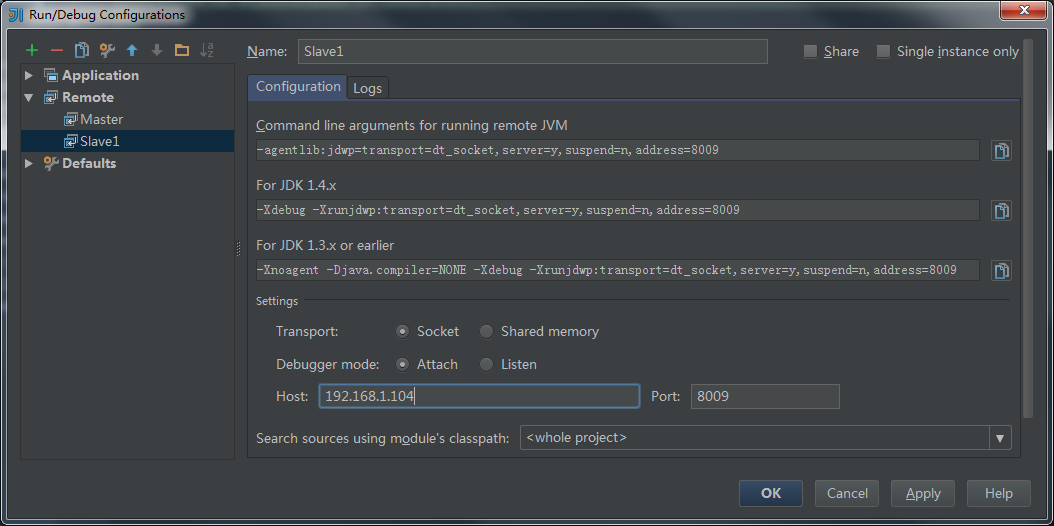

II. IDEA configuration

In IDEA, add two Remote run configurations, one for the Master (port 10000) and one for the Worker (port 10001).

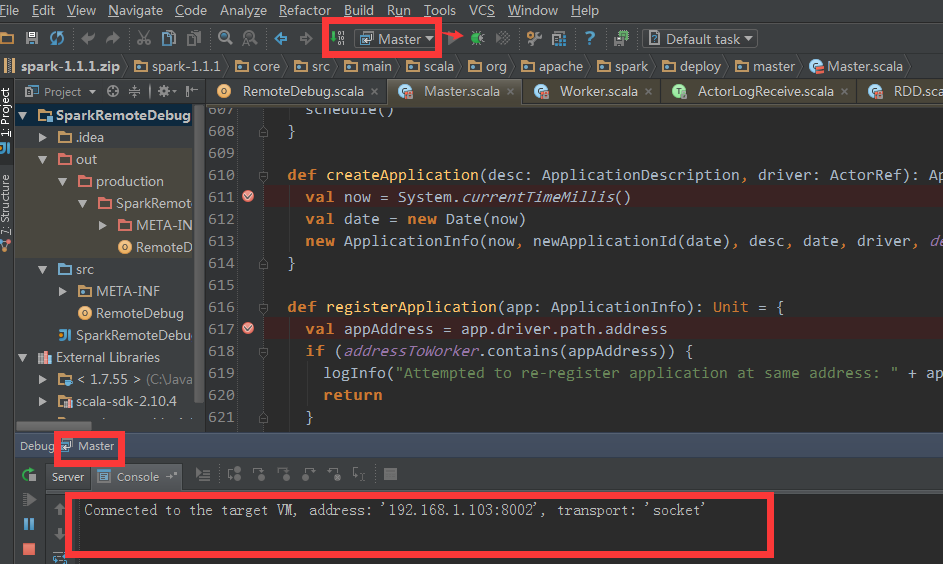

Now for the important part: start the Master debug configuration first, and set breakpoints in the Master code:

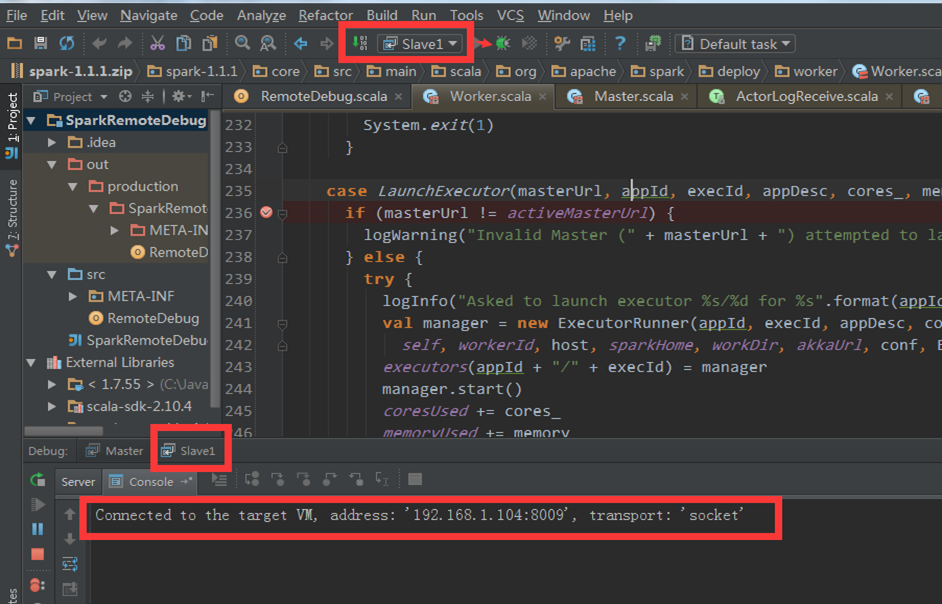

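As you can see, IDEA has connected to port 10000 on the Master machine in our cluster, which is exactly the port we configured on the cluster. Start the Slave1 (Worker) configuration the same way.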
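
To check the cluster-side setup without IDEA, the JDK's command-line debugger `jdb` can attach to the same ports. The sketch below only prints the attach command; the helper name `jdb_attach_cmd` is hypothetical, and the host is taken from the spark-submit examples above on the assumption that the Master runs on node-1.daxin.cn.

```shell
#!/bin/sh
# Hypothetical helper: print the jdb command that attaches to a JDWP socket.
#   $1 = host running the JVM, $2 = JDWP port configured on that JVM
jdb_attach_cmd() {
    printf 'jdb -connect com.sun.jdi.SocketAttach:hostname=%s,port=%s\n' "$1" "$2"
}

# Master on port 10000 (host assumed from the --master URL used earlier).
jdb_attach_cmd node-1.daxin.cn 10000
```

For the Worker, the same command applies with the worker node's hostname and port 10001. Only one debugger can be attached to a given port at a time, so disconnect `jdb` before connecting from IDEA.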
