Reference: https://github.com/wkentaro/labelme

I. Converting labelme annotation files to COCO JSON

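1. Annotations saved with imageData. This script takes a folder of images and labelme annotation files and splits them into train and val sets, each with its own COCO JSON file and image folder. (COCO bbox format: [x1, y1, w, h], where x1, y1 is the top-left corner of the box and w, h are its width and height.)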
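To make the bbox convention concrete, here is a minimal sketch that derives a COCO-style box from polygon vertices. The helper name `polygon_to_coco_bbox` and the sample rectangle are illustrative assumptions, not part of labelme or the COCO API.

```python
def polygon_to_coco_bbox(points):
    """Return [x1, y1, w, h] for a list of (x, y) polygon vertices."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x1, y1 = min(xs), min(ys)
    return [x1, y1, max(xs) - x1, max(ys) - y1]


# Hypothetical rectangle drawn clockwise from its top-left corner.
example_points = [(10, 20), (50, 20), (50, 80), (10, 80)]
example_bbox = polygon_to_coco_bbox(example_points)
print(example_bbox)  # [10, 20, 40, 60]
```

The last two values are a width and a height, not a second corner, which is the usual source of confusion when mixing COCO boxes with VOC-style boxes.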

    import os
    import json
    import glob
    import shutil

    import numpy as np
    from sklearn.model_selection import train_test_split
    from labelme import utils

    np.random.seed(41)
    # Class id 0 is reserved for the background.

    classname_to_id = {"Red": 1}


    class Lableme2CoCo:
        def __init__(self):
            self.images = []
            self.annotations = []
            self.categories = []
            self.img_id = 0
            self.ann_id = 0

        def save_coco_json(self, instance, save_path):
            # indent=2 gives a more readable layout.
            with open(save_path, 'w', encoding='utf-8') as f:
                json.dump(instance, f, ensure_ascii=False, indent=1)

        # Build the COCO structure from a list of labelme JSON files.
        def to_coco(self, json_path_list):
            self._init_categories()
            for json_path in json_path_list:
                obj = self.read_jsonfile(json_path)
                self.images.append(self._image(obj, json_path))
                shapes = obj['shapes']
                for shape in shapes:
                    annotation = self._annotation(shape)
                    self.annotations.append(annotation)
                    self.ann_id += 1
                self.img_id += 1
            instance = {}
            instance['info'] = 'spytensor created'
            instance['license'] = ['license']
            instance['images'] = self.images
            instance['annotations'] = self.annotations
            instance['categories'] = self.categories
            return instance

        # Build the categories.
        def _init_categories(self):
            for k, v in classname_to_id.items():
                category = {}
                category['id'] = v
                category['name'] = k
                self.categories.append(category)

        # Build the COCO image field.
        def _image(self, obj, path):
            image = {}
            img_x = utils.img_b64_to_arr(obj['imageData'])
            # Take only (height, width) so grayscale images also work.
            h, w = img_x.shape[:2]
            image['height'] = h
            image['width'] = w
            image['id'] = self.img_id
            # file_name assumes .jpg images; adjust if the images are .png.
            image['file_name'] = os.path.basename(path).replace(".json", ".jpg")
            return image

        # Build the COCO annotation field.
        def _annotation(self, shape):
            label = shape['label']
            points = shape['points']
            annotation = {}
            annotation['id'] = self.ann_id
            annotation['image_id'] = self.img_id
            annotation['category_id'] = int(classname_to_id[label])
            annotation['segmentation'] = [np.asarray(points).flatten().tolist()]
            annotation['bbox'] = self._get_box(points)
            annotation['iscrowd'] = 0
            # Area is approximated by the bbox width * height.
            annotation['area'] = annotation['bbox'][-1] * annotation['bbox'][-2]
            return annotation

        # Read a JSON file and return the parsed object.
        def read_jsonfile(self, path):
            with open(path, "r", encoding='utf-8') as f:
                return json.load(f)

        # Return the COCO bbox format: [x1, y1, w, h].
        def _get_box(self, points):
            min_x = min_y = np.inf
            max_x = max_y = 0
            for x, y in points:
                min_x = min(min_x, x)
                min_y = min(min_y, y)
                max_x = max(max_x, x)
                max_y = max(max_y, y)
            return [min_x, min_y, max_x - min_x, max_y - min_y]


    if __name__ == '__main__':
        # Split a folder of images and labelme annotation files into train and
        # val sets, each with a COCO JSON file and an image folder.
        labelme_path = './data/fzh_img_label_example'
        train_img_out_path = './train_img'
        val_img_out_path = './val_img'
        os.makedirs(train_img_out_path, exist_ok=True)
        os.makedirs(val_img_out_path, exist_ok=True)
        # Collect every JSON file in the folder.
        json_list_path = glob.glob(labelme_path + "/*.json")
        print('json_list_path=', json_list_path)
        # Split the data. No separate val2017/train2017 folders are used here;
        # all images sit in one directory.
        train_path, val_path = train_test_split(json_list_path, test_size=0.5)
        print("train_n:", len(train_path), 'val_n:', len(val_path))
        print('train_path=', train_path)
        # Convert the training set to COCO JSON.
        l2c_train = Lableme2CoCo()
        train_instance = l2c_train.to_coco(train_path)
        l2c_train.save_coco_json(train_instance, 'train.json')
        # Convert the validation set to COCO JSON.
        l2c_val = Lableme2CoCo()
        val_instance = l2c_val.to_coco(val_path)
        l2c_val.save_coco_json(val_instance, 'val.json')
        # Copy the image that sits next to each JSON file (.png or .jpg).
        for json_files, out_dir in ((train_path, train_img_out_path),
                                    (val_path, val_img_out_path)):
            for file in json_files:
                stem = os.path.splitext(file)[0]
                for ext in ('.png', '.jpg'):
                    if os.path.exists(stem + ext):
                        shutil.copy(stem + ext, out_dir)
                        break
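The converter above stores the bbox width times height as `area`. For polygons that are not axis-aligned rectangles, the polygon's own area may be a closer value. A minimal sketch using the shoelace formula follows; the helper name `polygon_area` is hypothetical and the converter does not use it.

```python
def polygon_area(points):
    """Area of a simple polygon given as [(x, y), ...], via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


triangle = [(0, 0), (4, 0), (0, 3)]
triangle_area = polygon_area(triangle)
print(triangle_area)  # 6.0, while the bbox area of the same shape is 12
```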

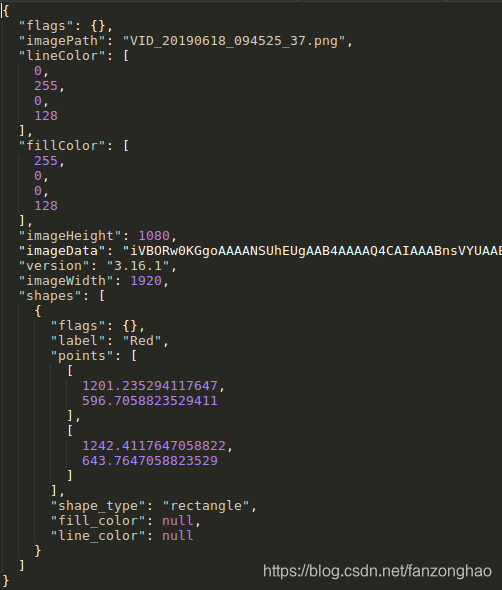

The labelme-annotated files and their JSON format

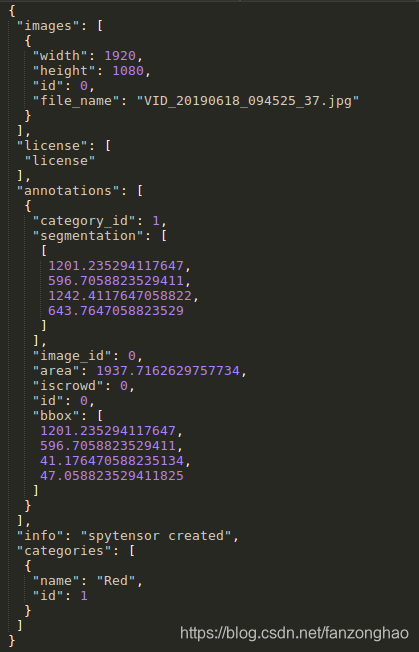

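The generated training set and the generated validation set

The JSON files generated for the training and validation sets

The labelme annotation JSON format and the converted COCO JSON format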
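For readers without the screenshots, here is a sketch of the structure the converter emits, written as a Python dict. The field names follow the code in this section; all values are made up for illustration and do not come from the article's dataset.

```python
import json

# Illustrative values only.
coco = {
    "info": "spytensor created",
    "license": ["license"],
    "images": [
        {"height": 480, "width": 640, "id": 0, "file_name": "example.jpg"},
    ],
    "annotations": [
        {
            "id": 0,
            "image_id": 0,
            "category_id": 1,
            "segmentation": [[10, 20, 50, 20, 50, 80, 10, 80]],
            "bbox": [10, 20, 40, 60],
            "iscrowd": 0,
            "area": 2400,
        },
    ],
    "categories": [{"id": 1, "name": "Red"}],
}

# Every annotation should point at an existing image and category.
image_ids = {img["id"] for img in coco["images"]}
category_ids = {cat["id"] for cat in coco["categories"]}
links_ok = all(
    ann["image_id"] in image_ids and ann["category_id"] in category_ids
    for ann in coco["annotations"]
)
print(links_ok)
print(json.dumps(coco["annotations"][0]["bbox"]))
```

A consistency check like `links_ok` is a cheap way to catch mismatched ids before handing the file to a training pipeline.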

2. Annotations saved without imageData
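When `imageData` is null, the image array cannot be decoded from the JSON, so the size has to come from somewhere else. Besides reading the image from disk, one option is to take the size from the JSON itself. This sketch assumes the files carry `imageHeight` and `imageWidth` keys (the keys written in section V of this post); older files may lack them, so the helper, whose name is hypothetical, returns None in that case.

```python
def image_size_from_labelme(obj):
    """Return (height, width) from a labelme JSON dict, or None if absent."""
    height = obj.get("imageHeight")
    width = obj.get("imageWidth")
    if height is None or width is None:
        return None
    return int(height), int(width)


# Hypothetical annotation saved without embedded image data.
sample = {"imageData": None, "imageHeight": 480, "imageWidth": 640, "shapes": []}
sample_size = image_size_from_labelme(sample)
print(sample_size)                               # (480, 640)
print(image_size_from_labelme({"shapes": []}))   # None
```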

    import os
    import json
    import glob
    import shutil

    import cv2
    import numpy as np
    from sklearn.model_selection import train_test_split

    np.random.seed(41)
    # Class id 0 is reserved for the background.

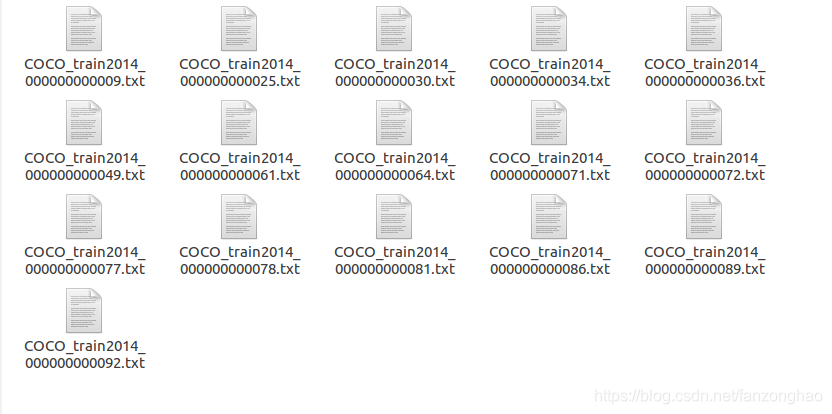

    classname_to_id = {"person": 1, "bus": 6}


    class Lableme2CoCo:
        def __init__(self):
            self.images = []
            self.annotations = []
            self.categories = []
            self.img_id = 0
            self.ann_id = 0

        def save_coco_json(self, instance, save_path):
            # indent=2 gives a more readable layout.
            with open(save_path, 'w', encoding='utf-8') as f:
                json.dump(instance, f, ensure_ascii=False, indent=1)

        # Build the COCO structure from a list of labelme JSON files.
        def to_coco(self, json_path_list):
            self._init_categories()
            for json_path in json_path_list:
                obj = self.read_jsonfile(json_path)
                self.images.append(self._image(obj, json_path))
                shapes = obj['shapes']
                for shape in shapes:
                    annotation = self._annotation(shape)
                    self.annotations.append(annotation)
                    self.ann_id += 1
                self.img_id += 1
            instance = {}
            instance['info'] = 'spytensor created'
            instance['license'] = ['license']
            instance['images'] = self.images
            instance['annotations'] = self.annotations
            instance['categories'] = self.categories
            return instance

        # Build the categories.
        def _init_categories(self):
            for k, v in classname_to_id.items():
                category = {}
                category['id'] = v
                category['name'] = k
                self.categories.append(category)

        # Build the COCO image field.
        def _image(self, obj, path):
            image = {}
            # imageData is absent, so read the image from disk to get its size.
            img_path = os.path.splitext(path)[0] + ".jpg"
            img_x = cv2.imread(img_path)
            if img_x is None:
                raise FileNotFoundError(img_path)
            h, w = img_x.shape[:2]
            image['height'] = h
            image['width'] = w
            image['id'] = self.img_id
            image['file_name'] = os.path.basename(path).replace(".json", ".jpg")
            return image

        # Build the COCO annotation field.
        def _annotation(self, shape):
            label = shape['label']
            points = shape['points']
            annotation = {}
            annotation['id'] = self.ann_id
            annotation['image_id'] = self.img_id
            annotation['category_id'] = int(classname_to_id[label])
            annotation['segmentation'] = [np.asarray(points).flatten().tolist()]
            annotation['bbox'] = self._get_box(points)
            annotation['iscrowd'] = 0
            # Area is approximated by the bbox width * height.
            annotation['area'] = annotation['bbox'][-1] * annotation['bbox'][-2]
            return annotation

        # Read a JSON file and return the parsed object.
        def read_jsonfile(self, path):
            with open(path, "r", encoding='utf-8') as f:
                return json.load(f)

        # Return the COCO bbox format: [x1, y1, w, h].
        def _get_box(self, points):
            min_x = min_y = np.inf
            max_x = max_y = 0
            for x, y in points:
                min_x = min(min_x, x)
                min_y = min(min_y, y)
                max_x = max(max_x, x)
                max_y = max(max_y, y)
            return [min_x, min_y, max_x - min_x, max_y - min_y]


    if __name__ == '__main__':
        # Split a folder of images and labelme annotation files into train and
        # val sets, each with a COCO JSON file and an image folder.
        labelme_path = './data'
        train_img_out_path = './train_img'
        val_img_out_path = './val_img'
        os.makedirs(train_img_out_path, exist_ok=True)
        os.makedirs(val_img_out_path, exist_ok=True)
        # Collect every JSON file in the folder.
        json_list_path = glob.glob(labelme_path + "/*.json")
        print('json_list_path=', json_list_path)
        # Split the data. No separate val2017/train2017 folders are used here;
        # all images sit in one directory. Recent scikit-learn versions reject
        # test_size=0, so a positive fraction is used.
        train_path, val_path = train_test_split(json_list_path, test_size=0.5)
        print("train_n:", len(train_path), 'val_n:', len(val_path))
        print('train_path=', train_path)
        # Convert the training set to COCO JSON.
        l2c_train = Lableme2CoCo()
        train_instance = l2c_train.to_coco(train_path)
        l2c_train.save_coco_json(train_instance, 'train.json')
        # Convert the validation set to COCO JSON.
        l2c_val = Lableme2CoCo()
        val_instance = l2c_val.to_coco(val_path)
        l2c_val.save_coco_json(val_instance, 'val.json')
        # Copy the .jpg image that sits next to each JSON file.
        for file in train_path:
            shutil.copy(os.path.splitext(file)[0] + ".jpg", train_img_out_path)
        for file in val_path:
            shutil.copy(os.path.splitext(file)[0] + ".jpg", val_img_out_path)

II. Converting COCO JSON to YOLO txt format

YOLO format: (cx/img_w, cy/img_h, w/img_w, h/img_h), where cx, cy is the center of the box, w, h are the box width and height, and img_w, img_h are the image width and height.

    import os
    import json


    def convert(size, box):
        # size is (img_w, img_h); box is a COCO bbox [x1, y1, w, h].
        dw = 1. / size[0]
        dh = 1. / size[1]
        x = box[0] + box[2] / 2.0
        y = box[1] + box[3] / 2.0
        w = box[2]
        h = box[3]
        x = x * dw
        w = w * dw
        y = y * dh
        h = h * dh
        return (x, y, w, h)


    json_file = 'train.json'  # object-instance annotations
    with open(json_file, 'r') as f:
        data = json.load(f)
    ana_txt_save_path = "./new"  # output directory
    os.makedirs(ana_txt_save_path, exist_ok=True)

    for img in data['images']:
        filename = img["file_name"]
        img_width = img["width"]
        img_height = img["height"]
        img_id = img["id"]
        # The txt file shares its base name with the image.
        ana_txt_name = os.path.splitext(filename)[0] + ".txt"
        print(ana_txt_name)
        with open(os.path.join(ana_txt_save_path, ana_txt_name), 'w') as f_txt:
            for ann in data['annotations']:
                if ann['image_id'] == img_id:
                    box = convert((img_width, img_height), ann["bbox"])
                    # category_id is written as-is; YOLO trainers usually expect
                    # 0-based class indices, so remap here if needed.
                    f_txt.write("%s %s %s %s %s\n" % (ann["category_id"], box[0], box[1], box[2], box[3]))
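A quick way to sanity-check the conversion is to run it in both directions on a known box. The sketch below restates the forward formula and adds a hypothetical inverse, `yolo_to_coco`, which is not part of the script above; the image size and box are made-up values.

```python
def coco_to_yolo(size, box):
    """COCO [x1, y1, w, h] in pixels -> YOLO (cx, cy, w, h), normalised to 0..1."""
    img_w, img_h = size
    x1, y1, w, h = box
    return ((x1 + w / 2.0) / img_w, (y1 + h / 2.0) / img_h, w / img_w, h / img_h)


def yolo_to_coco(size, box):
    """YOLO (cx, cy, w, h), normalised -> COCO [x1, y1, w, h] in pixels."""
    img_w, img_h = size
    cx, cy, w, h = box
    return [(cx - w / 2.0) * img_w, (cy - h / 2.0) * img_h, w * img_w, h * img_h]


size = (640, 480)                 # hypothetical image: width 640, height 480
coco_box = [160, 120, 320, 240]   # a box centred in that image
yolo_box = coco_to_yolo(size, coco_box)
print(yolo_box)                      # (0.5, 0.5, 0.5, 0.5)
print(yolo_to_coco(size, yolo_box))  # [160.0, 120.0, 320.0, 240.0]
```

Note that `size` is ordered (width, height), matching the `convert((img_width, img_height), ...)` call in the script.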

III. Converting COCO JSON to XML
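The XML produced in this section follows the Pascal VOC layout: an `annotation` root with `filename`, `size`, and one `object` element per box. As a minimal sketch of that layout, the snippet here builds it with `xml.etree.ElementTree` rather than string concatenation. The helper name `build_voc_xml` and the sample values are hypothetical.

```python
import xml.etree.ElementTree as ET


def build_voc_xml(filename, width, height, boxes, label="red"):
    """Return a VOC-style <annotation> element for boxes given as (x1, y1, x2, y2)."""
    root = ET.Element("annotation")
    ET.SubElement(root, "folder").text = "red_voc"
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    ET.SubElement(size, "width").text = str(width)
    ET.SubElement(size, "height").text = str(height)
    ET.SubElement(size, "depth").text = "3"
    for x1, y1, x2, y2 in boxes:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = label
        ET.SubElement(obj, "pose").text = "Unspecified"
        ET.SubElement(obj, "truncated").text = "0"
        ET.SubElement(obj, "difficult").text = "0"
        bndbox = ET.SubElement(obj, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), (x1, y1, x2, y2)):
            ET.SubElement(bndbox, tag).text = str(int(value))
    return root


root = build_voc_xml("example.jpg", 640, 480, [(10, 20, 50, 80)])
xml_text = ET.tostring(root, encoding="unicode")
print(xml_text)
```

Building the tree this way escapes special characters in file names and guarantees matching closing tags, at the cost of less control over whitespace.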

    import json
    import os
    import xml.etree.ElementTree as ET

    import cv2
    import numpy as np


    def get_red_voc():
        img_path = './train_all_2020_05_31'
        json_path = './train_all_2020_05_31.json'
        xml_dir = './Annotations'
        # img_path = './train_all_2019_7_18'
        # json_path = './train_all_2019_7_18.json'
        # xml_dir = './Annotations'
        if not os.path.exists(xml_dir):
            os.mkdir(xml_dir)
        with open(json_path) as file:
            json_info = json.load(file)
        print(json_info.keys())
        images_info = json_info['images']
        annotations_info = json_info['annotations']
        for i, image_info in enumerate(images_info):
            print('==image_info', image_info)
            name = image_info['file_name']
            print('==name:', name)
            img_list_path = os.path.join(img_path, name)
            img = cv2.imread(img_list_path)
            img_h, img_w, _ = img.shape
            img_id = image_info['id']
            xml_name = name.split('.jpg')[0] + '.xml'
            # The with block closes the file once the annotation is written.
            with open(os.path.join(xml_dir, xml_name), 'w') as xml_file:
                xml_file.write('<annotation>\n')
                xml_file.write(' <folder>red_voc</folder>\n')
                xml_file.write(' <filename>' + name + '</filename>\n')
                xml_file.write(' <size>\n')
                xml_file.write(' <width>' + str(img_w) + '</width>\n')
                xml_file.write(' <height>' + str(img_h) + '</height>\n')
                xml_file.write(' <depth>3</depth>\n')
                xml_file.write(' </size>\n')
                for annotation_info in annotations_info:
                    if img_id == annotation_info['image_id']:
                        if len(annotation_info['segmentation']) == 1:
                            box = annotation_info['segmentation'][0]
                        else:
                            box = annotation_info['segmentation']
                        # The box is the extent of the segmentation polygon.
                        box = np.array(box).reshape(-1, 2)
                        x1, y1 = np.min(box[:, 0]), np.min(box[:, 1])
                        x2, y2 = np.max(box[:, 0]), np.max(box[:, 1])
                        xml_file.write(' <object>\n')
                        xml_file.write(' <name>' + 'red' + '</name>\n')
                        xml_file.write(' <pose>Unspecified</pose>\n')
                        xml_file.write(' <truncated>0</truncated>\n')
                        xml_file.write(' <difficult>0</difficult>\n')
                        xml_file.write(' <bndbox>\n')
                        xml_file.write(' <xmin>' + str(int(x1)) + '</xmin>\n')
                        xml_file.write(' <ymin>' + str(int(y1)) + '</ymin>\n')
                        xml_file.write(' <xmax>' + str(int(x2)) + '</xmax>\n')
                        xml_file.write(' <ymax>' + str(int(y2)) + '</ymax>\n')
                        xml_file.write(' </bndbox>\n')
                        xml_file.write(' </object>\n')
                xml_file.write('</annotation>')


    def _get_annotation(image_id):
        annotation_file = "Annotations/{}.xml".format(image_id)
        objects = ET.parse(annotation_file).findall("object")
        # class_dict = {'BACKGROUND': 0, 'person': 1, 'bicycle': 2, 'car': 3, 'motorcycle': 4,
        #     'airplane': 5, 'bus': 6, 'train': 7, 'truck': 8, 'boat': 9, 'traffic light': 10,
        #     'fire hydrant': 11, 'stop sign': 12, 'parking meter': 13, 'bench': 14, 'bird': 15,
        #     'cat': 16, 'dog': 17, 'horse': 18, 'sheep': 19, 'cow': 20, 'elephant': 21,
        #     'bear': 22, 'zebra': 23, 'giraffe': 24, 'backpack': 25, 'umbrella': 26,
        #     'handbag': 27, 'tie': 28, 'suitcase': 29, 'frisbee': 30, 'skis': 31,
        #     'snowboard': 32, 'sports ball': 33, 'kite': 34, 'baseball bat': 35,
        #     'baseball glove': 36, 'skateboard': 37, 'surfboard': 38, 'tennis racket': 39,
        #     'bottle': 40, 'wine glass': 41, 'cup': 42, 'fork': 43, 'knife': 44, 'spoon': 45,
        #     'bowl': 46, 'banana': 47, 'apple': 48, 'sandwich': 49, 'orange': 50,
        #     'broccoli': 51, 'carrot': 52, 'hot dog': 53, 'pizza': 54, 'donut': 55, 'cake': 56,
        #     'chair': 57, 'couch': 58, 'potted plant': 59, 'bed': 60, 'dining table': 61,
        #     'toilet': 62, 'tv': 63, 'laptop': 64, 'mouse': 65, 'remote': 66, 'keyboard': 67,
        #     'cell phone': 68, 'microwave': 69, 'oven': 70, 'toaster': 71, 'sink': 72,
        #     'refrigerator': 73, 'book': 74, 'clock': 75, 'vase': 76, 'scissors': 77,
        #     'teddy bear': 78, 'hair drier': 79, 'toothbrush': 80}
        class_dict = {'BACKGROUND': 0, 'red': 1}
        boxes = []
        labels = []
        is_difficult = []
        for obj in objects:
            class_name = obj.find('name').text.lower().strip()
            # We are only concerned with classes in our list.
            if class_name in class_dict:
                bbox = obj.find('bndbox')
                if bbox is not None:
                    # Coordinates are used exactly as stored in the XML.
                    x1 = float(bbox.find('xmin').text)
                    y1 = float(bbox.find('ymin').text)
                    x2 = float(bbox.find('xmax').text)
                    y2 = float(bbox.find('ymax').text)
                    boxes.append([x1, y1, x2, y2])
                    labels.append(class_dict[class_name])
                    is_difficult_str = obj.find('difficult').text
                    is_difficult.append(int(is_difficult_str) if is_difficult_str else 0)
                else:
                    # No bndbox: fall back to the extent of the polygon points.
                    polygons = obj.find('polygon')
                    x = []
                    y = []
                    for polygon in polygons.iter('pt'):
                        x.append(int(polygon.find('x').text))
                        y.append(int(polygon.find('y').text))
                    boxes.append([min(x), min(y), max(x), max(y)])
                    labels.append(class_dict[class_name])
                    is_difficult.append(0)
        return (np.array(boxes, dtype=np.float32),
                np.array(labels, dtype=np.int64),
                np.array(is_difficult, dtype=np.uint8))


    def show_box():
        img_path = './train_all_2020_05_31'
        out_path = './train_all_2020_05_31_out'
        if not os.path.exists(out_path):
            os.mkdir(out_path)
        xml_path = './Annotations'
        imgs_id = [i.split('.xml')[0] for i in os.listdir(xml_path)]
        for img_id in imgs_id:
            print('==img_id:', img_id)
            boxes, labels, is_difficult = _get_annotation(img_id)
            print('==boxes, labels, is_difficult', boxes, labels, is_difficult)
            img = cv2.imread(os.path.join(img_path, img_id + '.jpg'))
            for box in boxes:
                # cv2.rectangle needs integer pixel coordinates.
                x1, y1, x2, y2 = (int(v) for v in box)
                cv2.rectangle(img, (x1, y1), (x2, y2), thickness=1, color=(255, 255, 255))
            cv2.imwrite(os.path.join(out_path, img_id + '.jpg'), img)


    if __name__ == '__main__':
        get_red_voc()
        # show_box()

IV. Converting labelme annotation files to segmentation images

    import json
    import os

    import cv2
    from labelme import utils


    def main():
        img_path = './002.jpg'
        json_file = './002.json'
        output_path = './output'
        if not os.path.exists(output_path):
            os.mkdir(output_path)
        with open(json_file) as f:
            json_data = json.load(f)
        img = cv2.imread(img_path)
        print('img.shape:', img.shape)
        # Assign an integer value to each label; 0 is the background.
        label_name_to_value = {'_background_': 0}
        for shape in sorted(json_data['shapes'], key=lambda x: x['label']):
            label_name = shape['label']
            print('label_name:', label_name)
            if label_name in label_name_to_value:
                label_value = label_name_to_value[label_name]
            else:
                label_value = len(label_name_to_value)
                print('label_value:', label_value)
                label_name_to_value[label_name] = label_value
        lbl = utils.shapes_to_label(img.shape, json_data['shapes'], label_name_to_value)
        # Some labelme releases return a (class, instance) tuple; keep the class map.
        if isinstance(lbl, tuple):
            lbl = lbl[0]
        # Multiplying by 255 renders label 1 as white, which suits a single foreground class.
        cv2.imwrite(output_path + '/' + img_path.split('/')[-1], lbl * 255)


    if __name__ == '__main__':
        main()
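The label-to-integer mapping is the part of this script that decides which pixel value each class receives. A standalone sketch of that step, with no labelme or OpenCV dependency, is given here; the helper name `build_label_map` and the sample shapes are hypothetical.

```python
def build_label_map(shapes):
    """Map each label name to an integer, reserving 0 for the background."""
    label_name_to_value = {"_background_": 0}
    # Sorting by label makes the assignment independent of annotation order.
    for shape in sorted(shapes, key=lambda s: s["label"]):
        name = shape["label"]
        if name not in label_name_to_value:
            label_name_to_value[name] = len(label_name_to_value)
    return label_name_to_value


shapes = [{"label": "person"}, {"label": "bus"}, {"label": "person"}]
label_map = build_label_map(shapes)
print(label_map)  # {'_background_': 0, 'bus': 1, 'person': 2}
```

Because values are assigned per file, two images with different label sets can end up with different integers for the same class; a fixed mapping shared across the dataset avoids that.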

V. Converting boxes to labelme JSON

    import json
    import os

    import cv2


    def output_json(save_path, im_file, bboxs):
        """
        Input: the image file, its boxes listed clockwise from the top-left
        corner as [[x1, y1, x2, y1, x2, y2, x1, y2]], and the output directory.
        Output: a labelme JSON file.
        """
        img = cv2.imread(im_file)
        h, w, _ = img.shape
        im_name = im_file.split('/')[-1]
        # Fields of the output labelme JSON.
        jsonaug = {}
        jsonaug['flags'] = {}
        jsonaug['fillColor'] = [255, 0, 0, 128]
        jsonaug['imagePath'] = im_name
        jsonaug['imageWidth'] = w
        jsonaug['imageHeight'] = h
        shapes = []
        for i, bbox in enumerate(bboxs):
            print('==bbox:', bbox)
            print('type(bbox[0]):', type(bbox[0]))
            temp = {"flags": {},
                    "line_color": None,
                    # "shape_type": "rectangle",
                    "shape_type": "polygon",
                    "fill_color": None,
                    "label": "red"}
            temp['points'] = []
            temp['points'].append([int(bbox[0]), int(bbox[1])])
            temp['points'].append([int(bbox[2]), int(bbox[3])])
            temp['points'].append([int(bbox[4]), int(bbox[5])])
            temp['points'].append([int(bbox[6]), int(bbox[7])])
            shapes.append(temp)
        print('==shapes:', shapes)
        jsonaug['shapes'] = shapes
        jsonaug['imageData'] = None
        jsonaug['lineColor'] = [0, 255, 0, 128]
        jsonaug['version'] = '3.16.3'
        cv2.imwrite(os.path.join(save_path, im_name), img)
        # Derive the JSON name from the image stem so other extensions also work.
        json_name = os.path.splitext(im_name)[0] + '.json'
        with open(os.path.join(save_path, json_name), 'w+') as fp:
            json.dump(jsonaug, fp=fp, ensure_ascii=False, indent=4, separators=(',', ': '))
        return jsonaug
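The function expects each box as eight values, clockwise from the top-left corner. If the boxes start out as two corners (x1, y1, x2, y2), a small conversion is needed first. The sketch below shows one way to do it; both helper names are hypothetical and the coordinates are made up.

```python
def box_to_clockwise_polygon(x1, y1, x2, y2):
    """Flatten a box into [x1, y1, x2, y1, x2, y2, x1, y2], clockwise from top-left."""
    return [x1, y1, x2, y1, x2, y2, x1, y2]


def polygon_to_points(flat):
    """Group a flat coordinate list into labelme-style [[x, y], ...] points."""
    return [[int(flat[i]), int(flat[i + 1])] for i in range(0, len(flat), 2)]


flat = box_to_clockwise_polygon(10, 20, 50, 80)
points = polygon_to_points(flat)
print(flat)    # [10, 20, 50, 20, 50, 80, 10, 80]
print(points)  # [[10, 20], [50, 20], [50, 80], [10, 80]]
```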
