I. Generating a Gaussian heatmap

from math import exp
import os

import cv2
import numpy as np


class GaussianTransformer(object):

    def __init__(self, imgSize=512, region_threshold=0.4, affinity_threshold=0.2):
        distanceRatio = 3.34
        scaledGaussian = lambda x: exp(-(1 / 2) * (x ** 2))
        self.region_threshold = region_threshold
        self.imgSize = imgSize
        self.standardGaussianHeat = self._gen_gaussian_heatmap(imgSize, distanceRatio)

        # Bounding box of the pixels above the region threshold
        _, binary = cv2.threshold(self.standardGaussianHeat, region_threshold * 255, 255, 0)
        np_contours = np.roll(np.array(np.where(binary != 0)), 1, axis=0).transpose().reshape(-1, 2)
        # cv2.boundingRect expects int32 or float32 points
        x, y, w, h = cv2.boundingRect(np_contours.astype(np.int32))
        self.regionbox = np.array([[x, y], [x + w, y], [x + w, y + h], [x, y + h]], dtype=np.int32)
        # print("regionbox", self.regionbox)

        # Bounding box of the pixels above the affinity threshold
        _, binary = cv2.threshold(self.standardGaussianHeat, affinity_threshold * 255, 255, 0)
        np_contours = np.roll(np.array(np.where(binary != 0)), 1, axis=0).transpose().reshape(-1, 2)
        x, y, w, h = cv2.boundingRect(np_contours.astype(np.int32))
        self.affinitybox = np.array([[x, y], [x + w, y], [x + w, y + h], [x, y + h]], dtype=np.int32)
        # print("affinitybox", self.affinitybox)
        self.oribox = np.array([[0, 0, 1], [imgSize - 1, 0, 1], [imgSize - 1, imgSize - 1, 1], [0, imgSize - 1, 1]],
                               dtype=np.int32)

    def _gen_gaussian_heatmap(self, imgSize, distanceRatio):
        scaledGaussian = lambda x: exp(-(1 / 2) * (x ** 2))
        heat = np.zeros((imgSize, imgSize), np.uint8)
        for i in range(imgSize):
            for j in range(imgSize):
                distanceFromCenter = np.linalg.norm(np.array([i - imgSize / 2, j - imgSize / 2]))
                distanceFromCenter = distanceRatio * distanceFromCenter / (imgSize / 2)
                scaledGaussianProb = scaledGaussian(distanceFromCenter)
                heat[i, j] = np.clip(scaledGaussianProb * 255, 0, 255)
        return heat

    def _test(self):
        sigma = 10
        spread = 3
        extent = int(spread * sigma)
        center = spread * sigma / 2
        gaussian_heatmap = np.zeros([extent, extent], dtype=np.float32)
        for i_ in range(extent):
            for j_ in range(extent):
                gaussian_heatmap[i_, j_] = 1 / 2 / np.pi / (sigma ** 2) * np.exp(
                    -1 / 2 * ((i_ - center - 0.5) ** 2 + (j_ - center - 0.5) ** 2) / (sigma ** 2))
        gaussian_heatmap = (gaussian_heatmap / np.max(gaussian_heatmap) * 255).astype(np.uint8)
        images_folder = os.path.abspath(os.path.dirname(__file__)) + '/images'
        threshhold_guassian = cv2.applyColorMap(gaussian_heatmap, cv2.COLORMAP_JET)
        cv2.imwrite(os.path.join(images_folder, 'test_guassian.jpg'), threshhold_guassian)

    def add_region_character(self, image, target_bbox, regionbox=None):
        # Skip boxes that fall outside the image
        if np.any(target_bbox < 0) or np.any(target_bbox[:, 0] > image.shape[1]) or np.any(
                target_bbox[:, 1] > image.shape[0]):
            return image
        affi = False
        if regionbox is None:
            regionbox = self.regionbox.copy()
        else:
            affi = True

        M = cv2.getPerspectiveTransform(np.float32(regionbox), np.float32(target_bbox))
        oribox = np.array(
            [[[0, 0], [self.imgSize - 1, 0], [self.imgSize - 1, self.imgSize - 1], [0, self.imgSize - 1]]],
            dtype=np.float32)
        test1 = cv2.perspectiveTransform(np.array([regionbox], np.float32), M)[0]
        real_target_box = cv2.perspectiveTransform(oribox, M)[0]
        # print("test\ntarget_bbox", target_bbox, "\ntest1", test1, "\nreal_target_box", real_target_box)
        real_target_box = np.int32(real_target_box)
        real_target_box[:, 0] = np.clip(real_target_box[:, 0], 0, image.shape[1])
        real_target_box[:, 1] = np.clip(real_target_box[:, 1], 0, image.shape[0])

        # warped = cv2.warpPerspective(self.standardGaussianHeat.copy(), M, (image.shape[1], image.shape[0]))
        # warped = np.array(warped, np.uint8)
        # image = np.where(warped > image, warped, image)
        if np.any(target_bbox[0] < real_target_box[0]) or (
                target_bbox[3, 0] < real_target_box[3, 0] or target_bbox[3, 1] > real_target_box[3, 1]) or (
                target_bbox[1, 0] > real_target_box[1, 0] or target_bbox[1, 1] < real_target_box[1, 1]) or (
                target_bbox[2, 0] > real_target_box[2, 0] or target_bbox[2, 1] > real_target_box[2, 1]):
            # if False:
            # Warp onto a canvas the size of the whole image
            warped = cv2.warpPerspective(self.standardGaussianHeat.copy(), M, (image.shape[1], image.shape[0]))
            warped = np.array(warped, np.uint8)
            image = np.where(warped > image, warped, image)
            # _M = cv2.getPerspectiveTransform(np.float32(regionbox), np.float32(_target_box))
            # warped = cv2.warpPerspective(self.standardGaussianHeat.copy(), _M, (width, height))
            # warped = np.array(warped, np.uint8)
            #
            # # if affi:
            # # print("warped", warped.shape, real_target_box, target_bbox, _target_box)
            # # cv2.imshow("1123", warped)
            # # cv2.waitKey()
            # image[ymin:ymax, xmin:xmax] = np.where(warped > image[ymin:ymax, xmin:xmax], warped,
            #                                        image[ymin:ymax, xmin:xmax])
        else:
            # Warp only into the bounding rectangle of the transformed box
            xmin = real_target_box[:, 0].min()
            xmax = real_target_box[:, 0].max()
            ymin = real_target_box[:, 1].min()
            ymax = real_target_box[:, 1].max()

            width = xmax - xmin
            height = ymax - ymin
            _target_box = target_bbox.copy()
            _target_box[:, 0] -= xmin
            _target_box[:, 1] -= ymin
            _M = cv2.getPerspectiveTransform(np.float32(regionbox), np.float32(_target_box))
            warped = cv2.warpPerspective(self.standardGaussianHeat.copy(), _M, (int(width), int(height)))
            warped = np.array(warped, np.uint8)
            if warped.shape[0] != (ymax - ymin) or warped.shape[1] != (xmax - xmin):
                print("region (%d:%d,%d:%d) warped shape (%d,%d)" % (
                    ymin, ymax, xmin, xmax, warped.shape[1], warped.shape[0]))
                return image
            # if affi:
            # print("warped", warped.shape, real_target_box, target_bbox, _target_box)
            # cv2.imshow("1123", warped)
            # cv2.waitKey()
            image[ymin:ymax, xmin:xmax] = np.where(warped > image[ymin:ymax, xmin:xmax], warped,
                                                   image[ymin:ymax, xmin:xmax])
        return image

    def add_affinity_character(self, image, target_bbox):
        return self.add_region_character(image, target_bbox, self.affinitybox)

    def add_affinity(self, image, bbox_1, bbox_2):
        # The affinity box joins the upper and lower triangle centers of two adjacent character boxes
        center_1, center_2 = np.mean(bbox_1, axis=0), np.mean(bbox_2, axis=0)
        tl = np.mean([bbox_1[0], bbox_1[1], center_1], axis=0)
        bl = np.mean([bbox_1[2], bbox_1[3], center_1], axis=0)
        tr = np.mean([bbox_2[0], bbox_2[1], center_2], axis=0)
        br = np.mean([bbox_2[2], bbox_2[3], center_2], axis=0)

        affinity = np.array([tl, tr, br, bl])

        return self.add_affinity_character(image, affinity.copy()), np.expand_dims(affinity, axis=0)

    def generate_region(self, image_size, bboxes):
        height, width = image_size[0], image_size[1]
        target = np.zeros([height, width], dtype=np.uint8)
        for i in range(len(bboxes)):
            character_bbox = np.array(bboxes[i].copy())
            for j in range(bboxes[i].shape[0]):
                target = self.add_region_character(target, character_bbox[j])

        return target

    def generate_affinity(self, image_size, bboxes, words):
        height, width = image_size[0], image_size[1]
        target = np.zeros([height, width], dtype=np.uint8)
        affinities = []
        for i in range(len(words)):
            character_bbox = np.array(bboxes[i])
            total_letters = 0
            for char_num in range(character_bbox.shape[0] - 1):
                target, affinity = self.add_affinity(target, character_bbox[total_letters],
                                                     character_bbox[total_letters + 1])
                affinities.append(affinity)
                total_letters += 1
        if len(affinities) > 0:
            affinities = np.concatenate(affinities, axis=0)
        return target, affinities

    def saveGaussianHeat(self):
        images_folder = os.path.abspath(os.path.dirname(__file__)) + '/images'
        print('==images_folder:', images_folder)
        if not os.path.exists(images_folder):
            os.mkdir(images_folder)
        cv2.imwrite(os.path.join(images_folder, 'standard.jpg'), self.standardGaussianHeat)
        warped_color = cv2.applyColorMap(self.standardGaussianHeat, cv2.COLORMAP_JET)
        cv2.polylines(warped_color, [np.reshape(self.regionbox, (-1, 1, 2))], True, (255, 255, 255), thickness=1)
        cv2.imwrite(os.path.join(images_folder, 'standard_color.jpg'), warped_color)
        standardGaussianHeat1 = self.standardGaussianHeat.copy()
        threshhold = self.region_threshold * 255
        standardGaussianHeat1[standardGaussianHeat1 > 0] = 255
        threshhold_guassian = cv2.applyColorMap(standardGaussianHeat1, cv2.COLORMAP_JET)
        cv2.polylines(threshhold_guassian, [np.reshape(self.regionbox, (-1, 1, 2))], True, (255, 255, 255),
                      thickness=1)
        cv2.imwrite(os.path.join(images_folder, 'threshhold_guassian.jpg'), threshhold_guassian)


if __name__ == '__main__':
    gaussian = GaussianTransformer(512, 0.4, 0.2)
    gaussian.saveGaussianHeat()
    gaussian._test()
    bbox0 = np.array([[[0, 0], [100, 0], [100, 100], [0, 100]]])
    image = np.zeros((500, 500), np.uint8)
    # image = gaussian.add_region_character(image, bbox)
    bbox1 = np.array([[[100, 0], [200, 0], [200, 100], [100, 100]]])
    bbox2 = np.array([[[100, 100], [200, 100], [200, 200], [100, 200]]])
    bbox3 = np.array([[[0, 100], [100, 100], [100, 200], [0, 200]]])
    bbox4 = np.array([[[96, 0], [151, 9], [139, 64], [83, 58]]])
    # image = gaussian.add_region_character(image, bbox)
    # print(image.max())
    image = gaussian.generate_region((500, 500, 1), [bbox3])
    # target_gaussian_heatmap_color = imgproc.cvt2HeatmapImg(image.copy() / 255)
    # cv2.imshow("test", target_gaussian_heatmap_color)
    # cv2.imwrite("test.jpg", target_gaussian_heatmap_color)
    # cv2.waitKey()

Output images: 1. the standard Gaussian distribution; 2. its pseudo-color rendering.
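The nested Python loops in `_gen_gaussian_heatmap` visit every pixel one at a time, which is slow at `imgSize=512`. The sketch below computes the same kind of heatmap with NumPy broadcasting. The function name `gen_gaussian_heatmap_vectorized` is a hypothetical helper and not part of the original class; it assumes the same center (`img_size / 2`) and the same `distance_ratio` scaling as the loop version.

```python
import numpy as np


def gen_gaussian_heatmap_vectorized(img_size=512, distance_ratio=3.34):
    """Hypothetical vectorized counterpart of _gen_gaussian_heatmap."""
    # Pixel offsets from the center, matching (i - imgSize / 2) in the loop version.
    coords = np.arange(img_size, dtype=np.float64) - img_size / 2
    ii, jj = np.meshgrid(coords, coords, indexing="ij")
    # Scale the distance so that half the image width corresponds to distance_ratio.
    dist = distance_ratio * np.sqrt(ii ** 2 + jj ** 2) / (img_size / 2)
    heat = np.exp(-0.5 * dist ** 2) * 255
    # Clip to the valid range and truncate to 8-bit.
    return np.clip(heat, 0, 255).astype(np.uint8)


heat = gen_gaussian_heatmap_vectorized(64)
print(heat.shape, heat.dtype, heat.max())
```

The result could be assigned to `self.standardGaussianHeat` in place of the loop output, though that substitution has not been checked against the rest of the class here.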

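II. Generating a 3D heatmap from 3D points

Note that the 3D points here are expressed in the wrist coordinate system. The bound of 400 covers movement in both the positive and negative directions: x, y and z each lie in [-200, 200], so dividing by 400 and then adding 0.5 normalizes them to [0, 1].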
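As a small worked example of this normalization, the sketch below maps a few coordinates from [-200, 200] to voxel positions on a 64-wide axis. The helper name `to_voxel_coords` is hypothetical; the bound of 400 and the size of 64 are the values used in this section.

```python
import numpy as np


def to_voxel_coords(values, bound=400.0, size=64):
    """Map wrist-relative coordinates in [-bound/2, bound/2] to [0, size]."""
    values = np.asarray(values, dtype=np.float32)
    # values / bound lies in [-0.5, 0.5]; adding 0.5 shifts it to [0, 1].
    return (values / bound + 0.5) * size


voxels = to_voxel_coords([-200.0, 0.0, 100.0, 200.0])
print(voxels)  # [ 0. 32. 48. 64.]
```

A coordinate of exactly 200 lands on 64, one past the last valid index 63, which is why the code that follows also checks `mu < W` (and likewise for H and D) when building `target_weight`.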

import numpy as np

joints_3d = np.array([[-24.25473, -14.594984, -123.86395],[-21.384483, -2.5870495, -102.83917],[-2.1389189, 0.4295807, -77.805786],[0.4713831, -4.49901, -45.992554],[-24.65406, -7.517185, -131.60638],[-20.059254, 10.334126, -121.49127],[-14.004571, 31.53947, -106.92682],[0.97307205, 54.702316, -72.0885],[-34.38083, -5.5681667, -132.75537],[-36.56296, 15.5307255, -122.979614],[-34.097603, 38.7413, -104.22839],[-23.116486, 59.41091, -62.15027],[-40.734467, -13.705656, -126.29144],[-48.140816, 5.673216, -115.56366],[-52.453285, 28.091465, -97.55463],[-41.134747, 46.38981, -56.097656],[-38.43096, -12.890821, -117.34686],[-44.426872, -1.405302, -101.77527],[-50.584797, 10.186543, -84.22754],[-53.451706, 24.639828, -51.59436],[0., 0., 0.]], dtype=np.float32)

joints_3d_visible = np.array([[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.]], dtype=np.float32)

heatmap3d_depth_bound = 400

W, H, D = 64, 64, 64

num_joints = 21

mu_x = (joints_3d[:, 0] / heatmap3d_depth_bound + 0.5) * W

mu_y = (joints_3d[:, 1] / heatmap3d_depth_bound + 0.5) * H

mu_z = (joints_3d[:, 2] / heatmap3d_depth_bound + 0.5) * D

print('==mu_x:', mu_x)

print('==mu_y:', mu_y)

print('==mu_z:', mu_z)

target = np.zeros([num_joints, D, H, W], dtype=np.float32)

target_weight = joints_3d_visible[:, 0].astype(np.float32)

# print('==target_weight:', target_weight)

target_weight = target_weight * (mu_z >= 0) * (mu_z < D) * (mu_x >= 0) * (mu_x < W) * (mu_y >= 0) * (mu_y < H)

# print('==target_weight:', target_weight)

# if use_different_joint_weights:

# target_weight = target_weight * joint_weights

target_weight = target_weight[:, None]

sigma = 2.5

# only compute the voxel value near the joints location

tmp_size = 3 * sigma

# get neighboring voxels coordinates

x = y = z = np.arange(2 * tmp_size + 1, dtype=np.float32) - tmp_size

# print('==x,y,z:', x, y, z)

# print('==len(x):', len(x))

zz, yy, xx = np.meshgrid(z, y, x)

# print('==xx.shape:', xx.shape)

# print(xx[0])

xx = xx[None, ...].astype(np.float32)  # (1, 2 * tmp_size + 1, 2 * tmp_size + 1, 2 * tmp_size + 1)

yy = yy[None, ...].astype(np.float32)

zz = zz[None, ...].astype(np.float32)

mu_x = mu_x[..., None, None, None]  # (21, 1, 1, 1)

mu_y = mu_y[..., None, None, None]

mu_z = mu_z[..., None, None, None]

xx, yy, zz = xx + mu_x, yy + mu_y, zz + mu_z  # (21, 2 * tmp_size + 1, 2 * tmp_size + 1, 2 * tmp_size + 1)

# print('==np.min(xx):', np.min(xx))

# print('==np.max(xx):', np.max(xx))

# print('==np.min(yy):', np.min(yy))

# print('==np.max(yy):', np.max(yy))

# print('==np.min(zz):', np.min(zz))

# print('==np.max(zz):', np.max(zz))

# round the coordinates

xx = xx.round().clip(0, W - 1)

yy = yy.round().clip(0, H - 1)

zz = zz.round().clip(0, D - 1)

# compute the target value near joints

# (21, 2 * tmp_size + 1, 2 * tmp_size + 1, 2 * tmp_size + 1)

local_target = np.exp(-((xx - mu_x) ** 2 + (yy - mu_y) ** 2 + (zz - mu_z) ** 2) / (2 * sigma ** 2))

# print('==local_target.shape:', local_target.shape)

# import pdb;pdb.set_trace()

# put the local target value to the full target heatmap

# print('==xx.shape:', xx.shape)

local_size = xx.shape[1]

idx_joints = np.tile(np.arange(num_joints)[:, None, None, None],[1, local_size, local_size, local_size])

# print('==idx_joints.shape:', idx_joints.shape)

# print("===np.stack([idx_joints, zz, yy, xx],axis=-1)", np.stack([idx_joints, zz, yy, xx], axis=-1).shape)

# np.long was removed in NumPy 1.24; np.int64 is used instead

idx = np.stack([idx_joints, zz, yy, xx], axis=-1).astype(np.int64).reshape(-1, 4)

target[idx[:, 0], idx[:, 1], idx[:, 2], idx[:, 3]] = local_target.reshape(-1)

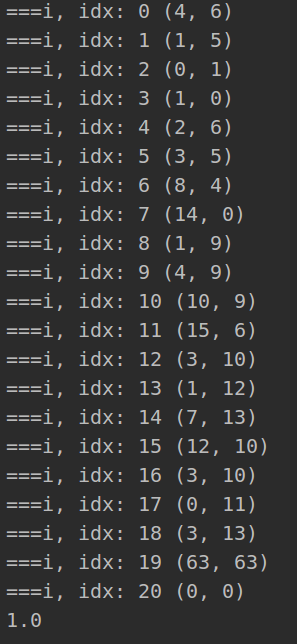

for i in range(num_joints):
    idx = np.unravel_index(np.argmax(target[i], axis=None), target[i].shape)
    print('===i, idx:', i, idx)

max_bound = 255

target = target * max_bound

print('==np.max(target):', np.max(target))

3D point coordinates:

Index of the maximum value in each 3D heatmap
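The argmax indices printed above are in (z, y, x) voxel order. To compare them with the input joints, the normalization can be inverted. The following is a minimal sketch under the assumptions of this section (bound of 400, heatmap laid out as `(num_joints, D, H, W)`); `decode_heatmap3d` is a hypothetical helper name, and the demo heatmap is synthetic rather than taken from the original run.

```python
import numpy as np


def decode_heatmap3d(target, bound=400.0):
    """Recover approximate (x, y, z) coordinates from per-joint heatmap peaks."""
    num_joints, D, H, W = target.shape
    # Flatten each joint volume and locate its maximum.
    flat_idx = target.reshape(num_joints, -1).argmax(axis=1)
    z, y, x = np.unravel_index(flat_idx, (D, H, W))
    voxels = np.stack([x, y, z], axis=1).astype(np.float32)
    size = np.array([W, H, D], dtype=np.float32)
    # Inverse of mu = (coord / bound + 0.5) * size.
    return (voxels / size - 0.5) * bound


# Synthetic demo: a single joint with its peak at z=10, y=20, x=30.
demo = np.zeros((1, 64, 64, 64), dtype=np.float32)
demo[0, 10, 20, 30] = 1.0
decoded = decode_heatmap3d(demo)
print(decoded)  # [[ -12.5  -75.  -137.5]]
```

Because the peak is snapped to an integer voxel, the recovered coordinate is only accurate to about one voxel, which is 400 / 64 = 6.25 units along each axis at this resolution.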

III. Generating a heatmap from 2D points

import numpy as np

# x and y are in the image-plane coordinate system; z is in the wrist coordinate system

joints_3d = np.array([[24.25473, 14.594984, -220.86395],[21.384483, 2.5870495, -102.83917],[2.1389189, 0.4295807, -77.805786],[0.4713831, 4.49901, -45.992554],[24.65406, 7.517185, -131.60638],[20.059254, 10.334126, -121.49127],[14.004571, 31.53947, -106.92682],[0.97307205, 54.702316, -72.0885],[34.38083, 5.5681667, -132.75537],[36.56296, 15.5307255, -122.979614],[34.097603, 38.7413, -104.22839],[23.116486, 59.41091, -62.15027],[40.734467, 13.705656, -126.29144],[48.140816, 5.673216, -115.56366],[52.453285, 28.091465, -97.55463],[41.134747, 46.38981, -56.097656],[38.43096, 12.890821, -117.34686],[44.426872, 1.405302, -101.77527],[50.584797, 10.186543, -84.22754],[255, 255, 51.59436],[0., 0., 0.]], dtype=np.float32)

joints_3d_visible = np.array([[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.],[1., 1., 1.]], dtype=np.float32)

img_size = [256, 256]

W, H, D = 64, 64, 64

num_joints = 21

mu_x = joints_3d[:, 0] * W / img_size[0]

mu_y = joints_3d[:, 1] * H / img_size[1]

print('==mu_x:', mu_x)

print('==mu_y:', mu_y)

target = np.zeros([num_joints, H, W], dtype=np.float32)

target_weight = joints_3d_visible[:, 0].astype(np.float32)

# print('==target_weight:', target_weight)

target_weight = target_weight * (mu_x >= 0) * (mu_x < W) * (mu_y >= 0) * (mu_y < H)

target_weight = target_weight[:, None]

sigma = 2.5

# only compute the pixel value near the joints location

tmp_size = 3 * sigma

# get neighboring pixel coordinates

x = y = np.arange(2 * tmp_size + 1, dtype=np.float32) - tmp_size

yy, xx = np.meshgrid(y, x)

xx = xx[None, ...].astype(np.float32)  # (1, 2 * tmp_size + 1, 2 * tmp_size + 1)

yy = yy[None, ...].astype(np.float32)

mu_x = mu_x[..., None, None]  # (21, 1, 1)

mu_y = mu_y[..., None, None]

xx, yy = xx + mu_x, yy + mu_y  # (21, 2 * tmp_size + 1, 2 * tmp_size + 1)

print('==np.min(xx):', np.min(xx))

print('==np.max(xx):', np.max(xx))

# round the coordinates

xx = xx.round().clip(0, W - 1)

yy = yy.round().clip(0, H - 1)

# compute the target value near joints

local_target = np.exp(-((xx - mu_x) ** 2 + (yy - mu_y) ** 2) / (2 * sigma ** 2))

print('==local_target.shape:', local_target.shape)

print('==np.max(local_target):', np.max(local_target))

# import pdb;pdb.set_trace()

# put the local target value to the full target heatmap

local_size = xx.shape[1]

idx_joints = np.tile(np.arange(num_joints)[:, None, None],[1, local_size, local_size])

print('==idx_joints.shape:', idx_joints.shape)

# print("===np.stack([idx_joints, zz, yy, xx],axis=-1)", np.stack([idx_joints, zz, yy, xx], axis=-1).shape)

# np.long was removed in NumPy 1.24; np.int64 is used instead

idx = np.stack([idx_joints, yy, xx], axis=-1).astype(np.int64).reshape(-1, 3)

target[idx[:, 0], idx[:, 1], idx[:, 2]] = local_target.reshape(-1)

for i in range(num_joints):
    idx = np.unravel_index(np.argmax(target[i], axis=None), target[i].shape)
    print('===i, idx:', i, idx)

print(np.max(target))

print(np.min(target))

2D point coordinates:

Index of the maximum value in each 2D heatmap
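For comparison, the same 2D targets can be produced by evaluating the Gaussian on the full heatmap grid instead of scattering a local window. This is a different technique from the windowed code above, shown only as a cross-check: it fills every pixel rather than only those within about 3 sigma of the joint. The function name `dense_heatmap2d` is hypothetical; the image size, heatmap size and sigma defaults are the values used in this section.

```python
import numpy as np


def dense_heatmap2d(joints_xy, img_size=(256, 256), heatmap_size=(64, 64), sigma=2.5):
    """Dense Gaussian heatmaps, one (H, W) map per joint."""
    joints_xy = np.asarray(joints_xy, dtype=np.float32)
    W, H = heatmap_size
    # Scale pixel coordinates from image resolution to heatmap resolution.
    mu_x = joints_xy[:, 0] * W / img_size[0]
    mu_y = joints_xy[:, 1] * H / img_size[1]
    ys, xs = np.meshgrid(np.arange(H, dtype=np.float32),
                         np.arange(W, dtype=np.float32), indexing="ij")
    # Squared distance of every pixel to every joint center: (num_joints, H, W).
    d2 = (xs[None] - mu_x[:, None, None]) ** 2 + (ys[None] - mu_y[:, None, None]) ** 2
    return np.exp(-d2 / (2 * sigma ** 2)).astype(np.float32)


demo_joints = [[128.0, 64.0], [24.25473, 14.594984]]
demo_target = dense_heatmap2d(demo_joints)
peaks = [tuple(int(v) for v in np.unravel_index(np.argmax(t), t.shape)) for t in demo_target]
print(peaks)  # [(16, 32), (4, 6)]
```

Each peak is reported in (y, x) order, matching the index layout printed by the loop above.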
