文章目录

- 目录

- 1.DenseNet网络结构

- 2.稠密连接及其优点

- 3.代码实现

- 4.补充说明

目录

1.DenseNet网络结构

2.稠密连接及其优点

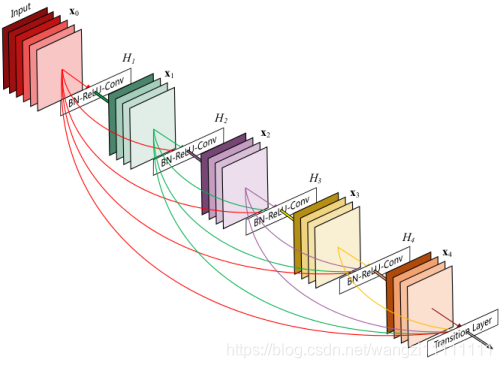

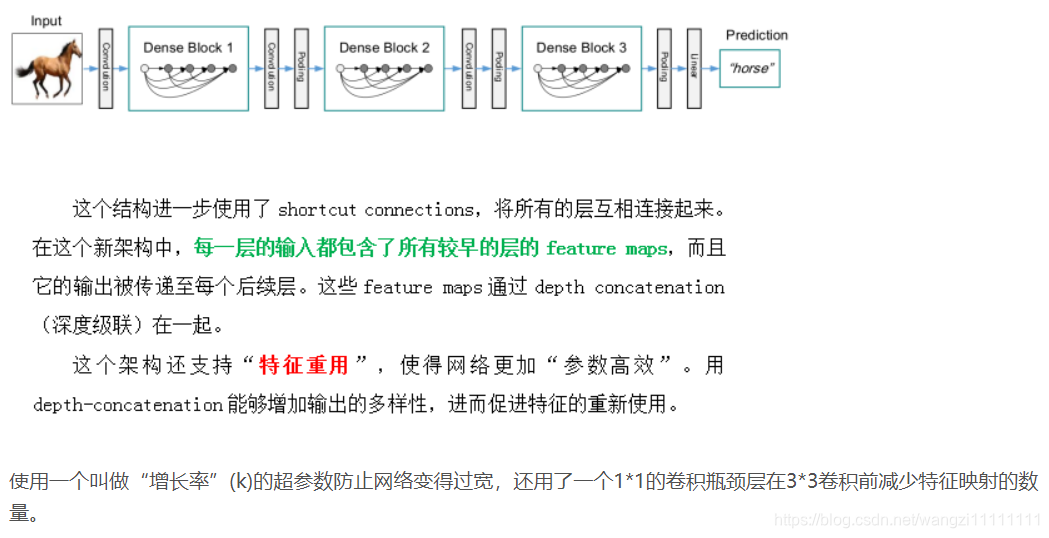

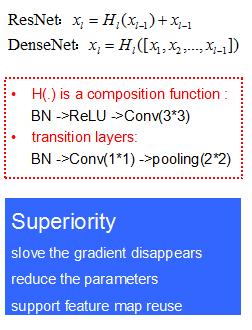

每层以之前层的输出为输入,对于有L层的传统网络,一共有L个连接,对于DenseNet,则有L*(L+1)/2。

这篇论文主要参考了Highway Networks,Residual Networks (ResNets)以及GoogLeNet,通过加深网络结构,提升分类结果。

加深网络结构首先需要解决的是梯度消失问题

解决方案是:尽量缩短前层和后层之间的连接。

比如上图中,H4层可以直接用到原始输入信息X0,同时还用到了之前层对X0处理后的信息,这样能够最大化信息的流动。

反向传播过程中,X0的梯度信息包含了损失函数直接对X0的导数,有利于梯度传播。

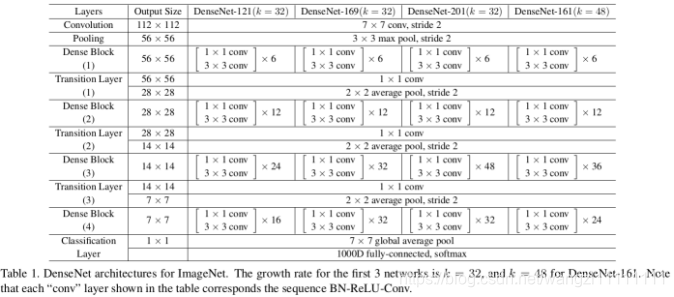

DenseNet具体网络结构:

3.代码实现

conv block、transition block、Dense block

def conv_block(x, stage, branch, nb_filter, dropout_rate=None, weight_decay=1e-4):"""Apply BatchNorm, Relu, bottleneck 1x1 Conv2D, 3x3 Conv2D, and option dropout# Argumentsx: input tensor stage: index for dense blockbranch: layer index within each dense blocknb_filter: number of filtersdropout_rate: dropout rateweight_decay: weight decay factor"""eps = 1.1e-5conv_name_base = 'conv' + str(stage) + '_' + str(branch)relu_name_base = 'relu' + str(stage) + '_' + str(branch)" 1*1 convolutional (Bottleneck layer)"inter_channel = 4 * nb_filterx = BatchNormalization(epsilon=eps, axis=3, gamma_regularizer=l2(weight_decay),beta_regularizer=l2(weight_decay), name=conv_name_base+'_x1_bn')(x)x = Activation('relu', name=relu_name_base + '_x1')(x)x = Conv2D(filters=inter_channel, kernel_size=(1,1), strides=(1,1), padding='same',kernel_initializer='he_uniform',kernel_regularizer=l2(weight_decay),name=conv_name_base + '_x1')(x)if dropout_rate:x = Dropout(dropout_rate)(x)" 3*3 convolutional"x = BatchNormalization(epsilon=eps, axis=3, gamma_regularizer=l2(weight_decay),beta_regularizer=l2(weight_decay), name=conv_name_base + '_x2_bn')(x)x = Activation('relu', name=relu_name_base + '_x2')(x)x = Conv2D(filters=nb_filter, kernel_size=(3,3), strides=(1,1), padding='same', kernel_initializer='he_uniform',kernel_regularizer=l2(weight_decay),name=conv_name_base + '_x2')(x)if dropout_rate:x = Dropout(dropout_rate)(x)return xdef transition_block(x, stage, nb_filter, compression=1.0, dropout_rate=None, weight_decay=1e-4):"""Apply BatchNorm, 1x1 Convolution, averagePooling, optional compression, dropout # Argumentsx: input tensorstage: index for dense blocknb_filter: number of filterscompression: calculated as 1 - reduction. Reduces the number of feature maps in the transition block.dropout_rate: dropout rateweight_decay: weight decay factor"""eps = 1.1e-5conv_name_base = 'conv' + str(stage) + '_blk'relu_name_base = 'relu' + str(stage) + '_blk'pool_name_base = 'pool' + str(stage) x = BatchNormalization(epsilon=eps, axis=3, name=conv_name_base + '_bn')(x)x = Activation('relu', name=relu_name_base)(x)x = Conv2D(filters=int(nb_filter * compression), kernel_size=(1,1), strides=(1,1), padding='same', name=conv_name_base)(x)if dropout_rate:x = Dropout(dropout_rate)(x)x = AveragePooling2D((2,2), strides=(2,2), name=pool_name_base)(x)return xdef dense_block(x, stage, nb_layers, nb_filter, growth_rate, dropout_rate=None, weight_decay=1e-4, grow_nb_filters=True):"""Build a dense_block where the output of each conv_block is fed to subsequent ones# Argumentsx: input tensorstage: index for dense blocknb_layers: the number of layers of conv_block to append to the model.nb_filter: number of filtersgrowth_rate: growth ratedropout_rate: dropout rateweight_decay: weight decay factorgrow_nb_filters: flag to decide to allow number of filters to grow"""eps = 1.1e-5concat_feat = xfor i in range(nb_layers):branch = i+1x = conv_block(concat_feat, stage, branch, growth_rate, dropout_rate, weight_decay)concat_feat = concatenate([concat_feat, x], axis=3, name='concat_' + str(stage) + '_' + str(branch))if grow_nb_filters:nb_filter += growth_ratereturn concat_feat, nb_filterDenseNet-BC-121

def DenseNet_BC_121(input_shape=(64,64,3), nb_dense_block=4, growth_rate=32, nb_filter=16,reduction=0.0, dropout_rate=0.0, classes=6, weight_decay=1e-4, weights_path=None):"""Instantiate the DenseNet 121 architecture,# Argumentsnb_dense_block: number of dense blocks to add to endgrowth_rate: number of filters to add per dense blocknb_filter: initial number of filtersreduction: reduction factor of transition blocks.dropout_rate: dropout rateweight_decay: weight decay factorclasses: optional number of classes to classify imagesweights_path: path to pre-trained weights# ReturnsA Keras model instance."""eps = 1.1e-5compression = 1.0 - reductionnb_layers = [6,12,24,16]x_input = Input(input_shape)"Initial convolution"x = Conv2D(filters=nb_filter, kernel_size=(7,7), strides=(1,1), padding='same', name='conv1')(x_input)x = BatchNormalization(epsilon=eps, axis=3, name='conv1_bn')(x)x = Activation('relu', name='relu1')(x)x = MaxPooling2D((3,3), strides=(2,2), padding='same', name='pool1')(x)"Add dense blocks"for block_idx in range(nb_dense_block - 1):stage = block_idx + 2x, nb_filter = dense_block(x, stage, nb_layers[block_idx], nb_filter, growth_rate,dropout_rate=dropout_rate, weight_decay=weight_decay)"Add transition_block"x = transition_block(x, stage, nb_filter, compression=compression, dropout_rate=dropout_rate, weight_decay=weight_decay)nb_filter = int(nb_filter * compression)"the last dense block does not have a transition"final_stage = stage + 1x, nb_filter = dense_block(x, final_stage, nb_layers[-1], nb_filter, growth_rate,dropout_rate=dropout_rate, weight_decay=weight_decay)x = BatchNormalization(epsilon=eps, axis=3, name='conv' + str(final_stage) + 'blk_bn')(x)x = Activation('relu', name='relu' + str(final_stage) + '_blk')(x)x = GlobalAveragePooling2D(name='pool' + str(final_stage))(x)x = Dense(classes, activation='softmax', name='softmax_prob')(x)model = Model(inputs=x_input, outputs=x, name='DenseNet_BC_121')if weights_path is not None:model.load_weights(weights_path)return model

4.补充说明

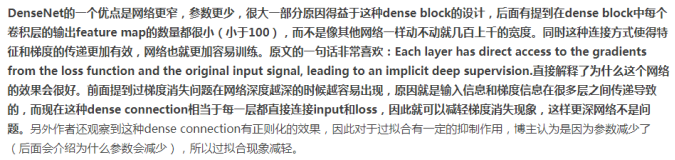

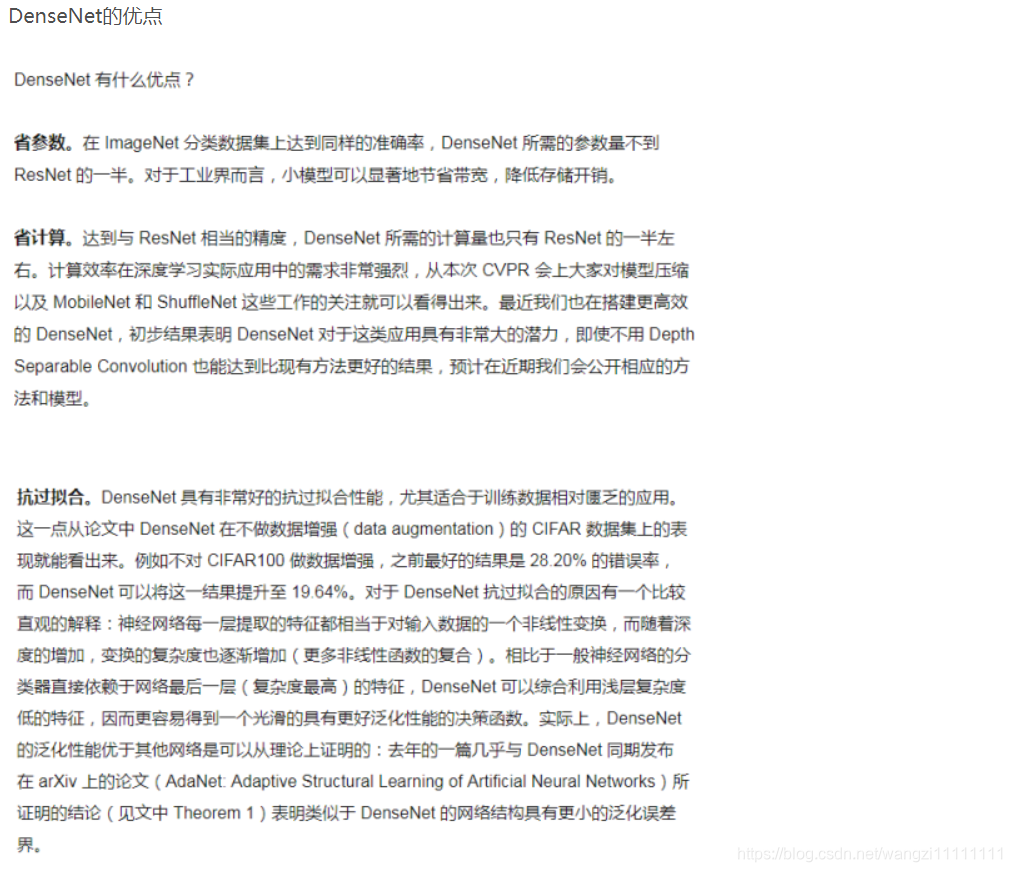

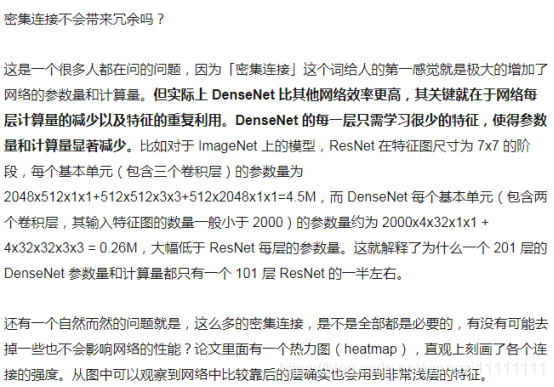

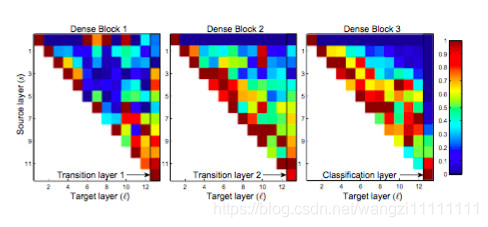

DenseNet网络更窄、参数更少

文中还用到dropout操作来随机减少分支,避免过拟合,毕竟这篇文章的连接确实多。

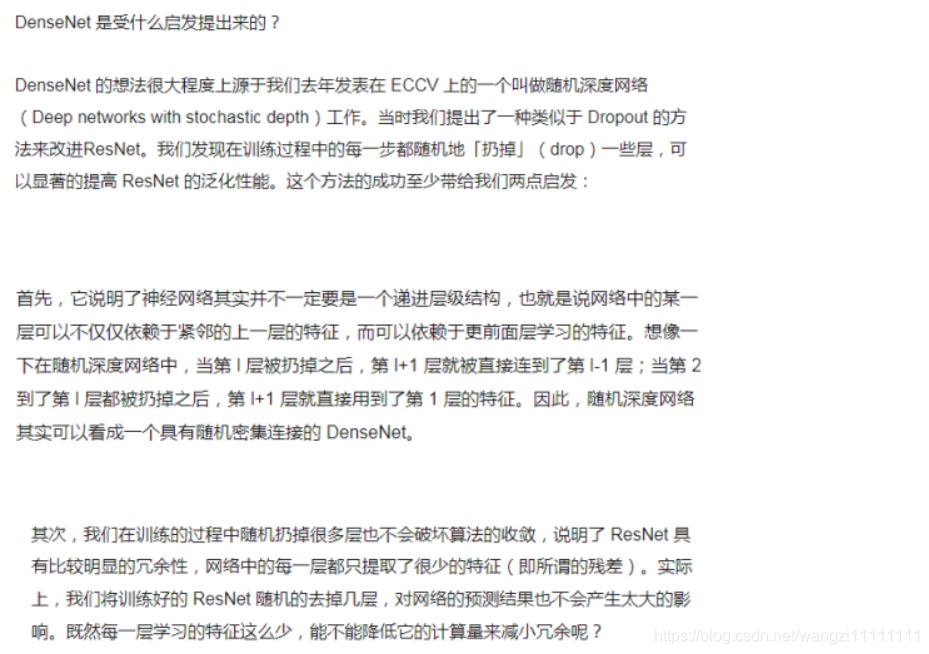

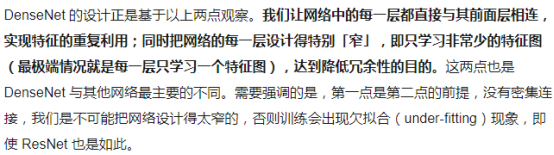

** 原作者的一些解释 **

-window7配置iPython)

-- Capsules Networks(CapsNet))

-列表list,for循环)

-- GAN)

--try-expect)

-Argparse 简易使用教程)

-元组tuple)

Keras)

)

-数据载入接口:Dataloader、datasets)

)

-- NoSQL数据库)

-字典dictionary、集合)