数据不平衡处理

重点 (Top highlight)

One of the common problems in Machine Learning is handling the imbalanced data, in which there is a highly disproportionate in the target classes.

机器学习中的常见问题之一是处理不平衡的数据,其中目标类别的比例非常不均衡。

Hello world, this is my second blog for the Data Science community. In this blog, we are going to see how to deal with the multiclass imbalanced data problem.

大家好,这是我的第二本面向数据科学社区的博客 。 在此博客中,我们将看到如何处理多类不平衡数据问题。

什么是多类不平衡数据? (What is Multiclass Imbalanced Data?)

When the target classes (two or more) of classification problems are not equally distributed, then we call it Imbalanced data. If we failed to handle this problem then the model will become a disaster because modeling using class-imbalanced data is biased in favor of the majority class.

当分类问题的目标类别(两个或多个)没有平均分布时,我们称其为不平衡数据。 如果我们不能解决这个问题,那么该模型将成为灾难,因为使用类不平衡数据进行建模会偏向多数类。

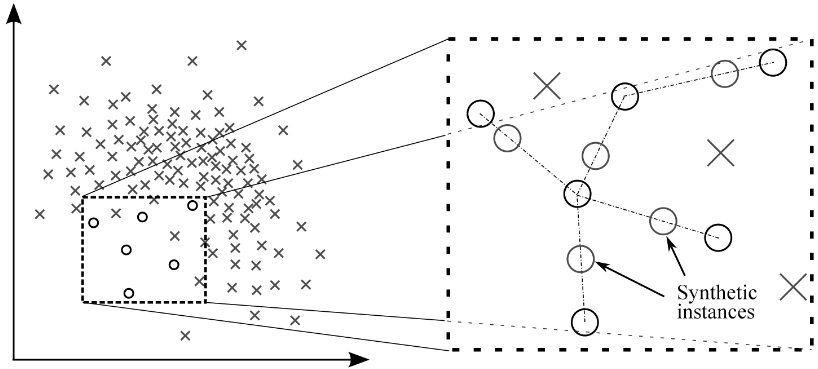

There are different methods of handling imbalanced data, the most common methods are Oversampling and creating synthetic samples.

处理不平衡数据的方法多种多样,最常见的方法是过采样和创建合成样本。

什么是SMOTE? (What is SMOTE?)

SMOTE is an oversampling technique that generates synthetic samples from the dataset which increases the predictive power for minority classes. Even though there is no loss of information but it has a few limitations.

SMOTE是一种过采样技术,可从数据集中生成合成样本,从而提高了少数群体的预测能力。 即使没有信息丢失,它也有一些局限性。

Limitations:

局限性:

- SMOTE is not very good for high dimensionality data SMOTE对于高维数据不是很好

- Overlapping of classes may happen and can introduce more noise to the data. 类的重叠可能会发生,并可能给数据带来更多的噪音。

So, to skip this problem, we can assign weights for the class manually with the ‘class_weight’ parameter.

因此,要跳过此问题,我们可以使用' class_weight '参数为该类手动分配权重。

为什么要使用班级重量? (Why use Class weight?)

Class weights modify the loss function directly by giving a penalty to the classes with different weights. It means purposely increasing the power of the minority class and reducing the power of the majority class. Therefore, it gives better results than SMOTE.

类权重通过对具有不同权重的类进行惩罚来直接修改损失函数。 这意味着有目的地增加少数群体的权力,并减少多数阶级的权力。 因此,它比SMOTE提供更好的结果。

概述: (Overview:)

I aim to keep this blog very simple. We have a few most preferred techniques for getting the weights for the data which worked for my Imbalanced learning problems.

我的目的是使这个博客非常简单。 我们有一些最优选的技术来获取对我的失衡学习问题有用的数据权重。

- Sklearn utils. Sklearn实用程序。

- Counts to Length. 数到长度。

- Smoothen Weights. 平滑权重。

- Sample Weight Strategy. 样品重量策略。

1. Sklearn实用程序: (1. Sklearn utils:)

We can get class weights using sklearn to compute the class weight. By adding those weight to the minority classes while training the model, can help the performance while classifying the classes.

我们可以使用sklearn计算班级权重。 通过在训练模型时将这些权重添加到少数类中,可以在对类进行分类的同时帮助提高性能。

from sklearn.utils import class_weightclass_weight = class_weight.compute_class_weight('balanced,

np.unique(target_Y),

target_Y)model = LogisticRegression(class_weight = class_weight)

model.fit(X,target_Y)# ['balanced', 'calculated balanced', 'normalized'] are hyperpaameters whic we can play with.We have a class_weight parameter for almost all the classification algorithms from Logistic regression to Catboost. But XGboost has scale_pos_weight for binary classification and sample_weights (refer 4) for both binary and multiclass problems.

对于从Logistic回归到Catboost的几乎所有分类算法,我们都有一个class_weight参数。 但是XGboost具有用于二进制分类的scale_pos_weight和用于二进制和多类问题的sample_weights(请参阅4)。

2.数长比: (2. Counts to Length Ratio:)

Very simple and straightforward! Dividing the no. of counts of each class with the no. of rows. Then

非常简单明了! 除数 每个班级的人数 行。 然后

weights = df[target_Y].value_counts()/len(df)

model = LGBMClassifier(class_weight = weights)

model.fit(X,target_Y)3.平滑权重技术: (3. Smoothen Weights Technique:)

This is one of the preferable methods of choosing weights.

这是选择权重的首选方法之一。

labels_dict is the dictionary object contains counts of each class.

labels_dict是字典对象,包含每个类的计数。

The log function smooths the weights for the imbalanced class.

对数函数可平滑不平衡类的权重。

def class_weight(labels_dict,mu=0.15):

total = np.sum(labels_dict.values())

keys = labels_dict.keys()

weight = dict()for i in keys:

score = np.log(mu*total/float(labels_dict[i]))

weight[i] = score if score > 1 else 1return weight# random labels_dict

labels_dict = weights = class_weight(labels_dict)model = RandomForestClassifier(class_weight = weights)

model.fit(X,target_Y)4.样本权重策略: (4. Sample Weight Strategy:)

This below function is different from the class_weight parameter which is used to get sample weights for the XGboost algorithm. It returns different weights for each training sample.

下面的函数不同于用于获取XGboost算法的样本权重的class_weight参数。 对于每个训练样本,它返回不同的权重。

Sample_weight is an array of the same length as data, containing weights to apply to the model’s loss for each sample.

Sample_weight是与数据长度相同的数组,其中包含权重以应用于每个样本的模型损失。

def BalancedSampleWeights(y_train,class_weight_coef):

classes = np.unique(y_train, axis = 0)

classes.sort()

class_samples = np.bincount(y_train)

total_samples = class_samples.sum()

n_classes = len(class_samples)

weights = total_samples / (n_classes * class_samples * 1.0)

class_weight_dict = {key : value for (key, value) in zip(classes, weights)}

class_weight_dict[classes[1]] = class_weight_dict[classes[1]] *

class_weight_coef

sample_weights = [class_weight_dict[i] for i in y_train]

return sample_weights#Usage

weight=BalancedSampleWeights(

model = XGBClassifier(sample_weight = weight)

model.fit(X, class_weights vs sample_weight:

class_weights与sample_weight:

sample_weights is used to give weights for each training sample. That means that you should pass a one-dimensional array with the exact same number of elements as your training samples.

sample_weights用于给出每个训练样本的权重。 这意味着您应该传递一维数组,该数组具有与训练样本完全相同数量的元素。

class_weights is used to give weights for each target class. This means you should pass a weight for each class that you are trying to classify.

class_weights用于为每个目标类赋予权重。 这意味着您应该为要分类的每个类传递权重。

结论: (Conclusion:)

The above are few methods of finding class weights and sample weights for your classifier. I mention almost all the techniques which worked well for my project.

上面是为分类器找到分类权重和样本权重的几种方法。 我提到几乎所有对我的项目都有效的技术。

I’m requesting the readers to give a try on these techniques that could help you, if not take it as learning 😄 it may help you another time 😜

我要求读者尝试这些可以帮助您的技术,如果不以学习为learning,那可能会再次帮助您😜

Reach me at LinkedIn 😍

在LinkedIn上到达我

翻译自: https://towardsdatascience.com/how-to-handle-multiclass-imbalanced-data-say-no-to-smote-e9a7f393c310

数据不平衡处理

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如若转载,请注明出处:http://www.mzph.cn/news/389513.shtml

如若内容造成侵权/违法违规/事实不符,请联系多彩编程网进行投诉反馈email:809451989@qq.com,一经查实,立即删除!思想及实现)

)

和归一化实现)

or ‘1type‘ as a synonym of type is deprecated解决办法)

转移方式及参数传递)