Any data science or data analytics project can be generally described with the following steps:

通常可以通过以下步骤来描述任何数据科学或数据分析项目:

- Acquiring a business understanding & defining the goal of a project 获得业务理解并定义项目目标

- Getting data 获取数据

- Preprocessing and exploring data 预处理和探索数据

- Improving data, e.g., by feature engineering 改善数据,例如通过特征工程

- Visualizing data 可视化数据

- Building a model 建立模型

- Deploying the model 部署模型

- Scoring its performance 对其表现进行评分

This time, I would like to bring your attention to the data cleaning and exploration phase since it’s a step which value is hard to measure, but the impact it brings is difficult to overestimate. Insights gained during this stage can affect all further work.

这次,我想提请您注意数据清理和探索阶段,因为这是一个难以衡量的步骤,但很难估量其带来的影响。 在此阶段获得的见解会影响所有进一步的工作。

There are multiple ways you can start exploratory data analysis with:

您可以通过多种方式开始探索性数据分析:

- Load data and preprocess it: clean it from unnecessary artifacts, deal with missing values. Make your dataset comfortable to work with. 加载数据并进行预处理:清除不必要的工件,处理缺失值。 使数据集易于使用。

- Visualize as much data as possible using different kinds of plots & a pivot table. 使用不同种类的绘图和数据透视表,可视化尽可能多的数据。

目的 (Purpose)

In this tutorial, I would like to show how to prepare your data with Python and explore it using a JavaScript library for data visualization. To get the most value out of exploration, I recommend using interactive visualizations since they make exploring your data faster and more comfortable.

在本教程中,我想展示如何使用Python 准备数据并使用JavaScript库进行数据可视化探索。 为了从探索中获得最大价值,我建议使用交互式可视化,因为它们可以使您更快,更舒适地浏览数据。

Hence, we will present data in an interactive pivot table and pivot charts.

因此,我们将在交互式数据透视表和数据透视图中显示数据。

Hopefully, this approach will help you facilitate the data analysis and visualization process in Jupyter Notebook.

希望这种方法将帮助您促进Jupyter Notebook中的数据分析和可视化过程。

设置环境 (Set up your environment)

Run your Jupyter Notebook and let’s start. If Jupyter is not installed on your machine, choose the way to get it.

运行Jupyter Notebook,开始吧。 如果您的计算机上未安装Jupyter,请选择获取方式 。

获取数据 (Get your data)

Choosing the data set to work with is the number one step.

选择要使用的数据集是第一步。

If your data is already cleaned and ready to be visualized, jump to the Visualization section.

如果您的数据已被清理并准备可视化,请跳至“ 可视化”部分。

For demonstration purposes, I’ve chosen the data for the prediction of Bike Sharing Demand. It’s provided as data for the Kaggle’s competition.

出于演示目的,我选择了用于预测“ 自行车共享需求”的数据 。 作为Kaggle比赛数据提供。

本教程的导入 (Imports for this tutorial)

Classically, we will use the “pandas” library to read data into a dataframe.

传统上,我们将使用“ pandas”库将数据读入数据框。

Additionally, we will need json and IPython.display modules. The former will help us serialize/deserialize data and the latter — render HTML in the cells.

此外,我们将需要json和IPython.display模块。 前者将帮助我们对数据进行序列化/反序列化,而后者将在单元格中呈现HTML。

Here’s the full code sample with imports we need:

这是我们需要导入的完整代码示例:

from IPython.display import HTMLimport jsonimport pandas as pd读取数据 (Read data)

df = pd.read_csv('train.csv')

df = pd.read_csv('train.csv')

清理和预处理数据 (Clean & preprocess data)

Before starting data visualization, it’s a good practice to see what’s going on in the data.

在开始数据可视化之前,最好先查看数据中发生了什么。

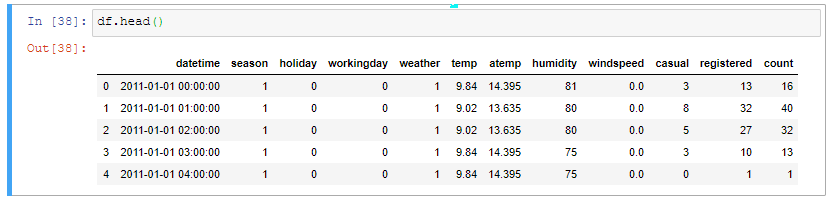

df.head()

df.head()

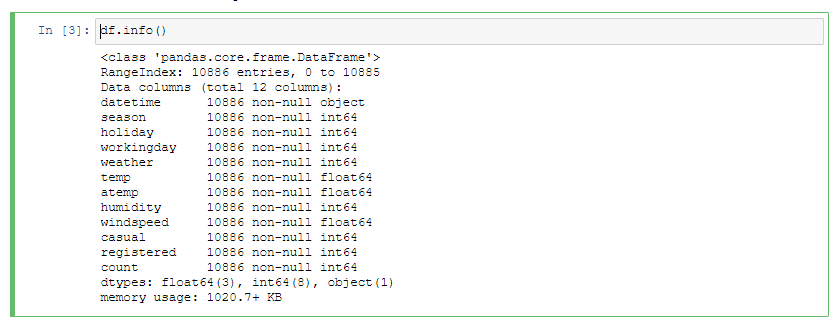

df.info()

df.info()

First, we should check the percentage of missing values.

首先,我们应该检查缺失值的百分比。

missing_percentage = df.isnull().sum() * 100 / len(df)

missing_percentage = df.isnull().sum() * 100 / len(df)

There are a lot of strategies to follow when dealing with missing data. Let me mention the main ones:

处理丢失的数据时,有许多策略可以遵循。 让我提到主要的:

- Dropping missing values. The only reason to follow this approach is when you need to quickly remove all NaNs from the data. 删除缺失值。 遵循这种方法的唯一原因是当您需要快速从数据中删除所有NaN时。

Replacing NaNs with values. This is called imputation. A common decision is to replace missing values with zeros or with a mean value.

用值替换NaN。 这称为归因 。 常见的决定是用零或平均值替换缺失值。

Luckily, we don’t have any missing values in the dataset. But if your data has, I suggest you look into a quick guide with the pros and cons of different imputation techniques.

幸运的是,我们在数据集中没有任何缺失值。 但是,如果您有数据,建议您快速了解各种插补技术的优缺点 。

管理要素数据类型 (Manage features data types)

Let’s convert the type of “datetime”’ column from object to datetime:

让我们将“ datetime”列的类型从对象转换为datetime:

df['datetime'] = pd.to_datetime(df['datetime'])

df['datetime'] = pd.to_datetime(df['datetime'])

Now we are able to engineer new features based on this column, for example:

现在,我们可以根据此专栏设计新功能,例如:

- a day of the week 一周中的一天

- a month 一个月

- an hour 一小时

df['weekday'] = df['datetime'].dt.dayofweekdf['hour'] = df['datetime'].dt.hourdf['month'] = df['datetime'].dt.monthThese features can be used further to figure out trends in rent.

这些功能可以进一步用于确定租金趋势。

Next, let’s convert string types to categorical:

接下来,让我们将字符串类型转换为分类类型:

categories = ['season', 'workingday', 'weekday', 'hour', 'month', 'weather', 'holiday']for category in categories: df[category] = df[category].astype('category')Read more about when to use the categorical data type here.

在此处阅读有关何时使用分类数据类型的更多信息。

Now, let’s make values of categorical more meaningful by replacing numbers with their categorical equivalents:

现在,通过将数字替换为对应的类别,使分类的值更有意义:

df['season'] = df['season'].replace([1, 2, 3, 4], ['spring', 'summer', 'fall', 'winter'])df['holiday'] = df['holiday'].replace([0, 1],['No', 'Yes'])By doing so, it will be easier for us to interpret data visualization later on. We won’t need to look up the meaning of a category each time we need it.

这样,以后我们将更容易解释数据可视化。 我们不需要每次都需要查找类别的含义。

使用数据透视表和图表可视化数据 (Visualize data with a pivot table and charts)

Now that you cleaned the data, let’s visualize it.

现在您已经清理了数据,让我们对其可视化。

The data visualization type depends on the question you are asking.

数据可视化类型取决于您要询问的问题。

In this tutorial, we’ll be using:

在本教程中,我们将使用:

- a pivot table for tabular data visualization 用于表格数据可视化的数据透视表

- a bar chart 条形图

为数据透视表准备数据 (Prepare data for the pivot table)

Before loading data to the pivot table, convert the dataframe to an array of JSON objects. For this, use the to_json() function from the json module.

在将数据加载到数据透视表之前,将数据帧转换为JSON对象数组。 为此,请使用json模块中的to_json()函数。

The records orientation is needed to make sure the data is aligned according to the format the pivot table requires.

需要records方向,以确保数据根据数据透视表所需的格式对齐。

json_data = df.to_json(orient=”records”)

json_data = df.to_json(orient=”records”)

创建数据透视表 (Create a pivot table)

Next, define a pivot table object and feed it with the data. Note that the data has to be deserialized using the loads() function that decodes JSON:

接下来,定义数据透视表对象并向其提供数据。 请注意,必须使用可解码JSON的loads()函数对数据进行反序列化:

pivot_table = {

"container": "#pivot-container",

"componentFolder": "https://cdn.flexmonster.com/",

"toolbar": True,

"report": {

"dataSource": {

"type": "json",

"data": json.loads(json_data)

},

"slice": {

"rows": [{

"uniqueName": "weekday"

}],

"columns": [{

"uniqueName": "[Measures]"

}],

"measures": [{

"uniqueName": "count",

"aggregation": "median"

}],

"sorting": {

"column": {

"type": "desc",

"tuple": [],

"measure": {

"uniqueName": "count",

"aggregation": "median"

}

}

}

}

}

}In the above pivot table initialization, we specified a simple report that consists of a slice (a set of fields visible on the grid), data source, options, formats, etc. We also specified a container where the pivot table should be rendered. The container will be defined a bit later.

在上述数据透视表初始化中,我们指定了一个简单的报告,该报告由一个切片(网格上可见的一组字段),数据源,选项,格式等组成。我们还指定了一个应在其中呈现数据透视表的容器。 稍后将定义容器。

Plus, here we can add a mapping object to prettify the field captions or set their data types. Using this object eliminates the need in modifying the data source.

另外,在这里我们可以添加一个映射对象来美化字段标题或设置其数据类型。 使用此对象消除了修改数据源的需要。

Next, convert the pivot table object to a JSON-formatted string to be able to pass it for rendering in the HTML layout:

接下来,将数据透视表对象转换为JSON格式的字符串,以便能够将其传递以在HTML布局中呈现:

pivot_json_object = json.dumps(pivot_table)

pivot_json_object = json.dumps(pivot_table)

定义仪表板布局 (Define a dashboard layout)

Define a function that renders the pivot table in the cell:

定义一个在单元格中呈现数据透视表的函数:

In this function, we call HTML() from the IPython.display module — it will render the layout enclosed into a multi-line string.

在此函数中,我们从IPython.display模块调用HTML() - 它会 将布局呈现为多行字符串。

Next, let’s call this function and pass to it the pivot table previously encoded into JSON:

接下来,让我们调用此函数并将之前编码为JSON的数据透视表传递给它:

render_pivot_table(pivot_json_object)

render_pivot_table(pivot_json_object)

Likewise, you can create and render as many data visualization components as you need. For example, interactive pivot charts that visualize aggregated data:

同样,您可以根据需要创建和呈现任意数量的数据可视化组件 。 例如,可视化聚合数据的交互式数据透视图 :

下一步是什么 (What’s next)

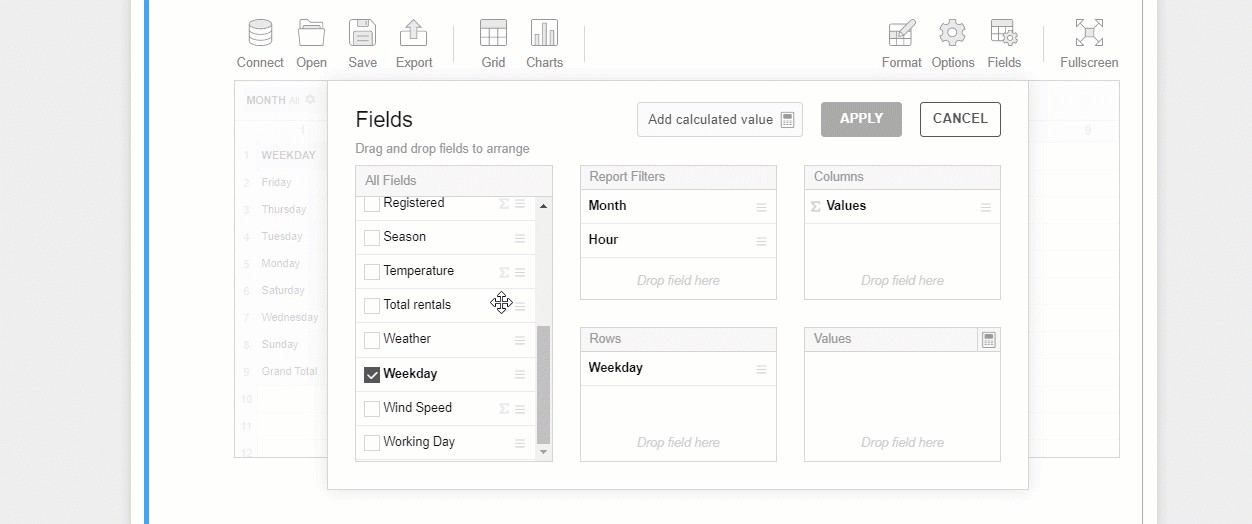

Now that you embedded the pivot table into Jupyter, it’s time to start exploring your data:

现在,您已将数据透视表嵌入Jupyter中,是时候开始探索数据了:

drag and drop fields to rows, columns, and measures of the pivot table

将字段拖放到数据透视表的行,列和度量

set Excel-like filtering

设置类似Excel的过滤

highlight important values with conditional formatting

使用条件格式突出显示重要的值

At any moment, you can save your results to a JSON or PDF/Excel/HTML report.

您随时可以将结果保存到JSON或PDF / Excel / HTML报告中。

例子 (Examples)

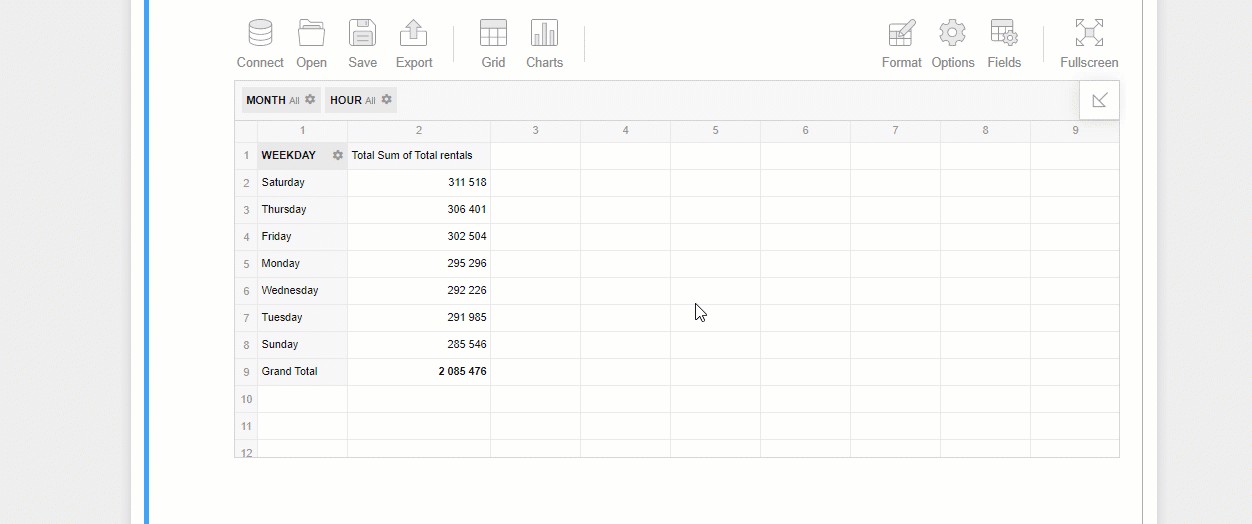

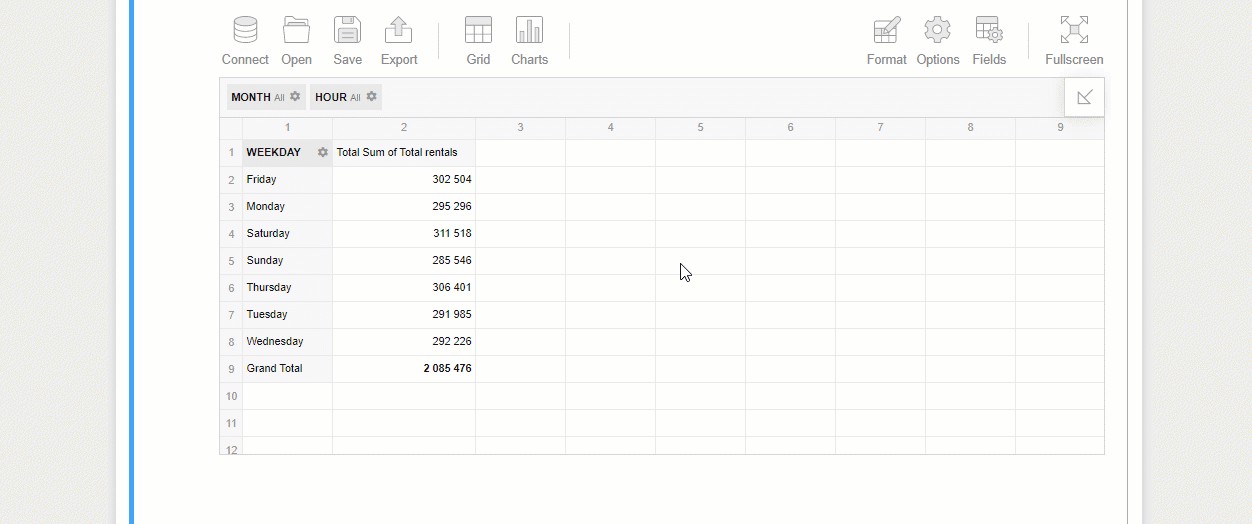

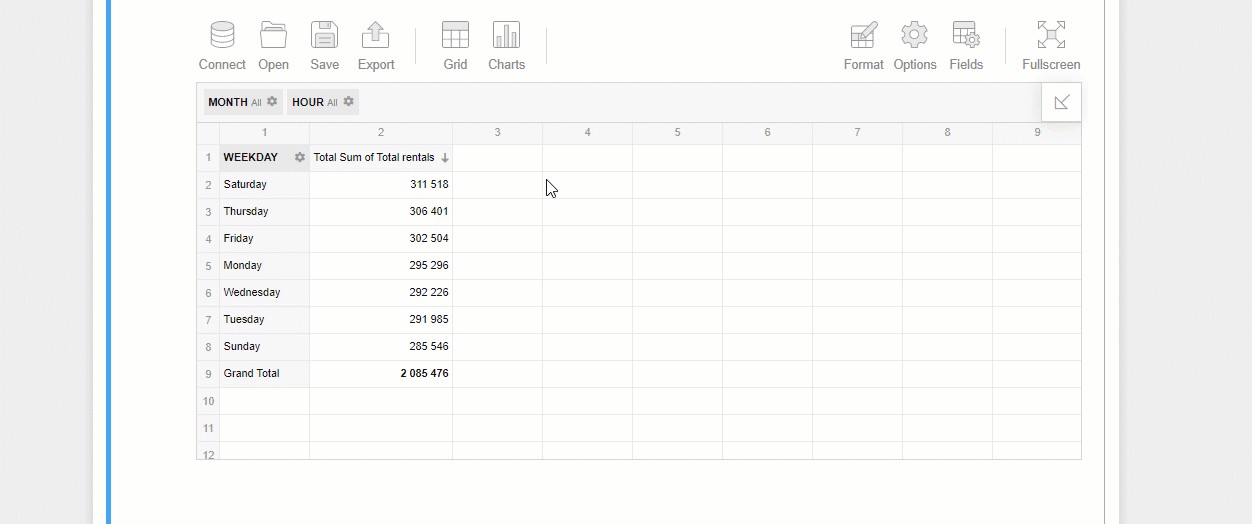

Here is how you can try identifying trends on bikes usage depending on the day of the week:

您可以按照以下方式尝试确定自行车使用情况的趋势,具体取决于星期几:

You can also figure out if any weather conditions affect the number of rents by registered and unregistered users:

您还可以确定是否有任何天气情况影响注册和未注册用户的租金数量:

To dig deeper into the data, drill through aggregated values by double-clicking and see the raw records they are composed of:

要通过双击深入挖掘数据, 追溯汇总值,看看它们是由原始的记录:

Or simply switch to the pivot charts mode and give your data an even more comprehensible look:

或者,只需切换到数据透视图模式,即可使您的数据看起来更清晰:

汇集全部 (Bringing it all together)

By completing this tutorial, you learned a new way to interactively explore your multi-dimensional data in Jupyter Notebook using Python and the JavaScript data visualization library. I hope this will make your exploration process more insightful than before.

通过完成本教程,您学习了一种使用Python和JavaScript数据可视化库在Jupyter Notebook中交互式浏览多维数据的新方法。 我希望这将使您的探索过程比以往更有见识。

有用的链接 (Useful links)

Jupyter Notebook dashboard sample

Jupyter Notebook仪表板示例

Web pivot table live demo

Web数据透视表实时演示

Pythonic Data Cleaning With Pandas and NumPy

使用Pandas和NumPy进行Pythonic数据清理

Exploratory Data Analysis With Python and Pandas on Coursera

在Coursera上使用Python和Pandas进行探索性数据分析

翻译自: https://medium.com/python-in-plain-english/data-visualization-with-python-and-javascript-c1c28a7212b2

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如若转载,请注明出处:http://www.mzph.cn/news/389446.shtml

如若内容造成侵权/违法违规/事实不符,请联系多彩编程网进行投诉反馈email:809451989@qq.com,一经查实,立即删除!

)

)