Following the previous article on resolving Python dependencies, we will now take a look at software quality. This article covers “inspections” of software stacks and links to a free dataset available on Kaggle. Even though the title mentions the quality of “machine learning software”, the principles and ideas can be reused for inspecting the quality of any software.

Application (Software & Hardware) Stack

Let’s consider a Python machine learning application. This application can use a machine learning library, such as TensorFlow. In that case TensorFlow is a direct dependency of the application: once it is installed, the application uses TensorFlow directly and TensorFlow’s own dependencies indirectly. Examples of such indirect dependencies are NumPy and absl-py, both of which are used by TensorFlow.
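
To make the distinction between direct and indirect dependencies concrete, here is a minimal sketch. The graph below is a hand-written, heavily simplified assumption (the name `my-ml-app` is made up and real TensorFlow releases pull in many more packages); it only illustrates how indirect dependencies follow from walking the graph.

```python
from collections import deque

# Hypothetical, simplified dependency graph: package -> its direct dependencies.
DEPENDENCIES = {
    "my-ml-app": ["tensorflow"],
    "tensorflow": ["numpy", "absl-py"],
    "numpy": [],
    "absl-py": [],
}


def split_dependencies(graph, root):
    """Return (direct, indirect) dependency sets of `root` using a breadth-first walk."""
    direct = set(graph.get(root, []))
    seen = set(direct)
    queue = deque(direct)
    while queue:
        package = queue.popleft()
        for dependency in graph.get(package, []):
            if dependency not in seen:
                seen.add(dependency)
                queue.append(dependency)
    # Everything reachable that is not a direct dependency is indirect.
    return direct, seen - direct


direct, indirect = split_dependencies(DEPENDENCIES, "my-ml-app")
print("direct:", sorted(direct))
print("indirect:", sorted(indirect))
```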

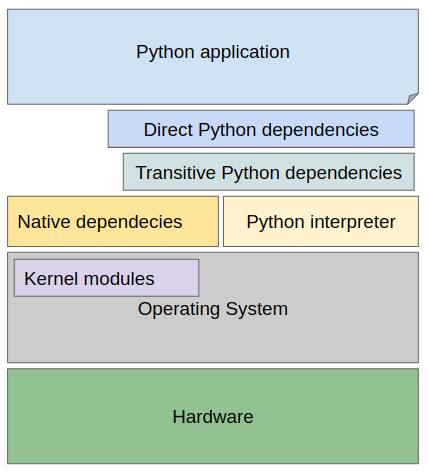

Our machine learning Python application and all the Python libraries run on top of a Python interpreter in some specific version. Moreover, they can use additional native dependencies (provided by the operating system) such as glibc or CUDA (if running computations on a GPU). To visualize this, let’s draw a stack of all the items that make up the application, running on top of some hardware.

Note that an issue in any of the described layers can cause our Python application to misbehave, produce wrong output, raise runtime errors, or simply not start at all.
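
Since a problem can originate in any layer, it helps to record what each layer looks like on the machine at hand. The following is a small sketch using only Python's standard `platform` module; the function name and the selection of fields are assumptions made for illustration, and GPU-related layers such as CUDA are not covered here.

```python
import platform


def describe_stack_layers():
    """Collect basic facts about the layers below the Python libraries."""
    # libc_ver() returns empty strings when the C library cannot be detected.
    libc_name, libc_version = platform.libc_ver()
    return {
        "python_implementation": platform.python_implementation(),
        "python_version": platform.python_version(),
        "libc": f"{libc_name} {libc_version}".strip() or "unknown",
        "operating_system": platform.system(),
        "machine": platform.machine(),
    }


layers = describe_stack_layers()
for layer, value in layers.items():
    print(f"{layer}: {value}")
```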

Let’s try to identify possible issues in the described stack by building the software and running it on our hardware. By doing so, we can spot issues before pushing our application to a production environment, or fine-tune the software so that we get the best out of our application on the hardware available.

On-demand software stack creation

If our application depends on TensorFlow starting with version 2.0.0 (e.g. it requires the API offered by tensorflow>=2.0.0), we can test it with different versions of TensorFlow up to 2.3.0, the latest release available on PyPI at the time of writing. The same applies to transitive dependencies of TensorFlow, e.g. absl-py, NumPy, or any other: the version of a transitive dependency can be changed in the same way as the version of any other dependency in our software stack.
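
As a rough sketch of picking the versions to test, the snippet below filters a list of releases against a lower bound. The release list is an illustrative subset rather than a complete PyPI listing, and the version parsing is deliberately naive (plain `X.Y.Z` only); real tooling would follow PEP 440, for instance through the `packaging` library.

```python
def parse_version(version):
    """Turn a plain 'X.Y.Z' string into a tuple of ints so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


# Illustrative subset of TensorFlow releases (assumption, not a full listing).
AVAILABLE = ["1.15.0", "2.0.0", "2.1.0", "2.2.0", "2.3.0"]


def versions_in_range(available, minimum):
    """Keep the versions satisfying `>= minimum`."""
    lower_bound = parse_version(minimum)
    return [v for v in available if parse_version(v) >= lower_bound]


candidates = versions_in_range(AVAILABLE, "2.0.0")
for version in candidates:
    # Each line is one pinned requirement to try the application with.
    print(f"tensorflow=={version}")
```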

Dependency Monkey

Note that a single version change can completely change (or even invalidate) which dependencies, and in which versions, end up in the application stack, given the dependency graph and the version range specifications of the libraries involved. To create a pinned-down list of packages in specific versions to be installed, a resolver needs to be run to resolve the packages and their version range requirements.

Do you remember the state space described in the first article of the “How to beat Python’s pip” series? Dependency Monkey can in fact create the state space of all the possible software stacks that can be resolved while respecting version range specifications. If the state space is too large to resolve in a reasonable time, it can be sampled.
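
The idea of a state space and of sampling it can be sketched with brute force. This is not Dependency Monkey's actual algorithm: the candidate versions and the `is_valid` rule are assumptions invented for illustration, standing in for real version range specifications.

```python
import itertools
import random

# Hypothetical candidate versions per package (illustrative only).
CANDIDATES = {
    "tensorflow": ["2.1.0", "2.2.0", "2.3.0"],
    "numpy": ["1.17.0", "1.18.0", "1.19.0"],
    "absl-py": ["0.9.0", "0.10.0"],
}


def is_valid(stack):
    """Made-up constraint standing in for real version range requirements."""
    return not (stack["tensorflow"] == "2.3.0" and stack["numpy"] == "1.19.0")


def state_space(candidates):
    """Yield every combination of versions that passes the constraint check."""
    names = sorted(candidates)
    for combination in itertools.product(*(candidates[name] for name in names)):
        stack = dict(zip(names, combination))
        if is_valid(stack):
            yield stack


all_stacks = list(state_space(CANDIDATES))

# When the space is too large to test exhaustively, draw a random sample instead.
rng = random.Random(42)  # fixed seed so the sample is reproducible
sample = rng.sample(all_stacks, k=5)
for stack in sample:
    print(stack)
```

Enumerating the full Cartesian product grows multiplicatively with every package added, which is why sampling becomes necessary for realistic stacks.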

A component called “Dependency Monkey” is capable of creating different software stacks considering the dependency graph and the version specifications of the packages in it. All of this is done offline, based on pre-computed results from Thoth’s solver runs (see the previous article in the “How to beat Python’s pip” series). The results of solver runs are synced into Thoth’s database so that they are available in a queryable form. Doing so enables Dependency Monkey to resolve software stacks at a fast pace (see a YouTube video on optimizing Thoth’s resolver). Moreover, the underlying algorithm can consider Python packages published on different Python indices (besides PyPI, it can also use custom TensorFlow builds from an index such as the AICoE one). We will explain Dependency Monkey in more depth in one of the upcoming articles. If you are too eager to wait, feel free to browse its online documentation.

Amun API

Now, let’s utilize a service called “Amun”. This service was designed to accept a specification of the software stack and hardware and execute an application given the specification.

Amun is an OpenShift-native application that utilizes OpenShift features (such as builds, the container image registry, …) and Argo Workflows to run the desired software on specific hardware in a specific software environment. The specification is accepted in JSON format and is subsequently translated into the steps needed to test that the given stack builds and runs.
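
To give a feel for what such a JSON specification could contain, here is a purely illustrative sketch. Every field name below is hypothetical and is not Amun's actual schema; consult Amun's documentation for the real format. The snippet only shows the general shape: a software part, a hardware part, and something to execute.

```python
import json

# NOTE: all field names and values are hypothetical, chosen for illustration only.
specification = {
    "software": {
        "python_version": "3.8",
        "packages": ["tensorflow==2.3.0", "numpy==1.18.5"],
    },
    "hardware": {"cpu_cores": 2, "memory": "4Gi"},
    "script": "print('hello from the inspected stack')",
}


def validate(spec):
    """Check that the three illustrative top-level sections are present."""
    missing = [key for key in ("software", "hardware", "script") if key not in spec]
    if missing:
        raise ValueError(f"specification is missing sections: {missing}")
    return spec


# Serialize the specification the way it could be sent to a service.
payload = json.dumps(validate(specification), indent=2)
print(payload)
```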

The video linked above shows how Amun inspections are run and how the knowledge created is aggregated using OpenShift, Argo Workflows, and Ceph. You can see inspections of different TensorFlow builds (tensorflow, tensorflow-cpu, intel-tensorflow) and of community builds of TensorFlow with AVX2 instruction set support, available on the AICoE index.

Thoth’s inspection dataset on Kaggle

We (Red Hat) have produced multiple inspections as part of the project Thoth where we tested different TensorFlow releases and different TensorFlow builds.

One such dataset is Thoth’s performance dataset in version 1 on Kaggle. It consists of nearly 4000 files capturing information about inspection runs of TensorFlow stacks. A notebook published together with the dataset can help you explore it.
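
A first look at such a collection of files could be sketched as follows. This assumes the inspection results are stored as one JSON document per file in a local directory; the directory name and both helper functions are hypothetical, and the notebook published with the dataset remains the better guide to its real layout.

```python
import json
from collections import Counter
from pathlib import Path


def load_inspections(directory):
    """Load every *.json file in `directory` (assumed layout: one document per file)."""
    documents = []
    for path in sorted(Path(directory).glob("*.json")):
        with path.open() as handle:
            documents.append(json.load(handle))
    return documents


def summarize_keys(documents):
    """Count how often each top-level key occurs across the loaded documents."""
    counts = Counter()
    for document in documents:
        if isinstance(document, dict):
            counts.update(document.keys())
    return counts


if __name__ == "__main__":
    # "inspections" is a placeholder for wherever the dataset was downloaded.
    inspections = load_inspections("inspections")
    print(f"loaded {len(inspections)} documents")
    for key, count in summarize_keys(inspections).most_common():
        print(f"{key}: {count}")
```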

Project Thoth

Project Thoth is an application that aims to help Python developers. If you wish to be updated on any improvements and any progress we make in project Thoth, feel free to subscribe to our YouTube channel where we post updates as well as recordings from scrum demos.

Stay tuned for any updates!

Translated from: https://towardsdatascience.com/how-to-beat-pythons-pip-inspecting-the-quality-of-machine-learning-software-f1a028f0c42a
