I'm trying to use Scrapy to download data from a website automatically.

What I want to do: log in to the website with my credentials,

then select the data I want by typing a code into the "RIC" field and choosing the period I'm interested in:

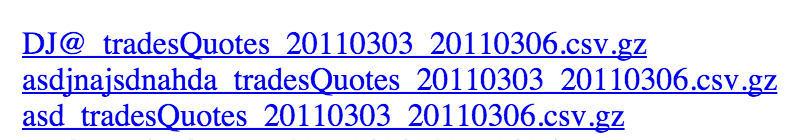

After I click "Get data", a .csv file is generated, which I can then download from the "download/" URL where all my files are listed.

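I can log in using `FormRequest`, so I tried the same approach to fill in the "RIC" code and click the "Get data" button, but that fails. (For now I'm not changing the dates; I just want to understand how it works.)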

Here is my code:

```python
import scrapy


class DmozSpider(scrapy.Spider):
    name = "dmoz"
    allowed_domains = ["myDomain"]
    start_urls = [
        "http://myDomain/dataServices/"
    ]

    def parse(self, response):
        # Fill in and submit the login form with my credentials
        return scrapy.FormRequest.from_response(
            response,
            formdata={'username': 'myName', 'password': 'myPass'},
            callback=self.after_login
        )

    def after_login(self, response):
        # check login succeed before going on
        # (response.text is a str; response.body is bytes on Python 3)
        if "Your email adress and password did not match" in response.text:
            print("\n\nFAIL\n\n")
            self.logger.error("Login failed")
            return
        else:
            print("\n\n LOGIN SUCCESSFUL \n\n")
            # Save the page shown after login so it can be inspected
            filename = response.url.split("/")[-2] + '.html'
            with open(filename, 'wb') as f:
                f.write(response.body)

            ## THIS FAILS !!!
            return scrapy.http.FormRequest.from_response(
                response,
                formxpath='//input[@value = ""]',
                formdata={'value': 'DJ@'},
                clickdata={'value': 'Get data'},
                callback=self.foo
            )

    def foo(self, response):
        # Callback for the "Get data" request (placeholder: body not shown)
        pass
```
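`FormRequest.from_response` builds the POST body from the selected form's fields, keyed by each input's `name` attribute; `formdata` overrides or adds entries by that name, and `clickdata` picks which submit button's name/value pair is included. So `formdata={'value': 'DJ@'}` sends an extra field literally named `value` and leaves the RIC field at its default. The stdlib-only sketch below is a simplified model of that merging, not Scrapy's actual implementation (it ignores `select`, `textarea`, checkboxes and pages with several forms). Because the form HTML isn't shown, the field names `ric`, `token` and `action` are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlencode, parse_qs


class FormFields(HTMLParser):
    """Collect name -> value pairs from <input> tags; keep submit buttons apart."""

    def __init__(self):
        super().__init__()
        self.fields = {}
        self.submits = []  # (name, value) of each submit button

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        a = dict(attrs)
        name = a.get("name")
        if name is None:
            return  # unnamed inputs are never submitted
        if (a.get("type") or "text").lower() == "submit":
            self.submits.append((name, a.get("value") or ""))
        else:
            self.fields[name] = a.get("value") or ""


def build_body(html, formdata, click_value):
    """Simplified model: form defaults, overridden by formdata, plus the clicked button."""
    parser = FormFields()
    parser.feed(html)
    data = dict(parser.fields)
    data.update(formdata)  # overrides are keyed by the input's *name*
    for name, value in parser.submits:
        if value == click_value:
            data[name] = value
            break
    return urlencode(data)


# Hypothetical form: the real field names must be read from the page's HTML.
FORM = """
<form action="/dataServices/" method="post">
  <input type="text" name="ric" value="">
  <input type="hidden" name="token" value="abc">
  <input type="submit" name="action" value="Get data">
</form>
"""

good = parse_qs(build_body(FORM, {"ric": "DJ@"}, "Get data"), keep_blank_values=True)
bad = parse_qs(build_body(FORM, {"value": "DJ@"}, "Get data"), keep_blank_values=True)
print(good)  # RIC field filled in
print(bad)   # RIC field still empty, plus a stray "value" field
```

In the spider, the corresponding change would be to key `formdata` by the RIC input's actual `name` attribute. Note also that `formxpath` selects the form enclosing the first element it matches, so a very generic expression like `//input[@value = ""]` may not pick the intended form.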

This is the HTML of the form:


The errors I get in the log:

```
2015-10-20 18:49:53 [scrapy] DEBUG: Retrying (failed 1 times): 500 Internal Server Error
2015-10-20 18:49:53 [scrapy] DEBUG: Retrying (failed 2 times): 500 Internal Server Error
2015-10-20 18:49:53 [scrapy] DEBUG: Gave up retrying (failed 3 times): 500 Internal Server Error
2015-10-20 18:49:53 [scrapy] DEBUG: Crawled (500) (referer: http://myDomain/dataServices/)
2015-10-20 18:49:53 [scrapy] DEBUG: Ignoring response <500 http://myDomain/dataServices/>: HTTP status code is not handled or not allowed
```
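The log shows that, with Scrapy's defaults, the 500 response is first retried and then dropped by the HTTP error middleware, so the server's error page never reaches the callback. As a debugging aid, a settings fragment like the one below lets that page through to `self.foo`, where `response.status` and `response.body` can be inspected (for example to see which field the server complains about). It assumes the default `RETRY_HTTP_CODES` list of recent Scrapy versions; check the default for the version in use. The per-spider `handle_httpstatus_list` attribute is an alternative to `HTTPERROR_ALLOWED_CODES`.

```python
# settings.py (fragment), intended for debugging only.

# Pass 500 responses to the spider callback instead of ignoring them.
HTTPERROR_ALLOWED_CODES = [500]

# Assumed default retry codes with 500 removed, so the first error page
# is delivered right away instead of being retried.
RETRY_HTTP_CODES = [502, 503, 504, 522, 524, 408, 429]
```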

Any idea what I'm doing wrong?
