1. Hive configuration file

Add a hive-site.xml file to the spark/conf directory and configure MySQL as the database that stores the metadata.

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://192.168.150.1:3306/spark_metadata_db?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
    <description>Username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>admin</value>
    <description>password to use against metastore database</description>
  </property>
  <!-- Print column names in Hive query output -->
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
  </property>
  <!-- Show the current database name -->
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
  </property>
</configuration>
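Before starting Spark, it can help to confirm that hive-site.xml is well-formed XML, since a literal & inside the JDBC URL has to be written as &amp; or the file will not parse. The following is a minimal sketch in Python using only the standard library. The helper name read_properties and the inline SAMPLE string are assumptions for illustration; in practice you would read the real file from the conf directory.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample mirroring part of the hive-site.xml above.
# For a real check, read the file instead, e.g. open("conf/hive-site.xml").read().
SAMPLE = """<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://192.168.150.1:3306/spark_metadata_db?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
</configuration>
"""


def read_properties(xml_text):
    """Return {name: value} for every <property> in a Hadoop-style config.

    Raises xml.etree.ElementTree.ParseError if the text is not well-formed.
    """
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.iter("property")
    }


props = read_properties(SAMPLE)
for name, value in props.items():
    print(f"{name} = {value}")
```

After parsing, the &amp; entity is decoded back to a plain &, so the printed URL is the one the JDBC driver actually receives.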

2. Start the spark-sql shell

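--driver-class-path adds extra entries to the driver's classpath. The driver connects to the metastore, which is MySQL here, so it points to mysql-connector-java-5.1.26-bin.jar.

--jars lists extra JAR files to make available to the executors. The SQL below operates on a MySQL table over JDBC, so the executors also need mysql-connector-java-5.1.26-bin.jar.

--total-executor-cores is the total number of cores to use across all executors. By default, all available cores are used.

--executor-memory is the amount of memory allocated to each executor. The default is 1g.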

cd ~/software/spark-2.4.4-bin-hadoop2.6

bin/spark-sql --master spark://hadoop01:7077,hadoop02:7077,hadoop03:7077 --driver-class-path /home/mk/mysql-connector-java-5.1.26-bin.jar --jars /home/mk/mysql-connector-java-5.1.26-bin.jar --total-executor-cores 2 --executor-memory 1g
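If the shell is launched from a script, the same command line can be assembled from named values so that the connector JAR path is written only once. This is a minimal sketch in Python, not a Spark API: the helper build_spark_sql_command and the constant names are hypothetical, and the paths and host names are copied from the command above.

```python
import shlex

# Values copied from the command above; adjust them for your own cluster.
CONNECTOR_JAR = "/home/mk/mysql-connector-java-5.1.26-bin.jar"
MASTERS = ["hadoop01:7077", "hadoop02:7077", "hadoop03:7077"]


def build_spark_sql_command(total_cores=2, executor_memory="1g"):
    """Return the spark-sql argument list (hypothetical helper, not part of Spark)."""
    return [
        "bin/spark-sql",
        "--master", "spark://" + ",".join(MASTERS),
        # The driver needs the connector to reach the MySQL metastore.
        "--driver-class-path", CONNECTOR_JAR,
        # The executors need it to read and write MySQL tables over JDBC.
        "--jars", CONNECTOR_JAR,
        "--total-executor-cores", str(total_cores),
        "--executor-memory", executor_memory,
    ]


command = build_spark_sql_command()
print(shlex.join(command))
```

The printed line can be pasted into a terminal from the Spark installation directory, or the list can be passed to subprocess.run with cwd set to that directory.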

Before starting the shell:

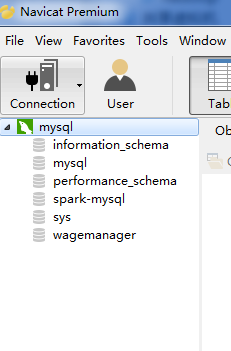

After starting the shell:

The spark_metadata_db database has been created in MySQL.

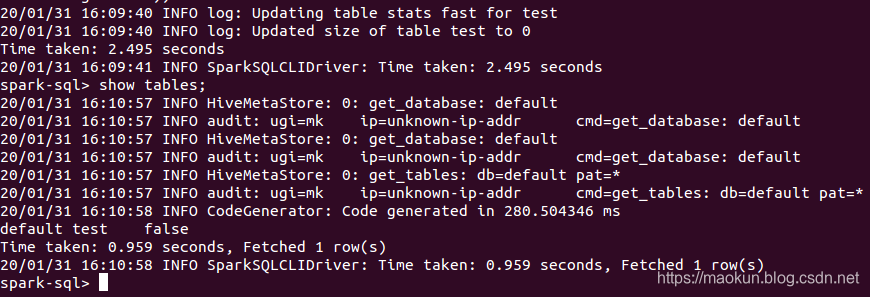

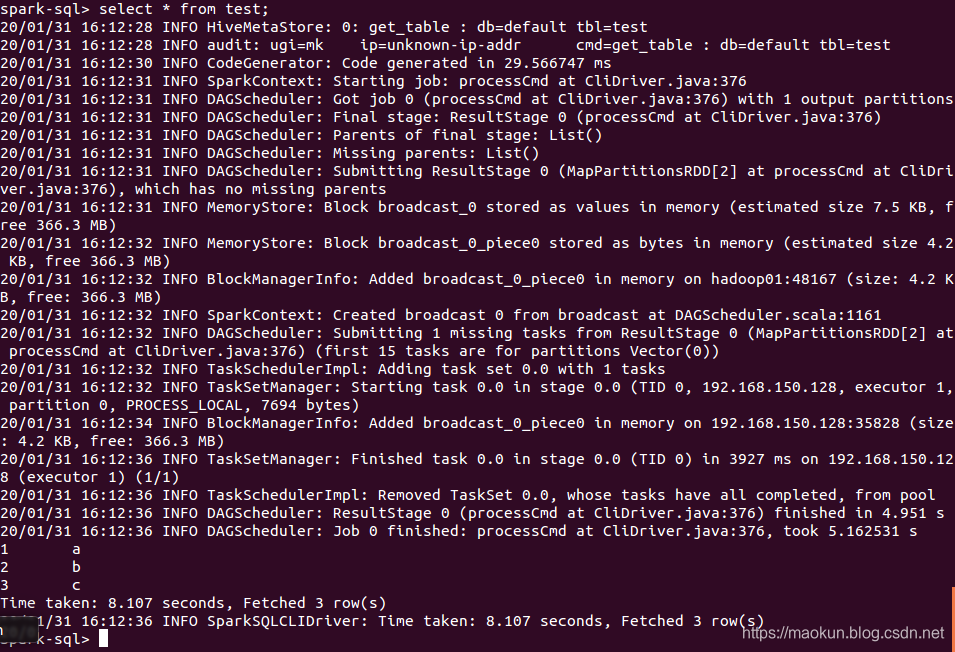

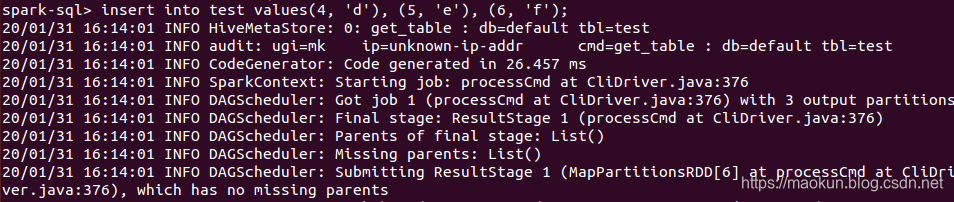

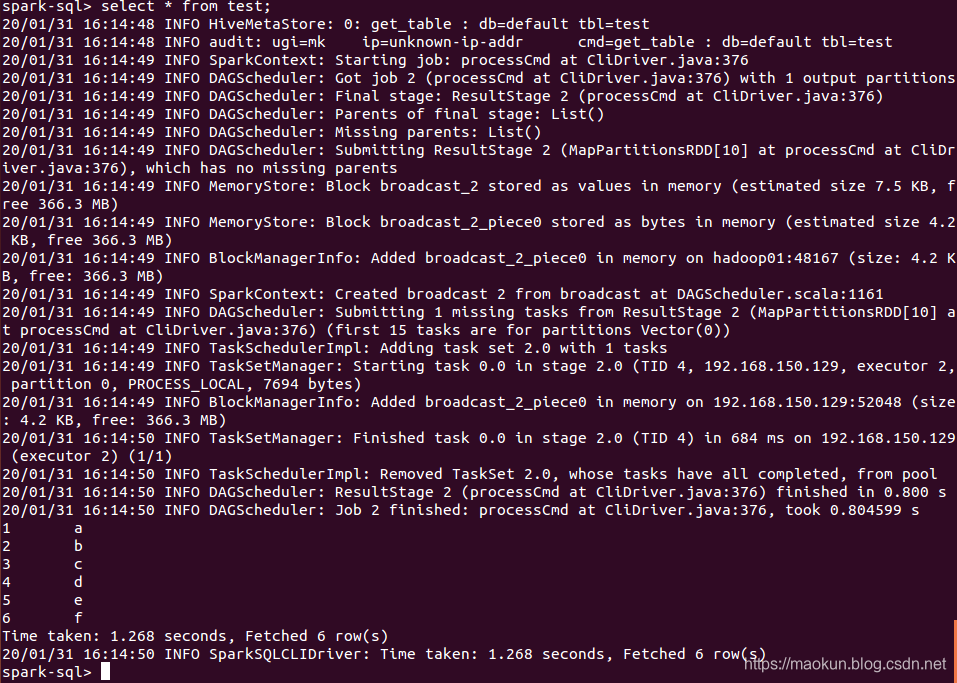

3. Run SQL

show tables;
create table test(id int, name string) USING org.apache.spark.sql.jdbc options(url 'jdbc:mysql://192.168.150.1:3306/spark-mysql?user=root&password=admin', dbtable 'test_a');
show tables;
select * from test;
insert into test values(4, 'd'), (5, 'e'), (6, 'f');
select * from test;
