
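`dictMenu = {'卡布奇洛': 32, '摩卡': 30, '抹茶蛋糕': 28, '布朗尼': 26}` stores the prices of your afternoon set for two (two coffees, cappuccino and mocha, and two desserts, matcha cake and brownie). Write a program that has Python calculate and print the total bill.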

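    # Menu from the exercise: item name -> price in yuan
    dictMenu = {'卡布奇洛': 32, '摩卡': 30, '抹茶蛋糕': 28, '布朗尼': 26}

    # Add up the price of every item on the menu
    total = 0
    for price in dictMenu.values():
        total += price

    print(f"Total: {total} yuan")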
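
The same total can be computed without an explicit loop. This is a minimal sketch, assuming the menu dictionary from the exercise; the built-in `sum` adds up the values directly.

```python
# Menu from the exercise: item name -> price in yuan
dictMenu = {'卡布奇洛': 32, '摩卡': 30, '抹茶蛋糕': 28, '布朗尼': 26}

# sum() accepts any iterable of numbers, including dict.values()
total = sum(dictMenu.values())
print(f"Total: {total} yuan")
```

The loop version makes the accumulation visible, which suits a beginner exercise; `sum` is the more idiomatic choice once the pattern is familiar.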

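Use the dictionary data type to write a learnable chatbot that holds a simple question-and-answer conversation. (a) Build the initial dialogue dictionary yourself, for example: `memory = {'What are you doing?': 'Breathing and thinking of you', 'Which day do you like?': 'The day I chat with you', 'What are you thinking about?': "I'm thinking of you"}`. (b) When the bot cannot answer a question, ask the user for the answer and update the dictionary. (c) A single space marks the end of the chat.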

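    def chat_bot():
        # Initial dialogue dictionary: question -> answer
        memory = {
            "What are you doing?": "Breathing and thinking of you",
            "Which day do you like?": "The day I chat with you",
            "What are you thinking about?": "I'm thinking of you",
        }
        while True:
            question = input("You: ")
            if question == " ":
                # A single space ends the chat; check it before the lookup
                print("Bot: Goodbye!")
                break
            if question in memory:
                print("Bot: " + memory[question])
            else:
                # Unknown question: ask the user for an answer and remember it
                answer = input("Bot: Sorry, I don't know how to answer that. Please tell me the answer: ")
                memory[question] = answer


    chat_bot()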
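
The lookup-or-learn step can also be separated from the keyboard input, which makes it easy to try without typing. This is a minimal sketch; `reply` and `teach` are hypothetical names used only for illustration, and unlike the program above it returns the newly learned answer instead of only storing it.

```python
def reply(memory, question, teach):
    """Return the bot's answer; learn one through teach() if it is unknown."""
    if question not in memory:
        # Ask the caller-supplied function for an answer and remember it
        memory[question] = teach(question)
    return memory[question]


# Small sample dictionary: question -> answer
memory = {"What are you doing?": "Breathing and thinking of you"}

# First call: unknown question, so the teach callback supplies the answer
first = reply(memory, "What is your name?", lambda q: "Bot")
# Second call: the answer now comes from the updated dictionary
second = reply(memory, "What is your name?", lambda q: "never used")
print(first, second)
```

In an interactive program, `teach` could simply wrap `input`, so the dictionary update stays in one place.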

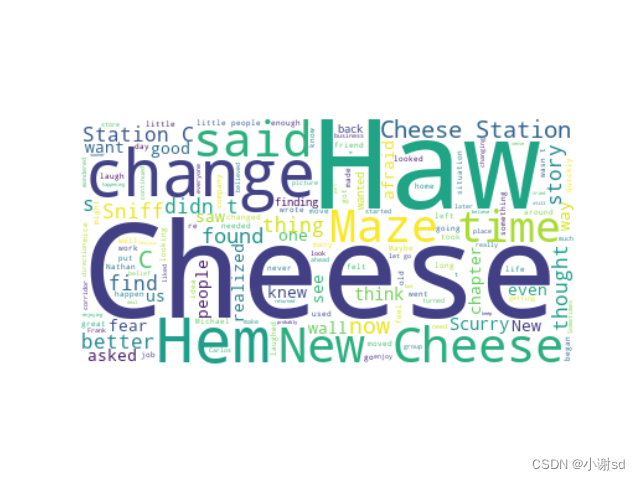

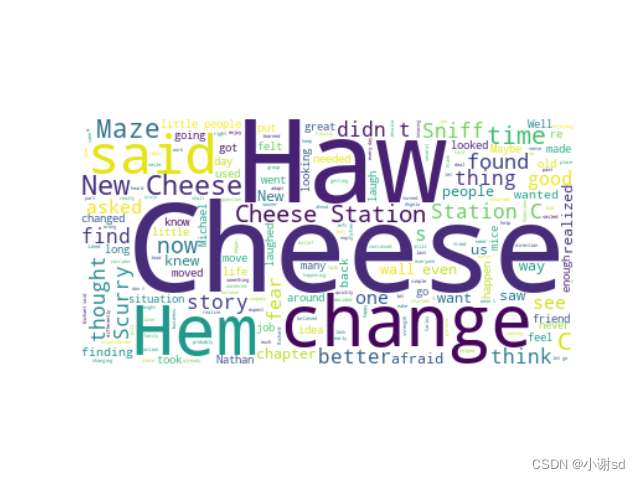

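Using the contents of the file "Who Moved My Cheese.txt", first count English word frequencies, then draw two English word clouds: one built from the word frequencies and one built from the full English text string. Set the background color to white, and display and save the images as "MyCheese freq.jpg" and "MyCheese text.jpg". Third-party libraries allowed: wordcloud and matplotlib. Hint: the statements for reading the file and removing Chinese characters are `txt = open(file, 'r', encoding="utf-8").read()` and `english_only_txt = ''.join(x for x in txt if ord(x) < 256)`.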

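    # Install the third-party libraries first: pip install wordcloud matplotlib
    import string
    from collections import Counter

    import matplotlib.pyplot as plt
    from wordcloud import WordCloud

    # Read the file and drop Chinese characters (code points of 256 and above)
    file = "Who Moved My Cheese.txt"
    with open(file, "r", encoding="utf-8") as f:
        txt = f.read()
    english_only_txt = "".join(x for x in txt if ord(x) < 256)

    # English word frequencies: lowercase, turn punctuation into spaces, split
    cleaned = english_only_txt.lower()
    for ch in string.punctuation:
        cleaned = cleaned.replace(ch, " ")
    counts = Counter(cleaned.split())

    # Word cloud 1: built from the word-frequency dictionary
    wordcloud = WordCloud(background_color="white").generate_from_frequencies(counts)
    plt.imshow(wordcloud, interpolation="bilinear")
    plt.axis("off")
    plt.savefig("MyCheese freq.jpg", dpi=300)
    plt.show()

    # Word cloud 2: built from the full English text string
    wordcloud = WordCloud(background_color="white").generate_from_text(english_only_txt)
    plt.imshow(wordcloud, interpolation="bilinear")
    plt.axis("off")
    plt.savefig("MyCheese text.jpg", dpi=300)
    plt.show()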
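
The word-frequency step can be tried on a short string before running it on the whole file. This is a minimal sketch using only the standard library; `count_words` is a hypothetical helper and the sample sentence is made up. The resulting `Counter` is a dictionary of word counts, which is the kind of input `WordCloud.generate_from_frequencies` expects.

```python
import re
from collections import Counter


def count_words(text):
    """Count lowercase English words; apostrophes inside a word are kept."""
    words = re.findall(r"[a-z]+(?:'[a-z]+)*", text.lower())
    return Counter(words)


# Made-up sample text standing in for the file contents
sample = "Who moved my cheese? The cheese moved, and it's gone."
counts = count_words(sample)
print(counts.most_common(3))
```

Using a regular expression instead of `split` keeps punctuation out of the words, so "cheese?" and "cheese" are counted together.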


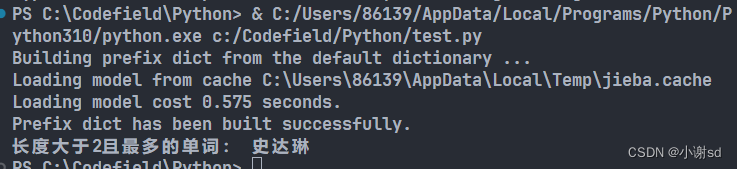

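Read the contents of "沉默的羔羊.txt" (The Silence of the Lambs), segment the text into words, and output the most frequent word whose length is greater than 2. If several words share the same frequency, output the one that is largest in Unicode order. Use the jieba library. (Expected answer: 史达琳)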

    # Install the jieba library first: pip install jieba
    import jieba

    # Read the file contents
    file = "沉默的羔羊.txt"
    with open(file, "r", encoding="utf-8") as f:
        txt = f.read()

    # Segment the text into words
    seg_list = jieba.lcut(txt)

    # Count the words whose length is greater than 2
    word_count = {}
    for word in seg_list:
        if len(word) > 2:
            word_count[word] = word_count.get(word, 0) + 1

    # Collect every word that reaches the highest frequency
    max_frequency = max(word_count.values())
    max_words = []
    for word, frequency in word_count.items():
        if frequency == max_frequency:
            max_words.append(word)

    # Sort by Unicode order and output the largest word
    result = sorted(max_words)[-1]
    print("Most frequent word longer than 2 characters:", result)
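
The "highest frequency, then largest Unicode order" rule can also be written as a single `max` call over tuples. This is a minimal sketch; the counts below are made-up values standing in for the real jieba result.

```python
# Hypothetical counts standing in for the jieba word counts
word_count = {"史达琳": 5, "克劳福德": 5, "莱克特": 3}

# Compare (frequency, word) tuples: the highest frequency wins, and among
# equal frequencies the word that sorts last in Unicode order wins
result = max(word_count, key=lambda w: (word_count[w], w))
print(result)
```

Tuples compare element by element, so the word itself is only consulted when two frequencies are equal.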


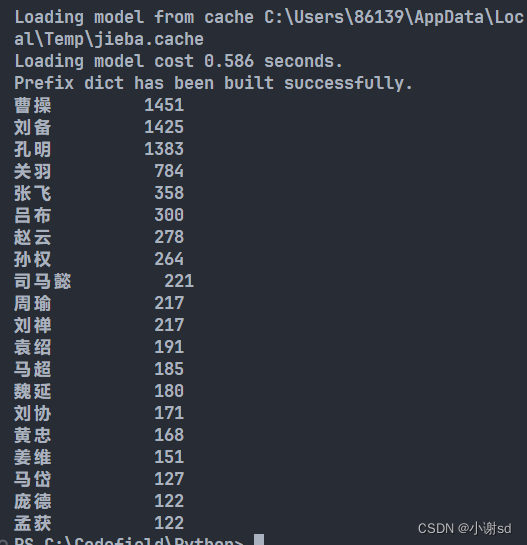

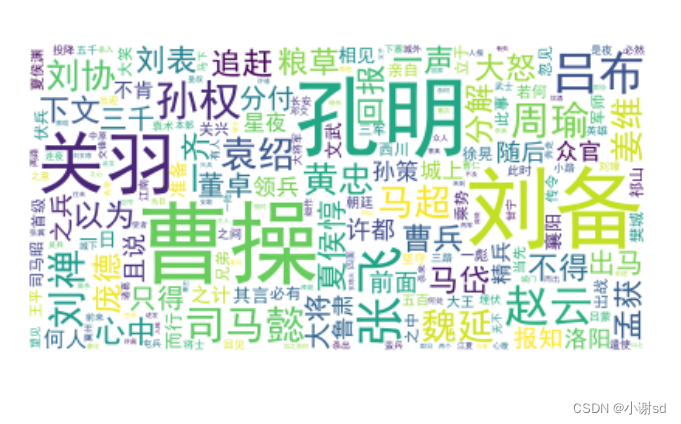

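Read the contents of "三国演义.txt" (Romance of the Three Kingdoms) and count how many times each character appears. Requirements: 1. Output the names and appearance counts of the top 15 characters, using jieba. 2. Draw a word cloud of those top 15 character names based on their appearance counts, using the wordcloud library.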

    import jieba
    import matplotlib.pyplot as plt
    from wordcloud import WordCloud

    # Frequent words that are not character names
    excludes = {"将军", "却说", "荆州", "二人", "不可", "不能", "如此", "商议", "如何",
                "主公", "军士", "左右", "军马", "引兵", "次日", "大喜", "天下", "东吴",
                "于是", "今日", "不敢", "魏兵", "陛下", "一人", "都督", "人马", "不知",
                "汉中", "只见", "众将", "蜀兵", "上马", "大叫", "太守", "此人", "夫人",
                "后人", "背后", "城中", "一面", "何不", "大军", "忽报", "先生", "百姓",
                "何故", "然后", "先锋", "不如", "赶来", "原来", "令人", "江东", "下马",
                "喊声", "正是", "徐州", "忽然", "因此", "成都", "不见", "未知", "大败",
                "大事", "之后", "一军", "引军", "起兵", "军中", "接应", "进兵", "大惊", "可以"}

    with open("三国演义.txt", "r", encoding="utf-8") as f:
        txt = f.read()
    words = jieba.lcut(txt)

    counts = {}
    for word in words:
        if len(word) == 1:
            continue
        # Merge the different names used for the same character
        elif word == "诸葛亮" or word == "孔明曰":
            rword = "孔明"
        elif word == "关公" or word == "云长":
            rword = "关羽"
        elif word == "玄德" or word == "玄德曰" or word == "先主":
            rword = "刘备"
        elif word == "孟德" or word == "丞相":
            rword = "曹操"
        elif word == "后主":
            rword = "刘禅"
        elif word == "天子":
            rword = "刘协"
        else:
            rword = word
        counts[rword] = counts.get(rword, 0) + 1

    # pop() with a default avoids a KeyError when an excluded word never occurred
    for word in excludes:
        counts.pop(word, None)

    # Sort by count in descending order and keep the top 15
    items = sorted(counts.items(), key=lambda x: x[1], reverse=True)
    top15 = items[:15]
    for word, count in top15:
        print("{0:<10}{1:>5}".format(word, count))

    # Word cloud of the top 15 character names; a Chinese font file is required
    wordcloud = WordCloud(font_path="simhei.ttf", background_color="white").generate_from_frequencies(dict(top15))
    plt.figure(figsize=(8, 8))
    plt.imshow(wordcloud, interpolation="bilinear")
    plt.axis("off")
    plt.show()
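
The chain of `elif` branches that merges character names can also be expressed as a lookup table. This is a minimal sketch, assuming the same alias pairs as the solution above; `ALIASES` and `count_names` are hypothetical names, and the token list is made up to stand in for the output of `jieba.lcut`.

```python
# Alias -> canonical name, mirroring the elif branches of the solution
ALIASES = {
    "诸葛亮": "孔明", "孔明曰": "孔明",
    "关公": "关羽", "云长": "关羽",
    "玄德": "刘备", "玄德曰": "刘备", "先主": "刘备",
    "孟德": "曹操", "丞相": "曹操",
    "后主": "刘禅",
    "天子": "刘协",
}


def count_names(words):
    """Count words of length 2 or more after mapping aliases to one name."""
    counts = {}
    for word in words:
        if len(word) == 1:
            continue
        # Fall back to the word itself when it is not a known alias
        name = ALIASES.get(word, word)
        counts[name] = counts.get(name, 0) + 1
    return counts


# Made-up token list standing in for jieba.lcut(txt)
tokens = ["玄德", "曰", "孔明", "诸葛亮", "玄德曰", "关公"]
counts = count_names(tokens)
print(counts)
```

Adding another alias then means adding one dictionary entry rather than another branch.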

[Python] Basic Exercises: Composite Data Types (2)

