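This deployment uses four preconfigured nodes: ceph-0, ceph-1, ceph-2, and ceph-3 (they come from an earlier Ceph test environment, so the hostnames were left unchanged).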

Get fcfs.sh

mkdir /etc/fcfs

cd /etc/fcfs

wget http://fastcfs.net/fastcfs/ops/fcfs.sh

Configure /etc/fcfs/fcfs.settings

# Cluster version to install (for example: 5.0.0)

fastcfs_version=4.3.0

# IPs on which to install the fuseclient; separate multiple IPs with commas

fuseclient_ips=172.17.163.105,172.17.112.206,172.17.227.100,172.17.67.157
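The settings file above can also be generated from a script, which helps when the same cluster is rebuilt repeatedly. The following is a minimal sketch; the function name `write_fcfs_settings` is hypothetical, and only the two keys shown above are written.

```shell
# Hypothetical helper: write an fcfs.settings file with the two keys used above.
# $1: output path, $2: FastCFS version, $3: comma-separated fuseclient IP list
write_fcfs_settings() {
    cat > "$1" <<EOF
# Cluster version to install (for example: 5.0.0)
fastcfs_version=$2
# IPs on which to install the fuseclient; separate multiple IPs with commas
fuseclient_ips=$3
EOF
}

# Example (not executed here):
# write_fcfs_settings /etc/fcfs/fcfs.settings 4.3.0 172.17.163.105,172.17.112.206,172.17.227.100,172.17.67.157
```

Keeping the values as arguments makes it easy to switch the version later without editing the file by hand.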

The conf directory

[root@ceph-0 fcfs]# cd /etc/fcfs/

[root@ceph-0 fcfs]# wget http://fastcfs.net/fastcfs/ops/fcfs-config-sample.tar.gz

--2023-11-21 10:37:55-- http://fastcfs.net/fastcfs/ops/fcfs-config-sample.tar.gz

Resolving fastcfs.net (fastcfs.net)... 39.106.8.170

Connecting to fastcfs.net (fastcfs.net)|39.106.8.170|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4569 (4.5K) [application/octet-stream]

Saving to: ‘fcfs-config-sample.tar.gz’

fcfs-config-sample.tar.gz 100%[==================================================================================>] 4.46K --.-KB/s in 0s

2023-11-21 10:37:55 (79.9 MB/s) - ‘fcfs-config-sample.tar.gz’ saved [4569/4569]

[root@ceph-0 fcfs]# tar -xvf fcfs-config-sample.tar.gz

conf/

conf/fcfs/

conf/fcfs/fuse.conf

conf/auth/

conf/auth/server.conf

conf/auth/client.conf

conf/auth/session.conf

conf/auth/cluster.conf

conf/auth/keys/

conf/auth/keys/session_validate.key

conf/auth/auth.conf

conf/vote/

conf/vote/server.conf

conf/vote/client.conf

conf/vote/cluster.conf

conf/fdir/

conf/fdir/storage.conf

conf/fdir/server.conf

conf/fdir/client.conf

conf/fdir/cluster.conf

conf/fstore/

conf/fstore/storage.conf

conf/fstore/server.conf

conf/fstore/client.conf

conf/fstore/cluster.conf

cluster.conf

[root@ceph-0 fcfs]# vim conf/auth/cluster.conf

[root@ceph-0 fcfs]# vim conf/fdir/cluster.conf

[root@ceph-0 fcfs]# vim conf/fstore/cluster.conf

[root@ceph-0 fcfs]# vim conf/vote/cluster.conf

Edit the server entries

[server-1]

host = 172.17.163.105

[server-2]

host = 172.17.112.206

[server-3]

host = 172.17.227.100

[server-4]

host = 172.17.67.157

Storage parameter: store-path

[root@ceph-0 fcfs]# vim conf/fdir/storage.conf

[store-path-1]

# the path to store the file

# default value is the data path of storage engine

path = /opt/fastcfs/fdir/db

[root@ceph-0 fcfs]# vim conf/fstore/storage.conf

[store-path-1]

# the path to store the file

path = /opt/faststore/data

Initialize LVM (run on every node)

pv

[root@ceph-0 fcfs]# pvcreate /dev/vdb

Physical volume "/dev/vdb" successfully created.

vg

[root@ceph-0 fcfs]# vgcreate vg_fastcfs /dev/vdb

Volume group "vg_fastcfs" successfully created

lv

[root@ceph-0 fcfs]# lvcreate -y -L 50G -n lv_fastcfs_fdir1 vg_fastcfs

Wiping ceph_bluestore signature on /dev/vg_fastcfs/lv_fastcfs_fdir1.

Logical volume "lv_fastcfs_fdir1" created.

[root@ceph-0 fcfs]# lvcreate -y -l 100%FREE -n lv_fastcfs_fstore1 vg_fastcfs

Logical volume "lv_fastcfs_fstore1" created.

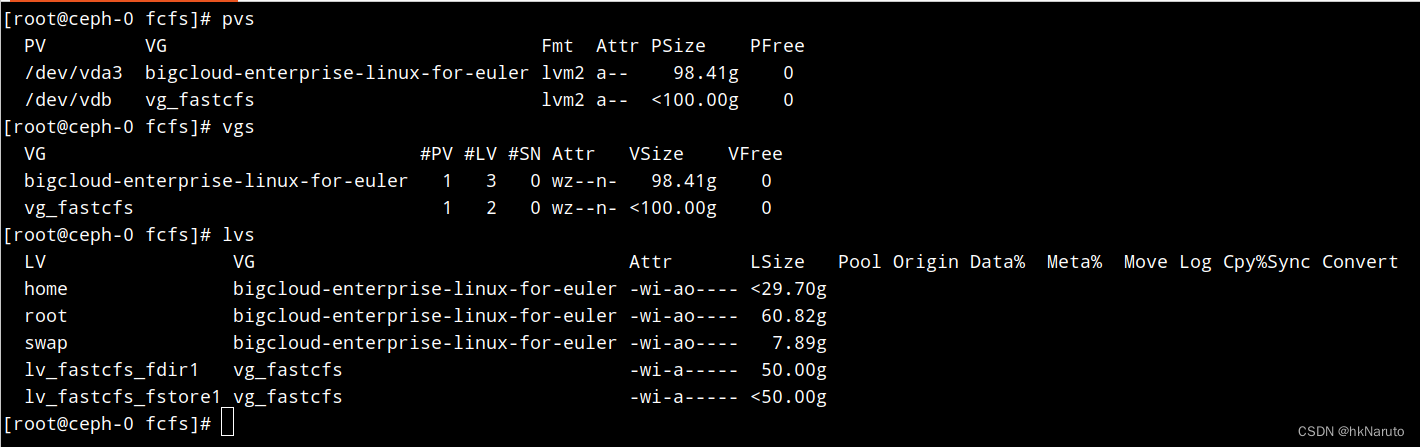

Final LVM state

Mount the storage directories (run on every node)

Create the data directories

[root@ceph-0 fcfs]# mkdir -p /opt/fastcfs/fdir/db

[root@ceph-0 fcfs]# mkdir -p /opt/faststore/data

Configure /etc/fstab

Add these two lines:

/dev/mapper/vg_fastcfs-lv_fastcfs_fdir1 /opt/fastcfs/fdir/db xfs defaults 0 0

/dev/mapper/vg_fastcfs-lv_fastcfs_fstore1 /opt/faststore/data xfs defaults 0 0

Format as XFS

[root@ceph-0 fcfs]# mkfs.xfs /dev/mapper/vg_fastcfs-lv_fastcfs_fdir1

meta-data=/dev/mapper/vg_fastcfs-lv_fastcfs_fdir1 isize=512 agcount=4, agsize=3276800 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=13107200, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=6400, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Discarding blocks...Done.

[root@ceph-0 fcfs]# mkfs.xfs /dev/mapper/vg_fastcfs-lv_fastcfs_fstore1

meta-data=/dev/mapper/vg_fastcfs-lv_fastcfs_fstore1 isize=512 agcount=4, agsize=3276544 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=13106176, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=6399, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Discarding blocks...Done.

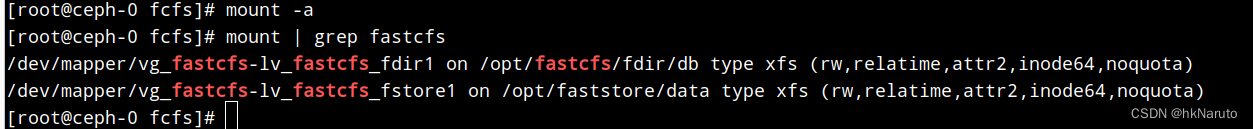

Mount

mount -a
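Appending the two fstab lines with a plain `echo ... >> /etc/fstab` adds duplicates if the step is repeated. The sketch below only appends an entry when the device is not listed yet; the function name `add_fstab_entry` is hypothetical, and the filesystem type and options are fixed to the values used in this post.

```shell
# Hypothetical helper: append an xfs entry to an fstab file only if the
# device is not already present in the first column.
# $1: fstab path, $2: device, $3: mount point
add_fstab_entry() {
    if ! awk -v d="$2" '$1 == d { found = 1 } END { exit !found }' "$1" 2>/dev/null; then
        printf '%s %s xfs defaults 0 0\n' "$2" "$3" >> "$1"
    fi
}

# Example (not executed here):
# add_fstab_entry /etc/fstab /dev/mapper/vg_fastcfs-lv_fastcfs_fdir1 /opt/fastcfs/fdir/db
# add_fstab_entry /etc/fstab /dev/mapper/vg_fastcfs-lv_fastcfs_fstore1 /opt/faststore/data
```

Running the helper twice leaves a single line per device, so the per-node initialization can be re-run safely.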

Initialize the other three nodes

pvcreate /dev/vdb

vgcreate vg_fastcfs /dev/vdb

lvcreate -y -L 50G -n lv_fastcfs_fdir1 vg_fastcfs

lvcreate -y -l 100%FREE -n lv_fastcfs_fstore1 vg_fastcfs

mkdir -p /opt/fastcfs/fdir/db

mkdir -p /opt/faststore/data

echo "/dev/mapper/vg_fastcfs-lv_fastcfs_fdir1 /opt/fastcfs/fdir/db xfs defaults 0 0" >> /etc/fstab

echo "/dev/mapper/vg_fastcfs-lv_fastcfs_fstore1 /opt/faststore/data xfs defaults 0 0" >> /etc/fstab

mkfs.xfs /dev/mapper/vg_fastcfs-lv_fastcfs_fdir1

mkfs.xfs /dev/mapper/vg_fastcfs-lv_fastcfs_fstore1

mount -a

Adapt for BigCloud (i.e., bclinux)

修改脚本,差异如下[root@ceph-0 fcfs]# diff -Npr fcfs.sh fcfs.sh.bak

*** fcfs.sh 2023-11-21 13:45:20.742219402 +0800

--- fcfs.sh.bak 2023-11-21 13:41:49.109731058 +0800

*************** declare -ir MIN_VERSION_OF_Anolis=7

*** 22,28 ****declare -ir MIN_VERSION_OF_Amazon=2declare -ir MIN_VERSION_OF_openEuler=20declare -ir MIN_VERSION_OF_UOS=20

! YUM_OS_ARRAY=(Red Rocky Oracle Fedora CentOS AlmaLinux Alibaba Anolis Amazon BigCloud openEuler Kylin UOS)APT_OS_ARRAY=(Ubuntu Debian Deepin)fcfs_settings_file="fcfs.settings"

--- 22,28 ----declare -ir MIN_VERSION_OF_Amazon=2declare -ir MIN_VERSION_OF_openEuler=20declare -ir MIN_VERSION_OF_UOS=20

! YUM_OS_ARRAY=(Red Rocky Oracle Fedora CentOS AlmaLinux Alibaba Anolis Amazon openEuler Kylin UOS)APT_OS_ARRAY=(Ubuntu Debian Deepin)fcfs_settings_file="fcfs.settings"

*************** check_remote_osname() {

*** 850,856 ****elseos_major_version=8fi

! elif [ $osname = 'BigCloud' ] || [ $osname = 'openEuler' ] || [ $osname = 'Kylin' ] || [ $osname = 'UOS' ]; thenos_major_version=8fielse

--- 850,856 ----elseos_major_version=8fi

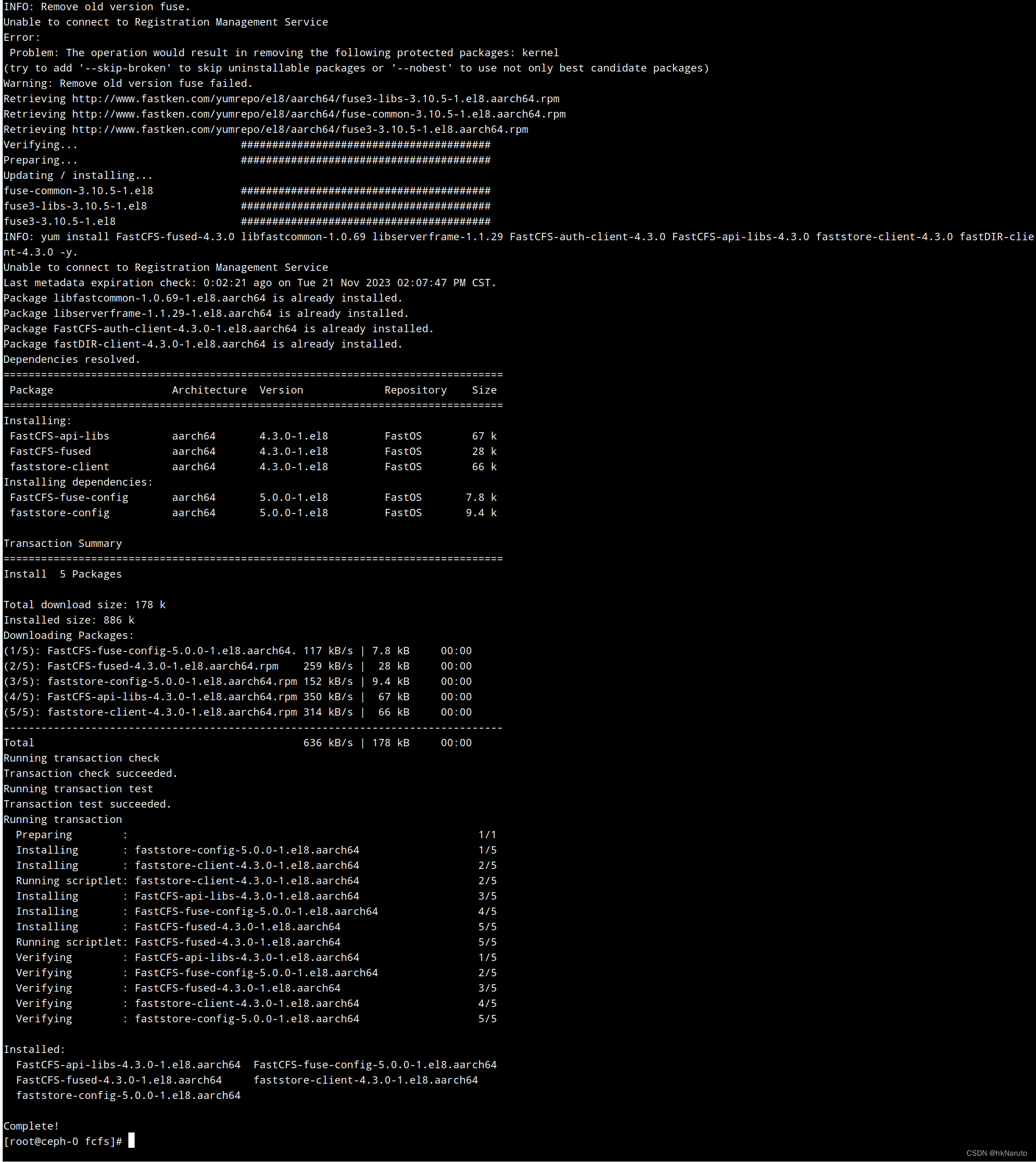

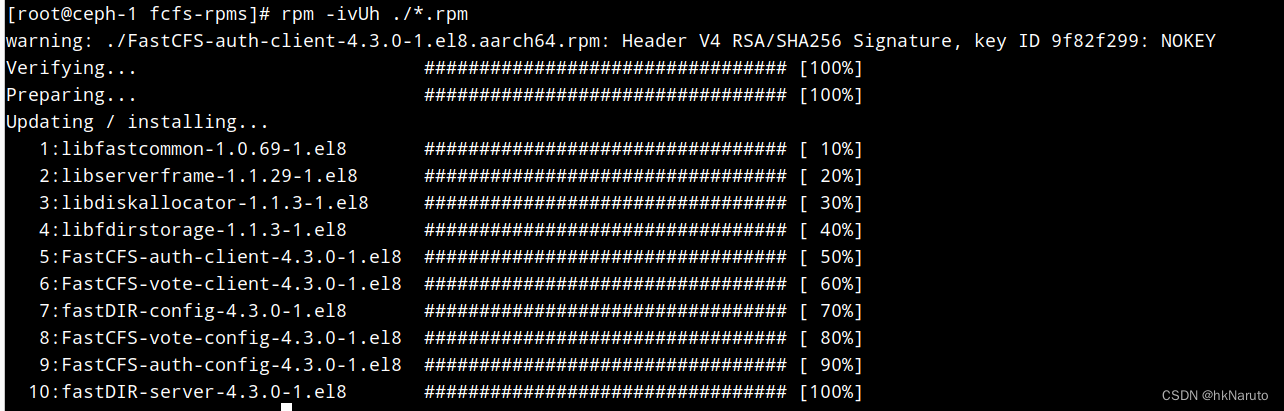

! elif [ $osname = 'openEuler' ] || [ $osname = 'Kylin' ] || [ $osname = 'UOS' ]; thenos_major_version=8fielse执行安装

bash fcfs.sh install

Distribute the configuration files

bash fcfs.sh config

List of distributed configuration files

[root@ceph-1 ~]# find /etc/fastcfs/

/etc/fastcfs/

/etc/fastcfs/fdir

/etc/fastcfs/fdir/cluster.conf

/etc/fastcfs/fdir/client.conf

/etc/fastcfs/fdir/server.conf

/etc/fastcfs/fdir/storage.conf

/etc/fastcfs/auth

/etc/fastcfs/auth/cluster.conf

/etc/fastcfs/auth/client.conf

/etc/fastcfs/auth/server.conf

/etc/fastcfs/auth/auth.conf

/etc/fastcfs/auth/session.conf

/etc/fastcfs/auth/keys

/etc/fastcfs/auth/keys/session_validate.key

/etc/fastcfs/vote

/etc/fastcfs/vote/cluster.conf

/etc/fastcfs/vote/client.conf

/etc/fastcfs/vote/server.conf

/etc/fastcfs/fcfs

/etc/fastcfs/fcfs/papi.conf

/etc/fastcfs/fcfs/fuse.conf

/etc/fastcfs/fstore

/etc/fastcfs/fstore/cluster.conf

/etc/fastcfs/fstore/client.conf

/etc/fastcfs/fstore/server.conf

/etc/fastcfs/fstore/storage.conf

/etc/fastcfs/fstore/dbstore.conf

Start the cluster

bash fcfs.sh start

View the cluster logs

bash fcfs.sh tail fdir

Issue: ERROR connect to fdir server Connection refused

[2023-11-21 14:21:26] ERROR - file: connection_pool.c, line: 140, connect to fdir server 172.17.112.206:11011 fail, errno: 111, error info: Connection refused

[2023-11-21 14:21:26] ERROR - file: connection_pool.c, line: 140, connect to fdir server 172.17.227.100:11011 fail, errno: 111, error info: Connection refused

[2023-11-21 14:21:26] ERROR - file: connection_pool.c, line: 140, connect to fdir server 172.17.67.157:11011 fail, errno: 111, error info: Connection refused

[2023-11-21 14:21:27] ERROR - file: connection_pool.c, line: 140, connect to fdir server 172.17.67.157:11011 fail, errno: 111, error info: Connection refused

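The errors cleared up on their own after a short while. errno 111 means nothing was listening on port 11011 yet, presumably because the fdir servers on the other nodes were still starting.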
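Instead of watching the log until the errors stop, a script can wait for the fdir port to accept connections. This is a sketch under assumptions: the function name `wait_for_port` is hypothetical, it relies on bash's `/dev/tcp` pseudo-path and the coreutils `timeout` command, and port 11011 is taken from the log lines above.

```shell
# Hypothetical helper: poll until host:port accepts a TCP connection.
# $1: host, $2: TCP port, $3: maximum seconds to wait (default 60)
wait_for_port() {
    host=$1
    port=$2
    deadline=$(( $(date +%s) + ${3:-60} ))
    while [ "$(date +%s)" -lt "$deadline" ]; do
        # bash opens a TCP connection for /dev/tcp/HOST/PORT;
        # timeout bounds a connect attempt that would otherwise hang
        if timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
            return 0
        fi
        sleep 1
    done
    return 1
}

# Example (not executed here):
# wait_for_port 172.17.112.206 11011 120 && fdir_cluster_stat
```

The function returns 0 as soon as the port is reachable and 1 if the deadline passes first.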

Check the status

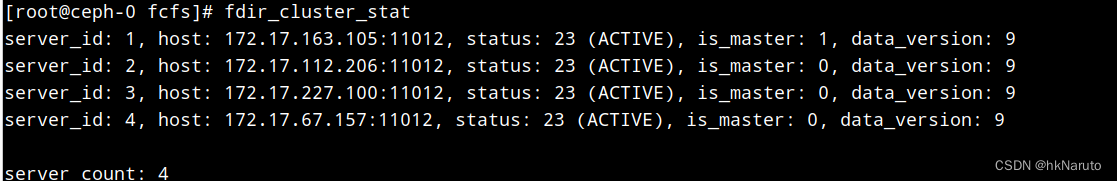

Check the FastDIR status

fdir_cluster_stat

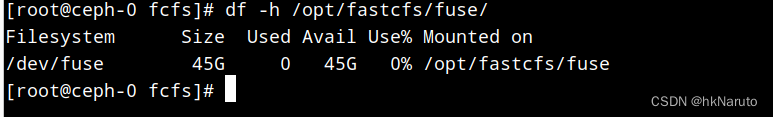

Check the space on the mounted directory

df -h /opt/fastcfs/fuse/

====

The other nodes were not in sync, so I upgraded directly to 5.0.0

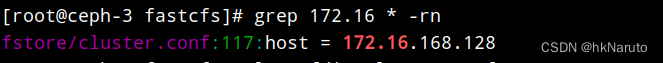

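Issue: fstore cluster IP configuration not synchronized

After running fcfs.sh config,

the host addresses in fstore/cluster.conf were wrong on every node except ceph-0!

The correct addresses

Manually edit /etc/fastcfs/fstore/cluster.conf on ceph-1, ceph-2, and ceph-3

Restart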

cd /etc/fcfs; ./fcfs.sh restart
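A mismatch like this is easy to miss, so it can help to compare the host lines of each node's cluster.conf against the copy on ceph-0. The sketch below assumes the `host = <ip>` format shown earlier in this post; the function names `list_hosts` and `same_hosts` are hypothetical.

```shell
# Hypothetical helper: print the value of every "host = ..." line in a
# cluster.conf-style file, one per line.
list_hosts() {
    sed -n 's/^[[:space:]]*host[[:space:]]*=[[:space:]]*//p' "$1"
}

# Hypothetical helper: succeed only if both files list the same hosts
# in the same order.
same_hosts() {
    [ "$(list_hosts "$1")" = "$(list_hosts "$2")" ]
}

# Example (not executed here): fetch a remote copy, then compare it.
# ssh ceph-1 cat /etc/fastcfs/fstore/cluster.conf > /tmp/ceph-1.cluster.conf
# same_hosts /etc/fcfs/conf/fstore/cluster.conf /tmp/ceph-1.cluster.conf || echo "ceph-1 differs"
```

Only the host values are compared, so differences in comments or other settings are ignored.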

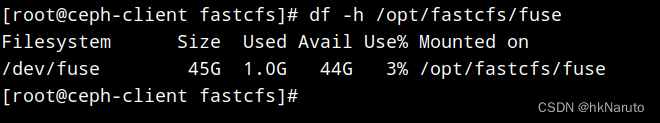

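It is basically working now (though I do not really know how to use it yet)

After writing a 1G file with a simple dd, the fuse directory on every node reflects it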

[root@ceph-0 fcfs]# ssh ceph-0 df -h /opt/fastcfs/fuse/

Filesystem Size Used Avail Use% Mounted on

/dev/fuse 45G 1.0G 44G 3% /opt/fastcfs/fuse

[root@ceph-0 fcfs]# ssh ceph-1 df -h /opt/fastcfs/fuse/

Filesystem Size Used Avail Use% Mounted on

/dev/fuse 45G 1.0G 44G 3% /opt/fastcfs/fuse

[root@ceph-0 fcfs]# ssh ceph-2 df -h /opt/fastcfs/fuse/

Filesystem Size Used Avail Use% Mounted on

/dev/fuse 45G 1.0G 44G 3% /opt/fastcfs/fuse

[root@ceph-0 fcfs]# ssh ceph-3 df -h /opt/fastcfs/fuse/

Filesystem Size Used Avail Use% Mounted on

/dev/fuse 45G 1.0G 44G 3% /opt/fastcfs/fuse
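The four ssh calls above follow the same pattern, so they can be folded into a small loop. This is a sketch: the function name `for_each_node` and the `RUN` override are hypothetical, while the node names are the four hosts used in this post.

```shell
# Hypothetical helper: run the same command on each of the four nodes.
# RUN defaults to ssh; set RUN=echo for a dry run that only prints
# the node name followed by the command.
for_each_node() {
    for node in ceph-0 ceph-1 ceph-2 ceph-3; do
        ${RUN:-ssh} "$node" "$@"
    done
}

# Example (not executed here):
# for_each_node df -h /opt/fastcfs/fuse/
```

The dry-run mode is useful for checking the command line before it touches every node.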

Client

rpm -ivh http://www.fastken.com/yumrepo/el8/noarch/FastOSrepo-1.0.1-1.el8.noarch.rpm

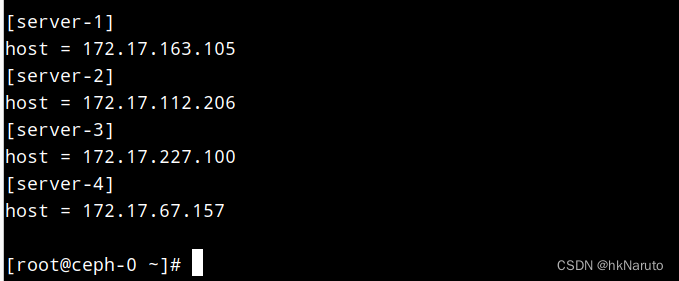

yum install -y FastCFS-fused-5.0.0

Configure cluster.conf

[root@ceph-client fastcfs]# vim /etc/fastcfs/fstore/cluster.conf

vim /etc/fastcfs/auth/cluster.conf

vim /etc/fastcfs/fdir/cluster.conf

[server-1]

host = 172.17.163.105

[server-2]

host = 172.17.112.206

[server-3]

host = 172.17.227.100

[server-4]

host = 172.17.67.157
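The same four server entries are typed into several cluster.conf files, which is where copy errors creep in. The sketch below prints the `[server-N]` sections from a comma-separated IP list so they can be pasted in; the function name `gen_server_sections` is hypothetical, and it emits only these sections, not the rest of a cluster.conf.

```shell
# Hypothetical helper: print [server-N] / host sections for a
# comma-separated list of IPs, numbered from 1.
gen_server_sections() {
    i=1
    for ip in $(printf '%s' "$1" | tr ',' ' '); do
        printf '[server-%d]\nhost = %s\n\n' "$i" "$ip"
        i=$((i + 1))
    done
}

# Example (not executed here):
# gen_server_sections 172.17.163.105,172.17.112.206,172.17.227.100,172.17.67.157
```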

systemctl daemon-reload

systemctl start fastcfs

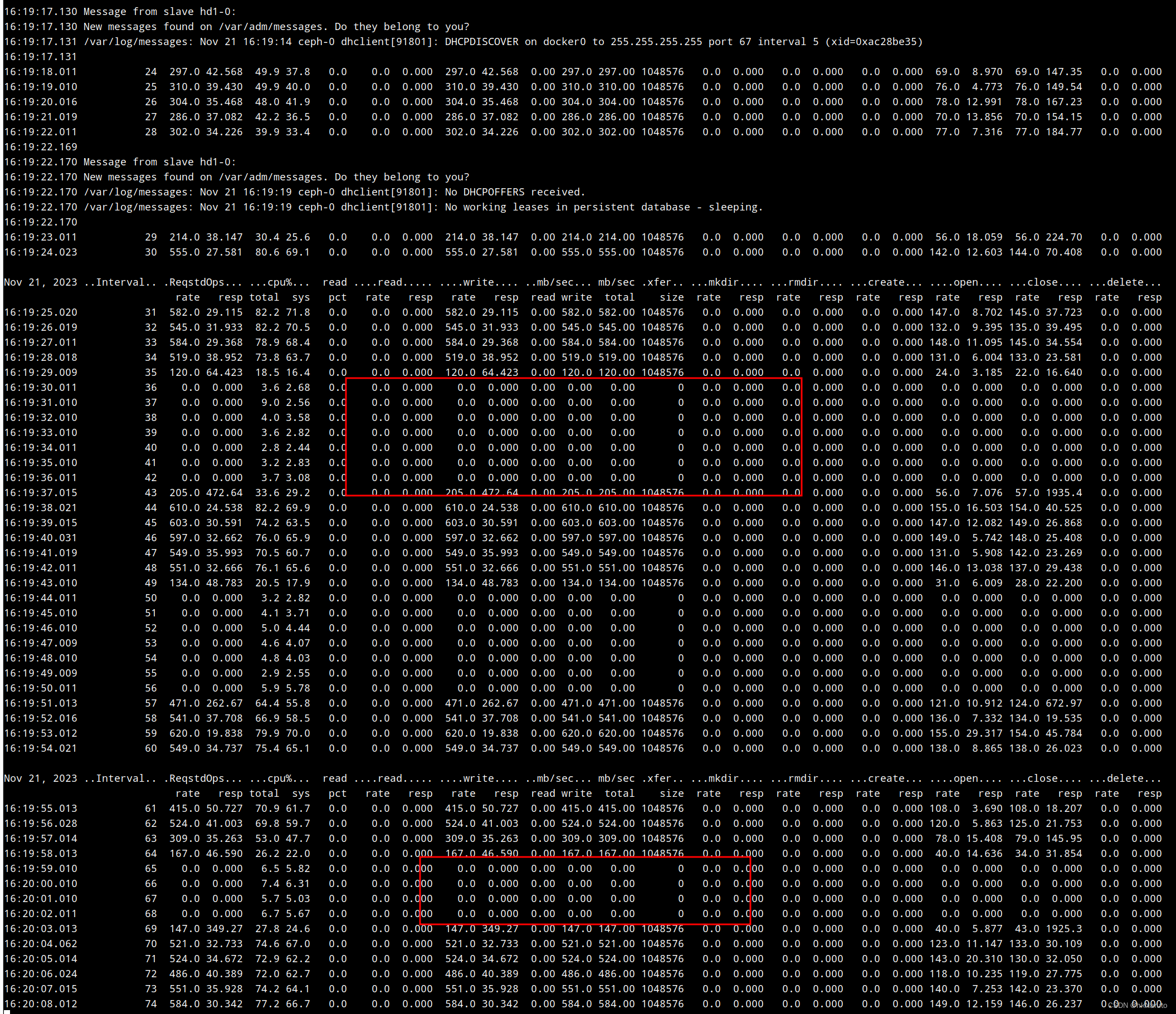

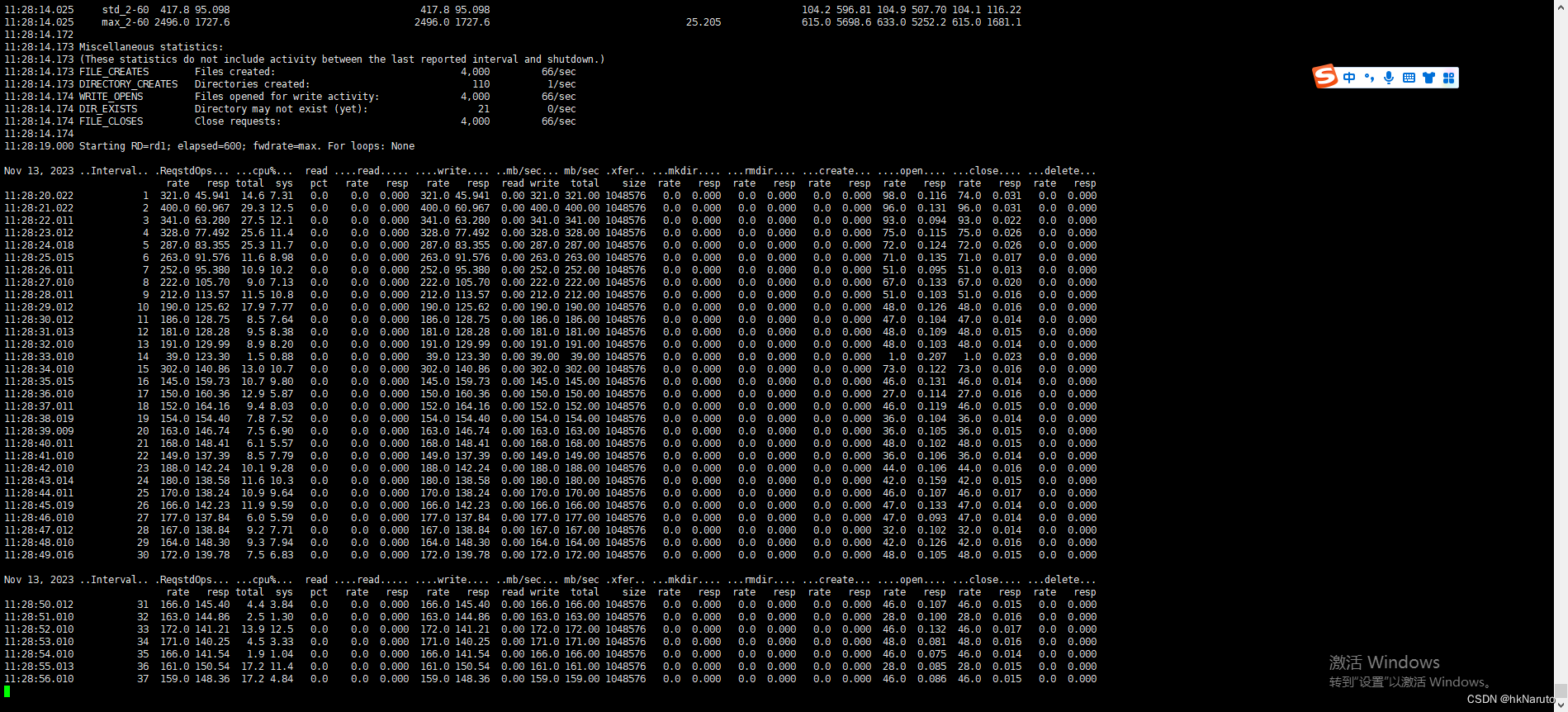

vdbench stress test

fastcfs.conf

hd=default,vdbench=/root/vdbench,user=root,shell=ssh

hd=hd1,system=ceph-0

fsd=fsd1,anchor=/opt/fastcfs/fuse,depth=2,width=10,files=40,size=4M,shared=yes

fwd=format,threads=24,xfersize=1m

fwd=default,xfersize=1m,fileio=sequential,fileselect=sequential,operation=write,threads=24

fwd=fwd1,fsd=fsd1,host=hd1

rd=rd1,fwd=fwd*,fwdrate=max,format=restart,elapsed=600,interval=1
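To judge whether the data set fits on the 45G fuse mount, the file count implied by the fsd line can be worked out. As I understand the vdbench documentation, files are created only in the lowest-level directories, so the total is width^depth × files; treat that formula as an assumption. The function name `vdbench_total_files` is hypothetical.

```shell
# Hypothetical helper: number of files vdbench creates for an fsd,
# assuming files live only in the lowest-level directories.
# $1: width, $2: depth, $3: files per lowest-level directory
vdbench_total_files() {
    dirs=1
    level=0
    while [ "$level" -lt "$2" ]; do
        dirs=$((dirs * $1))
        level=$((level + 1))
    done
    echo $((dirs * $3))
}

# Example (not executed here): total size in MB for size=4M files.
# echo $(( $(vdbench_total_files 10 2 40) * 4 ))
```

With depth=2, width=10, files=40, and size=4M, this gives 4000 files and 16000 MB in total under that assumption.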

Run the stress test

cd /root/vdbench

./vdbench -f fastcfs.conf

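It stalled for a while partway through?

Compared with the Ceph cluster I built earlier, the peak throughput is higher, but there are pauses!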

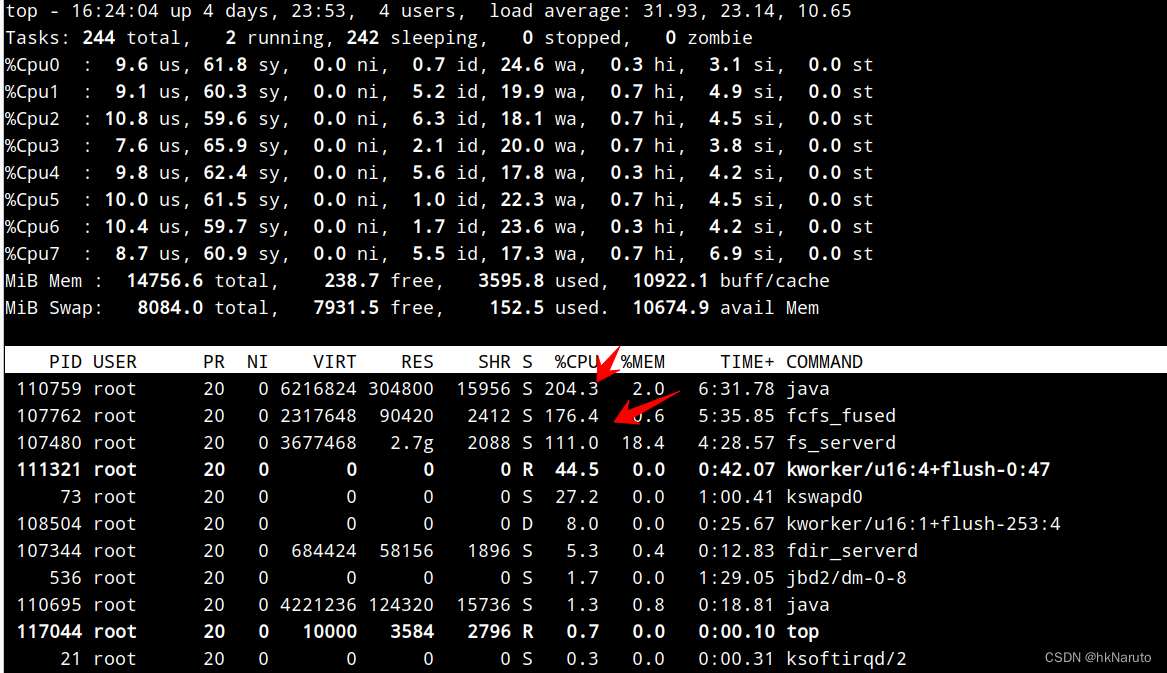

The client consumed most of the CPU (the test was not run on ceph-client, so the test setup was not rigorous)

That wraps up this round.

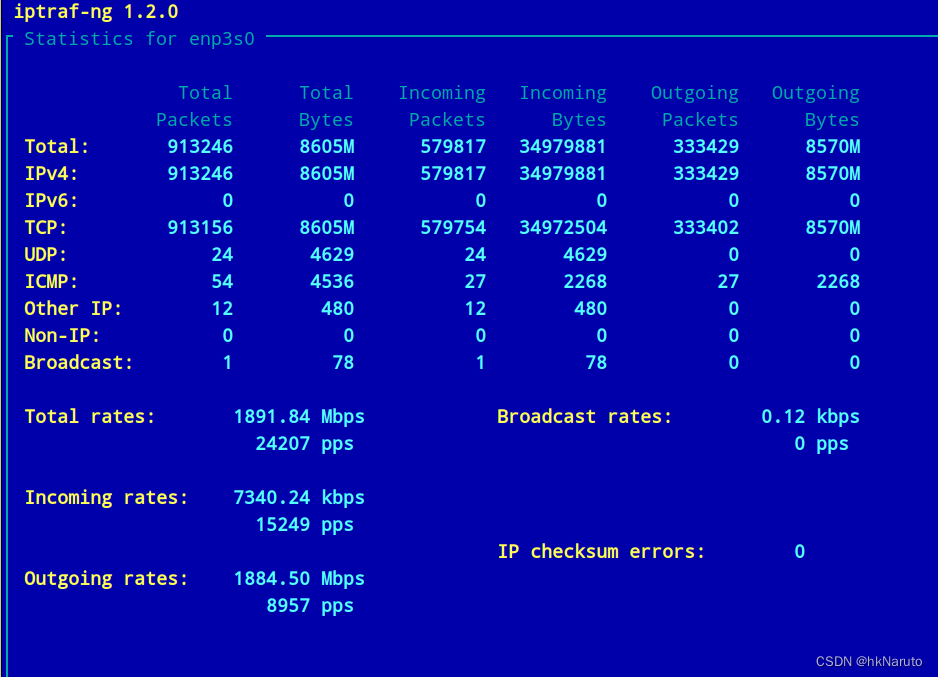

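Test from a separate client cloud host, ceph-client

1884/8 = 235.5. Is the virtual NIC saturated? The throughput is lower than the peak of the local test.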
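The division above reads like a bits-to-bytes conversion. Assuming 1884 is a rate in Mbit/s (the unit is not stated, so this is a guess), the sketch below reproduces the figure in MB/s; the function name `mbit_to_mbyte` is hypothetical.

```shell
# Hypothetical helper: convert a rate in Mbit/s to MB/s (8 bits per byte),
# printed with one decimal place.
mbit_to_mbyte() {
    awk -v mbit="$1" 'BEGIN { printf "%.1f\n", mbit / 8 }'
}

# Example (not executed here):
# mbit_to_mbyte 1884
```

For 1884 this prints 235.5, matching the number quoted above.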

Appendix: offline installation reference

References

docs/fcfs-ops-tool-zh_CN.md · happyfish100/FastCFS - Gitee.com
