hadoop 环境创建

1. 八、window搭建spark + IDEA开发环境

按照步骤安装完

2. windows下安装和配置hadoop

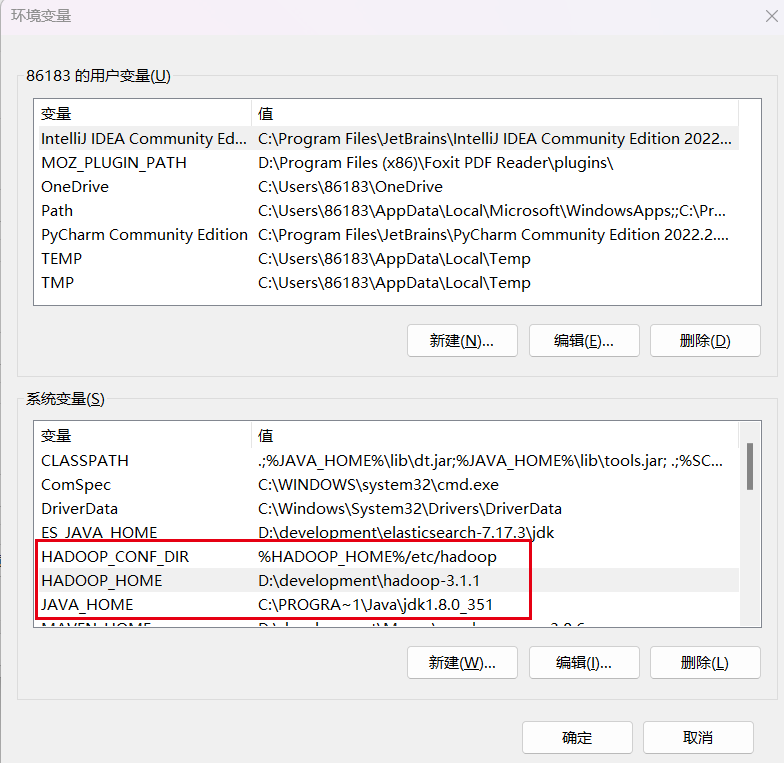

配置环境变量,注意JAVA_HOME路径,修改后,重启电脑,不重启容易报错!!!

新建data文件夹

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--Licensed under the Apache License, Version 2.0 (the "License");you may not use this file except in compliance with the License.You may obtain a copy of the License athttp://www.apache.org/licenses/LICENSE-2.0Unless required by applicable law or agreed to in writing, softwaredistributed under the License is distributed on an "AS IS" BASIS,WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.See the License for the specific language governing permissions andlimitations under the License. See accompanying LICENSE file.

--><!-- Put site-specific property overrides in this file. --><configuration><property><name>hadoop.tmp.dir</name><value>/D:/development/hadoop-3.1.1/data</value></property><property><name>fs.defaultFS</name><value>hdfs://localhost:9000</value></property>

</configuration>hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--Licensed under the Apache License, Version 2.0 (the "License");you may not use this file except in compliance with the License.You may obtain a copy of the License athttp://www.apache.org/licenses/LICENSE-2.0Unless required by applicable law or agreed to in writing, softwaredistributed under the License is distributed on an "AS IS" BASIS,WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.See the License for the specific language governing permissions andlimitations under the License. See accompanying LICENSE file.

--><!-- Put site-specific property overrides in this file. --><configuration><property> <name>dfs.replication</name> <value>1</value> </property> <property> <name>dfs.namenode.name.dir</name> <value>/D:/development/hadoop-3.1.1/data/namenode</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>/D:/development/hadoop-3.1.1/data/datanode</value> </property> </configuration>mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--Licensed under the Apache License, Version 2.0 (the "License");you may not use this file except in compliance with the License.You may obtain a copy of the License athttp://www.apache.org/licenses/LICENSE-2.0Unless required by applicable law or agreed to in writing, softwaredistributed under the License is distributed on an "AS IS" BASIS,WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.See the License for the specific language governing permissions andlimitations under the License. See accompanying LICENSE file.

--><!-- Put site-specific property overrides in this file. --><configuration><property><name>mapreduce.framework.name</name><value>yarn</value></property>

</configuration>yarn-site.xml

<?xml version="1.0"?>

<!--Licensed under the Apache License, Version 2.0 (the "License");you may not use this file except in compliance with the License.You may obtain a copy of the License athttp://www.apache.org/licenses/LICENSE-2.0Unless required by applicable law or agreed to in writing, softwaredistributed under the License is distributed on an "AS IS" BASIS,WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.See the License for the specific language governing permissions andlimitations under the License. See accompanying LICENSE file.

-->

<configuration><!-- Site specific YARN configuration properties --><property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property><property><name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name><value>org.apache.hahoop.mapred.ShuffleHandler</value></property>

</configuration>3**.节点格式化**

正常情况下,格式话结束会显示’namenode has been successfully formated’,如果格式话失败,原因可能是环境变量配置问题、hadoop版本和winutils版本不一致、etc中配置错误;

hdfs namenode -format

4. 启动hadoop

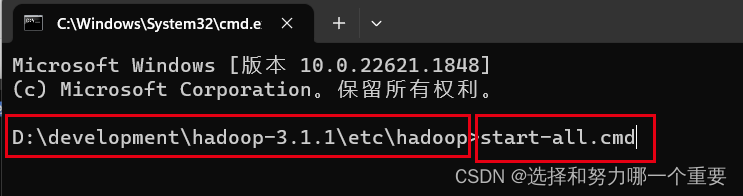

以管理员身份打开cmd,使用win+r快捷键打开运行,输入cmd,然后ctrl+shift+enter,选择‘是’,打开cmd;

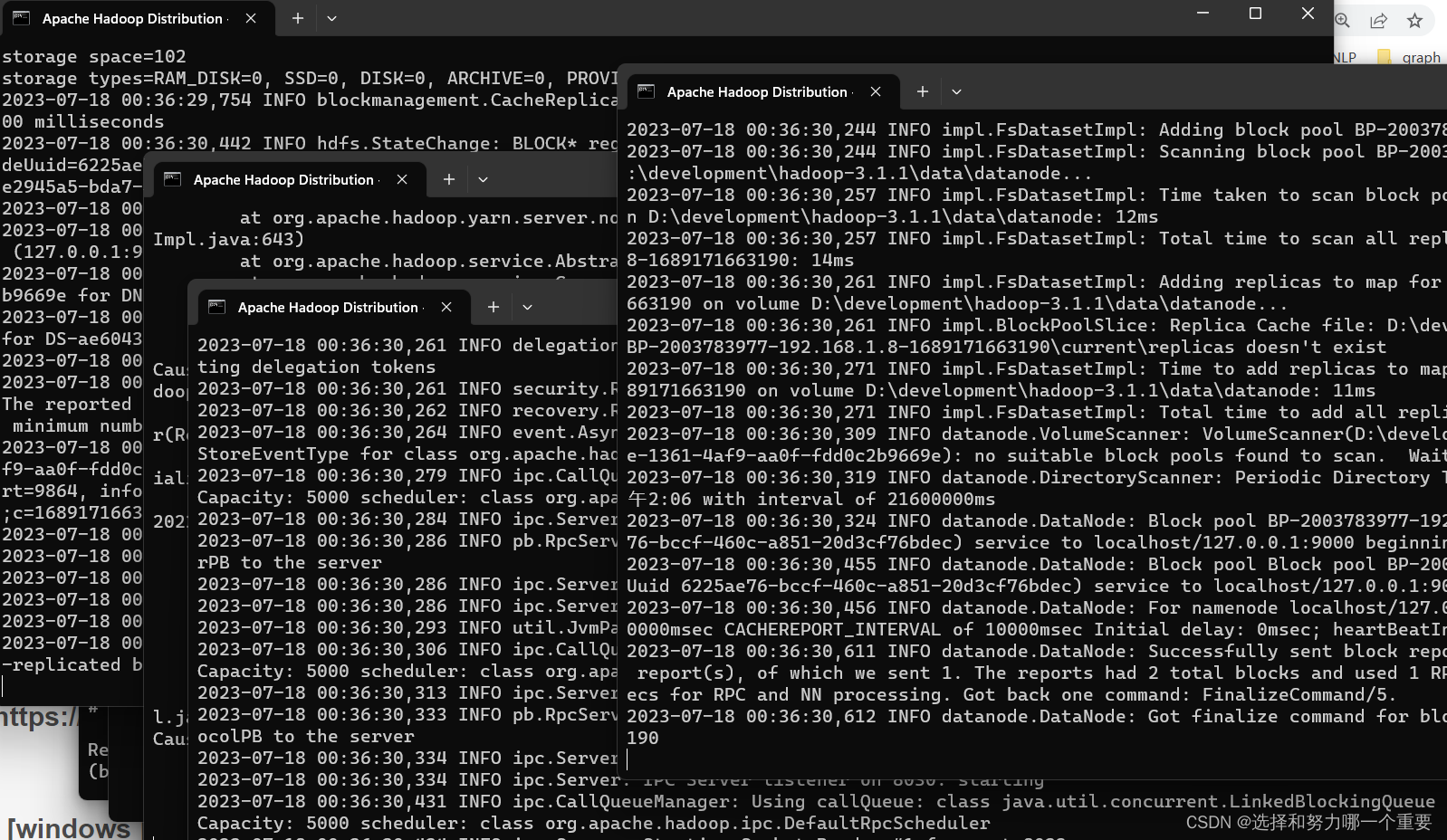

执行start-all.cmd,会新打开4个cmd,分别是namenode、resourcemanager、nodemanager、datanode的4个进程,如果这4个cmd启动没有报错,则启动成功;

出现四个窗口成功

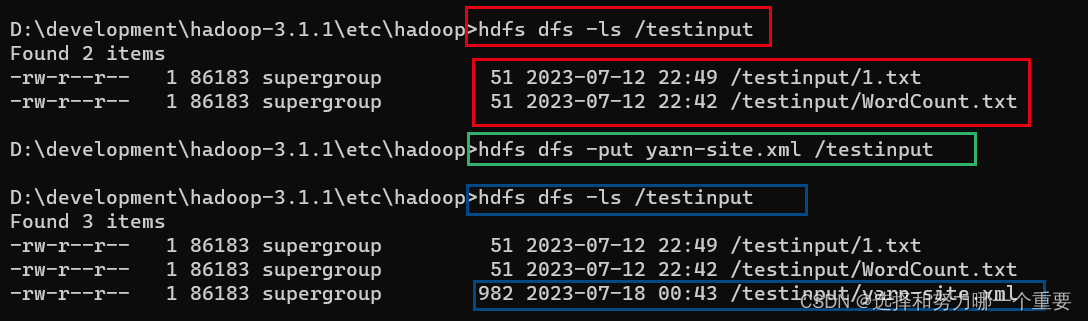

5. 使用hadoop

参考:

八、window搭建spark + IDEA开发环境

windows下安装和配置hadoop

Windows下配置Hadoop环境(全过程)

hadoop启动报错JAVA_HOME is incorrectly set

Eureka简介与依赖导入)

aidl中java.lang.RuntimeException: Didn‘t create service “XXX“)

)

)