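For multi-batch inference with TensorRT, the exported ONNX model must have a dynamic batch dimension. This only requires setting the `dynamic_axes` parameter at export time: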

torch.onnx.export(hybrik_model, dummy_input, "./best_model.onnx", verbose=True, input_names=input_names, output_names=output_names, opset_version=9, dynamic_axes={'input': {0: 'batch'}, 'output': {0: 'batch'}})

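When building and serializing the engine, create an optimization profile that specifies the minimum, optimal and maximum input dimensions by adding the following code: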

IOptimizationProfile* profile = builder->createOptimizationProfile();

profile->setDimensions("input", OptProfileSelector::kMIN, Dims4(1, 3, 256, 256));

profile->setDimensions("input", OptProfileSelector::kOPT, Dims4(5, 3, 256, 256));

profile->setDimensions("input", OptProfileSelector::kMAX, Dims4(5, 3, 256, 256));

config->addOptimizationProfile(profile);
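TensorRT expects the three shapes of a profile to satisfy kMIN <= kOPT <= kMAX in every dimension, and it only accepts runtime input shapes that fall inside [kMIN, kMAX]. The sketch below mirrors those two checks in plain C++ so they can be tried without TensorRT. `Shape4`, `profile_is_consistent` and `shape_in_profile` are hypothetical names standing in for `Dims4` and TensorRT's internal validation. They are not part of its API.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for nvinfer1::Dims4 so the sketch compiles without TensorRT.
using Shape4 = std::array<int32_t, 4>;

// Checks the ordering TensorRT requires for a profile: min <= opt <= max per dimension.
bool profile_is_consistent(const Shape4& min, const Shape4& opt, const Shape4& max)
{
    for (std::size_t i = 0; i < min.size(); ++i) {
        if (!(min[i] <= opt[i] && opt[i] <= max[i])) {
            return false;
        }
    }
    return true;
}

// A runtime input shape is only valid if every dimension lies inside [min, max].
bool shape_in_profile(const Shape4& shape, const Shape4& min, const Shape4& max)
{
    for (std::size_t i = 0; i < shape.size(); ++i) {
        if (shape[i] < min[i] || shape[i] > max[i]) {
            return false;
        }
    }
    return true;
}
```

With the profile above (kMIN batch 1, kMAX batch 5), a runtime batch of 3 passes, while a batch of 6 would be rejected.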

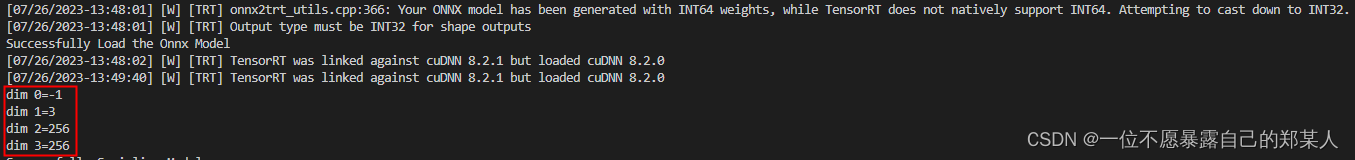

Once the TRT engine has been built, print the binding dimensions to check that the batch dimension is -1 (dynamic):

nvinfer1::Dims dim = engine->getBindingDimensions(0);

print_dims(dim);

void print_dims(const nvinfer1::Dims& dim)
{
    for (int nIdxShape = 0; nIdxShape < dim.nbDims; ++nIdxShape)
    {
        printf("dim %d=%d\n", nIdxShape, dim.d[nIdxShape]);
    }
}
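Because a dynamic axis shows up as -1 in the binding dimensions, the check can be automated instead of read off the printout. The following is a minimal sketch. It assumes a hypothetical `MockDims` struct that copies the `nbDims`/`d[]` layout of `nvinfer1::Dims` (up to 8 dimensions), so it compiles without TensorRT. `dynamic_axes` is likewise a hypothetical helper name.

```cpp
#include <vector>

// Hypothetical stand-in for nvinfer1::Dims: a dimension count plus a fixed array.
struct MockDims {
    int nbDims;
    int d[8];
};

// Returns the indices of all dimensions reported as dynamic (-1).
std::vector<int> dynamic_axes(const MockDims& dim)
{
    std::vector<int> axes;
    for (int i = 0; i < dim.nbDims && i < 8; ++i) {
        if (dim.d[i] == -1) {
            axes.push_back(i);
        }
    }
    return axes;
}
```

For an engine exported with a dynamic batch, the input binding should look like `{-1, 3, 256, 256}`, and `dynamic_axes` returns only axis 0.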

At deserialization and inference time, the runtime input dimensions must also be specified.


Add this line before calling enqueueV2:

context->setBindingDimensions(0, Dims4(5, 3, 256, 256));

context->enqueueV2(gpu_buffers, stream, nullptr);

CUDA_CHECK(cudaMemcpyAsync(output_uvd, gpu_buffers[output_uvd_index], 5 * 29 * 3 * sizeof(float), cudaMemcpyDeviceToHost, stream));

CUDA_CHECK(cudaMemcpyAsync(output_phi, gpu_buffers[output_phi_index], 5 * 23 * 2 * sizeof(float), cudaMemcpyDeviceToHost, stream));

CUDA_CHECK(cudaMemcpyAsync(output_shape, gpu_buffers[output_shape_index], 5 * 10 * sizeof(float), cudaMemcpyDeviceToHost, stream));

CUDA_CHECK(cudaMemcpyAsync(output_cam_depth, gpu_buffers[output_cam_depth_index], 5 * 1 * 1 * sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);
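The code above hard-codes a batch of 5 in both `setBindingDimensions` and the copy sizes. When the number of images is not a multiple of 5, one option is to process them in chunks of at most kMAX and to size each copy from the actual chunk size n. This is a sketch under that assumption. `split_into_batches`, `OutputBytes`, `output_bytes` and the per-sample constants are hypothetical names, and the element counts are read off the `cudaMemcpyAsync` calls above.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Per-sample element counts of the four outputs (29*3, 23*2, 10 and 1*1 floats),
// taken from the hard-coded copy sizes above.
constexpr std::size_t kUvdPerSample = 29 * 3;
constexpr std::size_t kPhiPerSample = 23 * 2;
constexpr std::size_t kShapePerSample = 10;
constexpr std::size_t kCamDepthPerSample = 1 * 1;

// Hypothetical helper: split `total` images into consecutive batch sizes of at most
// `max_batch` (the kMAX batch of the profile, 5 here). Each size n is what would go
// into setBindingDimensions(0, Dims4(n, 3, 256, 256)).
std::vector<int> split_into_batches(int total, int max_batch)
{
    std::vector<int> sizes;
    if (max_batch <= 0) {
        return sizes;  // no valid batch size to use
    }
    for (int remaining = total; remaining > 0; remaining -= max_batch) {
        sizes.push_back(std::min(remaining, max_batch));
    }
    return sizes;
}

// Byte counts for the four cudaMemcpyAsync calls when the current batch holds n images.
struct OutputBytes {
    std::size_t uvd, phi, shape, cam_depth;
};

OutputBytes output_bytes(std::size_t n)
{
    const std::size_t f = sizeof(float);
    return OutputBytes{n * kUvdPerSample * f, n * kPhiPerSample * f,
                       n * kShapePerSample * f, n * kCamDepthPerSample * f};
}
```

For 12 images this yields batches of 5, 5 and 2. For the last batch you would call `setBindingDimensions(0, Dims4(2, 3, 256, 256))` and copy `output_bytes(2)` bytes per output, so no stale data from unused batch slots is copied back.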

For details, see: https://blog.csdn.net/dou3516/article/details/125976923
